Chapter 19 Ollama: R and AI

This section discusses how to run large language models (LLM-s) using the ollama environment. Ollama (ollama.com) lets you run models locally on your own computer, provided the computer is beefy enough. It also offers to run larger versions of the models in the cloud, so you can develop and test your tasks locally, and then switch over to the cloud.

19.1 Installing Ollama

You can install ollama directly from its main page, or alternatively from its GitHub assets. The latter lets you choose the exact version you want to install, not just the default version for your system. Note that the downloadable files are large (except for mac), on the order of 1GB.

The default linux installation suggestion

curl -fsSL https://ollama.com/install.sh | sh

will change your computer configuration by adding users and startup services. I recommend just unpacking ollama-linux-amd64.tgz instead to avoid such problems.

Mac and Windows default installations are system-friendly, as far as I know.

On mac and windows, ollama by default opens a graphical interface where you can chat with selected models (on linux you have to rely on the command line). In many ways this resembles how you access cloud-based AI through a browser.

However, ollama will by default run the models on your computer. This means the models also need to be downloaded, and the models can be much larger than ollama itself (after all, they are called large language models). You can see all available models on ollama’s models page https://ollama.com/search. These models do all the hard work; ollama itself is just an interface that makes them easy to use.

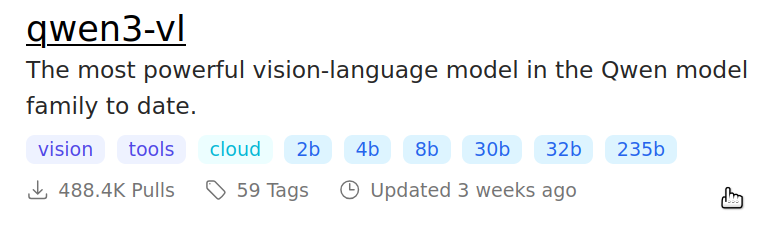

Example versions of qwen3-vl models on ollama’s models page. There are six versions of qwen3-vl, with 2, 4, 8, 30, 32 and 235 billion parameters. One parameter takes roughly one byte, so, for instance, qwen3-vl:2b will download approximately 2GB of data and take about 2GB of RAM, although the latter may depend on the task size. On a computer with 16GB of RAM, you should be able to run qwen3-vl:8b, but not the 30b version. So pick your models accordingly!
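The rule of thumb from the caption can be turned into a quick back-of-the-envelope check. The sketch below assumes, as the caption does, roughly one byte per parameter; the model tags other than 2b and 8b are guessed from the parameter counts listed above, and the 16GB figure is just the example from the text.

```r
## Rough memory estimate: about one byte per parameter.
## This is a rule of thumb only; actual usage depends on the task size.
params_billion <- c("qwen3-vl:2b" = 2, "qwen3-vl:4b" = 4, "qwen3-vl:8b" = 8,
                    "qwen3-vl:30b" = 30, "qwen3-vl:32b" = 32,
                    "qwen3-vl:235b" = 235)
ram_gb <- 16                      # memory of your computer, in GB
approx_size_gb <- params_billion  # 1e9 parameters * 1 byte is about 1 GB
fits <- approx_size_gb < ram_gb   # TRUE if the model plausibly fits in memory
print(data.frame(approx_size_gb, fits))
```

Treat the result as a first filter only: a model that barely fits may still be too slow or run out of memory on larger tasks.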

19.2 Using Ollama

You can use ollama in much the same way as any web-based AI tool: enter a question at the prompt and the model replies to it. You can solve many tasks in this way; I, for instance, use it to translate sentences. But here we use R to work with the models. This opens up many other possibilities; in particular, you can use LLM-s to analyze data, including images. While it is easy to prompt a single image manually, you cannot easily copy-paste thousands of lines or thousands of documents.

Web API

Start server

How much resources it requires

Each time a different answer

19.3 ollamar-package

This book uses the ollamar package.

19.3.1 A quick look at the usage

Here is a very simple usage example:

library(ollamar)

model <- "llama3.2:1b" # assume you have downloaded the model

response <- generate(model,

"When was Mahatma Gandhi born?",

output = "text")

cat(response, "\n")

## Mahatma Gandhi was born on October 2, 1869.

The model is correct.

Now let’s explain it in more detail:

- Before you even begin, you need to start the ollama server. This should be done outside R, by starting the ollama app. You may also want to download the necessary models directly from the app.

- First, load the package (see Section 5.6 about how to install R packages).

- Select the model. The example here uses a very small, 1 billion parameter version of llama 3.2. This model is reasonably fast even on weak computers, but it is also quite limited in terms of what it can do.

- The central function here is generate(). It takes several arguments, most importantly

  - model: the model to use; it must already be downloaded;

  - prompt: the textual prompt that tells the model what to do;

  - output: the output format. Here we use text, the simplest of these, but you may pick something more complex to receive more information about how the query went.

- Finally, we use cat() to print the response, as this function prints the text better.

But Llama 3.2:1b is quite limited:

response <- generate(model,

"How is 'have a nice day' in Chinese?",

output = "text")

cat(response, "\n")

## In Chinese, "have a nice day" is translated to "" (nǐ hǎo).

Even with limited Chinese knowledge you can probably see that what ought to be the Chinese word in quotes is … well, there is no word. (And if you know Chinese, you also see that the pinyin version, nǐ hǎo, is not quite right either.) But the upside is that the model is fast and requires little memory.

We can try a more powerful model, for instance, qwen3-vl:2b:

model <- "qwen3-vl:2b"

response <- generate(model,

"How is 'have a nice day' in Chinese?",

output = "text")

cat(response, "\n")

## The phrase **"have a nice day"** in Chinese isn't a direct translation (since Chinese doesn't use this exact structure for casual greetings), but the **most natural and common way** to express this wish is:

##

## ### ✅ **祝你今天愉快**

## *(Zhù nǐ jīn tiān kuài lè)*

##

## #### Why this works:

## - **"祝" (zhù)** = "wishing" or "congratulating" (e.g., "wish you a happy day").

## - **"你" (nǐ)** = "you" (polite form).

## - **"今天" (jīn tiān)** = "today".

## - **"愉快" (kuài lè)** = "pleasant" or "happy" (idiomatic for "nice").

## → This phrase captures the **warm, friendly tone** of "have a nice day" while sounding natural in Chinese.

## ...

As you can see, even the small version of qwen3-vl is much more powerful, at least for translating English to Chinese.

These examples demonstrated the basic tools for using ollama in R. However, such single prompts gain nothing from being issued through code rather than typed into a chat window. Next, we’ll do some data analysis.

19.4 Analyzing data

The previous examples involved a single task–just translating an expression. Now let’s do something that cannot easily be done manually.

We use Amazon reviews data (see Section I.2). Let’s ask a small model, llama3.2:1b, to read each review and evaluate how satisfied the user is with the product:

library(tidyverse) # for read_delim(), select() and %>%

reviews <- read_delim("data/amazon-reviews.csv.bz2") %>%

select(rating, review) # only need these two variables

19.4.1 The simplest prompting

Perhaps the most important task when working with LLM-s is to devise a good prompt. Typically, you have to explain to the model in some detail what you want and how you want the response to be written. To give you an idea, here is an example review (review 9):

## Beautiful alone (not a good topper because it covers so well, in one coat,

## which is a good thing too)

What rating do you, as a human, think the reviewer gave? You probably cannot answer unless you know a bit more about how people tend to write reviews and give numeric grades. You also need to know that Amazon ratings are between 1 and 5, with ‘1’ being the worst and ‘5’ being the best score. For reference, the reviewer’s rating is 5.

Let’s attempt this with a simple prompt. The final prompt should contain both the instructions and the actual review as a single string. It is easy to combine a prompt template with the actual review using the sprintf() function (see Section 7.4.2). A naive template contains the instructions and a %s placeholder. Later, when feeding the actual data into the model, we can use sprintf(template, reviews$review[i]), which replaces %s with the actual review.
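To make the mechanics concrete, here is a minimal sketch of such a template. The wording of this template is an assumption made for illustration (it is not necessarily the prompt that produced the results shown in this section); only the use of sprintf() with a %s placeholder follows the text.

```r
## A hypothetical naive template: the instructions plus a %s placeholder
## (the exact wording is an assumption, chosen for illustration)
template <- "
Below is a product review. What was the user's rating on 1-5 scale?
---
%s
---
"
## Example review (review 9 from the text)
review <- paste("Beautiful alone (not a good topper because it covers",
                "so well, in one coat, which is a good thing too)")
## sprintf() replaces %s with the review text
prompt <- sprintf(template, review)
cat(prompt)
```

Because the review is passed as an argument rather than pasted into the format string, any percent signs inside a review are left untouched.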

A straightforward way to do the data analysis is to loop over the whole dataset, line by line, and feed the reviews into the prompt one after the other. Let’s do this here:

model <- "llama3.2:1b"

for(i in 1:nrow(reviews)) {

review <- reviews$review[i]

## First, let's print the review and the actual rating,

## for the reference

cat("\n", "---", i, "---\n") # print the number,

# separate different reviews with dashes

## Print the actual rating and wrap the lines for the review

review %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat(reviews$rating[i], ., "\n")

## Make prompt out of template and the review

prompt <- sprintf(template, review)

## Generate response

response <- generate(model, prompt,

output = "text")

## Wrap the lines and print:

response %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n-> ", ., "\n")

}

- The loop runs over all lines of the dataset and creates a new variable, review, for easier reference.

- In order to make the output easier to read and analyze, it also prints the review number i between --- markers.

- Both the review and response lines are wrapped. The response is put on a new line and marked with ->.
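The wrapping step is used twice in the loop, so it can be convenient to put it into a small function. The helper name wrap_text() and the example text are made up for this sketch; strwrap() and paste() are the same base R functions the loop uses.

```r
## Hypothetical helper: wrap a long string to the given width and
## return it as a single string with embedded line breaks
wrap_text <- function(x, width = 70) {
  paste(strwrap(x, width = width), collapse = "\n")
}
## A long one-line text for demonstration
long <- paste(rep("The shower gel leaves your skin soft.", 5), collapse = " ")
wrapped <- wrap_text(long, width = 40)
cat(wrapped, "\n")
```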

And below are some excerpts from the results:

## --- 1 ---

## 2 Polish chips very easily Love the color.

##

## -> The user's rating for this review would be 4 out of 5.

##

## Since "very easily" implies high ease, and the user loves the color ("Love

## the color"), I'd expect a perfect score (5). However, in consumer reviews,

## sometimes the text may not perfectly match the numerical scale used by the

## website or platform.

## ...

## --- 3 ---

## 5 Foams and feels very clean.

##

## -> The user's rating for this statement would be 5 out of 5, as it indicates

## that they found the product to be extremely clean and enjoyable to use.

## ...

## --- 5 ---

## 2 The shower gel wash leaves your skin soft and the smell is very pleasant.

## My husband really liked it but it is way too expensive to use on a regular

## basis.

##

## The container is the worst one I have ever used. The container is square

## and very awkward to use. The pump is impossible to use as it stays down

## when you pump. I you need to pump more then you have to lift it to depress

## it again.

##

## I cannot recommend this product (even if the soap smells good) as it is

## very hard to use and way to expensive.

##

## -> The user's rating on a 1-5 scale would likely be 2, indicating that they

## did not like the product overall. They mentioned several negative aspects

## of the product: its high price, unpleasant smell, and awkward container

## design.

## ...

## --- 8 ---

## 1 In person it's different then pic

##

## -> Based on the given information, I'm assuming you want to know the rating of

## this statement. However, since there's no context about an actual product

## or service being reviewed, I'll interpret the question as asking for a

## subjective evaluation based on the phrase "different than in person."

##

## Assuming this is a one-time comment or anecdote, a rating might vary widely

## depending on individual perspectives and cultural background.

##

## A possible interpretation could be:

##

## * If it's about a difference between two different states of being (e.g.,

## physical vs. mental), then a rating of 4 out of 5 might seem reasonable as

## both could be considered "different" in some way. * If the comment is about

## something that doesn't have an in-person equivalent (e.g., how someone

## behaves or interacts with others when in person versus in video calls), a

## rating of 3 or 1 out of 5 might also apply, depending on how one perceives

## these differences.

##

## Without more context, it's challenging to assign a precise numerical value

## to the statement "In person it's different then pic."

## ...

The model gets the answer right fairly often (cases 3 and 5), even though we do not give it any additional guidance about how to assess the rating.

But this is not always the case (case 8).

Sometimes the model misunderstands the review. For instance, in case 5, it mentions “unpleasant smell”, while the review actually says that “the smell is very pleasant”.

This particular problem can probably be avoided by using a larger model, e.g. llama3.2:3b.

The results are returned in a way that is hard to process automatically. How will you extract the exact number from

- … rating for this review would be 4 …

- … rating for this statement would be 5 …

- … rating on a 1-5 scale would likely be 2 …

- … rating might vary widely depending on …

So in the last case it does not even offer a consistent rating.

There may be many more problems, e.g. the rating may fall outside the range from 1 to 5.

Some of the challenges here are genuinely hard. For instance, how on earth can you know that the review “Polish chips very easily Love the color.” has rating “2”? Nothing in the text suggests that the user is not really satisfied with the product.

Other problems, however, are something we might try to address with prompting. But before we get into more elaborate prompts, let’s try to process the results automatically.

19.4.2 Automatically reading the answers

Printing the results as above is fine if your task is just to read the model’s opinion. But what if we actually want to store the evaluations and use them later?

The first problem is that we want the model to reply in a consistent way, so that we could easily extract its rating. We can give guidance in the prompt as:

template <- "

Below is a product review. What was the user's rating on 1-5 scale?

---

%s

---

Give your answer exactly as

Rating: <rating>

where <rating> is your rating on 1-5 scale, for instance

Rating: 3

for a neutral review.

"

This will help the model to provide the rating in a simple, computer-accessible way.

The other steps we need to do are to extract the rating and to store it in numeric form in a vector. For the latter, we can make a new column in the data frame:

model <- "llama3.2:1b"

reviews$predicted <- NA

for(i in 1:nrow(reviews)) {

review <- reviews$review[i]

## First, let's print the review and the actual rating,

## for the reference

cat("\n", "---", i, "---\n") # print the number,

# separate different reviews with dashes

## Print the actual rating and wrap the lines for the review

review %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat(reviews$rating[i], ., "\n")

## Make prompt out of template and the review

prompt <- sprintf(template, review)

## Generate response

response <- generate(model, prompt,

output = "text")

## Wrap the lines and print:

response %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n-> ", ., "\n")

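## Extract the answer and convert to a number.

## If the response contains no "Rating: <digit>", sub() leaves it

## unchanged and as.numeric() gives NA (with a warning)

r <- response %>%

sub(".*Rating: *([[:digit:]]).*", r"(\1)", .) %>%

as.numeric()

## Replace the predicted NA, but only if it was able to extract

## a single number

if(length(r) == 1 && !is.na(r))

reviews$predicted[i] <- r

}

##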

## --- 1 ---

## 2 Polish chips very easily Love the color.

##

## -> Rating: 4

##

## --- 2 ---

## 3 This is a light weight conditioner as described. It is not deep

## conditioning if you have dry or chemically processed hair.

##

## -> Rating: 2

##

## --- 3 ---

## 5 Foams and feels very clean.

##

## -> Rating: 4

##

## --- 4 ---

## 5 Gorgeous night cream, my skin loves it, it doesn't clog up my pores and my

## skin is clear and soft and glowing. Excellent product.

##

## -> Rating: 4

##

## --- 5 ---

## 2 The shower gel wash leaves your skin soft and the smell is very pleasant.

## My husband really liked it but it is way too expensive to use on a regular

## basis.

##

## The container is the worst one I have ever used. The container is square

## and very awkward to use. The pump is impossible to use as it stays down

## when you pump. I you need to pump more then you have to lift it to depress

## it again.

##

## I cannot recommend this product (even if the soap smells good) as it is

## very hard to use and way to expensive.

##

## -> Rating: 2

##

## --- 6 ---

## 3 This protein booster skin serum feels very nice when applied. It has a

## silky smooth texture and feels good on your face and neck. It's vegan

## friendly and contains no waxes or harmful chemicals. This is a good face

## serum, but it does take a while to see results and the results are a

## reduction of fine wrinkles and an increase in general hydration. It is a

## great boost for dry winter skin and a little bit goes a long way. This

## bottle should last a couple of months.

##

## I believe this product probably performs at its finest when used with the

## other Jack Black high quality products. It's pretty expensive but it is a

## serum that uses good, quality ingredients. Being a woman who loves beauty

## products, I find that I can find a less expensive serum that works probably

## just as well as Jacks. If I had money to invest however, I'd buy his

## regimen and would use it in good faith as products that are great quality

## perform with positive results.

##

## -> Rating: 4

##

## --- 7 ---

## 5 This is the best eye cream. It may seem a little pricey for such a tiny

## tube, but it lasts FOREVER. I'm notorious for using more product than is

## strictly necessary, and even I only use a dollop slightly smaller than a

## pencil lead for each eye. It keeps the area around my eyes moisturized and

## smooth, and makeup goes over the cream and stays put quite nicely.

## Finally, I have very sensitive skin and eyes, and this is one of the only

## creams I've ever used that doesn't irritate either!

##

## -> Rating: 5

##

## --- 8 ---

## 1 In person it's different then pic

##

## -> Rating: 2

##

## --- 9 ---

## 5 Beautiful alone (not a good topper because it covers so well, in one coat,

## which is a good thing too)

##

## -> Rating: 4

##

## --- 10 ---

## 5 good purchase

##

## -> Good purchase

##

## --- 11 ---

## 3 I love all Oribe products and have numerous ones I use. In fact, I have

## just about stopped using all other brands of hair products and switched to

## Oribe. As for the shampoo, it smells wonderful (the same smell as all

## other Oribe products) and leaves my hair very shiny, but does not later

## well. I know shampoo does not have to lather, but I am one of those people

## who feels they don't get their head clean without it. I find I am using

## more product than I should to get that lather which makes this very costly.

## The results are wonderful, I just don't know if I can justify the cost. If

## the bottle were larger for the price or the price was about $10 less I

## would continue to purchase.

##

## -> Rating: 4

##

## --- 12 ---

## 1 Awful product. I've used their fibers with great success, but this stuff is

## useless. All it does is make your hair look dull and straw like. I

## contacted the company and asked for a refund, but did not get the courtesy

## of a response. Sorry I bought this product. Does not do what it purports to

## do.

##

## -> Rating: 1

##

## --- 13 ---

## 5 Wanted a natural nail polish. And this is the closest anyone makes. I love

## how it makes my natural nails look beautiful. Don't have to worry when it

## starts chipping because you barely notice. Made my nails a lot less yellow.

## Love this product!

##

## -> Rating: 4

##

## --- 14 ---

## 5 Wonderful cream. It moistures my skin but does not make it greasy. I suffer

## from occasional rosacea and this cream seems to have reduced my breakouts.

## The overall texture of my skin has improved. I looked at my photographs

## from several years ago and noticed that the sun spots I had are gone today.

## This cream works as well as IPL treatment I used to have. It obviously

## takes longer to see the results but there is no pain and the cost of the

## cream compared to that of IPL make it a great investment. I use Nia24 Sun

## Damage Repair on the rest of my body with equally impressive results. I see

## no point in taking care of my face and neck while neglecting the rest of my

## skin.

##

## -> Rating: 4

##

## --- 15 ---

## 4 Good coverage-blends well

##

## -> Rating: 4

##

## --- 16 ---

## 5 This makes such a great gift, for both women and men. It's packaged so

## elegantly. As soon as you touch the box, you could see the value and

## quality. Inside the luxurious leather case. The tools are laid out neatly

## and separately in their own slot. They are also so well made and well

## designed - easy to handle and grip on, nice and sturdy. The nail clipper,

## especially, is a piece of art. It's so compact yet sharp on the nails.

## Every tool in there is cleverly engineered. I absolutely love it.

##

## -> Rating: 4

##

## --- 17 ---

## 5 I've been using the regular St. Tropez tanner for years now and have found

## it to be the most natural looking self tanner on the market. What I didn't

## love was having to leave it on overnight in order for it transfer onto my

## skin to get the level of coverage and tan that I prefer. But that's how

## most self tanners work, so I figured it was worth the hassle.

##

## This new express tanner allows you to apply the product for a few hours (3

## at the most if you want a darker tan) and then you shower and go about your

## business. It will continue working over the course of the next few hours,

## making your tan deepen as time goes by. Just make sure to exfoliate before

## using and to apply right after showering on clean skin. Also, I would

## recommend buying the application mitt. It makes it go on much easier.

##

## -> Rating: 4

##

## --- 18 ---

## 4 Its better than anything I bought in the past because it does not create a

## mess or makes my hair sticky. I am giving it a 4 because it does not stay

## on for a long. I have to reapply.

##

## -> Rating: 4

##

## --- 19 ---

## 5 I have tried various ZEN-TAN lotions now and they all work excellently.

## This one has a vanilla scent, masking any chemical smell there may be. The

## pump lotion comes out a medium brown and absorbs quickly into the skin.

## The result is a very natural and even tan. This works well around the face

## when properly applied.

##

## -> Rating: 4

##

## --- 20 ---

## 1 Product received promptly in a well cushioned envelope. However quality of

## nail polish leads me to believe it is either extremely old or had been

## sitting for a long period of time in extreme temperatures. Nail polish is

## extremely watery, not at all glossy even after 3 coats. It is not the

## quality I am used to when compared to other Essie nail polishes

##

## -> Rating: 2

- The code starts by creating a new column predicted and initializing it with NA. This column holds the model predictions, and we keep NA-s there to denote cases that are either not yet processed or where the model failed to produce a rating.

- The most important part is how to extract the numeric rating from the response. Here I use regular expressions, in particular ".*Rating: *([[:digit:]]).*". This matches a single digit after “Rating:”, and sub() replaces the whole response with that digit, i.e. it deletes everything that is not the digit.

- Another important step is replacing the predicted value with the extracted rating. However, this should only be done if the code was able to extract a single number!

Now the answers look like:

## --- 1 ---

## 2 Polish chips very easily Love the color.

##

## -> Rating: 4

##

## --- 2 ---

## 3 This is a light weight conditioner as described. It is not deep

## conditioning if you have dry or chemically processed hair.

##

## -> Rating: 2

##

## --- 3 ---

## 5 Foams and feels very clean.

##

## -> Rating: 4

##

## --- 4 ---

## 5 Gorgeous night cream, my skin loves it, it doesn't clog up my pores and my

## skin is clear and soft and glowing. Excellent product.

##

## -> Rating: 4

...

## --- 10 ---

## 5 good purchase

##

## -> Good purchase

So you can see that most responses are indeed printed in the standard format. The extracted results will look like

## [1] 4 2 4 4 2 4 5 2 4 NA 4 1 4 4 4 4 4 4 4 2

Almost everything is well, with one exception: review 10. Despite being asked to give the rating in that exact form, the model did not do so. Even more, it did not produce a rating at all.

This problem is more common with smaller models: they have a more limited capacity to understand your prompts and to reply in the desired form. But large models are not immune to these problems either.
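One way to guard against such malformed replies is a small parsing helper that returns NA whenever the expected pattern is missing or the number falls outside the 1-5 scale. The function name parse_rating() and the example responses are made up for this sketch; it uses base R regmatches() and regexec() rather than the sub() call in the loop above.

```r
## Hypothetical helper: extract the digit after "Rating:" from a response.
## Returns NA if the pattern is missing or the digit is outside 1-5.
parse_rating <- function(response) {
  m <- regmatches(response,
                  regexec("Rating: *([[:digit:]])", response))[[1]]
  if (length(m) < 2) return(NA_real_)   # no "Rating: <digit>" found
  r <- as.numeric(m[2])                 # the captured digit
  if (r < 1 || r > 5) return(NA_real_)  # not a valid rating
  r
}
## Example responses (made up, in the style of the output above)
responses <- c("Rating: 4", "Good purchase",
               "I think...\nRating: 2\n", "Rating: 9")
ratings <- sapply(responses, parse_rating, USE.NAMES = FALSE)
print(ratings)  # gives 4, NA, 2, NA
```

Returning NA instead of stopping keeps the loop running, and the NA-s can be counted afterwards to see how often the model ignored the requested format.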

19.4.3 How good is the model?

Finally, it is time to see how good a job the model did. First, let’s take a look at the confusion matrix (see Section 18.5.1):

Accuracy (see Section 18.5.2) is

## [1] 0.3

Exercise 19.1

How good is this accuracy? Imagine two other models:

- The first model just randomly guesses the rating, with each value 1–5 occurring with equal probability.

- The second model always predicts the most popular rating in the dataset.

What will be the (expected) accuracy of the first and the second model?

The computed accuracy, 0.3, is not overly impressive. But is it even a good measure of the model performance? Accuracy is a good choice if the predicted value takes a small number of distinct categories and all errors are equally bad, no matter which wrong category was predicted. This is not the case here. We do have a small number of distinct categories (‘1’ to ‘5’), but the errors are not equal: mistaking ‘5’ for ‘4’ is clearly a smaller error than mistaking ‘5’ for ‘1’.

A possible solution is to compute the mean squared error (MSE) instead: \[ \mathit{MSE} = \frac{1}{N} \sum_{i = 1}^N (\hat y_i - y_i)^2 \] Here \(N\) is the number of observations, \(y_i\) is the actual category, and \(\hat y_i\) is the predicted category of observation \(i\). MSE averages the squared differences between the actual and predicted values, so small errors matter much less than large errors:

## [1] 0.8947368

So despite the accuracy not being good, the model is usually not more than one step off from the correct result.

You should compare both accuracy and MSE when you assess which models perform better.
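The measures discussed in this section can be computed along the following lines. This is a sketch, not necessarily the code behind the numbers above: the two vectors are typed in from the 20 reviews and predictions printed earlier, and dropping the NA prediction (rather than counting it as an error) is an assumption.

```r
## Actual ratings and model predictions for the 20 reviews printed above
rating    <- c(2, 3, 5, 5, 2, 3, 5, 1, 5, 5, 3, 1, 5, 5, 4, 5, 5, 4, 5, 1)
predicted <- c(4, 2, 4, 4, 2, 4, 5, 2, 4, NA, 4, 1, 4, 4, 4, 4, 4, 4, 4, 2)

## Confusion matrix: rows are actual ratings, columns are predictions
conf_mat <- table(actual = rating, predicted = predicted, useNA = "ifany")
print(conf_mat)

## Accuracy: share of exact matches (assumption: NA predictions are dropped)
accuracy <- mean(predicted == rating, na.rm = TRUE)

## MSE: mean squared difference over the same non-missing predictions
mse <- mean((predicted - rating)^2, na.rm = TRUE)
cat("accuracy:", accuracy, " MSE:", mse, "\n")
```

With these vectors the MSE is 17/19, which agrees with the 0.8947368 shown above. The accuracy is 5 correct out of 19 parsed predictions, about 0.26; counting the missing prediction as an error would lower it to 5/20.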

19.5 Analyzing images

#!/usr/bin/env Rscript

library(tidyverse, quietly = TRUE)

library(ollamar)

## For images, 'qwen3-vl:2b' worked very well for me,

## uses 2-5G ram, depending on the image

## timings (qwen3-vl:2b):

## * cai lun: 10m, wedding photo 159s

## * nick: 860min, wedding photo 8347s

## * johan: 300m, wedding photo 3980s

## * is-otoometlc9: (on power) 79m, wedding photo 900s

## * (on battery) 79m, wedding photo 900s (did not work?), eats 40% battery

## * is-otoometd5060: 205m, wedding photo 1880s

##

## Can also use 'llava', takes 5.7G ram

model <- "qwen3-vl:8b" # note: the results shown below were produced with "qwen3-vl:2b"

cat("Using", model, "model\n")

picFolder <- "pictures"

options(timeout = 1e4) # for slow computers

## Can it recognize and translate handwritten Chinese?

cat("\nRecognize/translate the poem\n")

tictoc::tic()

generate(model,

prompt = "

This is a handwritten Chinese poem.

Read and print it, and translate it to English.

",

images = file.path(picFolder, "poem.jpg"),

output = "text") %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n\n")

tictoc::toc()

pics <- list.files(picFolder) |>

setdiff("poem.jpg") # to have better comparability

cat("Pictures:\n")

for(pic in pics) {

cat(pic) # print here for debugging

picPath <- paste(picFolder, pic, sep = "/")

info <- magick::image_read(picPath) |>

magick::image_info()

cat(": ", info$width, "x", info$height, "\n")

tictoc::tic()

resp <- generate(model,

prompt = "What is on the picture?",

images = picPath,

output = "text",

keep_alive = "60m")

tictoc::toc()

resp %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n\n")

}

cat("\nFind the student number\n")

for(pic in pics) {

cat(pic)

picPath <- paste(picFolder, pic, sep = "/")

info <- magick::image_read(picPath) |>

magick::image_info()

cat(": ", info$width, "x", info$height, "\n")

tictoc::tic()

resp <- generate(model,

prompt = "

What is on the picture?

Is this an image of a student ID card?

If yes, find the student number and reply

**student number **<number>**

",

images = picPath,

output = "text")

tictoc::toc()

resp %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n\n")

}

## Can it tell when was the pic taken?

## correct answer: 1940-07-25

cat("\nWhen was the picture taken?\n")

tictoc::tic()

generate(model,

prompt = "This is an old wedding photo.

What do you think, when was this picture taken?",

images = file.path(picFolder, "wedding.jpg"),

output = "text") %>%

strwrap() %>%

paste(collapse = "\n") %>%

cat("\n\n")

tictoc::toc()

Results:

Using qwen3-vl:2b model

Pictures:

husky-card-husky.jpg: 770 x 495

187.191 sec elapsed

On the picture, there is a photo of a dog (specifically, a husky

or similar breed) with black and white fur.

nurember-amicitia-building.jpg: 1200 x 1600

1538.424 sec elapsed

The picture shows a historic building with a pinkish stone

facade. Notable features include: - A prominent **ornate balcony

or projecting architectural structure** (likely a decorative

parapet or balcony) with intricate carvings and a reddish-brown

roof. - Several windows of different styles: some are

rectangular, others are arched (including one with a fan-like

design above the double doors). - Two main entrances: a glass

double door with an arched top (left) and a wooden double door

with a green sign (right). - A **silver car** parked in front

of the building. - The building is set on a paved street, with

a few small details like vertical signs on the wall and a narrow

vertical structure (possibly a pipe or utility line) on the

right.

The overall scene combines architectural details of a historic

structure with modern elements (the car and street).

tent-night.jpg: 500 x 376

119.203 sec elapsed

The picture shows a **tent** illuminated at night. The tent is

positioned on a dark, possibly rocky or uneven ground, and it is

lit from within, making the fabric and structure of the tent

visible against the dark background. The surrounding area

appears to be outdoors, with some dark, indistinct elements

(possibly debris or natural terrain) in the foreground.

wedding.jpg: 1274 x 2013

1494.584 sec elapsed

The picture shows a couple dressed in wedding attire, likely a

formal wedding portrait. The man is wearing a dark suit with a

white bow tie and a white boutonnière, while the woman is in a

white wedding dress with a veil and a floral headpiece. They are

posed closely together, indicating they are a married couple.

western-bunchberry.jpg: 3275 x 2746

1513.372 sec elapsed

The picture shows a close - up view of a plant with bright red

berries and large, green leaves. The berries are clustered

together and appear to be a type of berry, while the leaves are

oval - shaped and have visible veins. The plant is situated in a

natural environment, likely a forest floor or a wooded area,

with hints of soil and other vegetation in the background.

winter-park.jpg: 800 x 1067

548.079 sec elapsed

The picture depicts a serene winter scene in a park. Key

elements include:

- **Snow-covered trees**: Tall trees with branches heavy with

snow, creating a tranquil, almost monochromatic backdrop. -

**Snow-laden benches and infrastructure**: A bench and street

lamps are blanketed in snow, emphasizing the winter setting. -

**Snow-covered pathway**: A wide, smooth path stretches through

the park, also covered in snow. - **A lone figure**: A person

is visible in the distance, walking along the path, adding a

sense of scale and human presence. - **Atmosphere**: The

overcast sky and heavy snow create a calm, quiet, and somewhat

cold mood typical of a winter day in a park.

The entire scene conveys a peaceful, wintry environment with

nature’s transformation into a quiet, snow-covered landscape.

Find the student number

husky-card-husky.jpg: 770 x 495

207.106 sec elapsed

Is this an image of a student ID card? Yes **student number

**<number> 123456789

nurember-amicitia-building.jpg: 1200 x 1600

1361.794 sec elapsed

Is this an image of a student ID card? No

**student number **<number>

tent-night.jpg: 500 x 376

107.097 sec elapsed

Is this an image of a student ID card? No, this is an image of

a tent at night.

**student number **<number>

wedding.jpg: 1274 x 2013

1470.811 sec elapsed

This image is not a student ID card. It is a vintage wedding

photograph. There is no student number or any information

related to a student ID visible in the image.

western-bunchberry.jpg: 3275 x 2746

1462.988 sec elapsed

This image shows a close - up of a plant with red berries and

green leaves. It is not a student ID card, and there is no

student number or any identifying information for a student ID

visible in the image.

**student number **<none>

winter-park.jpg: 800 x 1067

459.269 sec elapsed

Is this an image of a student ID card? No.

**student number **<number>

When was the picture taken?

This vintage wedding photo likely dates to the **1920s or

1930s**. Here’s how we can infer this:

1. **Attire and Styling**: - The groom wears a formal suit with

a bow tie and a *white boutonnière* (a flower tied with a bow,

common in this era). - The bride’s gown, veil, and overall

silhouette align with 1920–1930s wedding fashion, where women

often wore long, elegant dresses with veils, and men’s suits

were tailored for formality.

2. **Photographic Style**: - The black-and-white, grainy texture

and composition are characteristic of early-to-mid-20th-century

photography. While early 1900s photos had similar tones, the

style of the portrait (formal, posed, and the aesthetic of the

photograph) is more consistent with the 1920s or 1930s.

3. **Historical Context**: - Wedding photography conventions of

the 1920s–1930s emphasized formal poses, classic attire (no

“fashion-forward” elements that would appear later), and a focus

on simplicity and elegance. The lack of modern styling (e.g.,

bright fabrics or bold accessories) further supports this

timeframe.

While it’s possible the photo could span a small window (e.g.,

1925–1935), the overall aesthetic and clothing styles point most

strongly to the **1920s** or **early 1930s**.

1876.577 sec elapsed