Scenario Planning

library(ramp.xds)

Suppose we have a model that has been used to evaluate the history of malaria control. We have already done the following:

Set up a model for fitting

Fit it to data

Evaluate the impact of major events:

What was the effect of mass bed net distributions?

What was the effect of IRS?

In a nutshell, all this is an assessment of the past. Scenario planning is an attempt to set rational expectations about what would be likely to happen in the future under various scenarios. To do this, we need to do the following:

Make a forecast of malaria in the absence of control.

Using realistic estimates of the effects of vector control after evaluation:

What should we expect to observe from bed nets?

What should we expect from IRS?

What should we expect from mass drug administration?

What other interventions / modes of delivery are possible?

How much should be allocated to malaria outbreak control?

Given the costs of doing all these, what suite of interventions would save the most lives?

Here, we start with the model we fitted to data in the Evaluation vignette.

The first step is to set up a model.

We start with the simple SIS compartment model, one of the base models in ramp.xds:

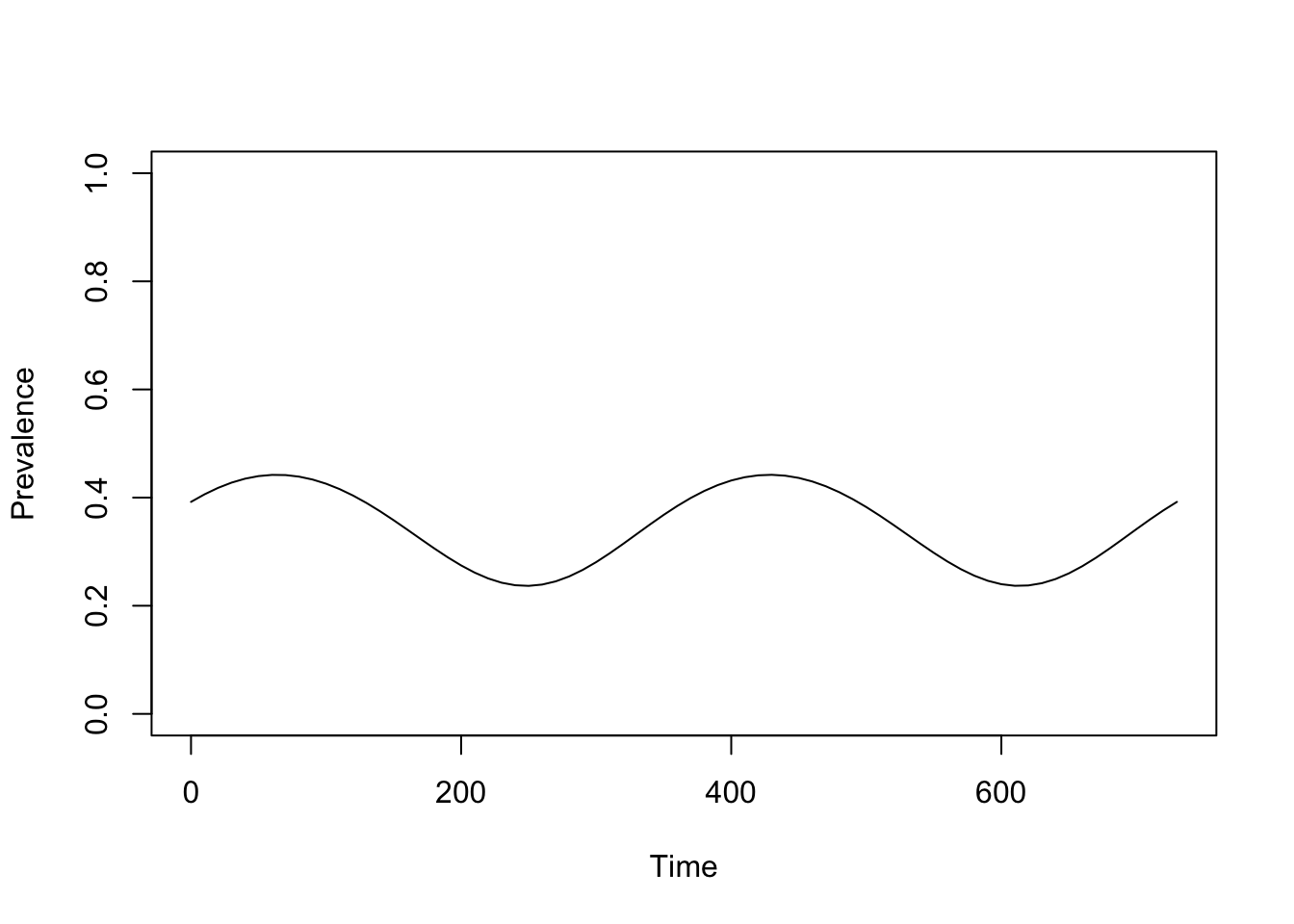

model <- xds_setup_eir(Xname = "SIS",
                       eir = 2/365,
                       season_par = makepar_F_sin(),
                       trend_par = makepar_F_spline(c(0:9)*365, rep(1,10)))
model <- xds_solve(model, 3650, 3650)
model <- last_to_inits(model)
model <- xds_scaling(model)
saveRDS(model, "./models/scenario_planning.rds")

At this point, some of the parameter values have been chosen arbitrarily, just to set up the structures we want to fit. Even so, we can visualize the outputs:

model <- xds_solve(model, 730, 10)

xds_plot_PR(model)
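To make the dynamics concrete, here is a minimal base-R sketch of an SIS model forced by a constant EIR. It is not how ramp.xds implements the model: the function `sis_eir`, the forward Euler integration, and the values of `b` (probability of infection per infectious bite) and `r` (clearance rate) are assumptions chosen only for illustration.

```r
# Minimal SIS sketch in base R (NOT the ramp.xds implementation).
# dI/dt = b * EIR(t) * (H - I) - r * I
# b and r are hypothetical illustrative values.
sis_eir <- function(eir_fun, days, H = 1, I0 = 0,
                    b = 0.55, r = 1/200, dt = 1) {
  times <- seq(0, days, by = dt)
  I <- numeric(length(times))
  I[1] <- I0
  for (k in seq_along(times)[-1]) {
    foi <- b * eir_fun(times[k - 1])              # force of infection
    dI  <- foi * (H - I[k - 1]) - r * I[k - 1]    # net change in infected
    I[k] <- I[k - 1] + dt * dI                    # forward Euler step
  }
  data.frame(time = times, pr = I / H)
}

# Constant daily EIR of 2 infectious bites per person per year
const_eir <- function(t) 2 / 365

out    <- sis_eir(const_eir, days = 3650)
pr_end <- tail(out$pr, 1)

# Analytical equilibrium prevalence for a constant EIR: h / (h + r)
h     <- 0.55 * 2 / 365
pr_eq <- h / (h + 1/200)
```

After ten simulated years, `pr_end` should sit essentially at `pr_eq`, which is the same reason the vignette runs the model for 3650 days before resetting the initial conditions.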

Fit it to data

Background – Our goal is to develop reliable malaria intelligence – estimates of malaria transmission that are ready for simulation-based analytics. In malaria analytics, we use PfPR as the pivotal metric, but most of the data we have is from clinical surveillance [1–3]. A concern with the clinical surveillance data is that it is collected passively, so it is impossible to design a study to validate clinical data. If we want to trust it, we need to develop algorithms that use the surveillance metrics to make testable predictions about the value of research metrics. The research metric of choice is the PfPR [1–3].

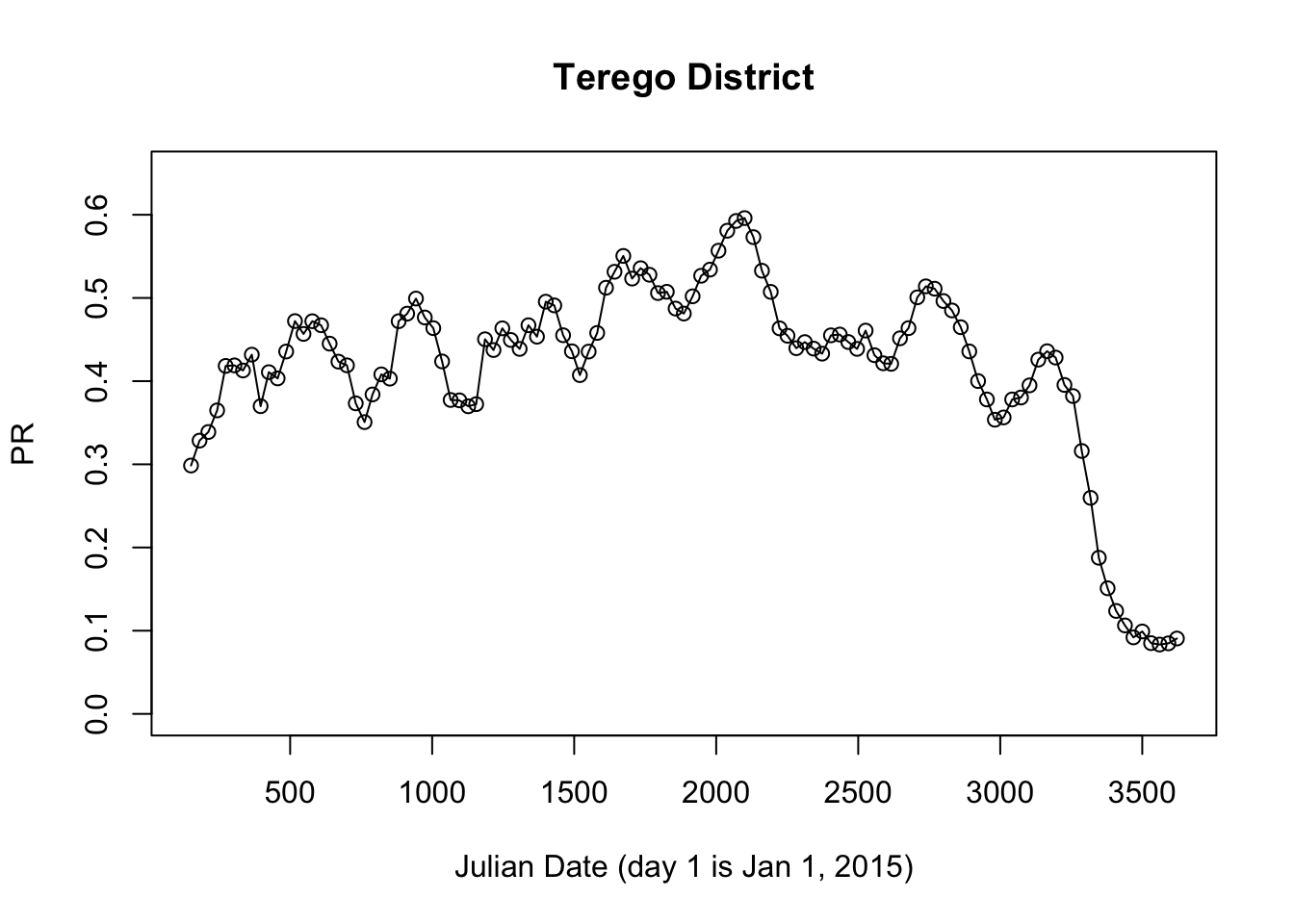

We have done this in Uganda. Here, we do some analysis using a time series of imputed PfPR data for Terego District, West Nile, Uganda.

library(ramp.uganda)

prts <- get_district_pfpr("Terego District")

with(prts, {

plot(jdate, pfpr, ylim = c(0,0.65), main = "Terego District", xlab = "Julian Date (day 1 is Jan 1, 2015)", ylab = "PR")

lines(jdate, pfpr)

})

The next step is to develop a basic understanding of malaria prevalence over time by fitting a model of exposure. We set up an EIR-forced model for population dynamics:

library(ramp.work)

Loading required package: ramp.control

Loading required package: MASS

The model that we fit for the EIR has three components:

a mean EIR

a seasonal pattern

an interannual pattern
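One way to picture these three components is as a product: a mean daily EIR, multiplied by a seasonal pattern that averages to one over a year, multiplied by a smooth interannual trend. The sketch below uses base R only. The functional forms, the names `seasonal` and `trend`, and the knot values are assumptions for illustration; they are not taken from `makepar_F_sin()` or `makepar_F_spline()`.

```r
# Illustrative EIR forcing: mean * seasonal pattern * interannual trend.
# All shapes and values below are hypothetical, not the ramp.xds defaults.
mean_eir <- 2 / 365                       # mean daily EIR

# Seasonal pattern: a sinusoid that averages to 1 over a 365-day year
seasonal <- function(t, amplitude = 0.5, phase = 0) {
  1 + amplitude * sin(2 * pi * (t - phase) / 365)
}

# Interannual pattern: a natural spline through yearly knots
knots_t <- (0:9) * 365
knots_y <- c(1, 1.2, 0.9, 1.1, 1, 0.8, 1, 1.3, 1, 0.9)   # made-up values
trend   <- splinefun(knots_t, knots_y, method = "natural")

# Daily EIR as the product of the three components
eir <- function(t) mean_eir * seasonal(t) * trend(t)

t_days <- 0:(9 * 365)
eir_t  <- eir(t_days)
```

Plotting `eir_t * 365` against `t_days` shows an annual cycle whose height drifts from year to year. Fitting, in this picture, amounts to adjusting the mean, the seasonal shape, and the knot values until the predicted PR tracks the data.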

fit_model <- setup_fitting(model, prts$pfpr, prts$jdate)
fit_model <- pr2history(fit_model)
fit_model <- xds_solve(fit_model,
                       times = seq(0, max(prts$jdate), by = 10))
saveRDS(fit_model, "./models/sp_fit_model.rds")

Now, we visualize the results:

show_fit(fit_model)

The fitted model is shown in red and the data in black. In the profile below, the vertical lines mark mass vector control events:

Mass bed net distribution (PBO nets) at the beginning of 2017

Mass bed net distribution (PBO nets) at the beginning of 2021

IRS with Fludora Fusion at the end of 2022 / beginning of 2023

Two interventions at the end of 2022 / beginning of 2023:

Mass distribution with Royal Guard bed nets

IRS with Actellic

IRS with Actellic at the end of 2024 / beginning of 2025

At this point, none of the information about vector control has been used in fitting the model.

Evaluate Impact

The question we want to address here is whether the interventions had any impact at all. Without doing any analysis, we can simply describe what we see:

If the 2017 bed net distribution had any effect, it is not apparent in the data

If the 2021 bed net distribution had any effect, it looks a lot like the interannual variability that preceded it. The bed net distribution at the beginning of 2021 might have dampened a seasonal peak in 2021. It also could have reversed an upwards trend.

The IRS round with Fludora Fusion might have had a small effect. In the period that follows, imputed PR reaches its lowest value since 2015.

Vector control at the end of 2023 seems to have caused a serious dip in malaria prevalence, but it is not clear whether the effect was attributable to IRS, to the nets, or to a combination.

It is possible that the decline is entirely due to Actellic, which has worked well elsewhere in Uganda.

If we compared these nets to the two previous rounds, we might be tempted to believe the nets did nothing. In this last round, however, the nets used in Terego District were Royal Guard, a next-generation net. Maybe the Royal Guard nets did all the work.

Not enough time has elapsed after 2024 to make a judgment.

How can we do this a bit more rigorously? A cursory examination suggests that every district's time series is unique. We must therefore try to establish a baseline for each district, using the time series around each interval we want to evaluate. It’s not ideal, but it’s probably the best we can do.

The idea is to use the flanking data to set an expectation about what would have happened in a time interval after the intervention.
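As a rough illustration of the flanking-data idea, the sketch below fits a simple seasonal regression to the observations outside a post-intervention window, predicts what PR would have been inside the window, and compares that expectation with what was observed. Everything here is an assumption made for illustration: the data are synthetic, the harmonic `lm()` baseline is a stand-in rather than the method used in ramp.work, and the window dates and effect size are invented.

```r
# Baseline-from-flanking-data sketch using synthetic data (base R only).
set.seed(1)
day  <- seq(0, 6 * 365, by = 30)
pfpr <- 0.35 + 0.08 * sin(2 * pi * day / 365) + rnorm(length(day), sd = 0.01)

# Hypothetical intervention: PR drops by 0.10 for one year starting on day 1460
win_start <- 4 * 365
win_end   <- 5 * 365
in_window <- day >= win_start & day < win_end
pfpr[in_window] <- pfpr[in_window] - 0.10

d <- data.frame(day  = day,
                pfpr = pfpr,
                sin1 = sin(2 * pi * day / 365),
                cos1 = cos(2 * pi * day / 365))

# Fit the seasonal baseline to the flanking data only
baseline_fit <- lm(pfpr ~ sin1 + cos1, data = d[!in_window, ])

# Expected PR in the window had nothing changed, and the estimated effect
expected <- predict(baseline_fit, newdata = d[in_window, ])
effect   <- mean(d$pfpr[in_window] - expected)
```

Here `effect` recovers a value close to the simulated drop of 0.10. With real district data the baseline would also have to absorb interannual variability, which is why the choice of flanking intervals matters.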