A History of Interfaces

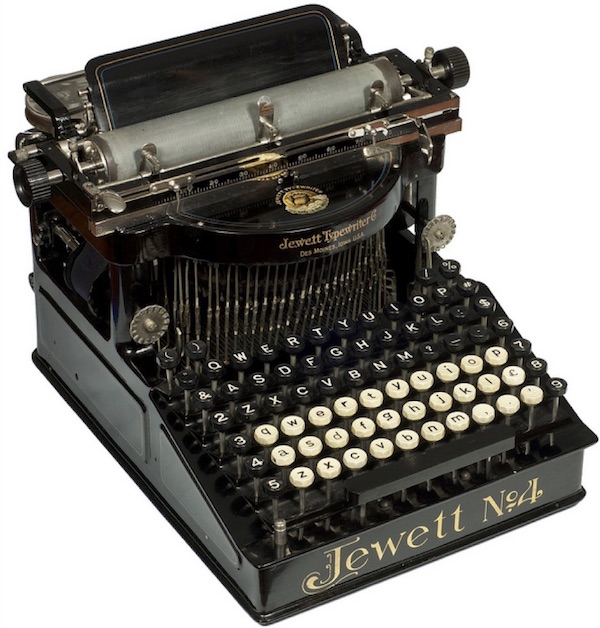

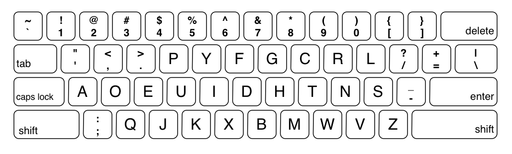

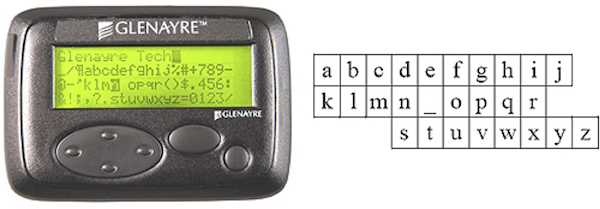

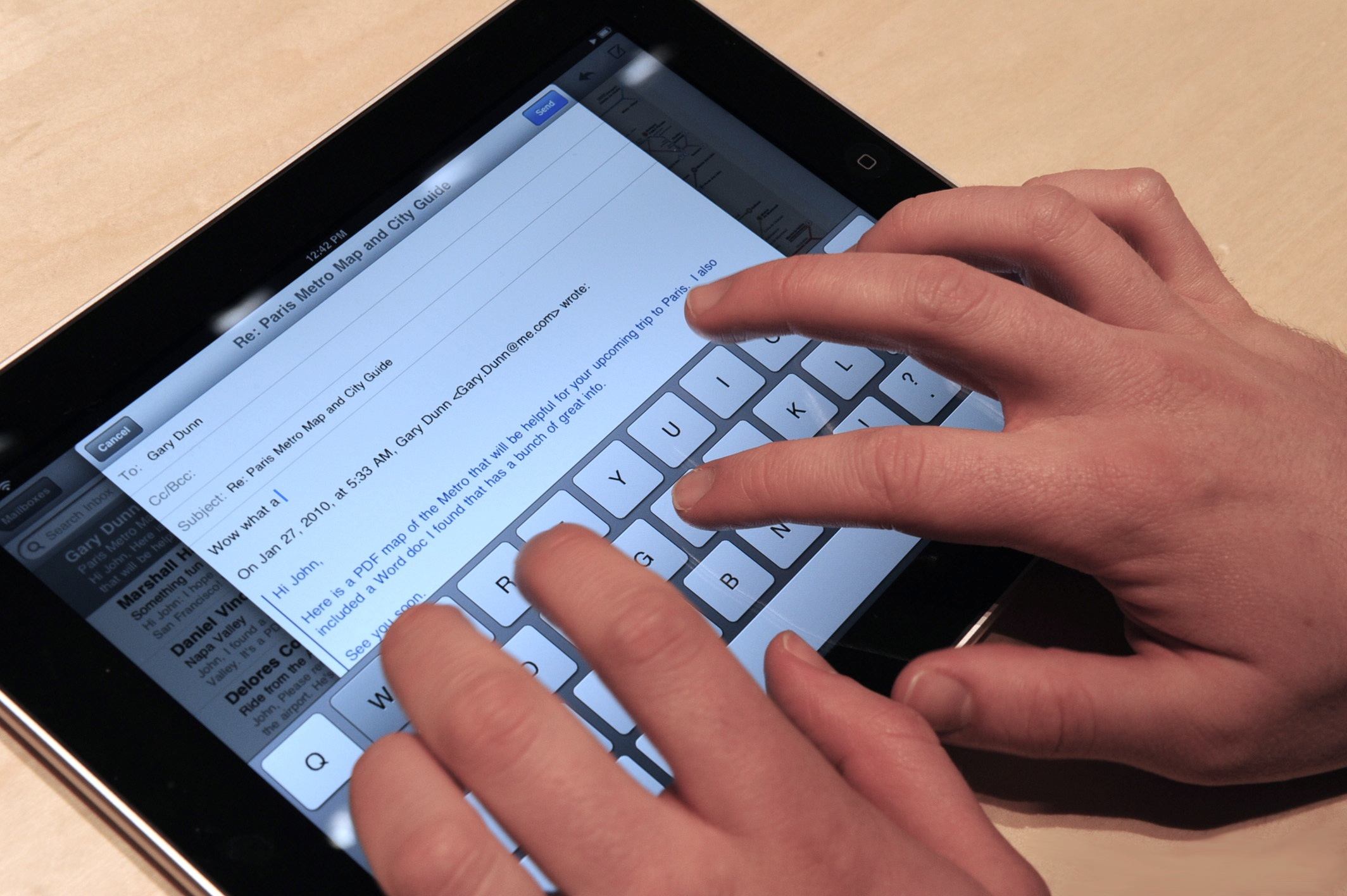

In a rapidly evolving field of augmented reality, driverless cars, and rich social media, is there any more mundane way to start a book on user interfaces than history? Probably not. And yet, for a medium that is invented entirely from imagination, the history of user interfaces is a reminder that the interactive world we experience today could have been different. Files, keyboards, mice, and touchscreens: all of these concepts were invented to solve very real challenges with interacting with computers, and but for a few important people and places in the 20th century, we might have invented entirely different ways of interacting with computers (Card and Moran 1986).

Computing began as an idea: Charles Babbage, a mathematician, philosopher, and inventor in London in the early 19th century, began imagining a kind of mechanical machine that could automatically calculate mathematical formulas. He called it the “Analytical Engine”, and conceived of it as a device that would encode instructions for calculations. Taking arbitrary data encoded as input on punch cards, this engine would be able to calculate formulas automatically and much faster than people. And indeed, at the time, people were the bottleneck: if a banker wanted a list of numbers to be added, they needed to hire a computer (a person who quickly and correctly performed arithmetic) to add them. Babbage’s “Analytical Engine” promised to do the work of (human) computers much more quickly and accurately. Babbage later mentored Ada Lovelace, the daughter of a wealthy aristocrat; Lovelace loved the beauty of mathematics, and was enamored of Babbage’s vision, publishing the first algorithms intended to be executed by such a machine. Thus began the age of computing, shaped largely by values of profit but also the beauty of mathematics.

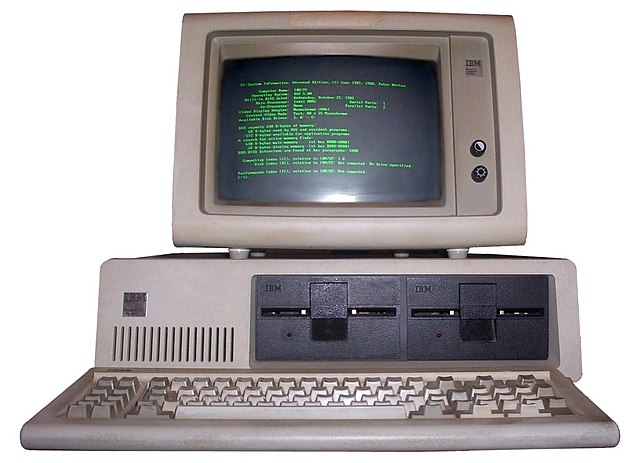

While no one ever succeeded in making a mechanical analytical engine, one hundred years later, electronic technology made digital ones possible (Gleick and Shapiro 2011). But Babbage’s vision of using punch cards for input remained intact: if you used a computer, it meant constructing programs out of individual machine instructions like add, subtract, or jump, and encoding them onto punch cards to be read by a mainframe. And rather than being driven by a desire to lower costs and increase profit in business, these computers were instruments of war, decrypting German secret messages and more quickly calculating ballistic trajectories.

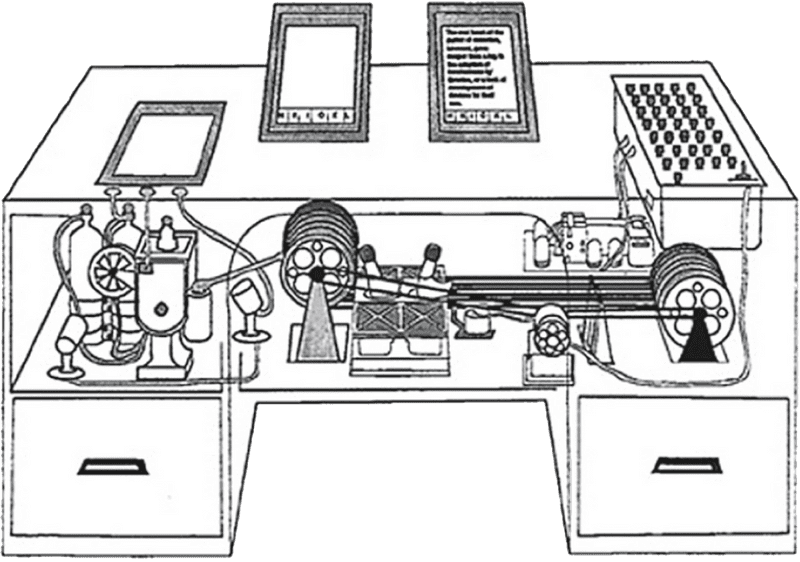

As wars came to a close, some found the vision of computers as business and war machines too limiting. Vannevar Bush, a science administrator who headed the U.S. Office of Scientific Research and Development, had a much bigger vision. He wrote a 1945 article in The Atlantic Monthly called “As We May Think.” In it, he envisioned a device called the “Memex” in which people could store all of their information, including books, records, and communications, and access them with speed and flexibility (Bush 1945).

Here is how Bush described the Memex:

Consider a future device for individual use, which is a sort of mechanized private file and library. It needs a name, and, to coin one at random, “memex” will do. A memex is a device in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility. It is an enlarged intimate supplement to his memory... Wholly new forms of encyclopedias will appear, ready made with a mesh of associative trails running through them, ready to be dropped into the memex and there amplified. The lawyer has at his touch the associated opinions and decisions of his whole experience, and of the experience of friends and authorities. The patent attorney has on call the millions of issued patents, with familiar trails to every point of his client’s interest. The physician, puzzled by a patient’s reactions, strikes the trail established in studying an earlier similar case, and runs rapidly through analogous case histories, with side references to the classics for the pertinent anatomy and histology. The chemist, struggling with the synthesis of an organic compound, has all the chemical literature before him in his laboratory, with trails following the analogies of compounds, and side trails to their physical and chemical behavior.

Vannevar Bush (1945). As we may think. The Atlantic Monthly, 176(1), 101-108.

Does this remind you of anything? The internet, hyperlinks, Wikipedia, networked databases, social media. All of it is there in this prescient description of human augmentation, including rich descriptions of the screens, levers, and other controls for accessing information.

J.C.R. Licklider, an American psychologist and computer scientist, was fascinated by Bush’s vision, writing later in his “Man-Computer Symbiosis” (Licklider 1960):

...many problems that can be thought through in advance are very difficult to think through in advance. They would be easier to solve, and they could be solved faster, through an intuitively guided trial-and-error procedure in which the computer cooperated, turning up flaws in the reasoning or revealing unexpected turns in the solution. Other problems simply cannot be formulated without computing-machine aid. Poincare anticipated the frustration of an important group of would-be computer users when he said, “The question is not, ‘What is the answer?’ The question is, ‘What is the question?’” One of the main aims of man-computer symbiosis is to bring the computing machine effectively into the formulative parts of technical problems.

He then talked about visions of a “thinking center” that “will incorporate the functions of present-day libraries together with anticipated advances in information storage and retrieval,” connecting individuals and computers through desk displays, wall displays, speech production and recognition, and other forms of artificial intelligence. In his role in the United States Department of Defense Advanced Research Projects Agency and as a professor at MIT, Licklider funded and facilitated the research that eventually led to the internet and the graphical user interface.

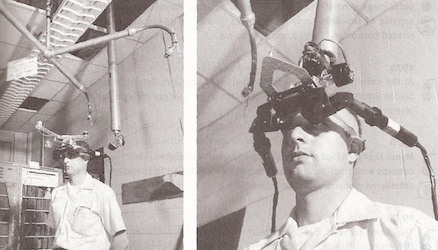

One person that Bush and Licklider’s ideas influenced was MIT computer scientist Ivan Sutherland. He followed this vision of human augmentation by exploring interactive sketching on computers, working on a system called Sketchpad for his dissertation (Sutherland 1963).

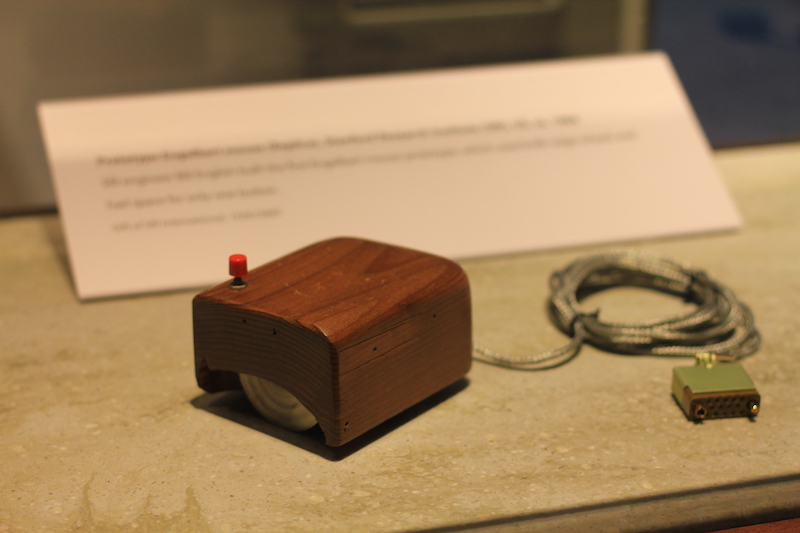

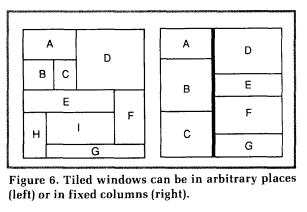

Sketchpad and Bush and Licklider’s articles inspired Douglas Engelbart to found the Augmentation Research Center at the Stanford Research Institute (SRI) in the early 1960s. Over the course of about six years, with funding from NASA and the U.S. Defense Department’s Advanced Research Projects Agency (known today as DARPA), Engelbart and his team prototyped the “oN-Line System” (or the NLS), which attempted to engineer much of Bush’s vision. NLS had networking, windows, hypertext, graphics, command input, video conferencing, the computer mouse, word processing, file version control, text editing, and numerous other features of modern computing. Engelbart himself demoed the system to a live audience in what is often called “The Mother of All Demos.” You can see the entire demonstration below.

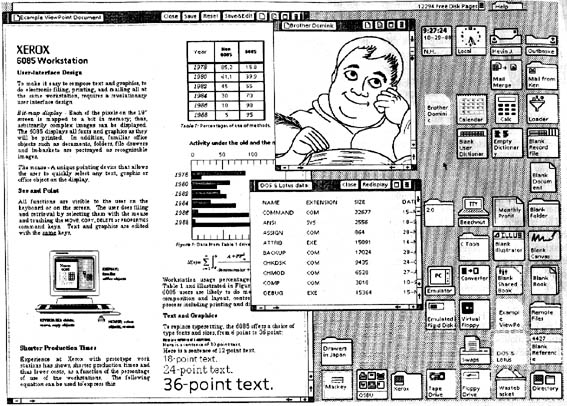

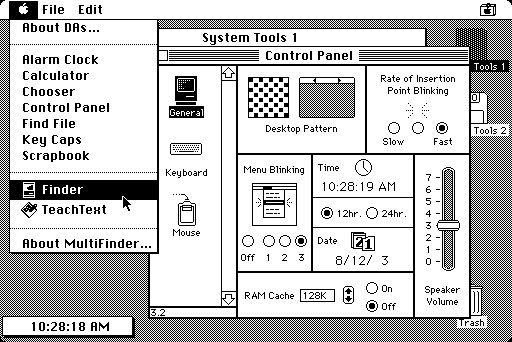

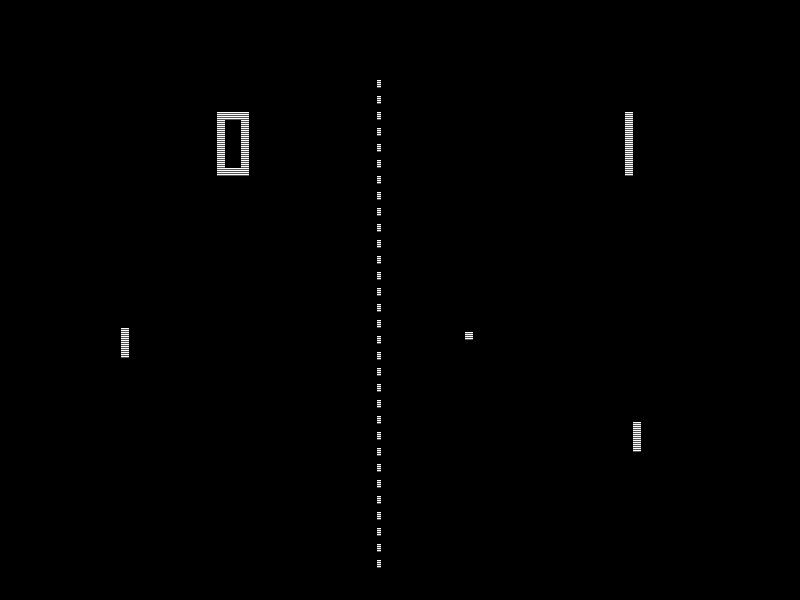

Engelbart’s research team eventually disbanded and many of them ended up at the Xerox Palo Alto Research Center (Xerox PARC). Many were truly inspired by the demo and wanted to use the freedom Xerox had given them to make the NLS prototype a reality. One of the key members of this team was Alan Kay, who had worked with Ivan Sutherland and seen Engelbart’s demo. Kay was interested in ideas of objects, object-oriented programming, and windowing systems, and created the programming language and environment Smalltalk. He was a key member of a team at PARC that developed the Alto in the mid-1970s. The Alto included the first operating system based on a graphical user interface with a desktop metaphor, and included WYSIWYG word processing, an email client, a vector graphics editor, a painting program, and a multi-player networked video game.

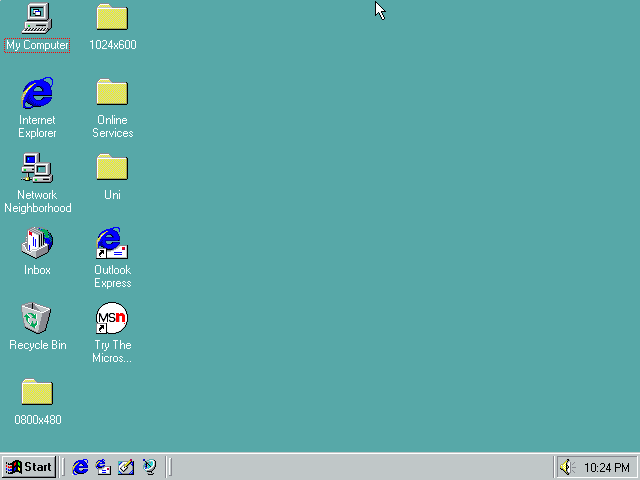

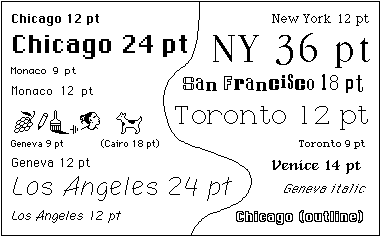

Now, these were richly envisioned prototypes, but they were not products. You couldn’t buy an Alto at a store. This changed when Steve Jobs visited Xerox PARC in 1979, where he saw a demo of the Alto’s GUI, its Smalltalk-based programming environment, and its networking. Jobs was particularly excited about the GUI, and recruited several of the Xerox PARC researchers to join Apple, leading to the Lisa and later Macintosh computers, which offered the first mass-market graphical user interfaces. Apple famously marketed the Macintosh in its 1984 advertisement, framing the GUI as the first salvo in a war against Big Brother, a reference to the book 1984.

As the Macintosh platform expanded, it drew many innovators, including many Silicon Valley entrepreneurs with ideas for how to make personal computers even more powerful. This included ideas like presentation software such as PowerPoint, which grew out of the same Xerox PARC vision of what-you-see-is-what-you-get text and graphics manipulation.

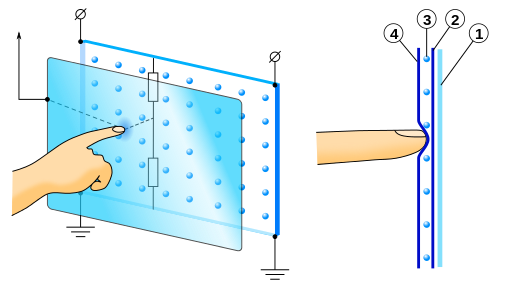

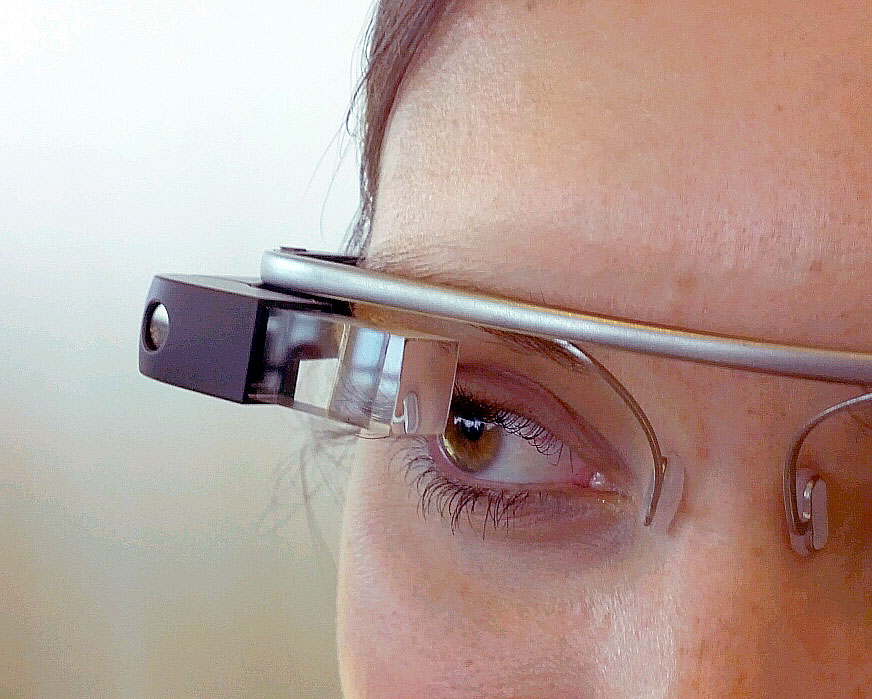

Since the release of the Macintosh, companies like Apple, Microsoft, and now Google have driven much of the engineering of user interfaces, deviating little from the original visions inspired by Bush, Licklider, Sutherland, Engelbart, and Kay. But governments and industry continued to harvest basic research for new paradigms of interaction, including the rapid proliferation of capacitive touch screens in the early 2000s in smartphones (Myers 1998; Myers et al. 2000; van Dam 1997; Weiser 1991).

Reflecting on this history, one of the most remarkable things is how powerfully a single vision catalyzed an entire world’s experience with computers. Is there something inherently fundamental about networked computers, graphical user interfaces, and the internet that was inevitable? Or if someone else had written an alternative vision for computing, would we be having different interactions with computers and therefore different interactions with each other through computing? And is it still possible to imagine different futures of interactive computing that will shape the future yet? This history and these questions remind us that nothing about our interactions with computing is necessarily “true” or “right”: they’re just ideas that we’ve collectively built, shared, and learned, and they can change. In the coming chapters, we’ll uncover what is fundamental about user interfaces and explore alternative visions of interacting with computers that may require new fundamentals.

References

- Vannevar Bush (1945). As we may think. The Atlantic Monthly, 176(1), 101-108.

- Stuart K. Card, Thomas P. Moran (1986). User technology: From pointing to pondering. ACM Conference on the History of Personal Workstations.

- James Gleick and Rob Shapiro (2011). The Information.

- J. C. R. Licklider (1960). Man-Computer Symbiosis. IRE Transactions on Human Factors in Electronics.

- Brad Myers, Scott E. Hudson, Randy Pausch (2000). Past, Present and Future of User Interface Software Tools. ACM Transactions on Computer-Human Interaction.

- Brad A. Myers (1998). A brief history of human-computer interaction technology. ACM interactions.

- Ivan Sutherland (1963). Sketchpad, A Man-Machine Graphical Communication System. Massachusetts Institute of Technology, Introduction by A.F. Blackwell & K. Rodden. Technical Report 574. Cambridge University Computer Laboratory.

- Andries van Dam (1997). Post-WIMP user interfaces. Communications of the ACM.

- Mark Weiser (1991). The Computer for the 21st Century. Scientific American 265, 3 (September 1991), 94-104.

A Theory of Interfaces

First history and now theory? What a way to start a practical book about user interfaces. But as social psychologist Kurt Lewin said, “There’s nothing as practical as a good theory” (Lewin 1943).

Let’s start with why theories are practical. Theories, in essence, are explanations for what something is and how something works. These explanatory models of phenomena in the world help us not only comprehend the phenomena, but also predict what will happen in the future with some confidence, and perhaps more importantly, they give us a conceptual vocabulary to exchange ideas about the phenomena. A good theory about user interfaces would be practical because it would help explain what user interfaces are, and what governs whether they are usable, learnable, efficient, error-prone, etc.

HCI researchers have written a lot about theory, including theories from other disciplines, and new theories specific to HCI (Jacko 2012; Rogers 2012).

What are interfaces?

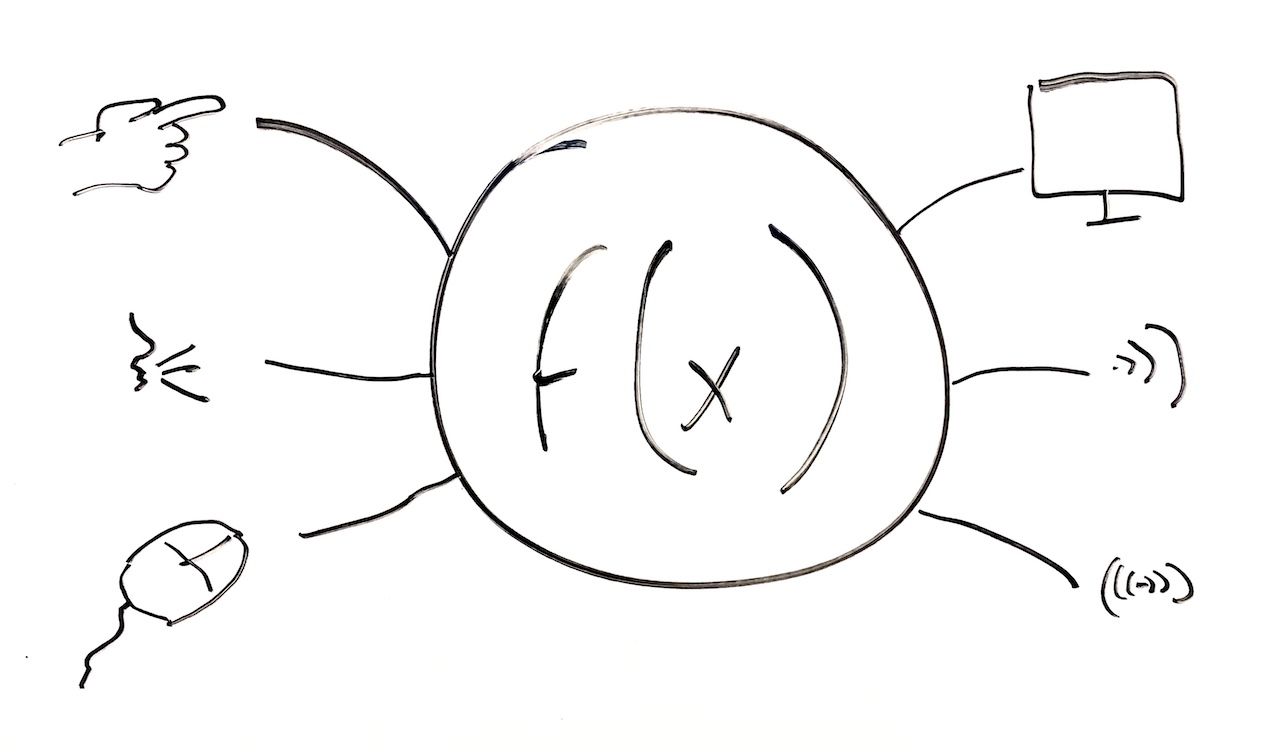

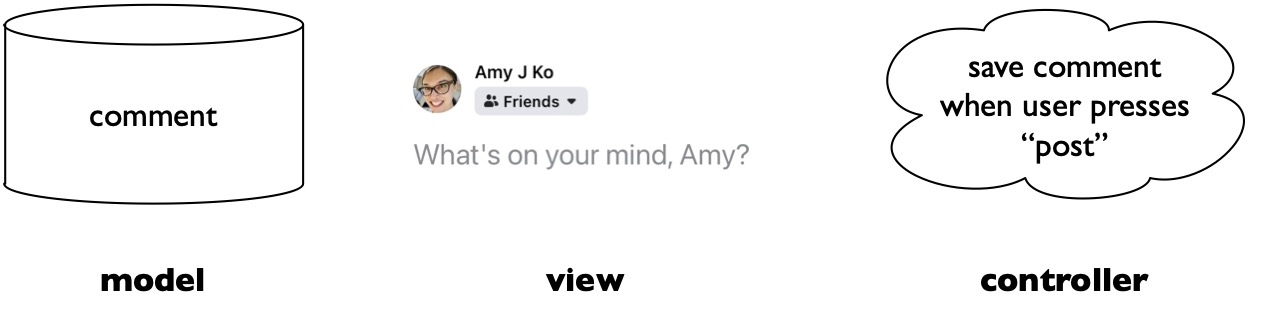

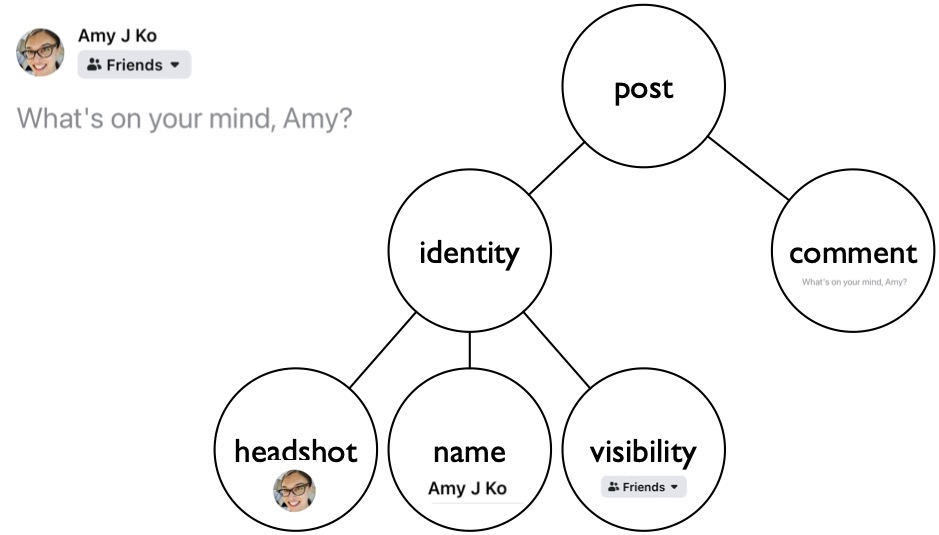

Let’s begin with what user interfaces are. User interfaces are software and/or hardware that bridge the world of human action and the world of computer action: they map human activities in the physical world (clicks, presses, taps, speech, etc.) to functions defined in a computer program. The first world is the natural world of matter, motion, sensing, action, reaction, and cognition, in which people (and other living things) build models of the things around them in order to make predictions about the effects of their actions. It is a world of stimulus, response, and adaptation. For example, when an infant is learning to walk, they’re constantly engaged in perceiving the world, taking a step, and experiencing things like gravity and pain, all of which refine a model of locomotion that prevents pain and helps achieve other objectives. Our human ability to model the world and then reshape the world around us using those models is what makes us so adaptive to environmental change. It’s the learning we do about the world that allows us to survive and thrive in it. Computers, and the interfaces we use to operate them, are among the things that humans adapt to.

The other world is the world of computing. This is a world ultimately defined by a small set of arithmetic operations such as adding, multiplying, and dividing, and an even smaller set of operations that control which operations happen next. These instructions, combined with data storage, input devices to acquire data, and output devices to share the results of computation, define a world in which there is only forward motion. The computer always does what the next instruction says to do, whether that’s reading input, writing output, computing something, or making a decision. Sitting atop these instructions are functions, which take input and produce output using some algorithm. Functions are an idea from mathematics: they map some input (e.g., numbers, text, or other data) to some output. They can be as simple as basic arithmetic (e.g., multiply, which takes two numbers and computes their product), or as complex as machine vision (e.g., object recognition, which takes an image and a machine-learned classifier trained on millions of images and produces a set of text descriptions of objects in the image). Essentially all computer behavior leverages this idea of a function, and the result is that all computer programs (and all software) are essentially collections of functions that humans can invoke to compute things and have effects on data. All of this functional behavior is fundamentally deterministic; it is data from the world (content, clocks, sensors, network traffic, etc.), and increasingly, data that models the world and the people in it, that gives it its unpredictable, sometimes magical or intelligent qualities.
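To make the idea of a function concrete, here is a minimal Python sketch. The names `multiply` and `describe_temperature`, and the temperature thresholds, are illustrative assumptions rather than part of any particular program. Both functions are deterministic: the same input always yields the same output, and any apparent variety in behavior comes from the data passed in.

```python
def multiply(a: float, b: float) -> float:
    """Map two numbers to their product."""
    return a * b


def describe_temperature(celsius: float) -> str:
    """Map a sensor reading to a text description (thresholds are arbitrary)."""
    if celsius < 10:
        return "cold"
    if celsius < 25:
        return "mild"
    return "hot"


# Calling a function with the same input always produces the same output.
print(multiply(6, 7))
print(describe_temperature(18.5))
```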

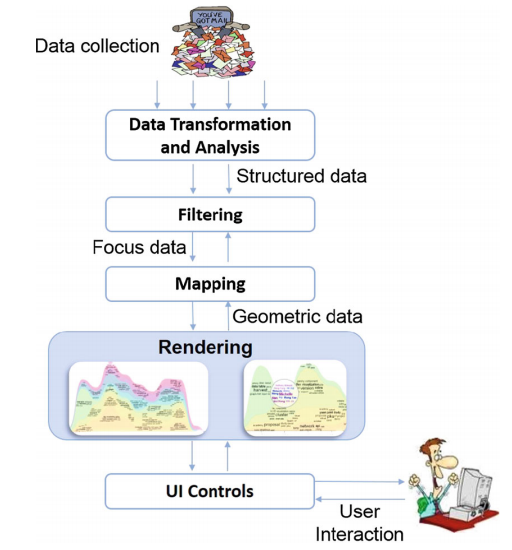

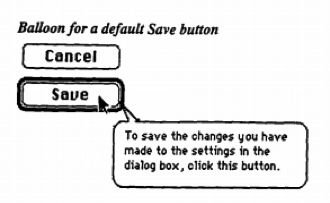

Now, both of the above are basic theories of people and computers. In light of this, what are user interfaces? User interfaces are mappings from the sensory, cognitive, and social human world to computational functions in computer programs. For example, a save button in a user interface is nothing more than an elaborate way of mapping a person’s physical mouse click or tap on a touch screen to a command to execute a function that will take some data in memory and permanently store it somewhere. Similarly, a browser window is an elaborate way of taking the carefully rendered pixel-by-pixel layout of a web page and displaying it on a computer screen so that sighted people can perceive it with their human perceptual systems. These mappings from physical to digital, and from digital to physical, are how we talk to computers.
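The save button example can be sketched in a few lines of Python. Everything here is a hypothetical illustration: the `Button` class, the `handle_click` dispatcher, and the dictionary standing in for permanent storage are assumptions made for this sketch, not how any real toolkit is implemented. The point is only that a click at some screen coordinate gets mapped to a function call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Button:
    """A rectangular screen region mapped to a command (hypothetical)."""
    label: str
    x: int
    y: int
    width: int
    height: int
    command: Callable[[], str]

    def contains(self, px: int, py: int) -> bool:
        # A click "hits" the button if it lands inside its rectangle.
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


# A dictionary stands in for permanent storage in this sketch.
storage: Dict[str, str] = {}
document = {"name": "notes.txt", "text": "Draft of chapter one."}


def save() -> str:
    """Copy the in-memory document into storage and report what happened."""
    storage[document["name"]] = document["text"]
    return f"Saved {document['name']}"


buttons: List[Button] = [Button("Save", 10, 10, 80, 24, save)]


def handle_click(px: int, py: int, buttons: List[Button]) -> Optional[str]:
    """Map a physical click location to the command of the button under it."""
    for button in buttons:
        if button.contains(px, py):
            return button.command()
    return None  # The click landed on nothing interactive.


print(handle_click(20, 20, buttons))
```

A click inside the rectangle invokes `save`; a click anywhere else returns `None`, which is one small way an interface can fail to give feedback.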

Learning interfaces

If we didn’t have user interfaces to execute functions in computer programs, it would still be possible to use computers. We’d just have to program them using programming languages (which, as we discuss later in Programming Interfaces, can be hard to learn). What user interfaces offer are more learnable representations of these functions, their inputs, their algorithms, and their outputs, so that a person can build mental models of these representations that allow them to sense, take action, and achieve their goals, as they do with anything in the natural world. However, there is nothing natural about user interfaces: buttons, scrolling, windows, pages, and other interface metaphors are all artificial, invented ideas, designed to help people use computers without having to program them. While this makes using computers easier, interfaces must still be learned.

Don Norman, in his book The Design of Everyday Things (Norman 2013), framed this learning challenge as a gulf of execution: the gap between a person’s goal and the actions they must learn to take on an interface to achieve it. Research on software learnability surveys how this learning can be measured (Grossman et al. 2009).

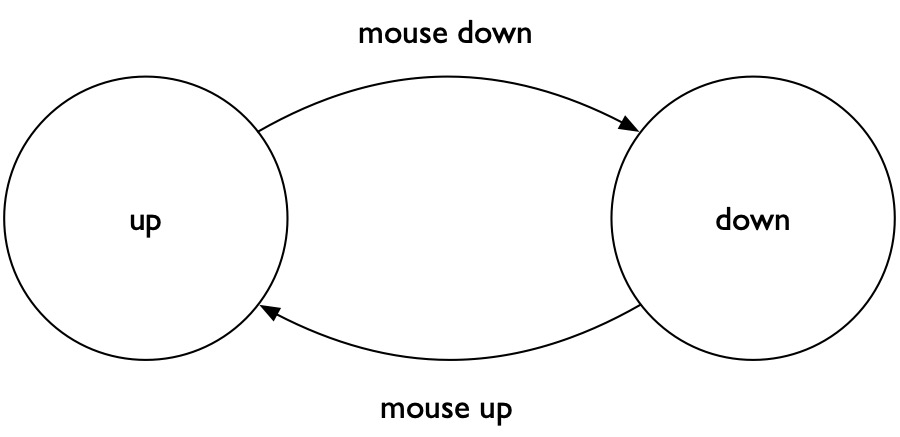

What is it that people need to learn to take action on an interface? Norman (2013), and later Gaver (1991) and Hartson (2003), framed the answer in terms of affordances: the actions that an interface makes possible for the person using it.

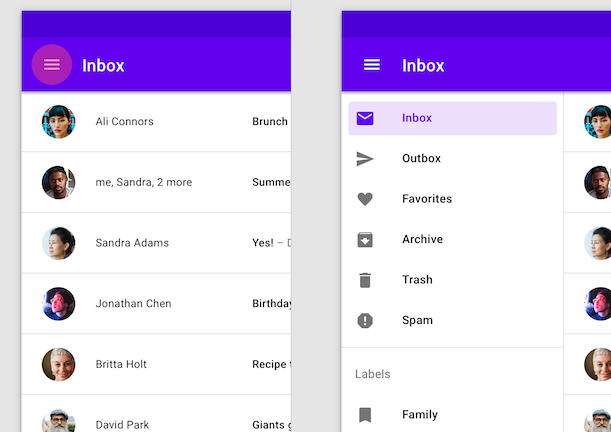

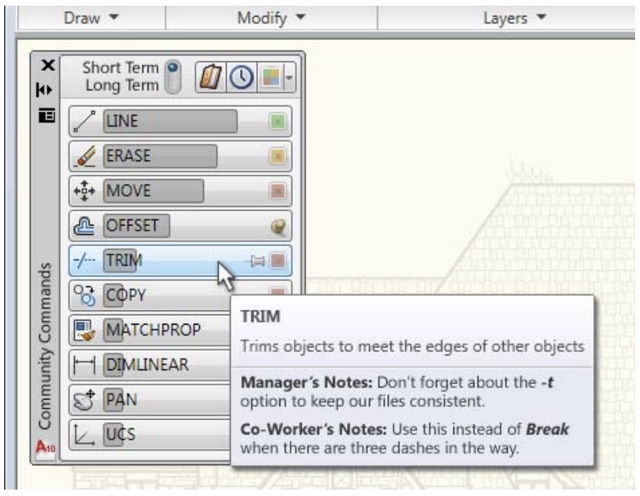

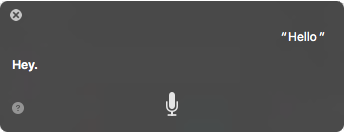

How can a person know what affordances an interface has? That’s where the concept of a signifier becomes important. Signifiers are any sensory or cognitive indicator of the presence of an affordance. Consider, for example, how you know that a computer mouse can be clicked. Its physical shape might evoke the industrial design of a button. It might have little tangible surfaces that entreat you to push your finger on them. A mouse could even have visual sensory signifiers, like a slowly changing colored surface that attempts to say, “I’m interactive, try touching me.” These are mostly sensory indicators of an affordance. Personal digital assistants like Alexa, in contrast, lack most of these signifiers. What about an Amazon Echo says, “You can say ‘Alexa’ and speak a command”? In this case, Amazon relies on tutorials, stickers, and even television commercials to signify this affordance.

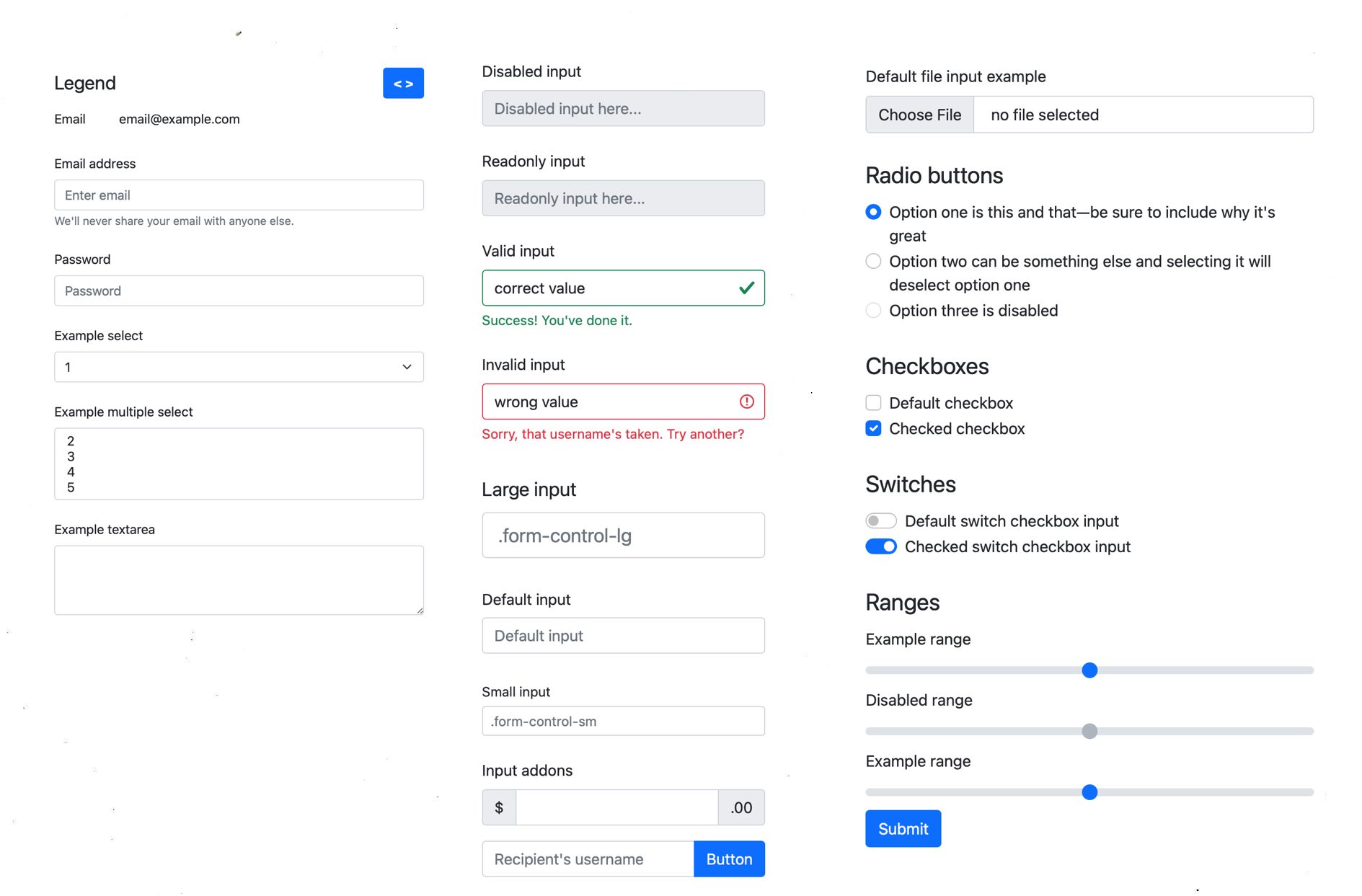

While both of these examples involve hardware, the same concepts of affordance and signifier apply to software too. Buttons in a graphical user interface have an affordance: if you click within their rectangular boundary, the computer will execute a command. If you have sight, you know a button is clickable because long ago you learned that buttons have particular visual properties such as a rectangular shape and a label. If you are blind, you might know a button is clickable because your screen reader announces that something is a “button” and reads its label. All of this requires you to know that interfaces have affordances such as buttons, and that those affordances are signified by a particular visual motif or spoken announcement. Therefore, a central challenge of designing a user interface is deciding what affordances an interface will have and how to signify that they exist.

But execution is only half of the story. Norman also discussed gulfs of evaluation, which are the gaps between the output of a user interface and a user’s goal: everything a person must learn in order to understand the effect of their action on an interface, including understanding error messages or a lack of response from an interface. Once a person has performed some action on a user interface via some functional affordance, the computer will take that input and do something with it. It’s up to the user to then map that feedback onto their goal. If that mapping is simple and direct, then the gulf is small. For example, consider an interface for printing a document. If after pressing a print button, the feedback was “Your document was sent to the printer for printing,” that would clearly convey progress toward the user’s goal, minimizing the gulf between the output and the goal. If after pressing the print button, the feedback was “Job 114 spooled,” the gulf is larger, forcing the user to know what a “job” is, what “spooling” is, and what any of that has to do with printing their document.
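The printing example can be sketched as two ways of turning the same internal event into feedback. The `PrintEvent` type, its fields, and both functions are hypothetical names invented for this illustration; real print systems report status differently. The first function exposes the system’s vocabulary, while the second phrases the same event in terms of the user’s goal.

```python
from dataclasses import dataclass


@dataclass
class PrintEvent:
    """An internal record of what the print system did (hypothetical)."""
    job_id: int
    document: str
    status: str  # e.g. "spooled" or "failed"


def system_feedback(event: PrintEvent) -> str:
    """Feedback in the system's vocabulary: a larger gulf of evaluation."""
    return f"Job {event.job_id} {event.status}"


def goal_feedback(event: PrintEvent) -> str:
    """Feedback in terms of the user's goal: a smaller gulf of evaluation."""
    if event.status == "spooled":
        return f"Your document '{event.document}' was sent to the printer for printing."
    if event.status == "failed":
        return (f"Your document '{event.document}' could not be printed. "
                "Check that the printer is on and try again.")
    return f"Your document '{event.document}' has status: {event.status}."


event = PrintEvent(job_id=114, document="report.pdf", status="spooled")
print(system_feedback(event))
print(goal_feedback(event))
```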

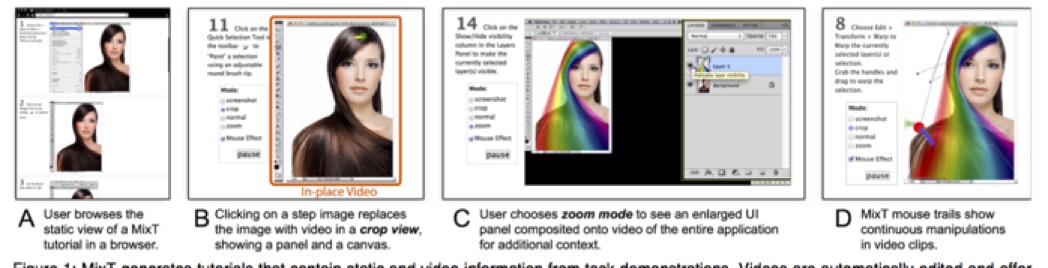

In designing user interfaces, there are many ways to bridge gulfs of execution and evaluation. One is to just teach people all of these affordances and help them understand all of the user interface’s feedback. A person might take a class to learn the range of tasks that can be accomplished with a user interface, steps to accomplish those tasks, concepts required to understand those steps, and deeper models of the interface that can help them potentially devise their own procedures for accomplishing goals. Alternatively, a person can read tutorials, tooltips, help, and other content, each taking the place of a human teacher, approximating the same kinds of instruction a person might give. There are entire disciplines of technical writing and information experience that focus on providing seamless, informative, and encouraging introductions to how to use a user interface (Lior 2013).

To many user interface designers, the need to explicitly teach a user interface is a sign of design failure. There is a belief that designs should be “self-explanatory” or “intuitive.” What these phrases actually mean is that the interface is doing the teaching rather than a person or some documentation. To bridge gulfs of execution, a user interface designer might conceive of physical, cognitive, and sensory affordances that are quickly learnable, for example. One way to make them quickly learnable is to leverage conventions, which are essentially user interface design patterns that people have already learned by learning other user interfaces (e.g., a web form, a login screen, or a hamburger menu on a mobile website). Want to make it easy to learn how to add an item to a cart on an e-commerce website? Use a button labeled “Add to cart,” a design convention that most people will have already learned from using other e-commerce sites. Alternatively, interfaces might even try to anticipate what people want to do, personalizing what’s available, and in doing so, minimizing how much a person has to learn. From a design perspective, there’s nothing inherently wrong with learning; it’s just a cost that a designer may or may not want to impose on a new user. (Perhaps learning a new interface affords new power not possible with old conventions, and so the cost is justified.)

To bridge gulfs of evaluation, a user interface needs to provide feedback that explains what effect the person’s action had on the computer (e.g., confirmation messages, error messages, or visible updates). Some feedback is explicit instruction that essentially teaches the person what functional affordances exist, what their effects are, what their limitations are, how to invoke them in other ways, what new functional affordances are now available and where to find them, what to do if the effect of the action wasn’t desired, and so on. Clicking the “Add to cart” button, for example, might result in some instructive feedback like this:

I added this item to your cart. You can look at your cart over there. If you didn’t mean to add that to your cart, you can take it out like this. If you’re ready to buy everything in your cart, you can go here. Don’t know what a cart is? Read this documentation.

Some feedback is implicit, suggesting the effect of an action, but not explaining it explicitly. For example, after pressing “Add to cart,” there might be an abstract icon of an item being added to a cart, with some kind of animation to capture the person’s attention. Whether implicit or explicit, all of this feedback is still contextual instruction on the specific effect of the user’s input (and optionally more general instruction about other affordances in the user interface that will help a user accomplish their goal).

The result of all of this learning is a mental model: a person’s beliefs about an interface’s affordances and how to operate them (Carroll and Anderson 1987).

Note that in this entire discussion, we’ve said little about tasks or goals. The broader HCI literature theorizes about those extensively (Jacko 2012; Rogers 2012).

Using theory

You have some new concepts about user interfaces, and an underlying theoretical sense about what user interfaces are and why designing them is hard. Let’s recap:

- User interfaces bridge human goals and cognition to functions defined in computer programs.

- To use interfaces successfully, people must learn an interface’s affordances and how they can be used to achieve their goals.

- This learning must come from somewhere, either instruction by people, explanations in documentation, or teaching by the user interface itself.

- Most user interfaces have large gulfs of execution and evaluation, requiring substantial learning.

The grand challenge of user interface design is therefore trying to conceive of interaction designs that have small gulfs of execution and evaluation, while also offering expressiveness, efficiency, power, and other attributes that augment human ability.

These ideas are broadly relevant to all kinds of interfaces, including all those already invented, and new ones invented every day in research. And so these concepts are central to design. Starting from this theoretical view of user interfaces allows you to ask the right questions. For example, rather than trying to vaguely identify the most “intuitive” experience, you can systematically ask: “Exactly what are our software’s functional affordances and what signifiers will we design to teach them?” Or, rather than relying on stereotypes, such as “older adults will struggle to learn to use computers,” you can be more precise, saying, “This particular population of adults has not learned the design conventions of iOS, and so they will need to learn those before successfully utilizing this application’s interface.”

Approaching user interface design and evaluation from this perspective will help you identify major gaps in your user interface design analytically and systematically, rather than relying only on observations of someone struggling to use an interface, or worse yet, stereotypes or ill-defined concepts such as “intuitive” or “user-friendly.” Of course, in practice, not everyone will know these theories, and other constraints will often prevent you from doing what you know might be right. That tension between theory and practice is inevitable, and something we’ll return to throughout this book.

References

- Carroll, J. M., Anderson, N. S. (1987). Mental models in human-computer interaction: Research issues about what the user of software knows. National Academies.

- Gaver, W.W. (1991). Technology affordances. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI '91). New Orleans, Louisiana (April 27-May 2, 1991). New York: ACM Press, pp. 79-84.

- Grossman, T., Fitzmaurice, G., & Attar, R. (2009). A survey of software learnability: metrics, methodologies and guidelines. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Rex Hartson (2003). Cognitive, physical, sensory, and functional affordances in interaction design. Behaviour & Information Technology.

- Jacko, J. A. (2012). Human computer interaction handbook: Fundamentals, evolving technologies, emerging applications. CRC Press.

- Lewin (1943). Theory and practice in the real world. The Oxford Handbook of Organization Theory.

- Linda Newman Lior (2013). Writing for Interaction: Crafting the Information Experience for Web and Software Apps. Morgan Kaufmann.

- Don Norman (2013). The design of everyday things: Revised and expanded edition. Basic Books.

- Rogers, Y. (2012). HCI theory: classical, modern, contemporary. Synthesis Lectures on Human-Centered Informatics, 5(2), 1-129.

What Interfaces Mediate

In the last two chapters, we considered two fundamental perspectives on user interface software and technology: a historical one, which framed user interfaces as a form of human augmentation, and a theoretical one, which framed interfaces as a bridge between the sensory world and the computational world of inputs, functions, outputs, and state. A third and equally important perspective on interfaces is what role interfaces play in individual lives and society broadly. While user interfaces are inherently computational artifacts, they are also inherently sociocultural and sociopolitical entities.

Broadly, I view the social role of interfaces as a mediating one. Mediation is the idea that rather than two entities interacting directly, something controls, filters, transacts, or interprets interaction between two entities. For example, one can think of human-to-human interactions as mediated, in that our thoughts, ideas, and motivations are mediated by language. In that same sense, user interfaces can mediate human interaction with many things. Mediation is important, because, as Marshall McLuhan argued, media (which by definition mediates) can lead to subtle, sometimes invisible structural changes in society’s values, norms, and institutions (McLuhan 1994).

In this chapter, we will discuss how user interfaces mediate access to three things: automation, information, and other humans. (Computer interfaces might mediate other things too; these are just the three most prominent in society.)

Mediating automation

As the theory in the last chapter claimed, interfaces primarily mediate computation. Whether it’s calculating trajectories in World War II or detecting faces in a collection of photos, the vast range of algorithms from computer science that process, compute, filter, sort, search, and classify information provide real value to the world, and interfaces are how people access that value.

Interfaces that mediate automation are much like the application programming interfaces (APIs) that software developers use. An API is a collection of code used for computing particular things, designed for reuse by others without having to understand the details of the computation. For example, an API might mediate access to facial recognition algorithms, date arithmetic, or trigonometric functions. APIs organize collections of functionality and data structures that encapsulate functionality, hiding complexities and providing a simpler interface for developers to use to build applications. For example, instead of a developer having to learn how to build a machine learning classification algorithm themselves, they can use a machine learning API, which only requires the developer to provide some data and parameters, which the API uses to train the classifier.

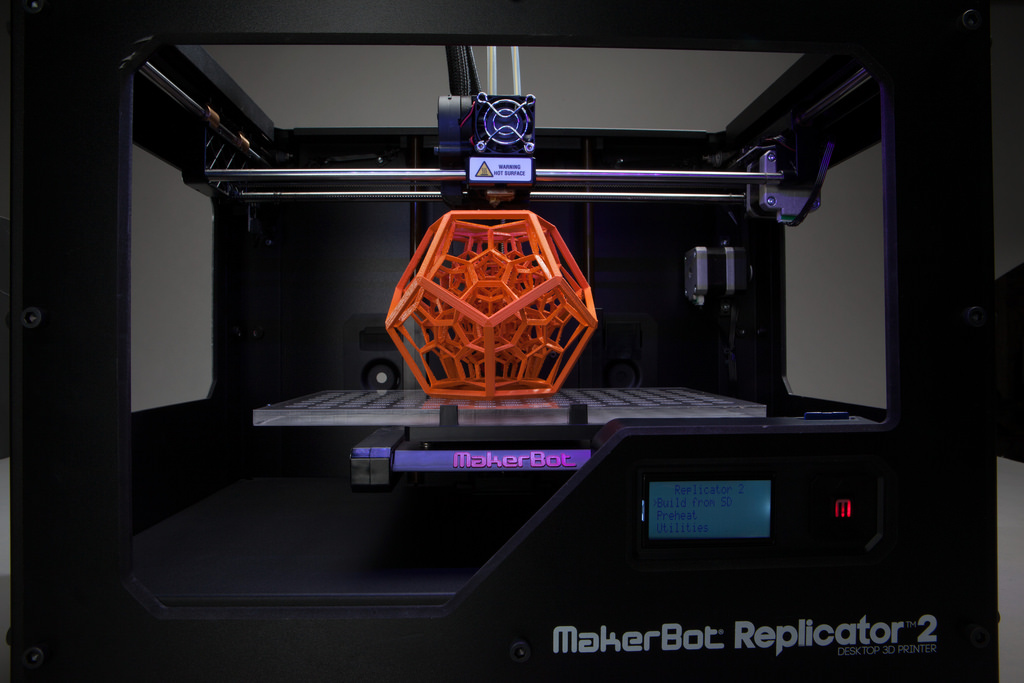

In the same way, user interfaces are often simply direct manipulation ways of providing inputs to APIs. For example, think about what operating a calculator app on a phone actually involves: it’s really a way of delegating arithmetic operations to a computer. Each operation is a function that takes some arguments (addition, for example, is a function that takes two numbers and returns their sum). In this example, the calculator is literally an interface to an API of mathematical functions that compute things for a person. But consider a very different example, such as the camera application on a smartphone. This is also an interface to an API, with a single function that takes as input all of the light into a camera sensor, a dozen or so configuration options for focus, white balance, orientation, etc., and returns a compressed image file that captures that moment in space and time. This even applies to more intelligent interfaces you might not think of as interfaces at all, such as driverless cars. A driverless car is basically one complex function that is called dozens of times a second, takes a car’s location, destination, and all of the visual and spatial information around the car via sensors as input, and computes an acceleration and direction for the car to move. The calculator, the camera, and the driverless car are really all just interfaces to APIs that expose a set of computations, and user interfaces are what we use to access this computation.

From this API perspective, user interfaces mediate access to APIs, and interaction with an interface is really identical, from a computational perspective, to executing a program that uses those APIs to compute. In the calculator, when you press the sequence of buttons “1”, “+”, “1”, “=”, you have just written the program add(1,1) and gotten the value 2 in return. When you open the camera app, point it at your face for a selfie, and tap on the screen to focus, you’re writing the program capture(focusPoint, sensorData) and getting the image file in return. When you enter your destination in a driverless car, you’re really invoking the program while(!at(destination)) { drive(destination, location, environment); }. From this perspective, interacting with user interfaces is really about executing “one-time use” programs that compute something on demand.
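The calculator case can be made concrete with a minimal sketch. Everything named here (`OPERATIONS`, `press`) is hypothetical and chosen only for illustration; it assumes a calculator that accepts exactly one operator between two numbers, which is far simpler than a real calculator app.

```python
from typing import Callable, Dict, List

# Hypothetical "API" of arithmetic functions that the calculator interface exposes.
OPERATIONS: Dict[str, Callable[[float, float], float]] = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def press(buttons: List[str]) -> float:
    """Interpret a button sequence such as ["1", "+", "1", "="] as one function call."""
    left, operator, right, equals = buttons
    if equals != "=":
        raise ValueError("sequence must end with '='")
    # The button presses are the "program"; this line executes it against the API.
    return OPERATIONS[operator](float(left), float(right))


print(press(["1", "+", "1", "="]))  # 2.0
```

The interface contributes nothing computationally beyond translating presses into a call; the arithmetic lives entirely in the functions behind it.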

How is this mediation? Well, the most direct way to access computation would be to write computer programs and then execute them. That’s what people did in the 1960s before there were graphical user interfaces, but even those were mediated by punchcards, levers, and other mechanical controls. Our more modern interfaces are better because we don’t have to learn as much to communicate to a computer what computation we want. But we still have to learn interfaces that mediate computation: we’re just learning APIs and how to program with them in graphical, visual, and direct manipulation forms, rather than as code.

Of course, as this distance between what we want a computer to do and how it does it grows, so does fear about how much trust to put in computers to compute fairly. A rapidly growing body of research is considering what it means for algorithms to be fair, how people perceive fairness, and how explainable algorithms are (Abdul et al. 2018; Rader et al. 2018; Woodruff et al. 2018).

Mediating information

Interfaces aren’t just about mediating computation, however. They’re also about accessing information. Before software, other humans mediated our access to information. We asked friends for advice, we sought experts for wisdom, and when we couldn’t find people to inform us, we consulted librarians to help us find recorded knowledge, written by experts we didn’t have access to. Searching and browsing for information was always an essential human activity.

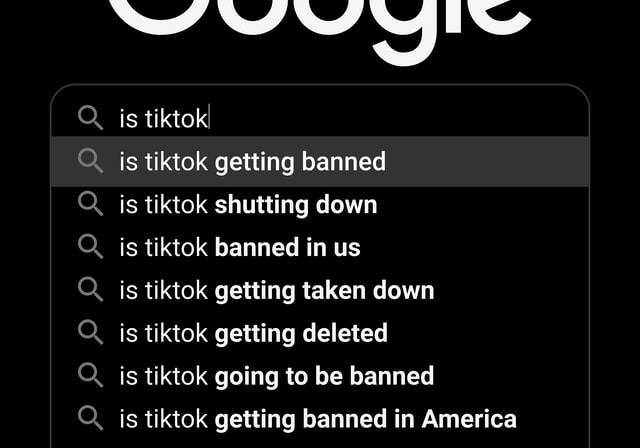

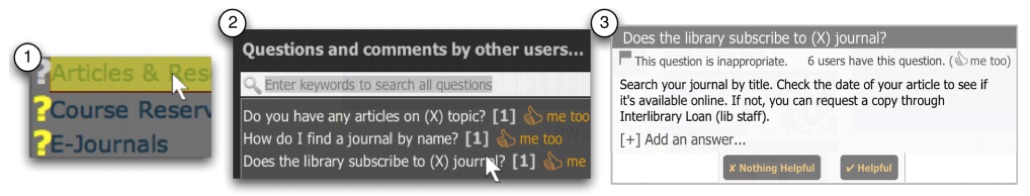

Computing changed this. Now we use software to search the web, to browse documents, to create information, and to organize it. Because computers allow us to store information and access it much more quickly than we could access information through people or documents, we started to build systems for storing, curating, and provisioning information on computers and accessing it through user interfaces. We took all of the old ideas from information science such as documents, metadata, searching, browsing, indexing, and other knowledge organization ideas, and imported those ideas into computing. Most notably, the Stanford Digital Library Project leveraged all of these old ideas from information science and brought them to computers. This inadvertently led to Google, which still views its core mission as organizing the world’s information, which is what libraries were originally envisioned to do. But there is a difference: whereas librarians, card catalogs, and libraries in general view their core values as access and truth, Google and other search engines ultimately prioritize convenience and profit, often at the expense of access and truth.

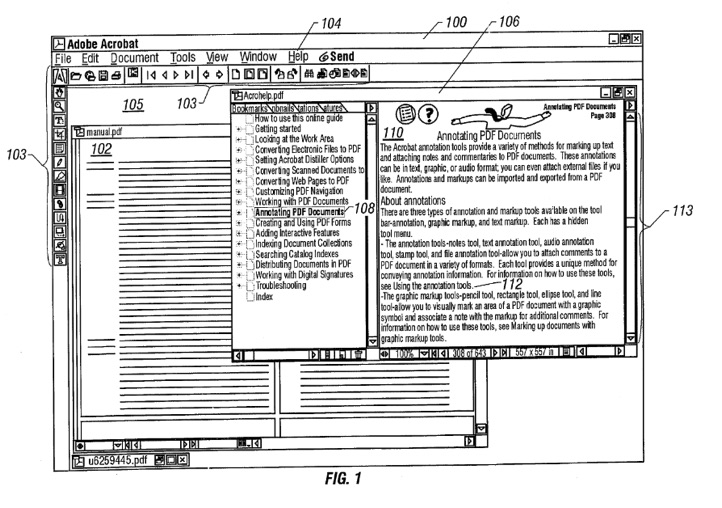

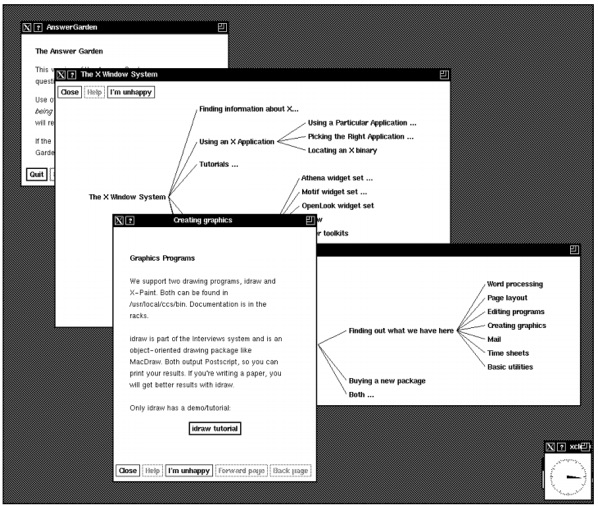

The study of how to design user interfaces to optimally mediate access to information is usually called information architecture: the study and practice of organizing information and interfaces to support searching, browsing, and sensemaking (Rosenfeld & Morville 2002).

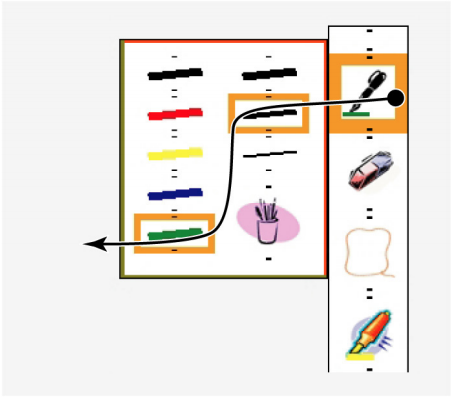

In practice, user interfaces for information technologies can be quite simple. They might consist of a programming language, like Google’s query language, in which you specify an information need (e.g., cute kittens) that is satisfied with retrieval algorithms (e.g., an index of all websites, images, and videos that might match what that phrase describes, using whatever metadata those documents have). Google’s interface also includes systems for generating user interfaces that present the retrieved results (e.g., searching for flights might result in an interface for purchasing a matching flight). User interfaces for information technologies might instead be browsing-oriented, exposing metadata about information and facilitating navigation through an interface. For example, searching for a product on Amazon often involves setting filters and priorities on product metadata to narrow down a large set of products to those that match your criteria. Whether an interface is optimized for searching, browsing, or both, all forms of information mediation require metadata.
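To illustrate why both styles depend on metadata, here is a small sketch that assumes a toy catalog. The `Product` fields, the `CATALOG` contents, and the `search` and `browse` functions are all invented for this example and do not describe how Google or Amazon actually work.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Product:
    # Each field is a piece of metadata an interface could expose.
    title: str
    category: str
    price: float


CATALOG = [
    Product("Kitten calendar", "stationery", 12.0),
    Product("Cat tree", "pets", 80.0),
    Product("Kitten food", "pets", 25.0),
]


def search(query: str, items: List[Product]) -> List[Product]:
    """Searching: match every word of a query against title metadata."""
    words = query.lower().split()
    return [p for p in items if all(w in p.title.lower() for w in words)]


def browse(items: List[Product], category: str, max_price: float) -> List[Product]:
    """Browsing: narrow a set by filtering on category and price metadata."""
    return [p for p in items if p.category == category and p.price <= max_price]


print([p.title for p in search("kitten", CATALOG)])
print([p.title for p in browse(CATALOG, "pets", 30.0)])
```

Neither function could return anything useful if the items lacked titles, categories, or prices; the interface can only be as expressive as the metadata beneath it.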

Mediating communication

While computation and information are useful, most of our socially meaningful interactions still occur with other people. That said, more of these interactions than ever are mediated by user interfaces. Every form of social media (messaging apps, email, video chat, discussion boards, chat rooms, blogs, wikis, social networks, virtual worlds, and so on) is a form of computer-mediated communication: any form of communication that is conducted through some computational device, such as a phone, tablet, laptop, desktop, or smart speaker (Fussell & Setlock 2014).

What makes user interfaces that mediate communication different from those that mediate automation and information? Whereas mediating automation requires clarity about an API’s functionality and input requirements, and mediating information requires metadata to support searching and browsing, mediating communication requires social context. For example, in their seminal paper, Gary and Judy Olson discussed the vast array of social context present in collocated synchronous interactions that have to be reified in computer-mediated communication (Olson & Olson 2000; see also Cho & Kwon 2015 on the disclosure of social cues in online comments).

Many researchers in the field of computer-supported cooperative work have sought to find designs that better support social processes, including ideas of “social translucence,” which achieves similar context as collocation, but through new forms of visibility, awareness, and accountability (Erickson & Kellogg 2000).

These three types of mediation each require different architectures, different affordances, and different feedback to achieve their goals:

- Interfaces for computation have to teach a user about what computation is possible and how to interpret the results.

- Interfaces for information have to teach a user about the kinds of metadata that convey what information exists and what other information needs could be satisfied.

- Interfaces for communication have to teach a user about new social cues that convey the emotions and intents of the people in a social context, mirroring or replacing those in the physical world.

Of course, one interface can mediate many of these things. Twitter, for example, mediates access to information resources, but it also mediates communication between people. Similarly, Google, with its built-in calculator support, mediates information, but also computation. Therefore, complex interfaces might be doing many things at once, requiring even more careful teaching. Because each of these interfaces must teach different things, one must know the foundations of what is being mediated to design effective interfaces. Throughout the rest of this book, we’ll review not only the foundations of user interface implementation, but also how the subject of mediation constrains and influences what we implement.

References

- Abdul, A., Vermeulen, J., Wang, D., Lim, B.Y., Kankanhalli, M (2018). Trends and trajectories for explainable, accountable, intelligible systems: an HCI research agenda. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Cho, D., & Kwon, K. H. (2015). The impacts of identity verification and disclosure of social cues on flaming in online user comments. Computers in Human Behavior.

- Erickson, T., & Kellogg, W. A. (2000). Social translucence: an approach to designing systems that support social processes. ACM transactions on computer-human interaction (TOCHI), 7(1), 59-83.

- Fussell, S. R., & Setlock, L. D. (2014). Computer-mediated communication. Handbook of Language and Social Psychology. Oxford University Press, Oxford, UK, 471-490.

- Marshall McLuhan (1994). Understanding media: The extensions of man. MIT Press.

- Olson, G. M., & Olson, J. S. (2000). Distance matters. Human-Computer Interaction.

- Rader, E., Cotter, K., Cho, J. (2018). Explanations as mechanisms for supporting algorithmic transparency. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Rosenfeld, L., & Morville, P. (2002). Information architecture for the world wide web. O'Reilly Media, Inc.

- Woodruff, A., Fox, S.E., Rousso-Schindler, S., Warshaw, J (2018). A qualitative exploration of perceptions of algorithmic fairness. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

Accessibility

Thus far, most of our discussion has focused on what user interfaces are. I described them theoretically as a mapping from the sensory, cognitive, and social human world to these collections of functions exposed by a computer program. While that’s true, most of the mappings we’ve discussed have been input via our fingers and output to our visual perceptual systems. Most user interfaces have largely ignored other sources of human action and perception. We can speak. We can control over 600 different muscles. We can convey hundreds of types of non-verbal information through our gaze, posture, and orientation. We can see, hear, taste, smell, sense pressure, sense temperature, sense balance, sense our position in space, and feel pain, among dozens of other senses. This vast range of human abilities is largely unused in interface design.

This bias is understandable. Our fingers are incredibly agile, precise, and high bandwidth sources of action. Our visual perception is similarly rich, and one of the dominant ways that we engage with the physical world. Optimizing interfaces for these modalities is smart because it optimizes our ability to use interfaces.

However, this bias is also unreasonable because not everyone can see, use their fingers precisely, or read text. Designing interfaces that can only be used if one has these abilities means that vast numbers of people simply can’t use interfaces (Ladner 2012).

This might describe you or people you know. And in all likelihood, you will be disabled in one or more of these ways someday, as you age or temporarily, due to injuries, surgeries, or other situational impairments. And that means you’ll struggle or be unable to use the graphical user interfaces you’ve worked so hard to learn. And if you know no one who struggles with interfaces, it may be because they are stigmatized by their difficulties, not sharing their struggles and avoiding access technologies because they signal disability (Shinohara & Wobbrock 2011).

Of course, abilities vary, and this variation has different impacts on people’s ability to use interfaces. One of the most common forms of disability is blindness and low vision. But even within these categories, there is diversity. Some people are completely blind, while others have some sight but need magnification. Some people have color blindness, which can be minimally impactful, unless an interface relies heavily on colors that a person cannot distinguish. I am near-sighted, but still need glasses to interact with user interfaces close to my face. When I do not have my glasses, I have to rely on magnification to see visual aspects of a user interface. And while the largest group of people with disabilities is those with vision issues, the long tail of other disabilities around speech, hearing, and motor ability, when combined, is just as large.

Accessibility

Of course, most interfaces assume that none of this variation exists. And ironically, it’s partly because the user interface toolkits we described in the architecture chapter embed this assumption deep in their architecture. Toolkits make it so easy to design graphical user interfaces that these are the only kind of interfaces designers make. This results in most interfaces being difficult or sometimes impossible for vast populations to use, which really makes no business sense (Horton & Sloan 2015). See also Story (1998) on the principles of universal design.

Whereas universal interfaces work for everyone as is, access technologies are alternative user interfaces that attempt to make an existing user interface more universal. In other words, they are interfaces that are used in tandem with other interfaces to improve their poor accessibility. Access technologies include things like:

- Screen readers convert text on a graphical user interface to synthesized speech so that people who are blind or unable to read can interact with the interface.

- Captions annotate the speech and action in video as text, allowing people who are deaf or hard of hearing to consume the audio content of video.

- Braille, as shown in the image at the top of this chapter, is a tactile encoding of words for people who are visually impaired.

Consider, for example, this demonstration of screen readers and a braille display:

Fundamentally, universal user interface designs are ones that can be operated via any input and output modality. If user interfaces are really just ways of accessing functions defined in a computer program, there’s really nothing about a user interface that requires it to be visual or operated with fingers. Take, for example, an ATM. Why is it structured as a large screen with several buttons? A speech interface could expose identical banking functionality through speech and hearing. Or, imagine an interface in which a camera just looks at someone’s wallet and their face and figures out what they need: more cash, to deposit a check, to check their balance. The input and output modalities an interface uses to expose functionality are really arbitrary: using fingers and eyes is just easier for most people in most situations.
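The ATM example can be sketched in code under some loud assumptions: the `Account` class, the two interface functions, the button labels, and the phrase matching below are all hypothetical, and real speech interfaces require recognition and synthesis that this sketch leaves out entirely. The point is only that both modalities call the same underlying functions.

```python
class Account:
    """Hypothetical banking functionality, independent of any modality."""

    def __init__(self, balance: int) -> None:
        self.balance = balance

    def check_balance(self) -> int:
        return self.balance

    def withdraw(self, amount: int) -> int:
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount
        return amount


def touch_interface(account: Account, button: str) -> str:
    """Visual modality: a button label selects a function; output is on-screen text."""
    if button == "Balance":
        return f"Balance: ${account.check_balance()}"
    if button == "Withdraw $20":
        return f"Dispensed: ${account.withdraw(20)}"
    raise ValueError(f"unknown button: {button}")


def speech_interface(account: Account, utterance: str) -> str:
    """Speech modality: a transcribed phrase selects the same function;
    output is a sentence that a speech synthesizer could read aloud."""
    words = utterance.lower().split()
    if "balance" in words:
        return f"Your balance is {account.check_balance()} dollars."
    if "withdraw" in words:
        amounts = [int(w) for w in words if w.isdigit()]
        if not amounts:
            raise ValueError("no amount given")
        return f"Here are {account.withdraw(amounts[0])} dollars."
    raise ValueError("request not understood")
```

For instance, with `account = Account(100)`, calling `touch_interface(account, "Withdraw $20")` and then `speech_interface(account, "what is my balance")` operates on the same state: the second call reports 80 dollars regardless of which modality made the withdrawal.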

Advances in Accessibility

The challenge for user interface designers then is to not only design the functionality a user interface exposes, but also design a myriad of ways of accessing that functionality through any modality. Unfortunately, conceiving of ways to use all of our senses and abilities is not easy. It took us more than 20 years to invent graphical user interfaces optimized for sight and hands. It’s taken another 20 years to optimize touch-based interactions. It’s not surprising that it’s taking us just as long or longer to invent seamless interfaces for speech, gesture, and other uses of our muscles, and efficient ways of perceiving user interface output through hearing, feeling, and other senses.

These inventions, however, are numerous and span the full spectrum of modalities. For instance, access technologies like screen readers have been around since shortly after Section 508 of the Rehabilitation Act of 1973, and have converted digital text into synthesized speech. This has made it possible for people who are blind or have low vision to interact with graphical user interfaces. But now, interfaces go well beyond desktop GUIs. For example, just before the ubiquity of touch screens, the SlideRule system showed how to make touch screens accessible to blind users by reading the labels of UI elements aloud through multi-touch interaction (Kane et al. 2008).

For decades, screen readers have only worked on computers, but recent innovations like VizLens (above) have combined machine vision and crowdsourcing to support arbitrary interfaces in the world, such as microwaves, refrigerators, ATMs, and other appliances (Guo et al. 2016).

With the rapid rise in popularity of the web, web accessibility has also been a popular topic of research. Problems abound, but one of the most notable is the inaccessibility of images. Images on the web often come without alt attributes that describe the image for people unable to see. User interface controls often lack labels for screen readers to read. Some work shows that some of the information needs in these descriptions are universal (people describe needing descriptions of people and objects in images), but other needs are highly specific to a context, such as subjective descriptions of people on dating websites (Stangl et al. 2020). See also work on emoji accessibility (Tigwell et al. 2020), label association techniques for web accessibility (Islam et al. 2010), supporting blind people in recognizing personal objects (Ahmetovic et al. 2020), and opportunistic accessibility improvement on the web (Bigham 2014).
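As a rough illustration of how missing image descriptions can be detected, the sketch below uses Python's standard `html.parser` module to list images that have no `alt` attribute at all. The class and function names are hypothetical, and the check is deliberately narrow: an empty `alt=""` is conventionally used to mark decorative images, so it is not flagged here, and the sketch says nothing about whether an existing description is actually useful.

```python
from html.parser import HTMLParser
from typing import List, Optional


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> element that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[Optional[str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # Only a wholly absent alt attribute is reported.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src"))


def images_missing_alt(html: str) -> List[Optional[str]]:
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = '<p><img src="a.png" alt="A cat"><img src="b.png"><img src="c.png" alt=""/></p>'
print(images_missing_alt(page))  # ['b.png']
```

A check like this can only find absent descriptions; writing descriptions that meet the context-specific needs described above still requires human judgment or the research techniques cited.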

For people who are deaf or hard of hearing, videos, dialog, or other audio output interfaces are a major accessibility barrier to using computers or engaging in computer-mediated communication. Researchers have invented systems like Legion, which harness crowd workers to provide real-time captioning of arbitrary audio streams with only a few seconds of latency (Lasecki et al. 2012). See also work on real-time video communication on mobile phones for deaf users (Cherniavsky et al. 2009).

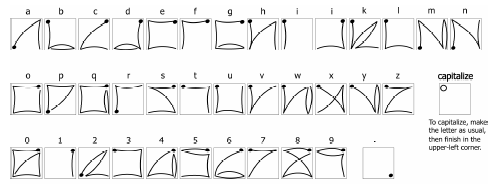

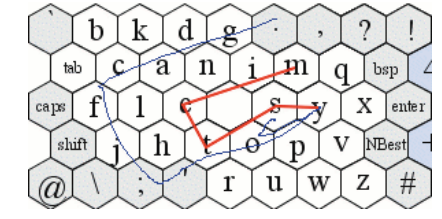

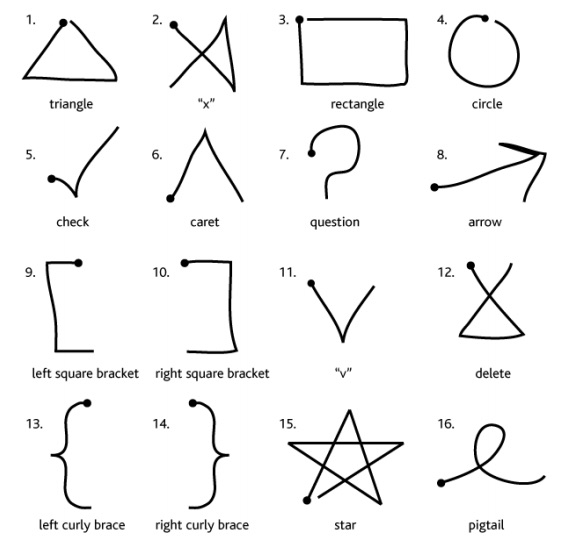

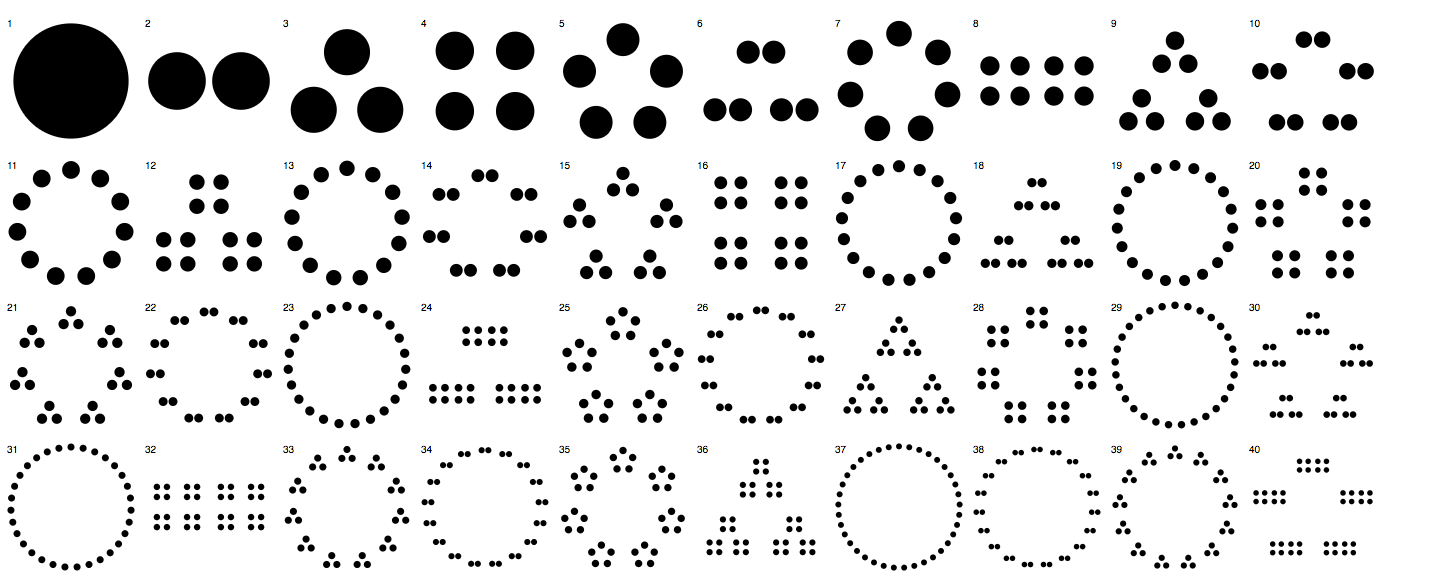

For people who have motor impairments, such as motor tremors, fine control over mouse, keyboards, or multi-touch interfaces can be quite challenging, especially for tasks like text-entry, which require very precise movements. Researchers have explored several ways to make interfaces more accessible for people without fine motor control. EdgeWrite, for example, is a gesture set (shown above) that only requires tracing the edges and diagonals of a square (Wobbrock et al. 2003). See also work on automatically generating user interfaces adapted to users' motor and vision capabilities (Gajos et al. 2007), improving touch accuracy with template matching (Mott et al. 2016), ability-based design (Wobbrock et al. 2011), and pixel-based reverse engineering of interface structure (Dixon & Fogarty 2010).

Sight, hearing, and motor abilities have been the major focus of innovation, but an increasing body of work also considers neurodiversity. For example, autistic people, people with Down syndrome, and people with dyslexia may process information in different and unique ways, requiring interface designs that support a diversity of interaction paradigms. As we have discussed, interfaces can be a major source of such information complexity. This has led to interface innovations that facilitate a range of aids, including images and videos for conveying information, iconographic, recognition-based speech generation for communication, carefully designed digital surveys to gather information in health contexts, and memory aids to facilitate recall. Some work has found that while these interfaces can facilitate communication, they are often not independent solutions, and require exceptional customization to be useful (Gibson et al. 2020).

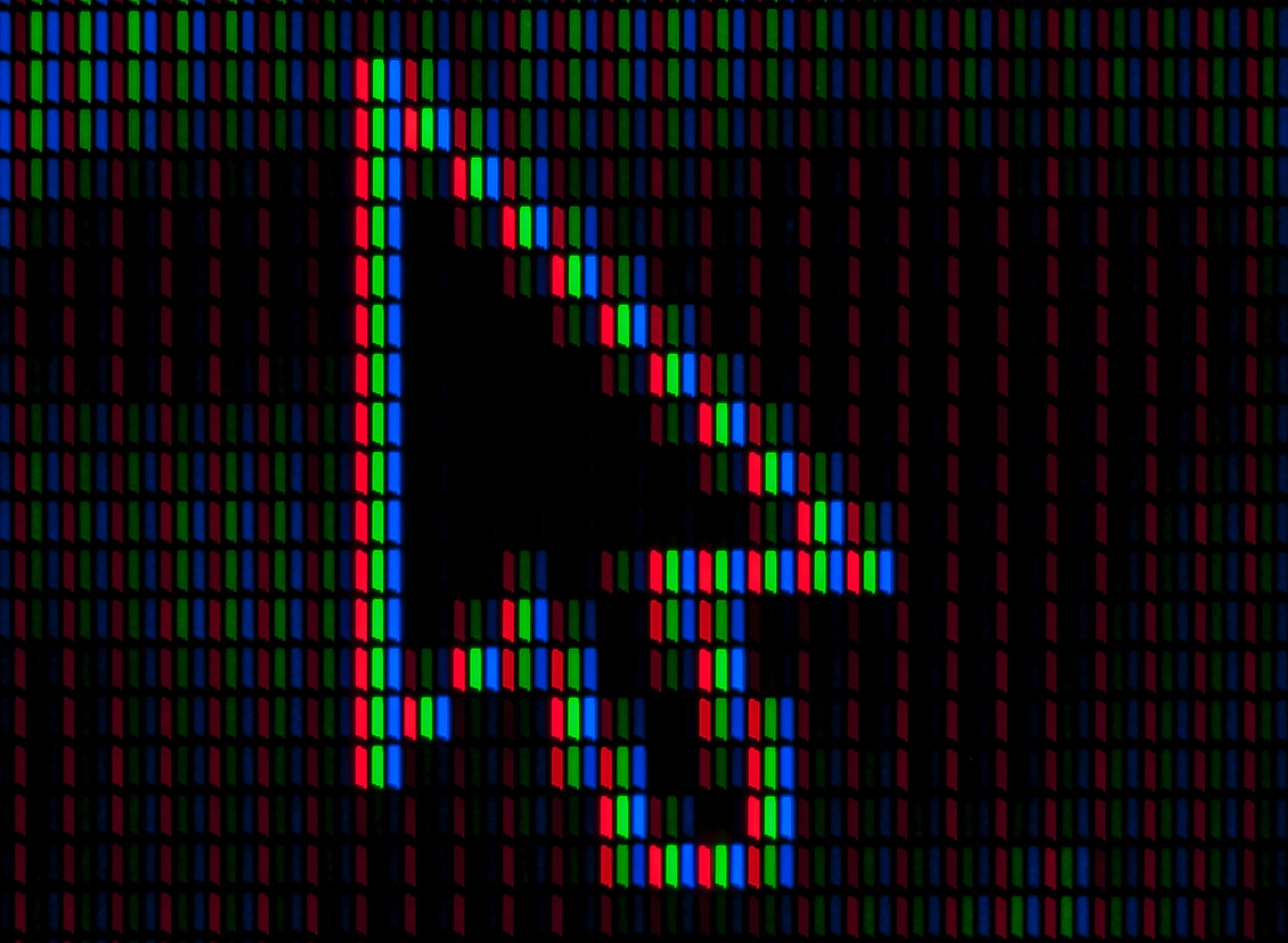

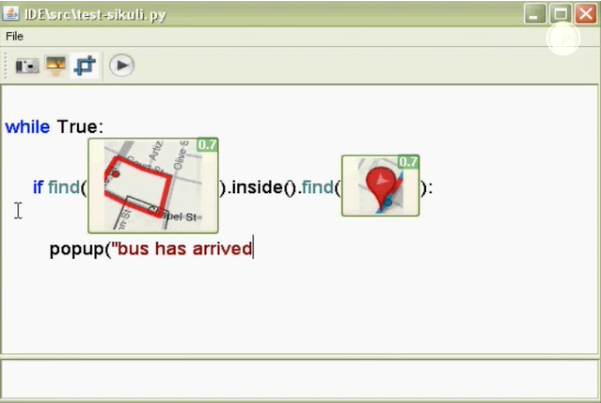

Whereas all of the innovations above aimed to make particular types of information accessible to people with particular abilities, some techniques target accessibility problems at the level of software architecture. For example, accessibility frameworks and features like Apple’s VoiceOver in iOS are system-wide: when a developer uses Apple’s standard user interface toolkits to build a UI, the UI is automatically compatible with VoiceOver, and therefore automatically screen-readable. Because it’s often difficult to convince developers of operating systems and user interfaces to make their software accessible, researchers have also explored ways of modifying interfaces automatically. For example, Prefab (above) is an approach that recognizes the user interface controls based on how they are rendered on-screen, which allows it to build a model of the UI’s layout (Dixon & Fogarty 2010). See also Genie, which retargets input on the web through command reverse engineering (Swearngin et al. 2017).

While all of the ideas above can make interfaces more universal, they can also have other unintended benefits for people without disabilities. For example, it turns out screen readers are great for people with ADHD, who may have an easier time attending to speech than text. Making web content more readable for people with low vision also makes it easier for people with situational impairments, such as dilated pupils after an eye doctor appointment. Captions in videos aren’t just good for people who are deaf and hard of hearing; they’re also good for watching video in quiet spaces. Investing in these accessibility innovations then isn’t just about impacting that 15% of people with disabilities, but also the rest of humanity.

References

- Dragan Ahmetovic, Daisuke Sato, Uran Oh, Tatsuya Ishihara, Kris Kitani, and Chieko Asakawa (2020). ReCog: Supporting Blind People in Recognizing Personal Objects. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Jeffrey P. Bigham (2014). Making the web easier to see with opportunistic accessibility improvement. ACM Symposium on User Interface Software and Technology (UIST).

- Neva Cherniavsky, Jaehong Chon, Jacob O. Wobbrock, Richard E. Ladner, Eve A. Riskin (2009). Activity analysis enabling real-time video communication on mobile phones for deaf users. ACM Symposium on User Interface Software and Technology (UIST).

- Morgan Dixon and James Fogarty (2010). Prefab: implementing advanced behaviors using pixel-based reverse engineering of interface structure. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Krzysztof Z. Gajos, Jacob O. Wobbrock, Daniel S. Weld (2007). Automatically generating user interfaces adapted to users' motor and vision capabilities. ACM Symposium on User Interface Software and Technology (UIST).

- Ryan Colin Gibson, Mark D. Dunlop, Matt-Mouley Bouamrane, and Revathy Nayar (2020). Designing Clinical AAC Tablet Applications with Adults who have Mild Intellectual Disabilities. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Anhong Guo, Xiang 'Anthony' Chen, Haoran Qi, Samuel White, Suman Ghosh, Chieko Asakawa, Jeffrey P. Bigham (2016). VizLens: A robust and interactive screen reader for interfaces in the real world. ACM Symposium on User Interface Software and Technology (UIST).

- Sarah Horton and David Sloan (2015). Accessibility for business and pleasure. ACM interactions.

- Muhammad Asiful Islam, Yevgen Borodin, I. V. Ramakrishnan (2010). Mixture model based label association techniques for web accessibility. ACM Symposium on User Interface Software and Technology (UIST).

- Shaun K. Kane, Jeffrey P. Bigham, Jacob O. Wobbrock (2008). Slide rule: making mobile touch screens accessible to blind people using multi-touch interaction techniques. ACM SIGACCESS Conference on Computers and Accessibility.

- Richard E. Ladner (2012). Communication technologies for people with sensory disabilities. Proceedings of the IEEE.

- Walter Lasecki, Christopher Miller, Adam Sadilek, Andrew Abumoussa, Donato Borrello, Raja Kushalnagar, Jeffrey Bigham (2012). Real-time captioning by groups of non-experts. ACM Symposium on User Interface Software and Technology (UIST).

- Martez E. Mott, Radu-Daniel Vatavu, Shaun K. Kane, Jacob O. Wobbrock (2016). Smart touch: Improving touch accuracy for people with motor impairments with template matching. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Kristen Shinohara and Jacob O. Wobbrock (2011). In the shadow of misperception: assistive technology use and social interactions. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Abigale Stangl, Meredith Ringel Morris, and Danna Gurari (2020). Person, Shoes, Tree. Is the Person Naked? What People with Vision Impairments Want in Image Descriptions. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Molly Follette Story (1998). Maximizing Usability: The Principles of Universal Design. Assistive Technology, 10:1, 4-12.

- Amanda Swearngin, Amy J. Ko, James Fogarty (2017). Genie: Input Retargeting on the Web through Command Reverse Engineering. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Garreth W. Tigwell, Benjamin M. Gorman, and Rachel Menzies (2020). Emoji Accessibility for Visually Impaired People. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Jacob O. Wobbrock, Brad A. Myers, John A. Kembel (2003). EdgeWrite: a stylus-based text entry method designed for high accuracy and stability of motion. ACM Symposium on User Interface Software and Technology (UIST).

- Jacob O. Wobbrock, Shaun K. Kane, Krzysztof Z. Gajos, Susumu Harada, Jon Froehlich (2011). Ability-Based Design: Concept, Principles and Examples. ACM Transactions on Accessible Computing.

Programming Interfaces

If you don’t know the history of computing, it’s easy to overlook the fact that all of the interfaces that people used to control computers before the graphical user interface were programming interfaces. But that’s no longer true; why then talk about programming in a book about user interface technology? Two reasons: programmers are users too, and perhaps more importantly, user interfaces quite often have programming-like features embedded in them. For example, spreadsheets aren’t just tables of data; they also tend to contain formulas that perform calculations on that data. Or, consider the increasingly common trend of smart home devices: to make even basic use of them, one must often write basic programs to automate their use (e.g., turning on a light when one arrives home, or defining the conditions in which a smart doorbell sends a notification). Programming interfaces are therefore far from niche: they are the foundation of how all interfaces are built and increasingly part of using interfaces to control and configure devices.

But it’s also important to understand programming interfaces in order to understand why interactive interfaces — the topic of our next chapter — are so powerful. This chapter won’t teach you to code if you don’t already know how, but it will give you the concepts and vocabulary to understand the fundamental differences between these two interface paradigms, when one might be used over the other, and what challenges each poses to people using computers.

Properties of programming interfaces

In some ways, programming interfaces are like any other kind of user interface: they take input, present output, have affordances and signifiers, and present a variety of gulfs of evaluation and execution. They do this by using a collection of tools, which make up the “interface” a programmer uses to write computer programs:

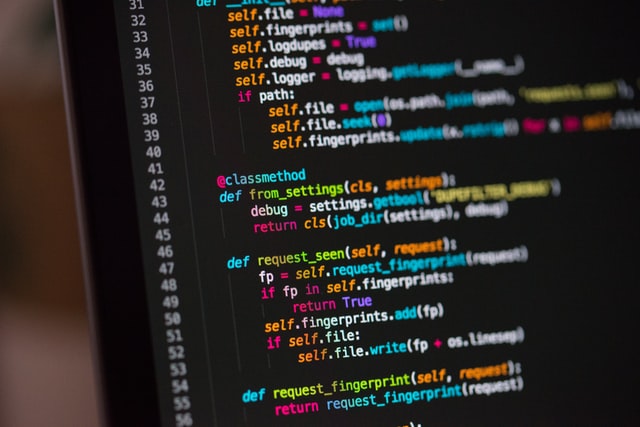

- Programming languages . These are interfaces that take computer programs, translate them into instructions that a computer can execute, and then execute them. Popular examples include languages like Python (depicted at the beginning of this chapter) and JavaScript (which is used to build interactive web sites), but also less well-known languages such as `Scratch`, which youth often use to create interactive 2D animations and games, or R, which statisticians and data scientists use to analyze data.

- Editors . These are tools, much like word processors, that programmers use to read and edit computer programs. Some languages are editor-agnostic (e.g., you can edit Python code in any text editor), whereas others come with dedicated editors (e.g., Scratch programs can only be written with the Scratch editor).

These two tools, along with many other optional tools to help streamline the many challenges that come with programming, are the core interfaces that people use to write computer programs. Programming, therefore, generally involves reading and editing code in an editor, and repeatedly asking a programming language to read the code to see if there are any errors in it, and then executing the program to see if it has the intended behavior. It often doesn’t, which requires people to debug their code, finding the parts of it leading the program to misbehave. This edit-run-debug cycle is the general experience of programming, slowly writing and revising a program until it behaves as intended (much like slowly sculpting a sculpture, writing an essay, or painting a painting).

While it’s obvious this is different from using a website or mobile application, it’s helpful to talk in more precise terms about exactly how it is different. There are three attributes in particular that make an interface a programming interface 3 3 Blackwell, A. F. (2002). First steps in programming: A rationale for attention investment models. In Human Centric Computing Languages and Environments, 2002. Proceedings. IEEE 2002 Symposia on (pp. 2-10). IEEE.

- No direct manipulation . In interactive interfaces like a web page or mobile app, concrete physical actions by a user on some data (moving a mouse, tapping on an object) result in concrete, immediate feedback about the effect of those actions. For example, dragging a file from one folder to another immediately moves the file, both visually and on disk; selecting and dragging some text in a word processor moves the text. In contrast, programming interfaces offer no such direct manipulation: one declares what a program should do to some data, and then only after the program is executed does that action occur (if it was declared correctly). Programming interfaces therefore always involve indirect manipulation: describing an action rather than performing it.

- Use of notation . In interactive interfaces, most interactions involve concrete representations of data and action. For example, a web form has controls for entering information, and a submit button for saving it. But in programming interfaces, references to data and actions always involve using some programming language notation. For example, to navigate to a folder in the Unix or Linux operating systems, one has to type `cd myFolder`, which is a particular notation for executing the `cd` command (standing for “change directory”) and specifying which folder to navigate to (`myFolder`). Programming interfaces therefore always involve specific rules for describing what a user wants the computer to do.

- Use of abstraction . An inherent side effect of using notations is that one must also use abstractions to refer to a computer’s actions. For example, when one uses the Unix command line program `mv`, as in `mv file.txt ../` (which moves `file.txt` into the parent of the current directory), they are using a command, a file name, and a symbolic reference `../` to refer to the parent directory of the current directory. Commands, names, and relative path references are all abstractions in that they all abstractly represent the data and actions to be performed. Visual icons in a graphical user interface are also abstract, but because they support direct manipulation, such as moving files from one folder to another, they can be treated as concrete objects. Not so with programming interfaces, where everything is abstract.
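To make these three properties concrete, here is a minimal Python sketch of the same action as `mv file.txt ../`. The function name `move_to_parent` and the folder layout are hypothetical, and the sketch works inside a temporary directory so that nothing else on disk is touched. Notice that nothing moves while the code is being written; the move only happens when the program is executed.

```python
import shutil
import tempfile
from pathlib import Path

def move_to_parent(file_path: Path) -> Path:
    """Move a file into the parent of the folder that contains it."""
    # `file_path.parent.parent` plays the role of `../`: an abstract,
    # relative reference rather than a folder one can point at.
    destination = file_path.parent.parent / file_path.name
    shutil.move(str(file_path), str(destination))
    return destination

# Hypothetical setup: a temporary folder containing myFolder/file.txt.
root = Path(tempfile.mkdtemp())
folder = root / "myFolder"
folder.mkdir()
original = folder / "file.txt"
original.write_text("hello")

# Only when this line runs does the file actually move.
moved = move_to_parent(original)
print(moved.exists(), original.exists())
```

Every part of this sketch is notation (function calls, path expressions) standing in for what would be a single drag in a graphical file browser.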

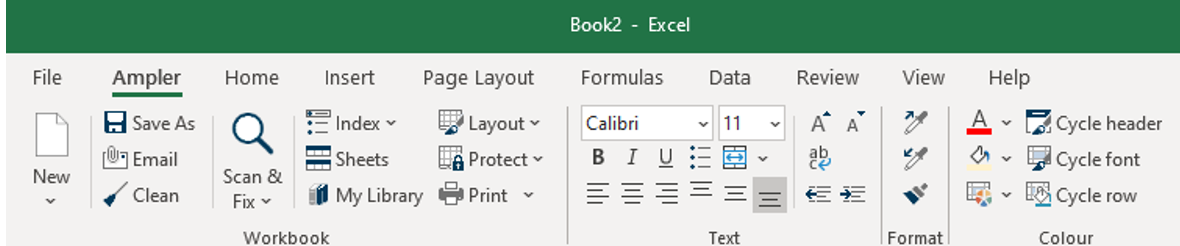

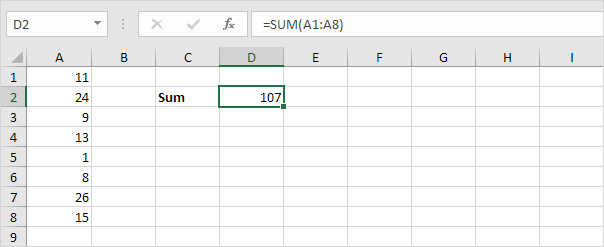

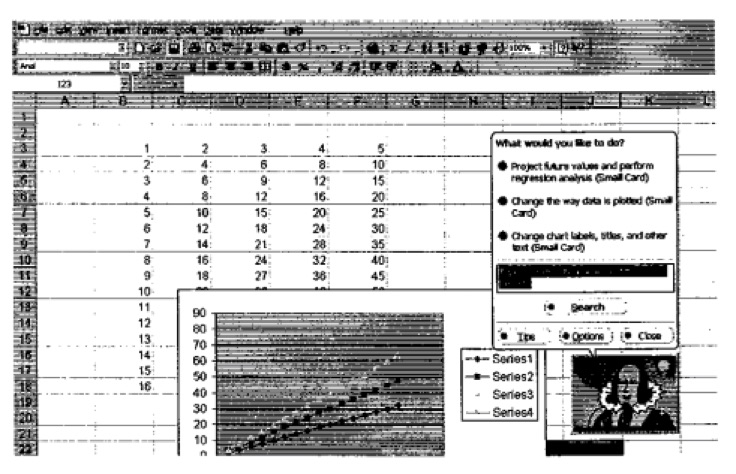

Clearly, textual programming languages such as the popular Python , Java or JavaScript fit all of the descriptions above. But what about programming interfaces like Excel spreadsheets? Thinking through each of the properties above, we see spreadsheets have many of the same properties. While one can directly manipulate data in cells, there is no way to directly manipulate formulas: those must be written in a notation, the Excel programming language. And the notation invokes abstract ideas like summing rows and columns. Spreadsheets blur the line between programming and interactive interfaces, however, by immediately executing formulas as soon as they are edited, recomputing everything in the spreadsheet. This gives a similar sense of immediacy as direct manipulation, even though it is not direct.
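The immediacy of spreadsheets can be illustrated with a toy model. The sketch below is not how Excel works internally; the `Sheet` class and its methods are hypothetical, and for simplicity it re-evaluates formulas whenever a value is read rather than tracking dependencies between cells as real spreadsheets do. What it shows is the user's experience: editing a cell is the only action, and there is no separate step for running the program.

```python
class Sheet:
    """A toy spreadsheet: cells hold either numbers or formulas."""

    def __init__(self):
        # Maps a cell name such as "A1" to a number or a formula function.
        self.cells = {}

    def set(self, name, content):
        # Editing a cell is all a user does; there is no "run" command.
        self.cells[name] = content

    def value(self, name):
        # Formulas are re-evaluated on every read, so results always
        # reflect the latest edits.
        content = self.cells[name]
        return content(self) if callable(content) else content

sheet = Sheet()
sheet.set("A1", 2.0)
sheet.set("A2", 3.0)
# A formula written in notation, similar in spirit to =SUM(A1:A2).
sheet.set("A3", lambda s: s.value("A1") + s.value("A2"))

before = sheet.value("A3")
sheet.set("A1", 10.0)  # edit a data cell
after = sheet.value("A3")
print(before, after)  # prints 5.0 13.0
```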

Another interesting case is that of chat bots, including those with pre-defined responses like in help tools and Discord, as well as more sophisticated ones like ChatGPT and others that use large language models (LLMs) trained on large collections of public internet documents. Is writing prompts a form of programming? They seem to satisfy all of the properties above. They do not support direct manipulation, because they require descriptions of commands. They do use notation, just natural language instead of a formal notation like a programming language. And they definitely use abstraction, in that they rely on sequences of symbols that represent abstract ideas (otherwise known as words ) to refer to what one wants a computer to do. If we accept the definition above, then yes, chat bots and voice interfaces are programming interfaces, and may entail similar challenges as more conventional programming interfaces do. And we shouldn’t be surprised: all that large language models do (along with more rudimentary approaches to responding to language) is take a series of symbols as input and generate a series of symbols in response, just like any other function in a computer program.
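The claim that a chat bot is just a function from symbols to symbols can be sketched with the rudimentary, pre-defined-response kind of bot. The keywords and replies below are invented for illustration and do not come from any real product.

```python
def reply(message: str) -> str:
    """Map one sequence of symbols (a message) to another (a reply)."""
    # Hypothetical canned responses, in the spirit of a help-tool bot.
    responses = {
        "hours": "We are open 9am to 5pm on weekdays.",
        "refund": "Refunds are processed within 5 business days.",
    }
    text = message.lower()
    for keyword, response in responses.items():
        if keyword in text:
            return response
    return "Sorry, I did not understand that."

print(reply("What are your hours?"))
print(reply("Can I get my money back?"))
```

The second message asks about a refund without using the word “refund”, so the bot falls back to its default reply. Even in this tiny example, the person has to discover which words the function responds to, much as a programmer has to learn a notation.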

Learning programming interfaces

Because programming interfaces are interfaces, they also have gulfs of execution (everything a person must learn in order to achieve their goal with an interface, including learning what the interface is and is not capable of and how to operate it correctly) and evaluation (everything a person must learn in order to understand the effect of their action on an interface, including understanding error messages or a lack of response from an interface). Their gulfs are just much larger because of the lack of direct manipulation, the use of notation, and the centrality of abstraction. All of these create a greater distance between someone’s goal, which often involves delegating or automating some task with code, and the actions required to achieve it.

At the level of writing code, the gulfs of execution are immense. Imagine, for example, you have an idea for a mobile app, and are staring at a blank code editor. To have any clue what to type, people must know 1) a programming language, including its notation, and the various rules for using the notation correctly; 2) basic concepts in computer science about data structures and algorithms; and 3) how to operate the code editor, which may have many features designed assuming all of this prior knowledge. It’s rare, therefore, that a person might just poke around an editor or programming language to learn how it works. Instead, learning usually requires good documentation, perhaps some tutorials, and quite possibly a teacher and an education system. The same can be true of complex interactive interfaces (e.g., learning Photoshop ), but at least in the case of interactive interfaces, the functional affordances are explicitly presented in menus. With programming interfaces, affordances are invisible.

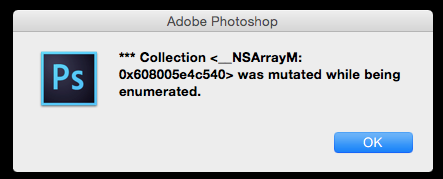

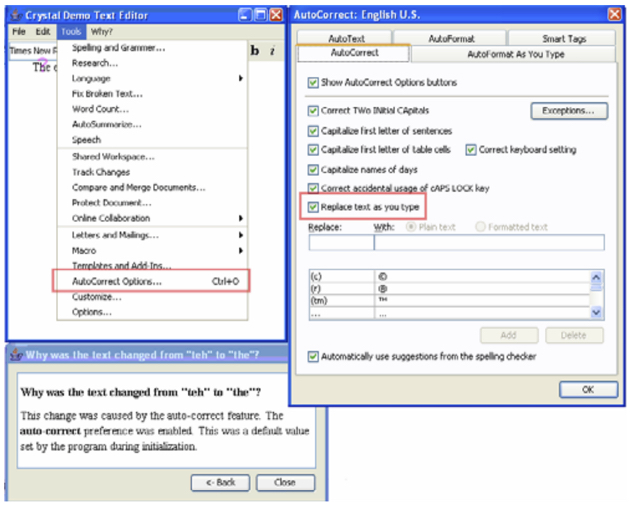

Once one has some code and executes it, programming interfaces pose similarly large gulfs of evaluation. Most programs start off defective in some way, not quite doing what a person intended: when the program misbehaves, or gives an error message, what is a programmer to do? To have any clue, people lean on their knowledge of the programming language to interpret error messages, carefully analyze their code, and potentially use other tools like debuggers to understand where their instructions might have gone wrong. These debugging skills are similar to the troubleshooting skills required by interactive interfaces (e.g., figuring out why Microsoft Word keeps autocorrecting something), but the solutions are often more than just unchecking a checkbox: they may involve revising or entirely rewriting a part of a program.
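Both kinds of evaluation problem, misbehavior and error messages, can be seen in a program of only a few lines. The `average` function below is a made-up example with a deliberate defect; it is a sketch of what a gulf of evaluation looks like, not a recommended way to compute a mean.

```python
def average(numbers):
    """Intended to return the arithmetic mean of a list of numbers."""
    # Defect: `//` is floor division, so fractional parts are discarded.
    return sum(numbers) // len(numbers)

# Silent misbehavior: no error message, just a wrong answer to notice.
print(average([1, 2]))  # prints 1, although the mean is 1.5

# Explicit failure: an error message that must be interpreted.
try:
    average([])
except ZeroDivisionError as error:
    print("Error:", error)
```

In the first case, nothing tells the programmer that anything is wrong; they must notice the incorrect result and trace it back to the `//` operator. In the second, the error message mentions division by zero, but it is up to the programmer to connect that to an empty list.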

If these two gulfs were not enough, modern programming interfaces have come to introduce new gulfs. These new gulfs primarily emerge from the increasing use of APIs (application programming interfaces: collections of code used for computing particular things, designed for reuse by others without having to understand the details of the computation) to construct applications by reusing other people’s code. For example, an API might mediate access to facial recognition algorithms, date arithmetic, or trigonometric functions. Some of the gulfs that reuse imposes include 11 11 Amy J. Ko, Brad A. Myers, Htet Htet Aung (2004). Six learning barriers in end-user programming systems. IEEE Symposium on Visual Languages-Human Centric Computing (VL/HCC).

- Design gulfs . APIs make some things easy to create, while making other things hard or impossible. This gulf of execution requires programmers to know what is possible to express.

- Selection gulfs . APIs may offer a variety of functionality, but it’s not always clear what functionality might be relevant to implementing something. This gulf of execution requires programmers to read documentation, scour web forums, and talk to experts to find the API that might best serve their need.

- Use gulfs . Once someone finds an API that might be useful for creating what they want, they have to learn how to use the API. Bridging this gulf of execution might entail carefully reading documentation, finding example code, and understanding how the example code works.

- Coordination gulfs . Once someone learns how to use one part of an API, they might need to use it in coordination with another part to achieve the behavior they want. Bridging this gulf of execution might require finding more complex examples, or creatively tinkering with the API to understand its limitations.

- Understanding gulfs . Once someone has written some code with an API, they may need to debug their code, but without the ability to see the API’s own code. This poses gulfs of evaluation, requiring programmers to carefully interpret and analyze API behavior, or find explanations of its behavior in resources or online communities.
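Two of these gulfs can be illustrated with the kinds of APIs mentioned above, trigonometric functions and date arithmetic, using Python’s standard `math` and `datetime` modules. The specific values are arbitrary and chosen only for illustration.

```python
import math
from datetime import date, timedelta

# Use gulf: math.sin expects radians, not degrees, which the function
# name alone does not reveal.
wrong = math.sin(90)                # interpreted as 90 radians
right = math.sin(math.radians(90))  # convert degrees to radians first

# Coordination gulf: date arithmetic requires combining two parts of
# the datetime API, `date` and `timedelta`.
start = date(2024, 1, 31)
due = start + timedelta(days=30)

print(round(right, 6), due)  # prints 1.0 2024-03-01
```

Neither mistake produces an error message: `math.sin(90)` returns a perfectly valid number, just not the one intended. Without access to, or interest in, the API’s own code, a programmer has to rely on documentation and examples to learn about the radians convention.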

These many gulfs of execution and evaluation have two implications: 1) programming interfaces are hard to learn and 2) designing programming interfaces is about more than just programming language design. It’s about supporting a whole ecosystem of tools and resources to support learning.

If we return to our examination of chat bots, we can see that they also have the same gulfs. It’s not clear what one has to say to a chat bot to get the response one wants. Different phrases have different effects, in unpredictable ways. And once one does say something, if the results are unexpected, it’s not clear why the model produced the results it did, and what action needs to be taken to produce a different response. “Prompt engineering” may just be modern lingo for “programming” and “debugging”, just without all of the usual tools, documentation, and clarity of how to use them to get what you want.

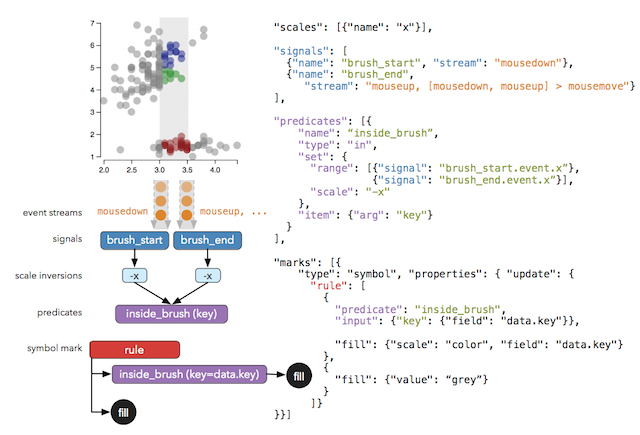

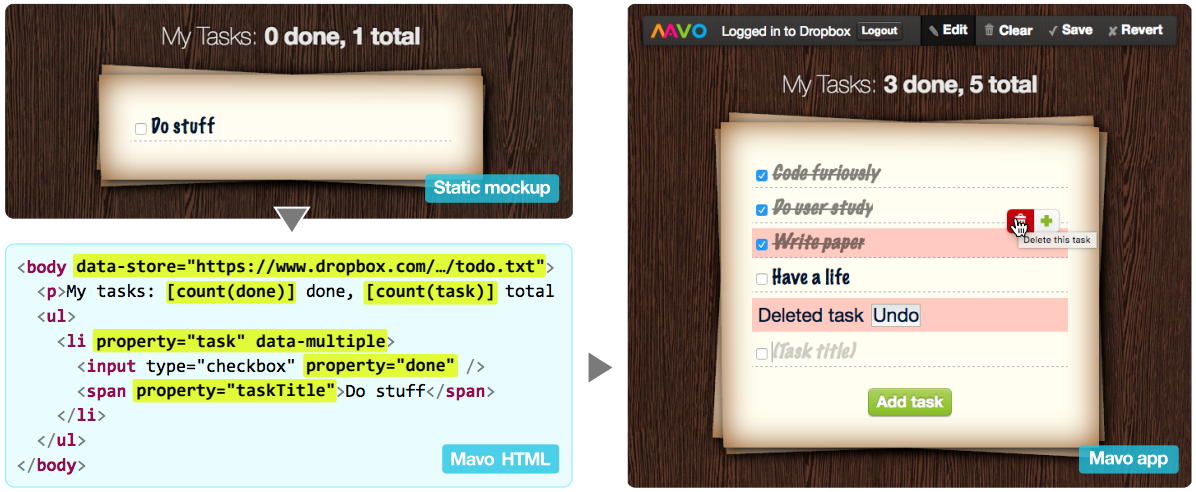

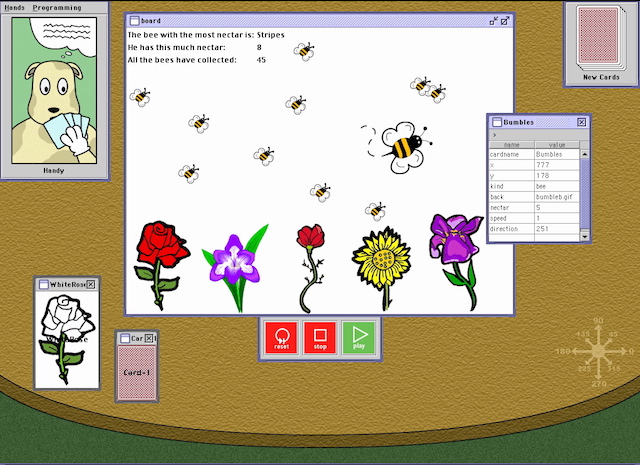

Simplifying programming interfaces