Programming Interfaces

If you don’t know the history of computing, it’s easy to overlook the fact that all of the interfaces that people used to control computers before the graphical user interface were programming interfaces. But that’s no longer true; why then talk about programming in a book about user interface technology? Two reasons: programmers are users too, and perhaps more importantly, user interfaces quite often have programming-like features embedded in them. For example, spreadsheets aren’t just tables of data; they also tend to contain formulas that perform calculations on that data. Or, consider the increasingly common trend of smart home devices: to make even basic use of them, one must often write simple programs to automate their behavior (e.g., turning on a light when one arrives home, or defining the conditions in which a smart doorbell sends a notification). Programming interfaces are therefore far from niche: they are the foundation of how all interfaces are built and increasingly part of using interfaces to control and configure devices.

But it’s also important to understand programming interfaces in order to understand why interactive interfaces — the topic of our next chapter — are so powerful. This chapter won’t teach you to code if you don’t already know how, but it will give you the concepts and vocabulary to understand the fundamental differences between these two interface paradigms, when one might be used over the other, and what challenges each poses to people using computers.

Properties of programming interfaces

In some ways, programming interfaces are like any other kind of user interface: they take input, present output, have affordances and signifiers, and present a variety of gulfs of evaluation and execution. They do this by using a collection of tools, which make up the “interface” a programmer uses to write computer programs:

- Programming languages. These are interfaces that take computer programs, translate them into instructions that a computer can execute, and then execute them. Popular examples include Python (depicted at the beginning of this chapter) and JavaScript (which is used to build interactive websites), but also less well-known languages such as Scratch, which youth often use to create interactive 2D animations and games, or R, which statisticians and data scientists use to analyze data.

- Editors. These are applications, much like word processors, that programmers use to read and edit computer programs. Some languages are editor-agnostic (e.g., you can edit Python code in any text editor), whereas others come with dedicated editors (e.g., Scratch programs can only be written with the Scratch editor).

These two tools, along with many optional tools that help with the other challenges of programming, are the core interfaces that people use to write computer programs. Programming, therefore, generally involves reading and editing code in an editor, repeatedly asking a programming language to check the code for errors, and then executing the program to see if it has the intended behavior. It often doesn’t, which requires people to debug their code, finding the parts of it that cause the program to misbehave. This edit-run-debug cycle is the general experience of programming: slowly writing and revising a program until it behaves as intended (much like sculpting a sculpture, writing an essay, or painting a painting).
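To make the cycle concrete, here is a minimal Python sketch of one pass through it. The function names `average_draft`, `check`, and `average_fixed` are hypothetical, invented for illustration; real programs are usually checked against many inputs, not just one.

```python
# Edit: a first draft of a function meant to average a list of numbers.
def average_draft(values):
    total = 0
    for v in values:
        total += v
    return total / (len(values) + 1)  # defect: the divisor is off by one


# Run: execute the code on an input whose correct answer is already known.
def check(average):
    result = average([2, 4, 6])
    return result == 4, result


# Debug and revise: the failed check (3.0, not 4) points at the divisor.
def average_fixed(values):
    return sum(values) / len(values)


print(check(average_draft))  # (False, 3.0)
print(check(average_fixed))  # (True, 4.0)
```

Notice that nothing about the draft looks wrong until it is run; only the gap between the expected and actual output reveals the defect.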

While it’s obvious this is different from using a website or mobile application, it’s helpful to talk in more precise terms about exactly how it is different. In particular, three attributes make an interface a programming interface (Blackwell, 2002):

- No direct manipulation. In interactive interfaces like a web page or mobile app, concrete physical actions by a user on some data (moving a mouse, tapping on an object) result in concrete, immediate feedback about the effect of those actions. For example, dragging a file from one folder to another immediately moves the file, both visually and on disk; selecting and dragging some text in a word processor moves the text. In contrast, programming interfaces offer no such direct manipulation: one declares what a program should do to some data, and only after the program is executed does that action occur (if it was declared correctly). Programming interfaces therefore always involve indirect manipulation: describing an action rather than performing it.

- Use of notation. In interactive interfaces, most interactions involve concrete representations of data and action. For example, a web form has controls for entering information, and a submit button for saving it. But in programming interfaces, referring to data and actions always involves using some programming language notation. For example, to navigate into a folder in the Unix or Linux operating systems, one has to type `cd myFolder`, which is a particular notation for executing the `cd` command (standing for “change directory”) and specifying which folder to navigate to (`myFolder`). Programming interfaces therefore always involve specific rules for describing what a user wants the computer to do.

- Use of abstraction. An inherent side effect of using notations is that one must also use abstractions to refer to a computer’s actions. For example, when one runs the Unix command `mv file.txt ../` (which moves `file.txt` from the current directory into its parent directory), they are using a command, a file name, and a symbolic reference, `../`, to refer to the parent directory of the current directory. Commands, names, and relative path references are all abstractions in that they all abstractly represent the data and actions to be performed. Visual icons in a graphical user interface are also abstract, but because they support direct manipulation, such as moving files from one folder to another, they can be treated as concrete objects. Not so with programming interfaces, where everything is abstract.
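The same actions can be written in a different notation. The sketch below, assuming Python’s standard `os` and `shutil` modules, performs the equivalent of `cd myFolder` followed by `mv file.txt ../` inside a throwaway temporary directory; the helper name `demo_cd_and_mv` is hypothetical. The abstractions survive the change of notation: the folder is still a name, and the parent directory is still the symbolic reference `..`.

```python
import os
import shutil
import tempfile


def demo_cd_and_mv(root: str) -> list:
    """Perform `cd myFolder` then `mv file.txt ../` inside root; return root's contents."""
    folder = os.path.join(root, "myFolder")
    os.makedirs(folder, exist_ok=True)
    # Create the file that will be moved.
    with open(os.path.join(folder, "file.txt"), "w") as f:
        f.write("hello\n")

    original_cwd = os.getcwd()
    try:
        os.chdir(root)                 # start in the folder that contains myFolder
        os.chdir("myFolder")           # cd myFolder
        shutil.move("file.txt", "..")  # mv file.txt ../  (".." names the parent directory)
    finally:
        os.chdir(original_cwd)         # restore the caller's working directory
    return sorted(os.listdir(root))


with tempfile.TemporaryDirectory() as root:
    print(demo_cd_and_mv(root))  # ['file.txt', 'myFolder']
```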

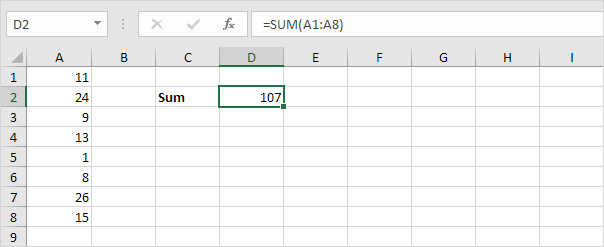

Clearly, textual programming languages such as the popular Python, Java, or JavaScript fit all of the descriptions above. But what about programming interfaces like Excel spreadsheets? Thinking through each of the properties above, we see spreadsheets have many of the same properties. While one can directly manipulate data in cells, there is no way to directly manipulate formulas: they must be written in a notation, Excel’s formula language. And the notation invokes abstract ideas like summing rows and columns. Spreadsheets blur the line between programming and interactive interfaces, however, by immediately executing formulas as soon as they are edited, recomputing everything in the spreadsheet. This gives a sense of immediacy similar to direct manipulation, even though it is not direct.
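The immediacy of spreadsheets comes from recomputing formulas whenever any cell changes. The sketch below is a toy model under simplifying assumptions: the `Sheet` class is hypothetical, formulas are Python functions rather than Excel syntax, every edit recomputes every cell, and circular references are not detected. It shows how editing one value propagates to a formula that depends on it.

```python
from typing import Callable, Dict, Union

# A cell holds a plain number or a formula: a function that can look up other cells.
Lookup = Callable[[str], float]
Cell = Union[float, Callable[[Lookup], float]]


class Sheet:
    """A toy spreadsheet that recomputes every cell after every edit."""

    def __init__(self) -> None:
        self.cells: Dict[str, Cell] = {}
        self.values: Dict[str, float] = {}

    def set(self, name: str, content: Cell) -> None:
        self.cells[name] = content
        self._recompute_all()  # like a spreadsheet: results update as soon as you edit

    def _recompute_all(self) -> None:
        cache: Dict[str, float] = {}

        def value_of(name: str) -> float:
            if name not in cache:
                content = self.cells.get(name, 0.0)  # empty cells read as 0
                cache[name] = content(value_of) if callable(content) else float(content)
            return cache[name]

        # No cycle detection: a circular reference would raise RecursionError.
        self.values = {name: value_of(name) for name in self.cells}


sheet = Sheet()
sheet.set("A1", 2)
sheet.set("A2", 3)
sheet.set("A3", lambda v: v("A1") + v("A2"))  # plays the role of =A1+A2
print(sheet.values["A3"])  # 5.0
sheet.set("A1", 10)  # edit a value...
print(sheet.values["A3"])  # 13.0, the formula updated immediately
```

Real spreadsheets track dependencies so they only recompute affected cells, but the user-facing effect is the same: the formula’s result is always current.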

Another interesting case is that of chat bots, including those with pre-defined responses, like those in help tools and on Discord, as well as more sophisticated ones like ChatGPT that use large language models (LLMs) trained on vast amounts of public text from the internet. Is writing prompts a form of programming? Prompts seem to satisfy all of the properties above. They do not support direct manipulation, because they require a description of commands. They do use notation, just natural language instead of a formal notation like a programming language. And they definitely use abstraction, in that they rely on sequences of symbols that represent abstract ideas (otherwise known as words) to refer to what one wants a computer to do. If we accept the definition above, then yes, chat bots and voice interfaces are programming interfaces, and may entail similar challenges as more conventional programming interfaces do. And we shouldn’t be surprised: all that large language models (and more rudimentary approaches to responding to language) do is take a series of symbols as input and generate a series of symbols in response, just like any other function in a computer program.

Learning programming interfaces

Because programming interfaces are interfaces, they also have gulfs of execution (everything a person must learn in order to achieve their goal with an interface, including learning what the interface is and is not capable of and how to operate it correctly) and gulfs of evaluation (everything a person must learn in order to understand the effect of their action on an interface, including understanding error messages or a lack of response from an interface). Their gulfs are just much larger because of the lack of direct manipulation, the use of notation, and the centrality of abstraction. All of these create a greater distance between someone’s goal, which often involves delegating or automating some task with code, and a program that achieves it.

At the level of writing code, the gulfs of execution are immense. Imagine, for example, that you have an idea for a mobile app and are staring at a blank code editor. To have any clue what to type, people must know 1) a programming language, including its notation and the various rules for using the notation correctly; 2) basic concepts in computer science about data structures and algorithms; and 3) how to operate the code editor, which may have many features designed assuming all of this prior knowledge. It’s rare, therefore, that a person can just poke around an editor or programming language to learn how it works. Instead, learning usually requires good documentation, perhaps some tutorials, and quite possibly a teacher and an education system. The same can be true of complex interactive interfaces (e.g., learning Photoshop), but at least in the case of interactive interfaces, the functional affordances are explicitly presented in menus. With programming interfaces, affordances are invisible.

Once one has some code and executes it, programming interfaces pose similarly large gulfs of evaluation. Most programs start off defective in some way, not quite doing what a person intended: when the program misbehaves, or gives an error message, what is a programmer to do? To have any clue, people lean on their knowledge of the programming language to interpret error messages, carefully analyze their code, and potentially use other tools like debuggers to understand where their instructions might have gone wrong. These debugging skills are similar to the troubleshooting skills required in interactive interfaces (e.g., figuring out why Microsoft Word keeps autocorrecting something), but the solutions are often more than just unchecking a checkbox: they may involve revising or entirely rewriting part of a program.
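As a small illustration of this gulf, consider the hypothetical Python function below, which is meant to build a sentence but fails. The error message describes the failure in the language’s own terms (types and concatenation) rather than in terms of the programmer’s goal, so the programmer must translate one into the other. The exact wording of the message varies across Python versions, so it is not reproduced here.

```python
def greeting(name, age):
    # Intended to return a sentence like "Ada is 36".
    return name + " is " + age  # defect: + cannot join a str and an int


try:
    greeting("Ada", 36)
except TypeError as err:
    # The message talks about types and concatenation, not about greetings;
    # the programmer has to map it back to the faulty line and their intent.
    print("TypeError:", err)


def greeting_fixed(name, age):
    return f"{name} is {age}"  # the f-string converts age to text


print(greeting_fixed("Ada", 36))  # Ada is 36
```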

If these two gulfs were not enough, modern programming interfaces introduce new ones. These primarily emerge from the increasing use of APIs to construct applications by reusing other people’s code. An application programming interface (API) is a collection of code used for computing particular things, designed for reuse by others without having to understand the details of the computation; for example, an API might mediate access to facial recognition algorithms, date arithmetic, or trigonometric functions. Some of the gulfs that reuse imposes include the following (Ko et al., 2004):

- Design gulfs. APIs make some things easy to create, while making other things hard or impossible. This gulf of execution requires programmers to know what is possible to express.

- Selection gulfs. APIs may offer a variety of functionality, but it’s not always clear what functionality might be relevant to implementing something. This gulf of execution requires programmers to read documentation, scour web forums, and talk to experts to find the API that might best serve their need.

- Use gulfs. Once someone finds an API that might be useful for creating what they want, they have to learn how to use the API. Bridging this gulf of execution might entail carefully reading documentation, finding example code, and understanding how the example code works.

- Coordination gulfs. Once someone learns how to use one part of an API, they might need to use it in coordination with another part to achieve the behavior they want. Bridging this gulf of execution might require finding more complex examples, or creatively tinkering with the API to understand its limitations.

- Understanding gulfs. Once someone has written some code with an API, they may need to debug their code, but without the ability to see the API’s own code. This poses gulfs of evaluation, requiring programmers to carefully interpret and analyze API behavior, or find explanations of its behavior in resources or online communities.
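Python’s standard `datetime` and `calendar` modules, a date-arithmetic API of the kind mentioned above, can illustrate several of these gulfs. In the sketch below (function names are hypothetical), subtracting dates requires knowing that the API returns a `timedelta` (a use gulf); `timedelta` has no month unit, since calendar months vary in length (a design gulf); and adding a month therefore requires coordinating `date.replace` with `calendar.monthrange` (a coordination gulf). Clamping January 31 to the last day of February is one reasonable policy among several and is an assumption of this sketch.

```python
import calendar
from datetime import date, timedelta


def days_between(start: date, end: date) -> int:
    # Use gulf: subtracting dates yields a timedelta, whose .days holds the count.
    return (end - start).days


def months_unit_supported() -> bool:
    # Design gulf: timedelta has no "months" keyword, because months vary in length.
    try:
        timedelta(months=1)
    except TypeError:
        return False
    return True


def add_one_month(d: date) -> date:
    # Coordination gulf: combine date.replace with calendar.monthrange, clamping
    # the day so that, e.g., January 31 becomes the last day of February.
    year = d.year + d.month // 12
    month = d.month % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return d.replace(year=year, month=month, day=min(d.day, last_day))


print(days_between(date(2024, 1, 1), date(2024, 3, 1)))  # 60
print(months_unit_supported())  # False
print(add_one_month(date(2024, 1, 31)))  # 2024-02-29
print(add_one_month(date(2023, 12, 15)))  # 2024-01-15
```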

These many gulfs of execution and evaluation have two implications: 1) programming interfaces are hard to learn and 2) designing programming interfaces is about more than just programming language design. It’s about creating a whole ecosystem of tools and resources that support learning.

If we return to our examination of chat bots, we can see that they also have the same gulfs. It’s not clear what one has to say to a chat bot to get the response one wants. Different phrases have different effects, in unpredictable ways. And once one does say something, if the results are unexpected, it’s not clear why the model produced the results it did, and what action needs to be taken to produce a different response. “Prompt engineering” may just be modern lingo for “programming” and “debugging”, just without all of the usual tools, documentation, and clarity of how to use them to get what you want.
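The rule-based bots with pre-defined responses mentioned earlier make this concrete. The sketch below is a hypothetical bot whose patterns and replies are invented for illustration; large language models work very differently, generating text from a trained model rather than matching hand-written rules. Still, it shows two ideas from this chapter: the bot is just a function from one sequence of symbols to another, and two phrasings with the same meaning can produce different results.

```python
import re

# Hypothetical pre-defined rules: a pattern to look for, and the reply to give.
RULES = [
    (re.compile(r"\bturn on the lights?\b", re.IGNORECASE), "Turning on the lights."),
    (re.compile(r"\bopening hours\b", re.IGNORECASE), "We are open 9am to 5pm."),
]
FALLBACK = "Sorry, I don't understand."


def respond(message: str) -> str:
    """Map one sequence of symbols (the message) to another (the reply)."""
    for pattern, reply in RULES:
        if pattern.search(message):
            return reply
    return FALLBACK


print(respond("Please turn on the lights"))  # Turning on the lights.
print(respond("Lights on, please"))  # Sorry, I don't understand.
```

A user who only sees the second reply has no way to discover, from the interface alone, which phrasings the bot accepts: a gulf of execution in miniature.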

Simplifying programming interfaces

Given all of the difficulties that programming interfaces pose, it’s reasonable to wonder why we use them at all, instead of just creating interactive interfaces for everything we want computers to do. Unfortunately, that simply isn’t possible: creating new applications requires programming; customizing the behavior of software to meet our particular needs requires programming; and automating any task with a computer requires programming. Therefore, many researchers have worked hard to reduce the gulfs in programming interfaces as much as possible, to enable more people to succeed in using them.

While there is a vast literature on programming languages and tools, much of it focused on bridging gulfs of execution and evaluation, in this chapter we’ll focus on the contributions that HCI researchers have made to solving these problems, as they demonstrate the rich, under-explored design space of ways that people can program computers beyond using general-purpose languages. Much of this work can be described as supporting end-user programming: any programming done as a means to accomplish some other goal, in contrast to software engineering, which is done for the sole purpose of creating software for others to use (Ko et al., 2011).

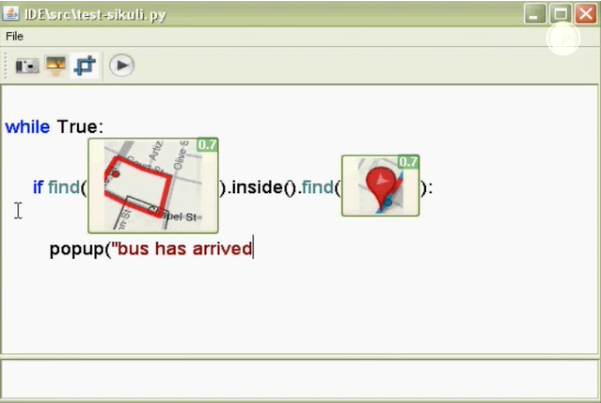

This vast range of domains in which programming interfaces can be used has led to an abundance of unique interfaces. For example, several researchers have explored ways to automate interaction with user interfaces, taking repetitive tasks and automating them. One such system is Sikuli (above), which allows users to use screenshots of user interfaces to write scripts that automate interactions (Yeh et al., 2009).
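At its core, a screenshot-driven script has to find where a picture of a button appears on the screen. The sketch below is a simplified, hypothetical version of that idea: images are small grids of grayscale integers and matching is exact, whereas Sikuli itself matches real screenshots using computer vision that tolerates small visual differences. A real automation tool would then send a click to the returned point; that step is omitted here.

```python
from typing import List, Optional, Tuple

# Hypothetical image representation: rows of grayscale pixel values.
Image = List[List[int]]


def find(screen: Image, pattern: Image) -> Optional[Tuple[int, int]]:
    """Return (row, col) of the first exact occurrence of pattern in screen, or None."""
    ph, pw = len(pattern), len(pattern[0])
    sh, sw = len(screen), len(screen[0])
    for r in range(sh - ph + 1):
        for c in range(sw - pw + 1):
            # Compare the pattern row by row against the same-sized window of the screen.
            if all(screen[r + i][c:c + pw] == pattern[i] for i in range(ph)):
                return (r, c)
    return None


def center_of(match: Tuple[int, int], pattern: Image) -> Tuple[int, int]:
    """The point a script would click: roughly the middle of the matched region."""
    r, c = match
    return (r + len(pattern) // 2, c + len(pattern[0]) // 2)


screen = [
    [0, 0, 0, 0, 0],
    [0, 0, 7, 8, 0],
    [0, 0, 9, 6, 0],
    [0, 0, 0, 0, 0],
]
button = [[7, 8], [9, 6]]  # stands in for a screenshot of a button

match = find(screen, button)
print(match)  # (1, 2)
print(center_of(match, button))  # (2, 3)
```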

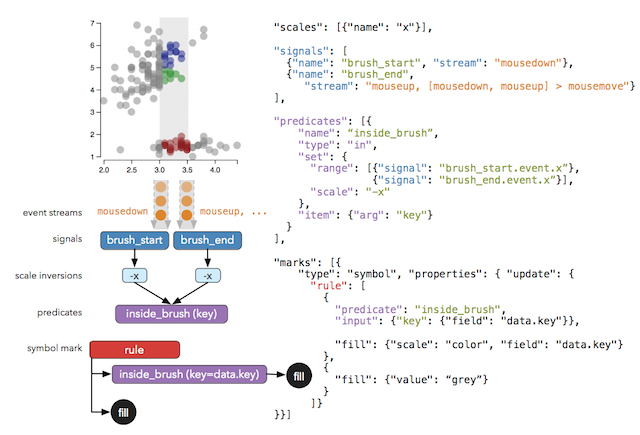

Another major focus has been supporting people interacting with data. Some systems, like Vega (above), have offered new programming languages for declaratively specifying data visualizations (Satyanarayan et al., 2014).
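To show what “declarative” means here, the sketch below describes a bar chart as data (what to show) and leaves drawing (how to show it) to a separate interpreter. The spec format and the `render` function are hypothetical and only loosely inspired by the idea of a visualization grammar; they are not Vega’s actual syntax, and the output is plain text rather than graphics.

```python
from typing import Any, Dict, List

# A hypothetical declarative spec: it states *what* to show, not *how* to draw it.
spec: Dict[str, Any] = {
    "data": [{"fruit": "apple", "count": 3}, {"fruit": "pear", "count": 5}],
    "mark": "bar",
    "encoding": {"label": "fruit", "length": "count"},
}


def render(spec: Dict[str, Any]) -> List[str]:
    """Interpret a spec by drawing one text bar per data row."""
    if spec["mark"] != "bar":
        raise ValueError(f"unsupported mark: {spec['mark']}")
    label = spec["encoding"]["label"]
    length = spec["encoding"]["length"]
    width = max(len(str(row[label])) for row in spec["data"])
    return [f"{str(row[label]).ljust(width)} | {'#' * row[length]}" for row in spec["data"]]


for line in render(spec):
    print(line)
# apple | ###
# pear  | #####
```

Changing the chart means editing the spec (for example, pointing `length` at a different field) rather than rewriting any drawing code.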

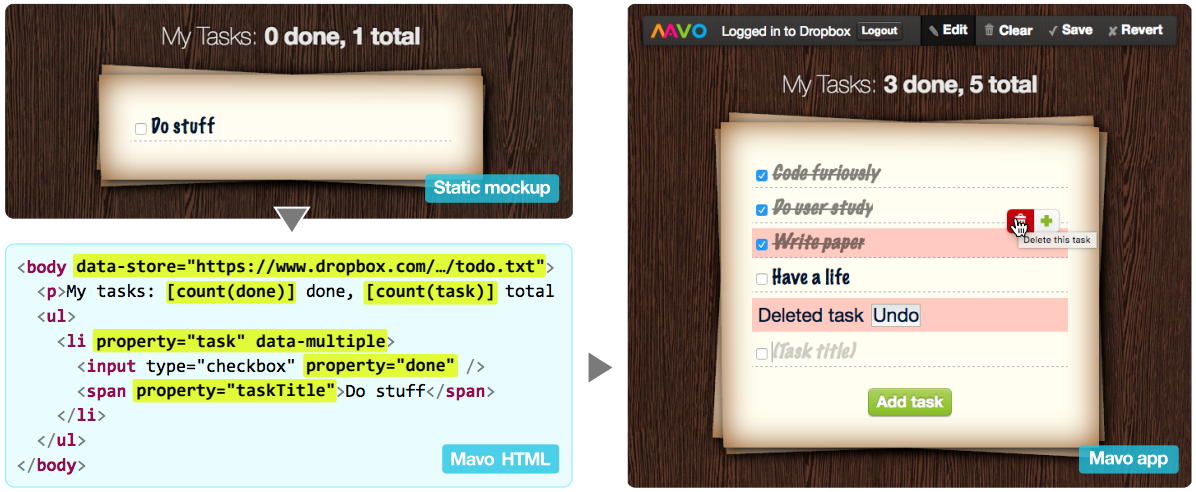

Some systems have attempted to support more ambitious automation, empowering users to create entire applications that better support their personal information needs. For example, many systems have combined spreadsheets with lightweight scripting languages to enable users to write simple web applications with rich interfaces, using the spreadsheet as a database (Benson et al., 2014; Chang and Myers, 2014).
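A minimal sketch of the spreadsheet-as-database idea, assuming the sheet has been exported as CSV: each row becomes a record, and a template turns records into HTML. The column names, data, and the `rows_from_sheet` and `render_page` helpers are hypothetical; the systems cited above are considerably richer.

```python
import csv
import io
from html import escape

# Hypothetical sheet contents, as if exported from a spreadsheet to CSV.
SHEET = """name,room,available
Projector,101,yes
Whiteboard,204,no
"""


def rows_from_sheet(text: str) -> list:
    """Treat the spreadsheet as a database table: one dict per row, keyed by header."""
    return list(csv.DictReader(io.StringIO(text)))


def render_page(rows: list) -> str:
    """Fill a simple HTML template from the rows, escaping cell text."""
    items = "\n".join(
        f"  <li>{escape(r['name'])} (room {escape(r['room'])}): "
        f"{'available' if r['available'] == 'yes' else 'checked out'}</li>"
        for r in rows
    )
    return f"<ul>\n{items}\n</ul>"


print(render_page(rows_from_sheet(SHEET)))
```

Editing a cell in the sheet and re-running the script is enough to update the page, which is what makes the spreadsheet feel like the application’s database.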

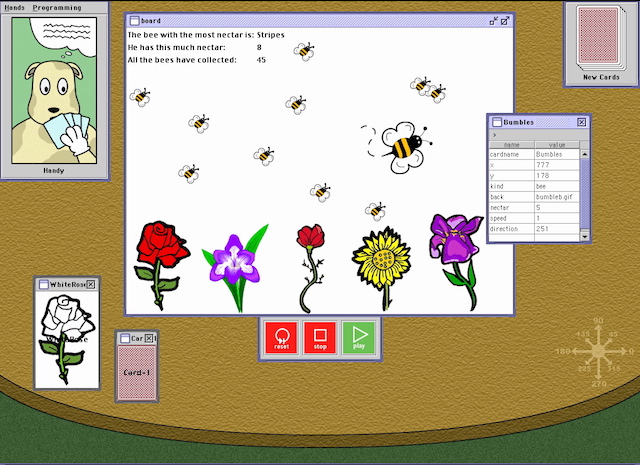

Perhaps the richest category of end-user programming systems is those supporting the creation of games. Hundreds of systems have provided custom programming languages and development environments for authoring games, ranging from languages for simple game mechanics to entire general-purpose programming languages (Kelleher and Pausch, 2005).

Most innovations for programming interfaces have focused on bridging the gulf of execution. Fewer systems have focused on bridging gulfs of evaluation by supporting the testing and debugging of behaviors a user is trying to understand. One from my own research was a system called the Whyline (Ko and Myers, 2004).

One way to think about all of these innovations is as trying to bring the benefits of interactive interfaces (direct manipulation, no notation, and concreteness) to notations that inherently don’t have those properties, by augmenting programming environments with these features. This work blurs the distinction between programming interfaces and interactive interfaces, bringing the power of programming to broader and more diverse audiences. But the work above also makes it clear that there are limits to this blurring: no matter how hard we try, describing what we want, rather than demonstrating it directly, always seems to be more difficult, yet more powerful.

References

- Azza Abouzied, Joseph Hellerstein, Avi Silberschatz (2012). DataPlay: interactive tweaking and example-driven correction of graphical database queries. ACM Symposium on User Interface Software and Technology (UIST).

- Edward Benson, Amy X. Zhang, David R. Karger (2014). Spreadsheet driven web applications. ACM Symposium on User Interface Software and Technology (UIST).

- A. F. Blackwell (2002). First steps in programming: A rationale for attention investment models. IEEE Symposia on Human Centric Computing Languages and Environments, pp. 2-10.

- Julia Brich, Marcel Walch, Michael Rietzler, Michael Weber, Florian Schaub (2017). Exploring End User Programming Needs in Home Automation. ACM Transactions on Computer-Human Interaction 24(2), Article 11, 35 pages.

- Brian Burg, Amy J. Ko, Michael D. Ernst (2015). Explaining Visual Changes in Web Interfaces. ACM Symposium on User Interface Software and Technology (UIST).

- Kerry Shih-Ping Chang and Brad A. Myers (2014). Creating interactive web data applications with spreadsheets. ACM Symposium on User Interface Software and Technology (UIST).

- Philip J. Guo, Sean Kandel, Joseph M. Hellerstein, Jeffrey Heer (2011). Proactive wrangling: mixed-initiative end-user programming of data transformation scripts. ACM Symposium on User Interface Software and Technology (UIST).

- Joshua Hibschman and Haoqi Zhang (2015). Unravel: Rapid web application reverse engineering via interaction recording, source tracing, library detection. ACM Symposium on User Interface Software and Technology (UIST).

- David R. Karger, Scott Ostler, Ryan Lee (2009). The web page as a WYSIWYG end-user customizable database-backed information management application. ACM Symposium on User Interface Software and Technology (UIST).

- Caitlin Kelleher and Randy Pausch (2005). Lowering the barriers to programming: A taxonomy of programming environments and languages for novice programmers. ACM Computing Surveys.

- Amy J. Ko, Brad A. Myers, Htet Htet Aung (2004). Six learning barriers in end-user programming systems. IEEE Symposium on Visual Languages-Human Centric Computing (VL/HCC).

- Amy J. Ko and Brad A. Myers (2004). Designing the Whyline: A Debugging Interface for Asking Questions About Program Failures. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Amy J. Ko, Robin Abraham, Laura Beckwith, Alan Blackwell, Margaret Burnett, Martin Erwig, Chris Scaffidi, Joseph Lawrance, Henry Lieberman, Brad Myers, Mary Beth Rosson, Gregg Rothermel, Mary Shaw, Susan Wiedenbeck (2011). The state of the art in end-user software engineering. ACM Computing Surveys.

- Todd Kulesza, Weng-Keen Wong, Simone Stumpf, Stephen Perona, Rachel White, Margaret M. Burnett, Ian Oberst, Amy J. Ko (2009). Fixing the program my computer learned: barriers for end users, challenges for the machine. International Conference on Intelligent User Interfaces (IUI).

- Tessa Lau, Julian Cerruti, Guillermo Manzato, Mateo Bengualid, Jeffrey P. Bigham, Jeffrey Nichols (2010). A conversational interface to web automation. ACM Symposium on User Interface Software and Technology (UIST).

- Gilly Leshed, Eben M. Haber, Tara Matthews, Tessa Lau (2008). CoScripter: automating & sharing how-to knowledge in the enterprise. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Greg Little and Robert C. Miller (2006). Translating keyword commands into executable code. ACM Symposium on User Interface Software and Technology (UIST).

- Mikaël Mayer, Gustavo Soares, Maxim Grechkin, Vu Le, Mark Marron, Oleksandr Polozov, Rishabh Singh, Benjamin Zorn, Sumit Gulwani (2015). User Interaction Models for Disambiguation in Programming by Example. ACM Symposium on User Interface Software and Technology (UIST).

- Richard G. McDaniel and Brad A. Myers (1997). Gamut: demonstrating whole applications. ACM Symposium on User Interface Software and Technology (UIST).

- Will McGrath, Daniel Drew, Jeremy Warner, Majeed Kazemitabaar, Mitchell Karchemsky, David Mellis, Björn Hartmann (2017). Bifröst: Visualizing and checking behavior of embedded systems across hardware and software. ACM Symposium on User Interface Software and Technology (UIST).

- Robert C. Miller and Brad A. Myers (2001). Outlier finding: focusing user attention on possible errors. ACM Symposium on User Interface Software and Technology (UIST).

- Robert C. Miller, Victoria H. Chou, Michael Bernstein, Greg Little, Max Van Kleek, David Karger, mc schraefel (2008). Inky: a sloppy command line for the web with rich visual feedback. ACM Symposium on User Interface Software and Technology (UIST).

- J. F. Pane, B. A. Myers, and L. B. Miller (2002). Using HCI techniques to design a more usable programming system. IEEE Symposium on Visual Languages-Human Centric Computing (VL/HCC).

- Kayur Patel, Naomi Bancroft, Steven M. Drucker, James Fogarty, Amy J. Ko, James Landay (2010). Gestalt: integrated support for implementation and analysis in machine learning. ACM Symposium on User Interface Software and Technology (UIST).

- Arvind Satyanarayan, Kanit Wongsuphasawat, Jeffrey Heer (2014). Declarative interaction design for data visualization. ACM Symposium on User Interface Software and Technology (UIST).

- Evan Strasnick, Maneesh Agrawala, Sean Follmer (2017). Scanalog: Interactive design and debugging of analog circuits with programmable hardware. ACM Symposium on User Interface Software and Technology (UIST).

- Lea Verou, Amy X. Zhang, David R. Karger (2016). Mavo: Creating Interactive Data-Driven Web Applications by Authoring HTML. ACM Symposium on User Interface Software and Technology (UIST).

- Tom Yeh, Tsung-Hsiang Chang, Robert C. Miller (2009). Sikuli: using GUI screenshots for search and automation. ACM Symposium on User Interface Software and Technology (UIST).