What Interfaces Mediate

In the last two chapters, we considered two fundamental perspectives on user interface software and technology: a historical one, which framed user interfaces as a form of human augmentation, and a theoretical one, which framed interfaces as a bridge between sensory worlds and the computational world of inputs, functions, outputs, and state. A third and equally important perspective is the role that interfaces play in individual lives and in society broadly. While user interfaces are inherently computational artifacts, they are also inherently sociocultural and sociopolitical entities.

Broadly, I view the social role of interfaces as a mediating one. Mediation is the idea that rather than two entities interacting directly, something controls, filters, transacts, or interprets interaction between two entities. For example, one can think of human-to-human interactions as mediated, in that our thoughts, ideas, and motivations are mediated by language. In that same sense, user interfaces can mediate human interaction with many things. Mediation is important because, as Marshall McLuhan argued, media (which by definition mediate) can lead to subtle, sometimes invisible structural changes in society’s values, norms, and institutions [5].

In this chapter, we will discuss how user interfaces mediate access to three things: automation, information, and other humans. (Computer interfaces might mediate other things too; these are just the three most prominent in society.)

Mediating automation

As the theory in the last chapter claimed, interfaces primarily mediate computation. Whether it’s calculating trajectories in World War II or detecting faces in a collection of photos, the vast range of algorithms from computer science that process, compute, filter, sort, search, and classify information provide real value to the world, and interfaces are how people access that value.

Interfaces that mediate automation are much like the application programming interfaces (APIs) that software developers use. An API is a collection of code used for computing particular things, designed for reuse by others without having to understand the details of the computation. For example, an API might mediate access to facial recognition algorithms, date arithmetic, or trigonometric functions. APIs organize collections of functions and data structures, encapsulating functionality, hiding complexity, and providing a simpler interface for developers to use to build applications. For example, instead of a developer having to learn how to build a machine learning classification algorithm themselves, they can use a machine learning API, which only requires the developer to provide some data and parameters, which the API uses to train the classifier.
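To make the idea of an API concrete, here is a minimal Python sketch that uses two facilities from Python's standard library: date arithmetic (`datetime`) and trigonometry (`math`). The helper `days_until` and the specific dates are illustrative assumptions of mine, not part of any particular API discussed in this chapter.

```python
import math
from datetime import date


def days_until(target: date, today: date) -> int:
    """Return the number of days from `today` to `target` (hypothetical helper)."""
    # Subtracting two dates yields a timedelta. The API hides the calendar
    # rules (month lengths, leap years) behind a simple subtraction.
    return (target - today).days


# Date arithmetic: we never have to reason about leap years ourselves.
print(days_until(date(2024, 3, 1), date(2024, 2, 1)))

# Trigonometry: we never have to implement an approximation of sine ourselves.
print(math.sin(math.pi / 6))
```

In both calls, the developer supplies only inputs and reads back a result; how the result is computed stays hidden behind the API.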

In the same way, user interfaces are often simply direct manipulation ways of providing inputs to APIs. For example, think about what operating a calculator app on a phone actually involves: it’s really a way of delegating arithmetic operations to a computer. Each operation is a function that takes some arguments (addition, for example, is a function that takes two numbers and returns their sum). In this example, the calculator is literally an interface to an API of mathematical functions that compute things for a person. But consider a very different example, such as the camera application on a smartphone. This is also an interface to an API, with a single function that takes as input all of the light entering a camera sensor and a dozen or so configuration options for focus, white balance, orientation, etc., and returns a compressed image file that captures that moment in space and time. This even applies to more intelligent interfaces you might not think of as interfaces at all, such as driverless cars. A driverless car is basically one complex function, called dozens of times a second, that takes as input a car’s location, its destination, and all of the visual and spatial information around the car gathered via sensors, and computes an acceleration and direction for the car to move. The calculator, the camera, and the driverless car are all really just interfaces to APIs that expose a set of computations, and user interfaces are what we use to access this computation.

From this API perspective, user interfaces mediate access to APIs, and interaction with an interface is really identical, from a computational perspective, to executing a program that uses those APIs to compute. In the calculator, when you press the sequence of buttons “1”, “+”, “1”, “=”, you just wrote a program `add(1,1)` and got the value 2 in return. When you open the camera app, point it at your face for a selfie, and tap on the screen to focus, you’re writing the program `capture(focusPoint, sensorData)` and getting the image file in return. When you enter your destination in a driverless car, you’re really invoking the program `while(!at(destination)) { drive(destination, location, environment); }`. From this perspective, interacting with user interfaces is really about executing “one-time use” programs that compute something on demand.
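The calculator case can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `OPERATIONS` table stands in for the calculator's API of mathematical functions, and `press` is a hypothetical function that turns a sequence of button presses into a single call on that API. It handles only the simple pattern of a number, an operator, a number, and “=”.

```python
from typing import Callable, Dict, List, Optional

# A tiny stand-in for the calculator's "API" of arithmetic functions.
OPERATIONS: Dict[str, Callable[[float, float], float]] = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def press(buttons: List[str]) -> float:
    """Interpret presses such as ["1", "+", "1", "="] as one API call."""
    left_digits = ""
    right_digits = ""
    operator: Optional[str] = None
    for button in buttons:
        if button == "=":
            break  # "=" means: execute the program written so far
        if button in OPERATIONS:
            operator = button  # remember which function to call
        elif operator is None:
            left_digits += button  # still entering the first argument
        else:
            right_digits += button  # entering the second argument
    if operator is None or not left_digits or not right_digits:
        raise ValueError("expected: <number> <operator> <number> =")
    # The button presses have become a function call with two arguments.
    return OPERATIONS[operator](float(left_digits), float(right_digits))


print(press(["1", "+", "1", "="]))  # 2.0
```

Each press contributes one token of a tiny program, and pressing “=” runs it. A real calculator app supports far more (chained operations, decimals, clearing), but the mapping from interaction to function call is the same idea.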

How is this mediation? Well, the most direct way to access computation would be to write computer programs and then execute them. That’s what people did in the 1960s before there were graphical user interfaces, but even those programs were mediated by punchcards, levers, and other mechanical controls. Our more modern interfaces are better because we don’t have to learn as much to communicate to a computer what computation we want. But we still have to learn interfaces that mediate computation: we’re just learning APIs and how to program with them in graphical, visual, and direct manipulation forms, rather than as code.

Of course, as this distance between what we want a computer to do and how it does it grows, so does fear about how much trust to put in computers to compute fairly. A rapidly growing body of research is considering what it means for algorithms to be fair, how people perceive fairness, and how explainable algorithms are [1, 7, 9].

Mediating information

Interfaces aren’t just about mediating computation, however. They’re also about accessing information. Before software, other humans mediated our access to information. We asked friends for advice, we sought experts for wisdom, and when we couldn’t find people to inform us, we consulted librarians to help us find recorded knowledge, written by experts we didn’t have access to. Searching and browsing for information was always an essential human activity.

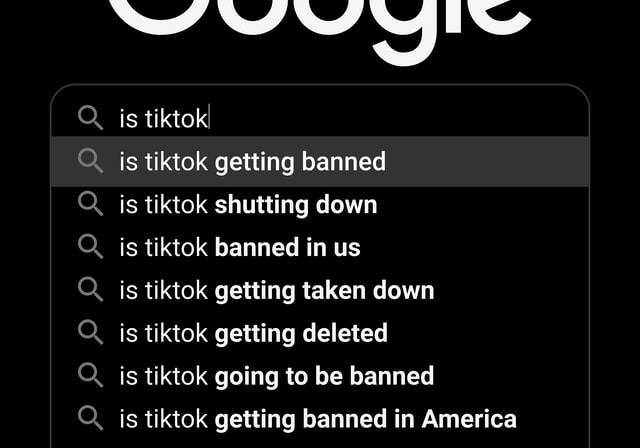

Computing changed this. Now we use software to search the web, to browse documents, to create information, and to organize it. Because computers allow us to store information and access it much more quickly than we could through people or documents, we started to build systems for storing, curating, and provisioning information on computers and accessing it through user interfaces. We took old ideas from information science, such as documents, metadata, searching, browsing, indexing, and other knowledge organization ideas, and imported them into computing. Most notably, the Stanford Digital Library Project leveraged all of these old ideas from information science and brought them to computers. This inadvertently led to Google, which still views its core mission as organizing the world’s information, which is what libraries were originally envisioned to do. But there is a difference: whereas librarians, card catalogs, and libraries in general view their core values as access and truth, Google and other search engines ultimately prioritize convenience and profit, often at the expense of access and truth.

The study of how to design user interfaces to optimally mediate access to information is usually called information architecture: the study and practice of organizing information and interfaces to support searching, browsing, and sensemaking [8].

In practice, user interfaces for information technologies can be quite simple. They might consist of a programming language, like Google’s query language, in which you specify an information need (e.g., “cute kittens”) that is satisfied with retrieval algorithms (e.g., an index of all websites, images, and videos that might match what that phrase describes, using whatever metadata those documents have). Google’s interface also includes systems for generating user interfaces that present the retrieved results (e.g., searching for flights might result in an interface for purchasing a matching flight). User interfaces for information technologies might instead be browsing-oriented, exposing metadata about information and facilitating navigation through an interface. For example, searching for a product on Amazon often involves setting filters and priorities on product metadata to narrow down a large set of products to those that match your criteria. Whether an interface is optimized for searching, browsing, or both, all forms of information mediation require metadata.
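The dependence of both searching and browsing on metadata can be illustrated with a small Python sketch. Everything here is a made-up assumption for illustration: the `Item` fields, the three-item `CATALOG`, and the `search` and `browse` functions are hypothetical, and bear no resemblance to how Google or Amazon actually implement retrieval.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Item:
    title: str
    category: str  # metadata used for browsing
    price: float   # metadata used for browsing
    tags: Set[str] = field(default_factory=set)  # metadata used for searching


# A hypothetical catalog; real systems index vastly more items and metadata.
CATALOG = [
    Item("Kitten calendar", "stationery", 12.0, {"cute", "kittens", "calendar"}),
    Item("Cat tree", "pets", 80.0, {"cats", "furniture"}),
    Item("Kitten plush toy", "toys", 15.0, {"cute", "kittens", "plush"}),
]


def search(query: str, items: List[Item]) -> List[Item]:
    """Searching: return items whose tags contain every word in the query."""
    words = set(query.lower().split())
    return [item for item in items if words <= item.tags]


def browse(
    items: List[Item],
    category: Optional[str] = None,
    max_price: Optional[float] = None,
) -> List[Item]:
    """Browsing: narrow a set of items by applying filters on metadata."""
    results = items
    if category is not None:
        results = [item for item in results if item.category == category]
    if max_price is not None:
        results = [item for item in results if item.price <= max_price]
    return results


print([item.title for item in search("cute kittens", CATALOG)])
print([item.title for item in browse(CATALOG, max_price=20.0)])
```

In this sketch, the search box and the filter controls are two different interfaces onto the same metadata. Without the tags, categories, and prices, neither function would have anything to match against.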

Mediating communication

While computation and information are useful, most of our socially meaningful interactions still occur with other people. That said, more of these interactions than ever are mediated by user interfaces. Every form of social media (messaging apps, email, video chat, discussion boards, chat rooms, blogs, wikis, social networks, virtual worlds, and so on) is a form of computer-mediated communication: any form of communication that is conducted through some computational device, such as a phone, tablet, laptop, desktop, or smart speaker [4].

What makes user interfaces that mediate communication different from those that mediate automation and information? Whereas mediating automation requires clarity about an API’s functionality and input requirements, and mediating information requires metadata to support searching and browsing, mediating communication requires social context. For example, in their seminal paper, Gary and Judy Olson discussed the vast array of social context present in collocated synchronous interactions that has to be reified in computer-mediated communication [2, 6].

Many researchers in the field of computer-supported cooperative work have sought designs that better support social processes, including ideas of “social translucence,” which aims to achieve context similar to that of collocation, but through new forms of visibility, awareness, and accountability [3].

These three types of mediation each require different architectures, different affordances, and different feedback to achieve their goals:

- Interfaces for computation have to teach a user about what computation is possible and how to interpret the results.

- Interfaces for information have to teach a user about the kinds of metadata that convey what information exists and what other information needs could be satisfied.

- Interfaces for communication have to teach a user about new social cues that convey the emotions and intents of the people in a social context, mirroring or replacing those in the physical world.

Of course, one interface can mediate many of these things. Twitter, for example, mediates access to information resources, but it also mediates communication between people. Similarly, Google, with its built-in calculator support, mediates information, but also computation. Complex interfaces might therefore be doing many things at once, requiring even more careful teaching. Because each of these interfaces must teach different things, one must know the foundations of what is being mediated to design effective interfaces. Throughout the rest of this book, we’ll review not only the foundations of user interface implementation, but also how the subject of mediation constrains and influences what we implement.

References

1. Abdul, A., Vermeulen, J., Wang, D., Lim, B. Y., & Kankanhalli, M. (2018). Trends and trajectories for explainable, accountable, intelligible systems: an HCI research agenda. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

2. Cho, D., & Kwon, K. H. (2015). The impacts of identity verification and disclosure of social cues on flaming in online user comments. Computers in Human Behavior.

3. Erickson, T., & Kellogg, W. A. (2000). Social translucence: an approach to designing systems that support social processes. ACM Transactions on Computer-Human Interaction (TOCHI), 7(1), 59-83.

4. Fussell, S. R., & Setlock, L. D. (2014). Computer-mediated communication. Handbook of Language and Social Psychology. Oxford University Press, Oxford, UK, 471-490.

5. McLuhan, M. (1994). Understanding media: The extensions of man. MIT Press.

6. Olson, G. M., & Olson, J. S. (2000). Distance matters. Human-Computer Interaction.

7. Rader, E., Cotter, K., & Cho, J. (2018). Explanations as mechanisms for supporting algorithmic transparency. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

8. Rosenfeld, L., & Morville, P. (2002). Information architecture for the world wide web. O'Reilly Media, Inc.

9. Woodruff, A., Fox, S. E., Rousso-Schindler, S., & Warshaw, J. (2018). A qualitative exploration of perceptions of algorithmic fairness. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).