The power of information

My great grandfather was a saddle maker and farmer in Horslunde, on the poor island of Lolland, Denmark. In the 1850’s, his life was likely defined by silence. He quietly worked on saddles in a shed, working with his hands to shape and mold cowhide. He tended to his family’s one horse. Most of his social encounters were with his wife, children, and perhaps the small community church of a few dozen people each Sunday. I’m sure there was drama, and gossip, and some politics, but those likely happened on the scale of weeks and months, as communication traveled slowly, by mouth and paper. His children—my grandfather and his siblings—likely played in the quiet grassy flat fields in the summer, far from anyone. Life was busy and physically demanding, but it was quiet.

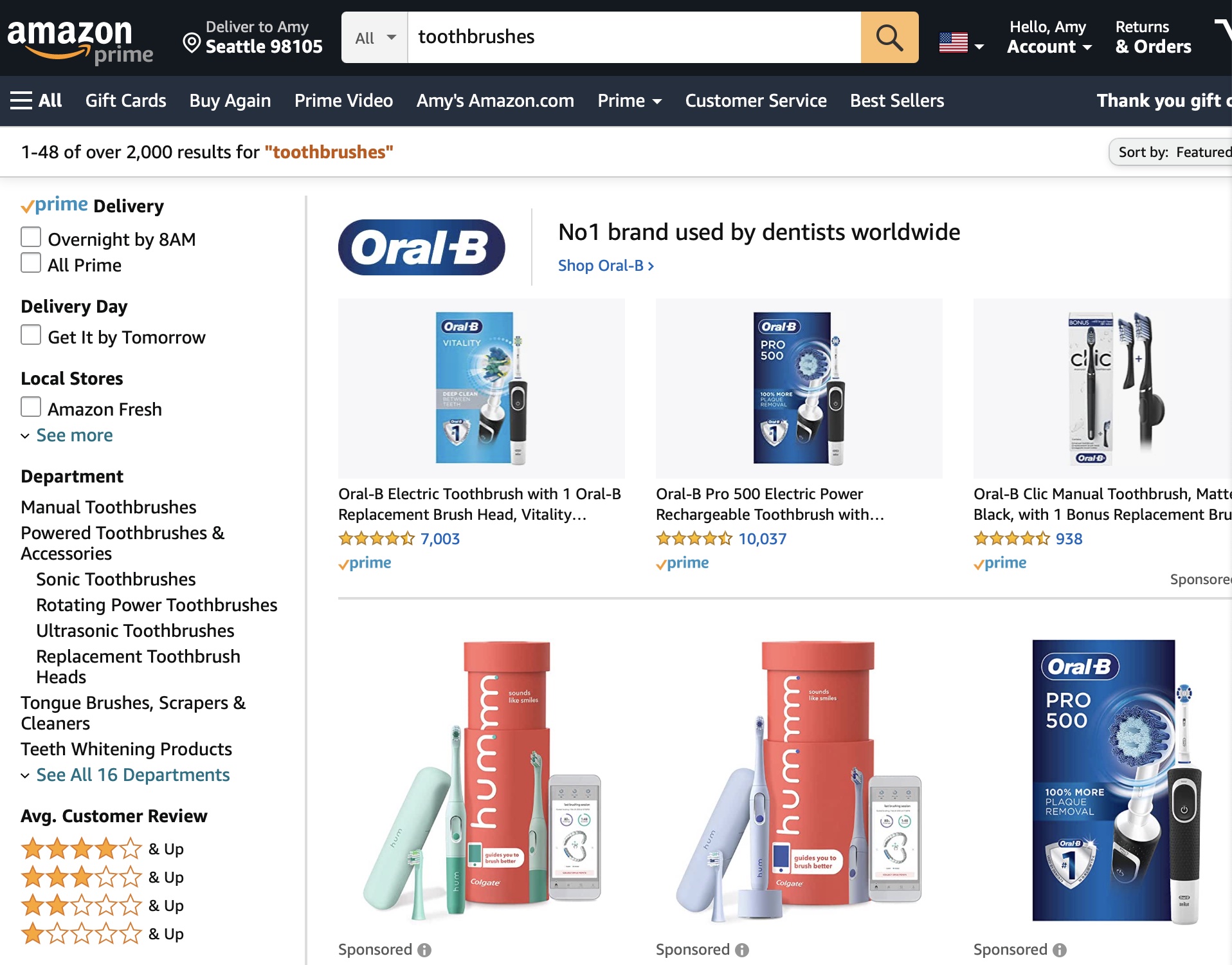

My life, in contrast, is noisy. As a professor, my research connects me with people across North and South America, all of Europe, and much of Asia and Australasia. I receive hundreds of emails each day, I see thousands of tweets, I might have a dozen friends and family message me on a dozen different platforms, causing my smart watch to gently vibrate every few minutes. My news aggregator pushes hundreds of stories to me, Facebook and LinkedIn share hundreds of updates from friends, family, and colleagues. The moment I’m bored, I have limitless games, television, movies, podcasts, magazines, comics, and books to choose from. I might spend just as much time using search engines and reading reviews to help decide what information to consume as I do actually consuming it. If my Danish grandparent’s lives were defined by quiet focus, mine is defined by clamorous distraction.

Despite these dramatic differences in the density and role of information in our two lives, information was very much present in both of them. My grandfather’s information was about livestock, weather, and the grain and quality of leather; my information is about knowledge, ideas, and politics. In either of our lives, has information been any more or less important or necessary? My grandfather needed it to sell saddles and I need it to educate and discover. I may have access to more of it, but it’s not obvious that this is better—just different.

What these two stories show is that information was, is, and always will be necessary to human thriving. In fact, some describe humanity as informavores , dependent on finding patterns, predictions, and order in an increasingly entropic, chaotic world 4 4 George A. Miller (1983). Informavores. The Study of Information: Interdisciplinary Messages.

Of course, some information is less necessary and powerful than other information. Much of the internet has little value; in fact, content on the web is increasingly generated by algorithms, which read and recombine existing writing to produce things that appear novel, but in essence are not. Most of the media produced does not change lives, but serves merely to resolve boredom. Our vibrant cities are noisier than ever, but most of that noise has no meaning or value to us. And the news, while more accessible than ever, is increasingly clickbait, with little meaningful journalistic or investigative content.

If it’s not the abundance of information that makes it powerful, what is it?

What is powerful about information?

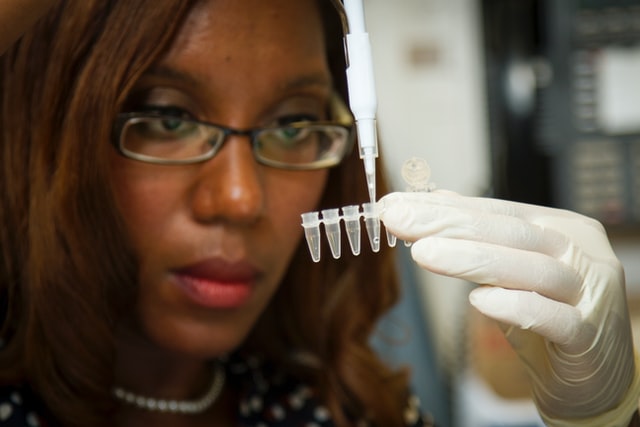

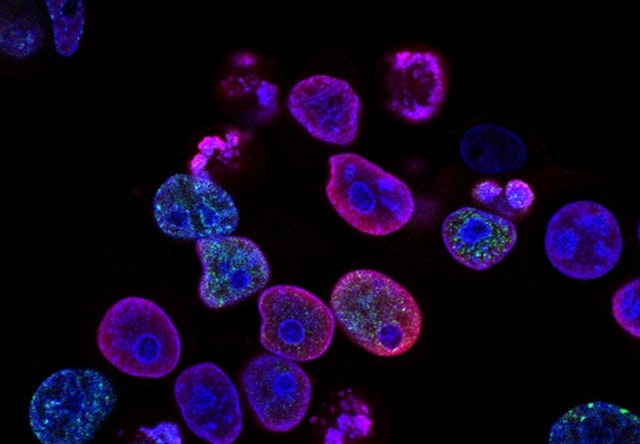

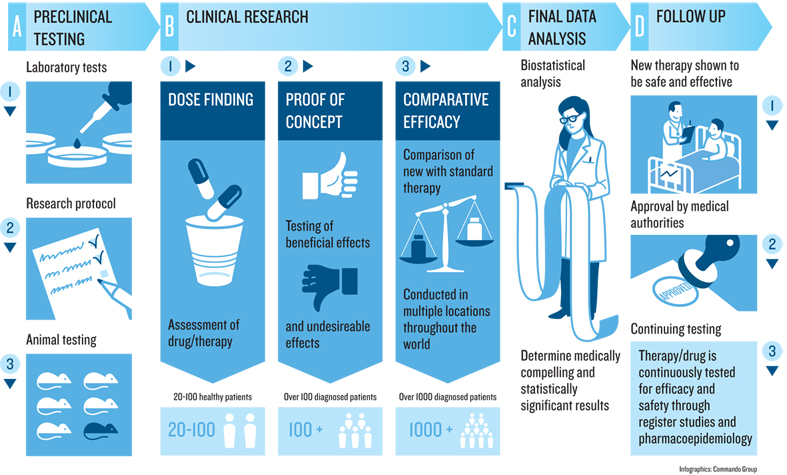

The power of information comes from how it is situated in powerful contexts. Consider, for example, the SARS-CoV-2 Pfizer vaccine, which is one of many vaccines that will help build and sustain global immunity to the dangerous virus. This is a powerful, innovative vaccine that is fundamentally information: it encodes how to construct the “spike” of SARS-CoV-2 found on the surface of the coronavirus that causes COVID-19. When that information, stored as messenger RNA, is injected into our bodies, our cells read those mRNA instructions, assemble the spike protein, and our immune systems, rich databases of safe and dangerous proteins, detect the spike as a new foreign body, and add it to their database of substances to attack. The next time our immune systems see that spike, they are ready to act and destroy the virus before it replicates and causes COVID-19 symptoms. Information, therefore, keeps us healthy .

There is also power behind the origins of that DNA sequence. In February and March 2020, an international community of American and Chinese researchers worked together to model, describe, and share the structure of the SARS-CoV-2 spike. Their research depended on computers, on DNA sequencers, on the internet for distribution of knowledge, and on the free movement of information across national boundaries in academia. All of these information systems, and the centuries of research on vaccines, DNA, and computing, were necessary for making the vaccine possible in record time. The power of the vaccine, then, isn’t just about an mRNA sequence, but an entire history of discovery, innovation, and institutions that use information to understand nature. Information, therefore, fuels discovery .

Stories of the power of information abound in history 2 2 Joseph Janes (2017). Documents That Changed the Way We Live. Rowman & Littlefield.

Prior to 1592, there was no standard calendar in western civilization, and so it was hard to discuss time. The declaration of Pope Gregory XIII defined months, weeks, years, and days for Europe—and due to the rampant colonialism that followed—for most of the world 5 5 Gordon Moyer (1982). The Gregorian Calendar. Scientific American.

As European colonialism waned and modern democracies emerged, information was a central force in shaping new systems of government. For example, the Declaration of Independence, released on July 4th, 1776, can be thought of simply as a one page letter to the Kingdom of Great Britain, declaring the British colonies as sovereign states. But it was far more than a letter: it led to the Revolutionary War, the decline of power in Great Britain, and the writing of the U.S. Constitution 1 1 Max Edling (2003). A Revolution in Favor of Government: Origin of the U.S. Constitution and the Making of the American State. OUP USA.

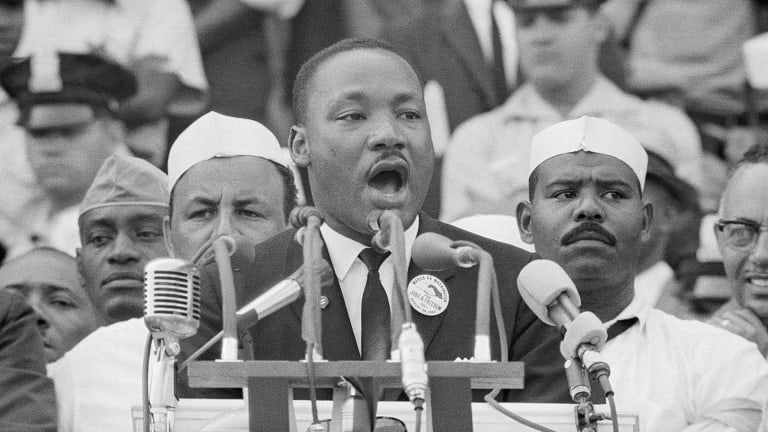

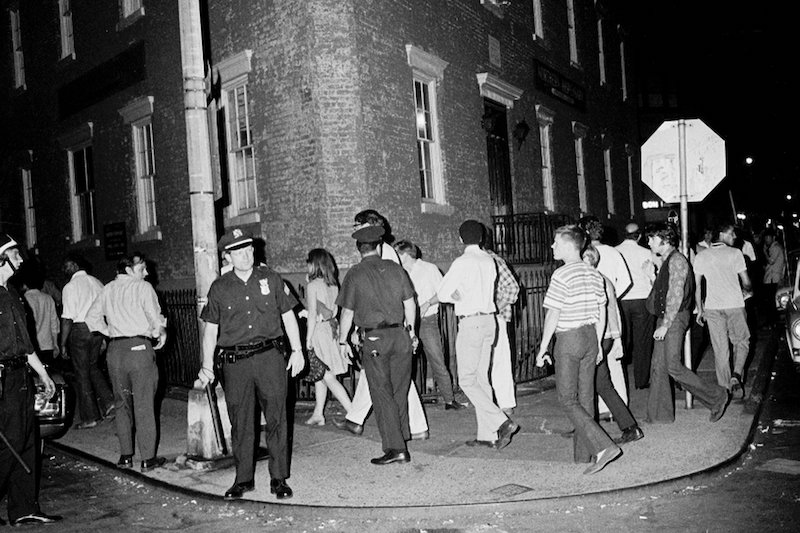

Some of these injustices in the United States led to information that changed the course of civil rights. For example, Martin Luther King, Jr’s 1963 speech, I have a dream , conveyed a vision of the United States without racism 7 7 Eric J. Sundquist (2009). King's Dream. Yale University Press.

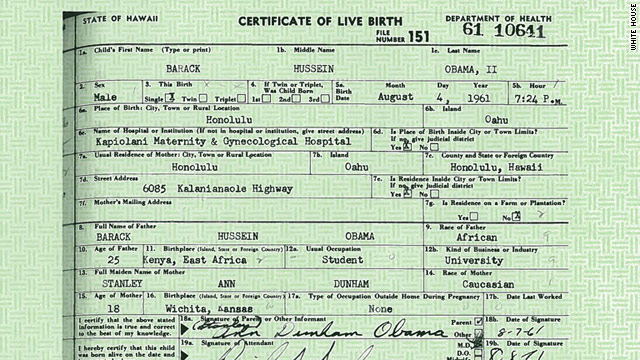

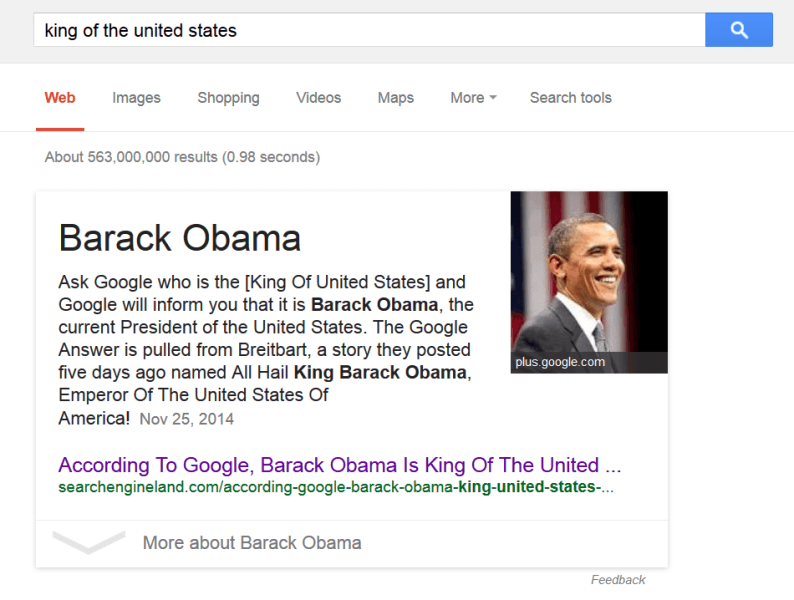

Throughout this history of human and civil rights, a central challenge has been defining identity. Who is American? How does one prove they were born here? Documents like birth certificates, filled out and signed at the time and place of birth, become powerful media for laying claim to the rights of citizenship. Nowhere was this more apparent than in President Trump’s baseless conspiracy theories of President Obama’s citizenship 6 6 Vincent N. Pham (2015). Our foreign president Barack Obama: The racial logics of birther discourses. Journal of International and Intercultural Communication.

In our 21st century, identity is just one part of the much larger way that we use information to communicate. When we express our views on social media, they are connected to our identities, and shape how people see us and interpret our motives and intents. For example, consider this moment in 2017, when long-time U.S. Senator John McCain stood in front of his senate colleagues, who awaited his up or down vote on whether to repeal the Affordable Care Act. His thumbs down, a simple non-verbal information signal, shocked the senate floor, reinforced his “maverick” reputation, and preserved the health care of millions of people. Information, therefore, binds us.

The illusory power of technology

Information, of course, is woven through all of our interactions with people and nature, and so it does much more than just the things above. It helps us diagnose and explain problems, it archives what humanity has discovered, it entertains us, it connects us, and it gives us meaning.

But the power of information, therefore, derives more from its meaning, its context, and how it is interpreted and used, and less from how it is transmitted. Moreover, it’s not information itself that is powerful, but the potential for action that information can create that is powerful: after all, having information does not necessarily mean understanding it, and understanding it does not necessarily mean acting upon it. It is easy to forget this, especially in our modern world, where information technology like Google, the internet, and smartphones clamor for our attention, and seem to be responsible for so much in society. It’s easy to confuse information technology for the cause of this power. Consider, for example, Google search, an inescapable fixture in how the modern world finds information. What is Google search without content? Merely an algorithm that finds and organizes nothing. Or, think about using your smartphone without an internet connection or without anyone to talk to. Of what value is its microprocessor, its memory, and its applications if there is no content to consume and no people to connect with? Information technology is, ultimately, just a vessel for information to be stored, retrieved, and presented—there is nothing intrinsically valuable about it. And information itself has a similar limit to its power: without people to understand and act upon it, it is inert.

This perspective challenges Marshall McLuhan’s famous phrase, “the medium is the message” 3 3 Marshall McLuhan (1964). Understanding Media: The Extensions of Man. Routledge, London.

In the rest of this book, we will try to define information, demarcating its origins, explaining its impacts, deconstructing our efforts to create and share it, and problematizing our use of technology to store, retrieve, and present it. Throughout, we shall see that while information is powerful in its capacity to shape action, and information technology can make it even more powerful, information can also be perilous to capture and share without doing great harm and injustice.

Podcasts

The podcasts below all reveal the power of information in different ways.

- The Other Extinguishers (Act 2), Boulder v. Hill, This American Life. Shows how a long, collaborative history of science led to the DNA sequencing of the SARS-CoV-2 “spike” in just 10 minutes ( transcript )

- Electoral College Documents, 2020 , Documents that Changed the World. Discusses how the U.S. electoral college system actually determine transfer of power in United States elections.

- Statistical Significance, 1925 , Documents that Changed the World. Discusses the messy process by which scientific certainty is established.

References

-

Max Edling (2003). A Revolution in Favor of Government: Origin of the U.S. Constitution and the Making of the American State. OUP USA.

-

Joseph Janes (2017). Documents That Changed the Way We Live. Rowman & Littlefield.

-

Marshall McLuhan (1964). Understanding Media: The Extensions of Man. Routledge, London.

-

George A. Miller (1983). Informavores. The Study of Information: Interdisciplinary Messages.

-

Gordon Moyer (1982). The Gregorian Calendar. Scientific American.

-

Vincent N. Pham (2015). Our foreign president Barack Obama: The racial logics of birther discourses. Journal of International and Intercultural Communication.

-

Eric J. Sundquist (2009). King's Dream. Yale University Press.

The peril of information

When I was in high school, I was a relatively good student. All but one of my grades were A’s, I was deeply curious about mathematics and computer science, and I couldn’t wait to go to college to learn more. My divorced parents weren’t particularly wealthy, and so I worked many part-time jobs to save up for college applications and AP exam fees, bagging groceries, babysitting, and tutoring. It didn’t leave much time for extracurriculars. I was sure that colleges would understand my circumstances and see my promise. But when the decisions came back, I’d only been admitted to two places: my public state university, and the University of Washington, but the latter only offered a $500 loan, and I had no college savings. My dreams were shattered because the committee ignored the context of the information I gave them: that I had no time to stand out in any other way beyond grades because I was busy working, supporting my family.

Familiar stories like this show that not all information is good information or used in good ways. In fact, as powerful as information is, there is nothing about power that is inherently good either. In fact, power can be perilous. The admissions committees at my dream universities had great power to shape my fate through their decisions, and the information they asked me to share didn’t allow me to tell my whole story. And my lack of guidance from school counselors, teachers, and parents meant that I didn’t know what information to give to increase my chances. The committee’s decisions, while quite a powerful form of information, were therefore in no way neutral. In fact, behind their decisions were a particular set of values and notions of merit that shaped the information they requested, the information they provided, and the decision they sent me in thin envelopes in the mail.

With power comes peril

What is power? Within the context of society, powerpower: The capacity to influence and control others. is capacity to influence the emotions, behavior, and opportunities of other people. Power and information are intimately related in that information is itself a form of influence. When you listen to someone speak or read someone’s writing, the information they convey can shape our thoughts and ideas. When you take up physical space and give non-verbal cues of a reluctance to move, you are signaling information about what physical threats you might impose. When you share some information on social media, you are helping an idea find its way into other people’s minds. All of these forms of communication are therefore forms of power.

But power is not just communication. Power also resides in our knowledge and beliefs, shaped by information we received long ago. As children, for example, many of us learn ideas of racism, sexism, ableism, transphobia, xenophobia, and other forms of discrimination, fear, or social hierarchy. These ideas, beliefs, and assumptions form systems of power, in which those high in social hierarchies can influence who has rights, resources, and opportunity. Sociologist Patricia Collins called these forms of power, and their many interactions, the matrix of dominationmatrix of domination: The system of culture, norms, and laws designed to maintain particular social hierarchies of power. 1 1 Patricia Collins (1990). Black feminist thought: Knowledge, consciousness, and the politics of empowerment. Routeledge.

D’Ignazio and Klein, in their book Data Feminism 2 2 Catherine D'Ignazio, Lauren F. Klein (2020). Data Feminism. MIT Press.

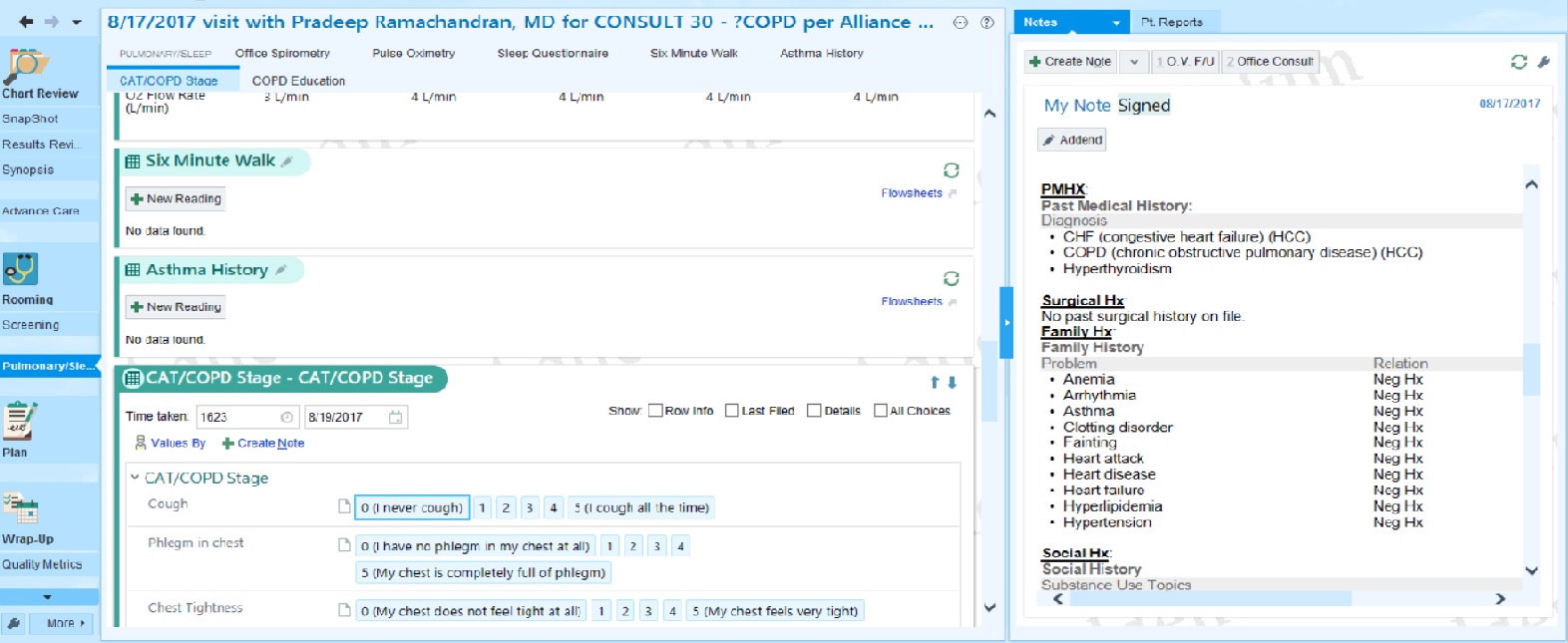

Consequences of information

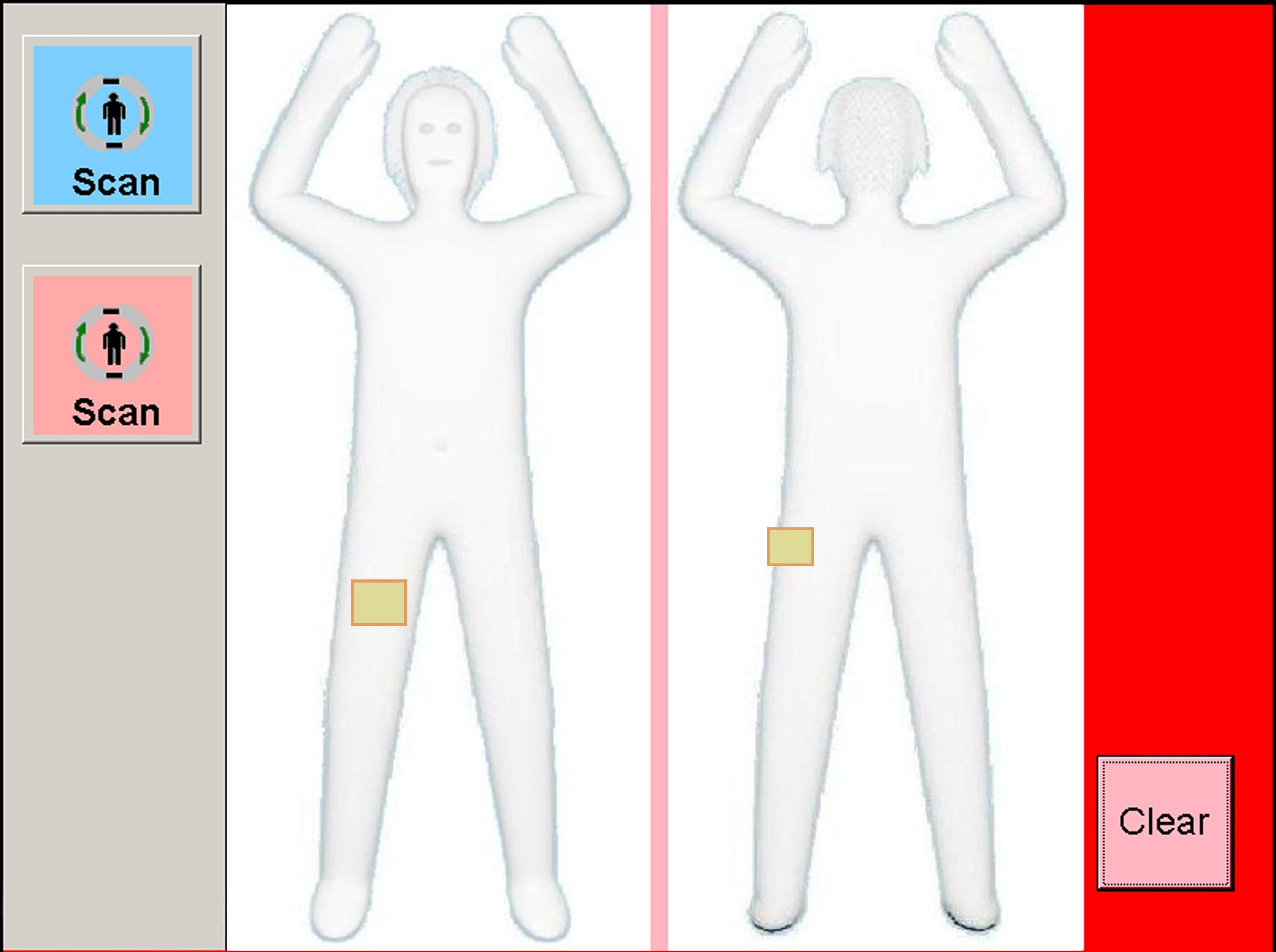

Let’s consider some of the many ways that information and its underlying values can be perilous. One seemingly innocuous problem with information is that it can misrepresent . For example, when I go to an airport in the United States, I often have to be scanned by a TSA body scanner. This scanner creates a three dimensional model of the surface of my body using x-ray backscatter. The TSA agent then selects male or female, and the algorithm inside the scanner compares the model of my body to a machine learned model of “normal” male and female bodies, based on a data set of undisclosed origin. If it finds discrepancies between the model of my body and its model of “normal,” I am flagged as anomalous, and then subjected to a public body search by an agent. For most people, the only time this will happen is if there is something that looks like a gun to the scanner. But many transgender people such as myself, as well as other people whose bodies do not conform to stereotypically gendered shapes such as those with disabilities, are frequently flagged and searched. This discrimination, in which “normal” bodies are protected by security, and “anomalous” bodies are invasively searched and sometimes humiliated, derives from how the TSA scanners and the TSA agents misrepresent the actual diversity of human bodies. They misrepresent this diversity because of the matrix of domination that excludes gender non-conforming and disabled people from consideration in design.

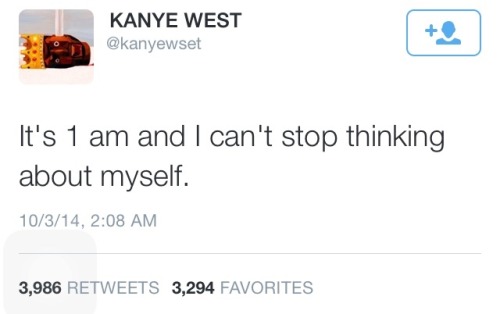

Information can also be false or misleading, misinforming its recipient. This was perhaps no more apparent during President Trump’s 2016-2020 term, in which he wrote countless tweets that were demonstrably false about many subjects, including COVID-19. For example, on October 5th, 2020, just after being released from the hospital a few days after testing positive for the virus, he tweeted:

I will be leaving the great Walter Reed Medical Center today at 6:30 P.M. Feeling really good! Don’t be afraid of Covid. Don’t let it dominate your life. We have developed, under the Trump Administration, some really great drugs & knowledge. I feel better than I did 20 years ago!

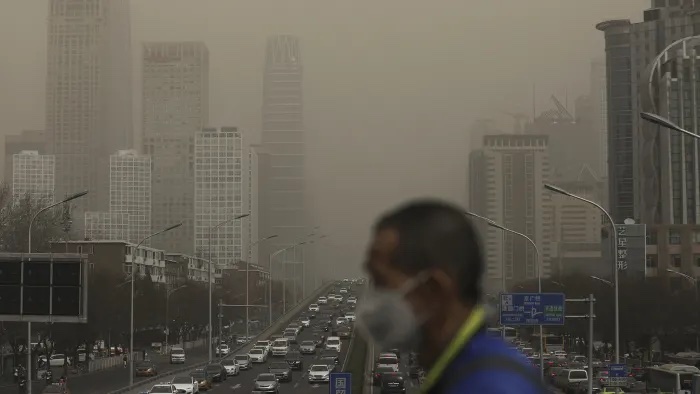

Whether or not the President was intentionally giving bad advice, or this was simply fueled by his steroid injection, its impact was clear, as the tweet was shortly followed by the Autumn 2020 wave of infections in the United States, fueled by conspiracy theories framing the virus as a hoax, and pressure on state and local officials to avoid stricter public health rules. Hundreds of thousands of people died in the U.S., likely due partly to the President’s persistent spreading of misleading information about the virus and many years of similar misinformation about vaccines, spread by parents who did not understand or believe the science of immunology.

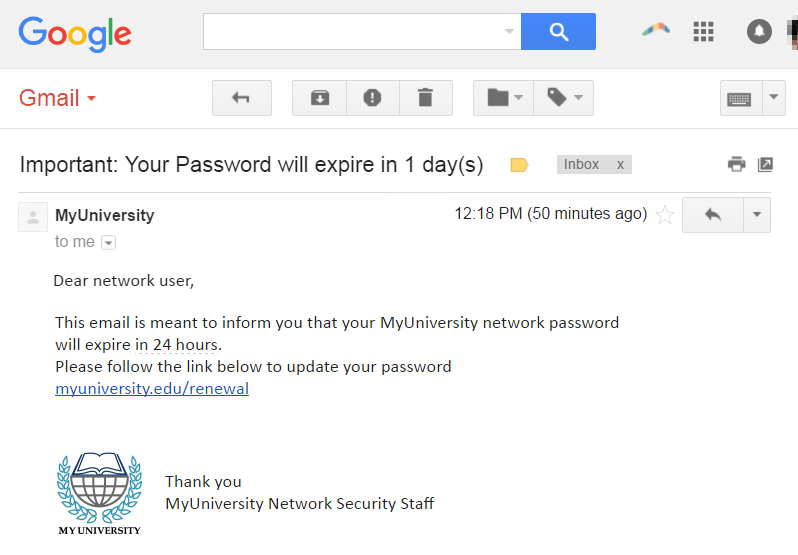

Information can also disinform . Unlike misinformation, which is independent of intent, disinformation is information that people know to be false, and spread in order to influence behavior in particular ways. For example, many states in the United States require doctors who are administering abortions to lie to patients about the effects of abortion; the intent of these laws is framed as informed consent, but many legislators have admitted that their actual purpose is to disincentivize people from following through on abortions. Similarly, QAnon conspiracies , have created even larger collective delusions that lead to entirely alternate false realities. Disinformation, then, is a form of power and influence through deception.

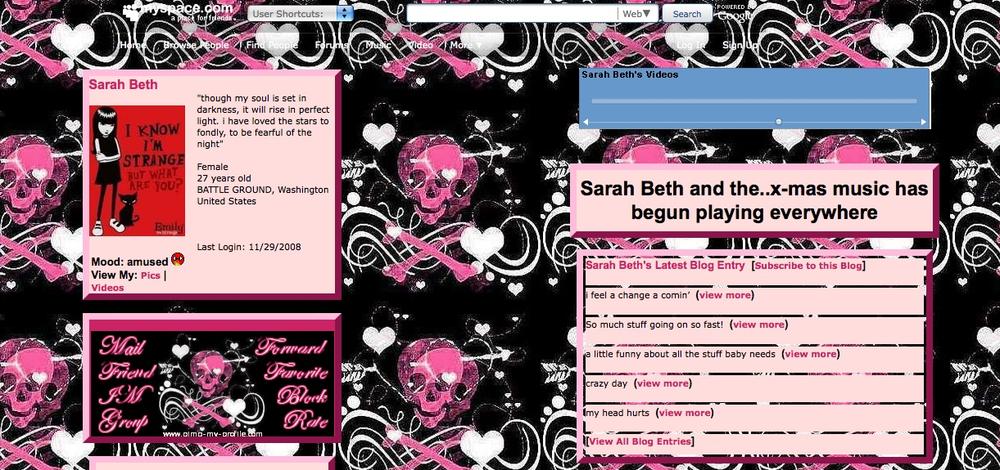

Some information may be true, but may be created to manipulate , by misrepresenting its purpose. For example, in the video above, technologist Jaron Lanier discusses how social media platforms like Facebook and Instagram (also owned by Facebook) are fundamentally about selling ads. Facebook is not presented this way—in fact, it is rather innocuously presented as a way to stay connected with friends and family—but its true purpose is to ensure that when an advertisement is shown in a social media feed that we attend to it and possibly click on it, which makes Facebook money. Similarly, a “like” button is presented as a social signal of support or affirmation, but its primary purpose is to help generate a detailed model of our individual interests, so that ads may be better targeted towards us. Independent of whether you think this is a fair trade, it is fundamentally manipulation, as Facebook misrepresents the motives of its features and services.

Information can also overload 4 4 Benjamin Scheibehenne, Rainer Greifender, Peter M. Todd (2010). Can There Ever Be Too Many Options? A Meta‐Analytic Review of Choice Overload. Journal of Consumer Research.

Sometimes, information can be addictive 3 3 Samaha, M., & Hawi, N. S. (2016). Relationships among smartphone addiction, stress, academic performance, and satisfaction with life. Computers in Human Behavior.

Samaha, M., & Hawi, N. S. (2016). Relationships among smartphone addiction, stress, academic performance, and satisfaction with life. Computers in Human Behavior.

Some recent research has even shown that ease of accessing information can lead to isolation 5 5 Sherry Turkle (2011). Alone together: Why we expect more from technology and less from ourselves. MIT Press.

Information can also kill . Consider, for example, the case of the Uber autonomous driving sensor system . In March of 2018, it was driving down a highway with a human driver monitoring it. Elaine Herzberg, a pedestrian, was crossing the road with her bike at 10 p.m. The driver was not impaired, but also was not monitoring the system. The system noticed an obstacle, but could not classify it; then, it classified it as a vehicle; then as a bicycle. And finally, 1.3 seconds before impact, the system determined that an emergency braking maneuver was required to avoid a collision. However, this mode was disabled during automated driving mode, and the driver was not notified, so the car struck and killed Elaine. This critical bit of information—danger!—was never sent to the driver, and since the driver was not paying attention, someone died. Beyond automation, errors in information can kill in any safety-critical context, including flight, health care, and social safety nets that provide food, shelter, and security.

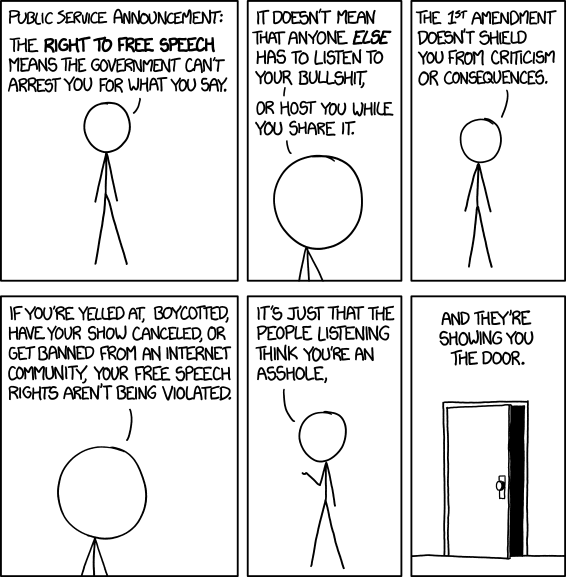

These, of course, are not the only potential problems with information. The world has an ongoing struggle with the tensions of free speech, censorship, and the many ways we have discussed above that information can do harm. With the ability of the internet to archive much of our past, there are also many open questions about what rights we have to erase information stored on other people’s computers that might tie us to a past life, a past action, or a past name or identity. These and the numerous many other questions, reinforce that information has values, and those values are intrinsically tied to the ways that we exercise control over each other.

Podcasts

The podcasts below all reveal the peril of information in different ways.

- Family Stories, Family Lies , Code Switch. Shares one story of how the origins of a family name are far more complicated than one can ever imagine, capturing a multi-generation history of love and oppression.

- A Conspiracy Theory Proved Wrong, The Daily, The New York Times . Discusses the history of the QAnon conspiracy and its aftermath after its predictions did not come true.

- No Silver Bullets, On the Media, WNCY Studios . Discusses how information and propaganda has led to far-right extremism, and how information is also used to deradicalize.

- Facebook’s CTO on Misinformation, In Machines We Trust . Facebook’s CTO describes Facebook’s struggling efforts to detect misinformation and prevent harm.

- Undercover and Over-Exposed, On the Media . Discusses the risks of freely sharing information with journalists.

References

-

Patricia Collins (1990). Black feminist thought: Knowledge, consciousness, and the politics of empowerment. Routeledge.

-

Catherine D'Ignazio, Lauren F. Klein (2020). Data Feminism. MIT Press.

-

Samaha, M., & Hawi, N. S. (2016). Relationships among smartphone addiction, stress, academic performance, and satisfaction with life. Computers in Human Behavior.

-

Benjamin Scheibehenne, Rainer Greifender, Peter M. Todd (2010). Can There Ever Be Too Many Options? A Meta‐Analytic Review of Choice Overload. Journal of Consumer Research.

-

Sherry Turkle (2011). Alone together: Why we expect more from technology and less from ourselves. MIT Press.

Data, information, knowledge

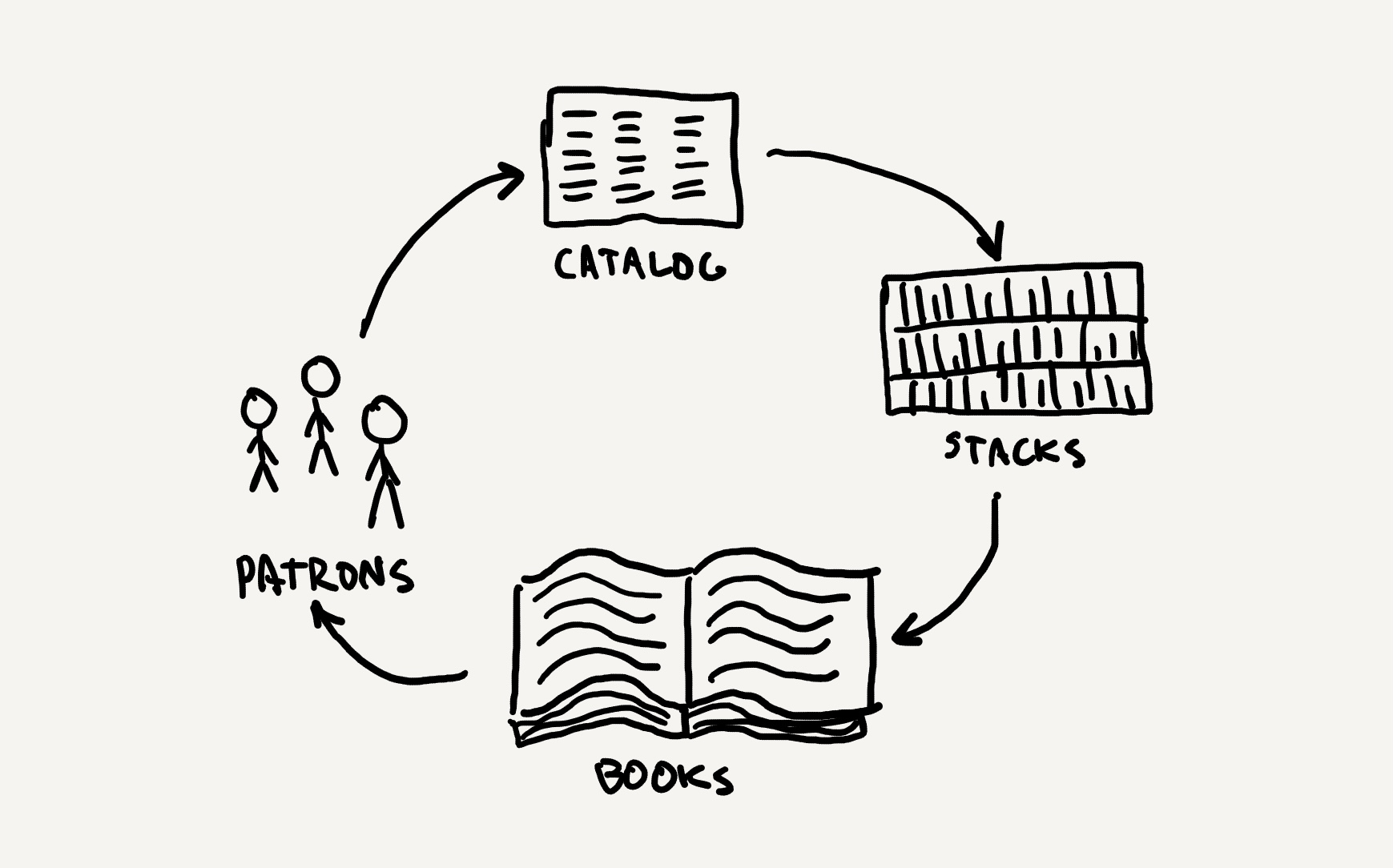

When I was a child in the 1980’s, there was no internet, there were no mobile devices, and computers were only just beginning to reach the wealthiest of homes. My experience with information was therefore decidedly analog. I remember my three primary sources of information fondly. First, a few times a month, my mother would take my brother and I to our local public library, and we would browse, find a pile of books that captured our attention, and then simply sit, in silence, and read together for hours. Eventually, we would get hungry, and we would check out a dozen books, and then devour them at home together for the next few weeks, repeating the cycle again. My second source was the newspaper. Every morning, my father would leave early in the morning to get a donut and coffee, and go to the local newspaper rack on the street to buy a copy of The Oregonian for a nickel, a dime, or a quarter. Sometimes I would join him and get a donut myself, and then we would come home, eating donuts together while he read the news and I read the comics. My third source was magazines. In particular, I subscribed to 3-2-1 Contact , a science, technology, and math education magazine that accompanied the 1980 broadcast television show. The monthly magazine came with fiction, non-fiction, math puzzles, and even reader submissions of computer programs written in the BASIC programming language—type them in and see what happens! I would run out to our mailbox every morning near the beginning of the month to see if the latest issue had come. And when it did, it consumed the next week of my free time.

Of course, this analog world was quickly replaced with digital. Forty years later, the embodied joy of reading books, news, and magazines with my family, and the wonderful anticipation that came with having to wait for information, was replaced with speed. I still read books, but I click a button to get them on my tablet instantaneously. I still read the news, but I scroll through a personalized news feed at breakfast, with little sense of shared experience with family. And I still read magazines, but on a tiny smartphone screen, whenever I want, which is rarely. Instead, I fall mindlessly into the infinite YouTube rabbit hole, with no real sense of continuity, anticipation, or wonder. Computer science imagined a world in which we could get whatever information we want, whenever we want, and then realized it over the past forty years. And while the words I can find in these new media are the same kind as those forty years ago, somehow, the experience of this information just isn’t the same.

This change in media begs an important question: what is information? We can certainly name many things that seem to contain it: news, books, and magazines, like above, but also movies, speeches, music, data, talking, writing, and perhaps even non-verbal things like sign language, dancing, facial expressions, and posture. Or are these just containers for information and the information itself is things like words, images, symbols, and bits? And what about things in nature, like DNA? Information seems to be everywhere—in nature, in our brains, in our language, and in our technology—but can something that seems to be in everything be a useful idea?

What is information?

This is a question that Michael Buckland grappled with in 1991 2 2 Michael K. Buckland (1991). Information as thing. Journal of the American Society for information Science.

- One idea was as information as a process , in which a person becomes informed and their knowledge changes. This would suggest that information is not some object in the world, but rather some event that occurs in the world, in the interaction between things. The challenge with this notion of information is that process is situational and contextual: the door in my office, to me, might not be informational at all, it might just play the role of keeping heat inside. But to someone else, the door being closed might be informational, signaling my availability. From a process perspective, the door itself is not information, but particular people in particular situations may glean different information from the door and its relation to other social context about its meaning. If information is process, then anything can be information, and that doesn’t really help us define what information is.

- Another idea that Buckland explored was information as knowledge . This notion of information makes it intangible, as knowledge, belief, opinion, and ideas are personal, subjective, and stored in the mind. The only way to access them is for that knowledge to be communicated in some way, through speech, writing, or other signal. For example, I know what it feels like to be bored, but communicating that feeling requires some kind of translation of that feeling into some media (e.g., me posting on Twitter, “I’m bored.”).

- The last idea that Buckland explored was information as thing . Here, the idea was that information is different from knowledge and process in that it is tangible, observable, and physical. It can be stored and retrieved. The implication of this view is that we can only interact with information through things, and so information might as well just be the things themselves: the books, the magazines, the websites, the spreadsheet, and so on.

Buckland was not the only one to examine what information might be. Another notable perspective came from Gregory Bateson in his work Form, Substance, and Difference 1 1 Gregory Bateson (1970). Form, substance and difference. Essential Readings in Biosemiotics.

What we mean by information - the elementary unit of information - is a difference which makes a difference, and it is able to make a difference because the neural pathways along which it travels and is continuously transformed are themselves provided with energy... But what is a difference? A difference is a very peculiar and obscure concept. It is certainly not a thing or an event. This piece of paper is different from the wood of this lectern. There are many differences between them-of color, texture, shape, etc. But if we start to ask about the localization of those differences, we get into trouble. Obviously the difference between the paper and the wood is not in the paper; it is obviously not in the wood; it is obviously not in the space between them, and it is obviously not in the time between them.

Gregory Bateson (1970). Form, substance and difference. Essential Readings in Biosemiotics.

Bateson’s somewhat obtuse thought experiment is a slightly different idea than Buckland’s process , knowledge , and thing notions of information: it imagines the world as full of noticeable differences , and that those differences are not in the things themselves, but in their relationships to each other. For example, in DNA, it is not the cytosine, guanine, adenine, or thymine themselves that encode proteins, but the ability of cells to distinguish between them. Or, to return to the earlier example of my office door, it is not the door itself that conveys information, but the the fact that the door maybe open, closed, slightly ajar—those differences, and knowledge of them, are what allow for the door to convey information. Whether the “difference” encoded in the sequence of letters is conveyed by in a print magazine or a digital one, the differences are conveyed nonetheless, suggesting that information is less about medium than it is the ability of a “perceiver” to notice differences in that medium.

In 1948, well before Buckland and Bateson were theorizing about information conceptually, Claude Shannon was trying to understand information from an engineering perspective. Working in signal processing at Bell Labs, he was trying to find ways of transmitting telephone calls more reliably. In his seminal work, 8 8 Claude E. Shannon (1948). A mathematical theory of communication. The Bell System Technical Journal.

Another way to think about Shannon’s entropic idea of information is through probability: in the first example there’s only one way toss four heads so the probability is low. In contrast, in the second sequence, you have a high probability of getting half heads and half tails and could get those flips in many different ways: two heads then two tails, or one head followed by two tails followed by one head, and so on. The implication of these ideas is that the more rare “events” or “observations” in some phenomenon, the more information there is.

A third way to think about Shannon’s idea was that the the amount of information in anything is inversely related to is compressability . For example, is there a shorter way to say “1111111111”? We might say “ten 1’s”. But is there a shorter way to say “1856296289”? As a prime number, no. Shannon took this notion of compressibility to the extreme, observed that a fundamental unit of difference might be called a bit : either something is or isn’t, 1 or 0, true or false. He postulated that all information might be encoded as bit sequences and that the more compressible a bit sequence was, the less information content it has. This idea, of course, went on to shape not only telecommunications, but mathematics, statistics, computing, and biology, and enabled the modern digital world we have today.

Data, Information, and Knowledge

The nuance, variety, and inconsistency of all of these ideas bothered some scholars, who struggled to reconcile these definitions. Charles Meadow and Weijing Yuan tried to create some order on these concepts in their 1997 paper, Measuring the impact of information: defining the concepts 5 5 Charles Meadow, Weijing Yuan (1997). Measuring the impact of information: defining the concepts. Information Processing & Management.

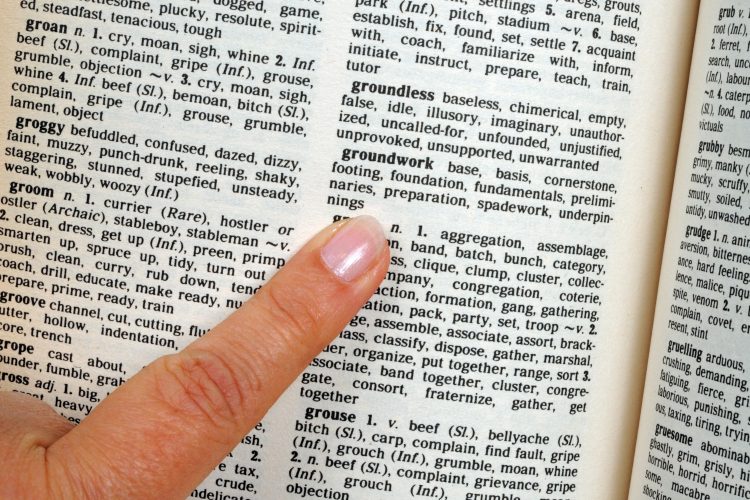

- Datadata: Analog or digital symbols that someone might perceive in the world and ascribe meaning. are a set of “symbols”, broadly construed to include any form of perceptible difference in the world. In this definition, the individual symbols have potential for meaning, but that they may or may not be meaningful or parsable to a recipient. For example, the binary sequence 00000001 is indeed a set of symbols, but you as the reader do not know if they encode the decimal number 1, or some message about cats, encoded by youth inventing their own secret code. A hand gesture with five fingers stretched out in a plane might mean someone is stretching their fingers or a non-verbal signal meant to get someone’s attention. In the same way, this entire chapter is data, in that it is a sequence of symbols that likely has much meaning to those fluent in English, but very little meaning to those not. Data, as envisioned by Shannon, is an abstract message, which may or may not have informational content.

- Informationinformation: The process of receiving, perceiving, and interpreting data into knowledge. , in Meadow and Yuan’s definition, is realization of the informational potential of data: it is the process of receiving, perceiving, and translating data into knowledge. The distinction from data, therefore, is a subtle one. Consider this bullet point, for example. The data is the sequence of Roman characters, stored on a web server, delivered to your computer, and rendered by your web browser. Thus far, all of this is data, being transmitted by a computer, and translated into different data representations. The process of you reading this English-encoded data, and comprehending the meaning of the words and sentences that it encodes, is information. Someone else might read it and experience different information.

- Knowledgeknowledge: An interconnected system of information in a mind. , in contrast to information, is what comes after the process of perceiving and interpreting data. It is the accumulation of information received by a particular entity. The authors do not get into whether that entity must be human—can cats have knowledge, or even single-cell organisms, or even non-living artifacts?—but this idea is enough to distinguish information from knowledge.

Some scholars have extended this framework to also include wisdom 7 7 Jennifer Rowley (2007). The wisdom hierarchy: representations of the DIKW hierarchy. Journal of Information and Communication.

Milan Zeleny (2005). Human Systems Management: Integrating Knowledge, Management and Systems. World Scientific.

One challenge with all of these conception of data, information, and knowledge, is the broader field of epistemologyepistemology: The study of how we know we know things. , which is a branch of philosophy concerned with how we know that know things. For example, one epistemological position called logical positivism is that we know things through logic, such as formal reasoning or mathematical proofs. Another stance called positivism , otherwise broadly known as empiricism, and widely used in the sciences, argues that we know things through a combination of observation and logic. Postpositivism takes the same position as positivism, but argues that there is inherent subjectivity and bias in sensory experience and reasoning, and only by recognizing our biases can we maintain objectivity. Interpretivism largely abandons claims of objectivity, arguing that all knowledge involves human subjectivity, and instead frames knowledge as subjective meaning. These and the many other perspectives on what knowledge is complicate simple classifications of data, information, and knowledge, because they question what it means to even know something.

Emotion and Context

These many works that attempt to define information largely stem from mathematical, statistical, and organizational traditions, and have sought formal, abstract definitions amenable for science, technology, and engineering. However, other perspectives on information challenge these ideas, or at least complicate simplistic notions of “messages”, “recipients”, and “encoding”. For example, consider the work of behavioral economists 4 4 Jennifer Lerner (2015). Emotion and decision making. Annual Review of Psychology.

An example of such bias is confirmation bias 6 6 Raymond Nickerson (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology.

A second aspect of information often overlooked by mathematical, symbolic definitions of information is contextcontext: Social, situational information that shapes the meaning of information being received. . This idea of context is implied in Bateson’s notion of difference, Buckland’s notion of information being a thing in a context, and implicit in Meadow and Yuan’s formulation in the recipient’s perception of information. But in all of these definitions, and in the work on the role of emotions in decisions, context appears to play a powerful role in shaping what information means, perhaps even more powerful than whatever data is encoded, or what emotions are at play in interpreting data. For example, in 1945, novelist and cultural critical Michael Ventura, said:

Without context, a piece of information is just a dot. It floats in your brain with a lot of other dots and doesn’t mean a damn thing. Knowledge is information-in-context … connecting the dots.

To illustrate his point, consider, for example, this sequence of statements, which reveals progressively more context about the information presented in the first statement.

- I have been to war.

- In that war, I have killed many people.

- Sometimes, killing in that war brought me joy and laughter.

- The war was a game called Call of Duty Black Ops: Cold War .

- The game was designed by Treyarch and Raven Software.

- I play it with my friends.

The first statement is still true in a way, but each of the other pieces of information fundamentally changed your perception of the meaning of the prior statements. Even simple examples like this demonstrate that while we may be able to objectively encode messages with symbols, and transmit them reliably, these mathematical notions of information fail to account for the meaning of information, and how it can change in the presence of our emotions and other information that arrives later. Defining information simply as data perceived and understood is therefore overly reductive, hiding the complexity of human perception, cognition, identity, and culture.

It also hides the complexity of context. Consider, for example, the many kinds of context that can shape the meaning of information:

- How was the information created? What process was followed? Was it verified by someone credible? Is it true? These questions fundamentally shape the meaning of information, and yet are rarely visible in information itself (with the exception of academic research, which has a practice of thoroughly describing the methods by which information was produced), and journalism, which often follows ethical standards for acquiring information, and sometimes reveals sources.

- When was the information created? A news story that was released 5 years ago does not have the same meaning that it does now; our knowledge of the future that occurred after it was published changes how we see its events, and how we interpret their meaning. And yet, we often do not pay attention to when news was reported, when a Wikipedia page was written, when a scientific study was published, or when someone wrote a tweet. Information is created in a temporal context that shapes its meaning.

- For whom was the information created? Messages have intended recipients and audiences, with whom an author has shared knowledge. This chapter was written for students learning about information; tweets are written for followers; love letters are meant for lovers; cyberbullying text messages are meant for victims. Without knowing for whom the message was created, it is not possible to know the full meaning of information, because one cannot know the shared knowledge of the two parties.

- Who created the information? The identity of the person creating the information shapes its meaning as well. For example, when I write “Being transgender can be hard.”, it matters that I am a transgender person saying it. It conveys a certain credibility through lived experience, while also establishing some authority. It also shapes how the message is interpreted, because it conveys personal experience. But if a cisgender person says it, their position in relation to transgender people shapes its meaning: are they an ally expressing solidarity, a mental health expert stating an objective fact, or an uninformed bystander with no particular knowledge of trans people?

- Why was the information created? The intent behind information can shape its meaning as well. Consider, for example, when someone posts some form of hate speech on social media. Did they post it to get attention? To convey an opinion? To connect with like-minded people? To cause harm? As a joke? These different motives shape how others might interpret the message. That this context is often missing from social media posts is why short messages so often lead to confusion, misinterpretation, and outrage.

These many forms of context, and the many others not listed here, show that while some aspects of information may be able to be represented with data, the social, emotional, cultural, and political context of how that data is received can shape information as well. Therefore, as D’Ignazio and Klein argued in Data Feminism , “the numbers don’t speak for themselves” 3 3 Catherine D'Ignazio, Lauren F. Klein (2020). Data Feminism. MIT Press.

Returning to my experiences as a child, the diverse notions of information above reveal a few things. First, while the data contained in the books, news, and magazines of my youth might not be different kind from that in my adulthood, the information is different. The social context in which I experience it changes what I take from it, my motivation to seek it has changed, and my ability to understand how it was created, by whom, for what, and when has been transformed by computing. Thus, while the “data” behind information has changed little over time, information itself has changed considerably as media, and the contexts in which we create and consume it, have changed in form and function. And if we are to believe the formulations above that relate information to knowledge, then the knowledge I gain from books, news, and magazines has almost certainly changed too. What implications this has on our individual and collective worlds is something we have yet to fully understand.

Podcasts

Want to learn more about the importance of context in information? Consider these podcasts:

- Can AI Fix Your Credit?, In Machines We Trust, MIT Technology Review . Discusses credit reports and the context they miss that leads to inequities in access to loans such as credit cards, car purchases, and homes.

- How Radio Makes Female Voices Sound Shrill, On the Media . Discusses how voice information is not simply data, but a complex set of hardware decisions about how voice is translated into data.

References

-

Gregory Bateson (1970). Form, substance and difference. Essential Readings in Biosemiotics.

-

Michael K. Buckland (1991). Information as thing. Journal of the American Society for information Science.

-

Catherine D'Ignazio, Lauren F. Klein (2020). Data Feminism. MIT Press.

-

Jennifer Lerner (2015). Emotion and decision making. Annual Review of Psychology.

-

Charles Meadow, Weijing Yuan (1997). Measuring the impact of information: defining the concepts. Information Processing & Management.

-

Raymond Nickerson (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology.

-

Jennifer Rowley (2007). The wisdom hierarchy: representations of the DIKW hierarchy. Journal of Information and Communication.

-

Claude E. Shannon (1948). A mathematical theory of communication. The Bell System Technical Journal.

-

Milan Zeleny (2005). Human Systems Management: Integrating Knowledge, Management and Systems. World Scientific.

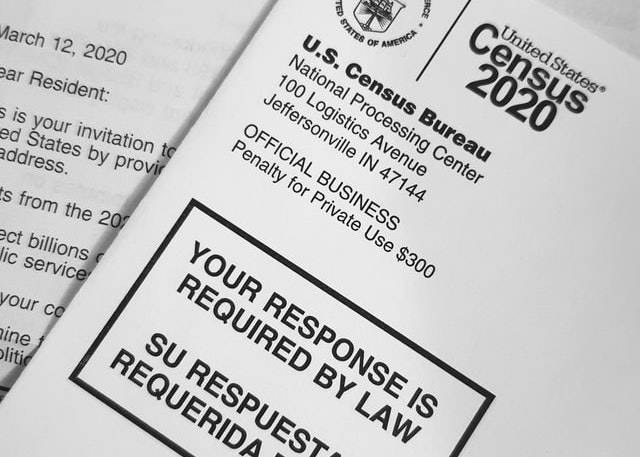

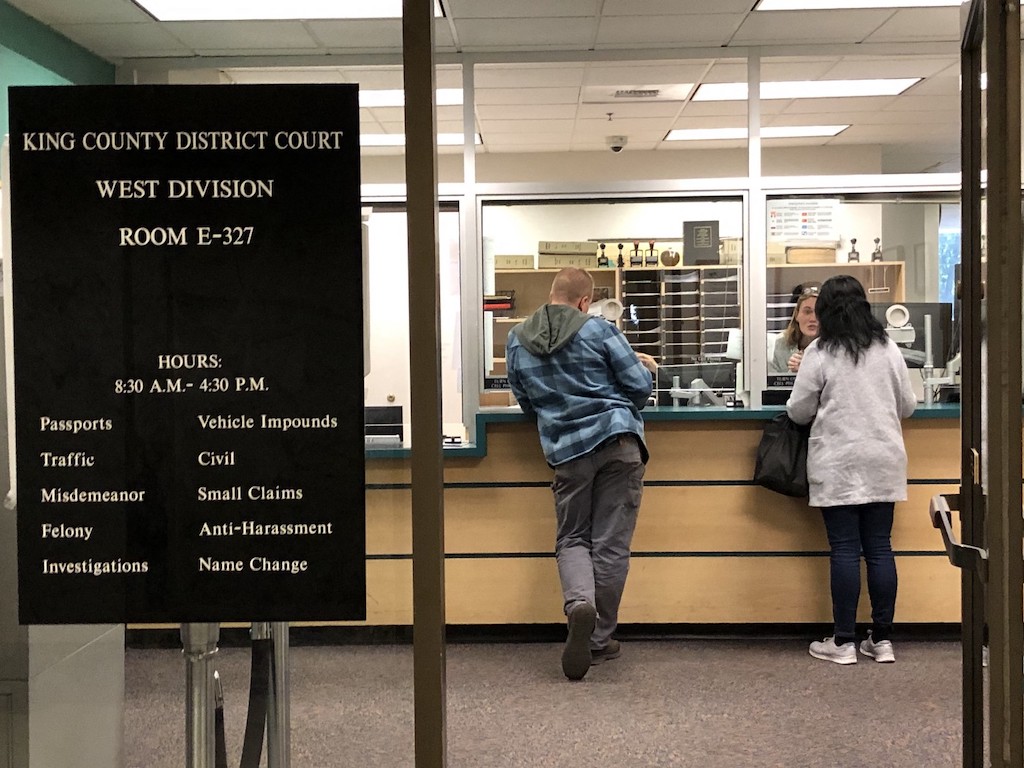

Data, encoding, and metadata

Every ten years, the United States government completes a census of everyone that lives within its borders. This census, mandated by the U.S. Constitution, is a necessary part of determining many functions of government, including how seats in the U.S. House of Representatives are allocated to each state, based on population, as well as how federal support is allocated for safety net programs. Demographic information, such as age, gender, race, and disability, also informs federal funding of research and other social services. Therefore, this national survey is about distributing both power and resources.

When I filled out the census for the first time in the year 2000 at the age of 20, I wasn’t really fully aware of why I was filling out the survey, or how the data would be used. What struck me about it were these two questions:

- What is your sex? ☐ Male ☐ Female

- What is your race? ☐ White ☐ Black, African Am., or Negro, ☐ American Indian or Alaska Native, ☐ Asian Indian, ☐ Chinese, ☐ Filipino, ☐ Japanese, ☐ Korean, ☐ Vietnamese, ☐ Native Hawaiian, ☐ Guamanian or Chamorro, ☐ Samoan, ☐ Other Pacific Islander, ☐ Some other race

I struggled with both. At the time, I wasn’t definitely uncomfortable with thinking about myself as male, and it was too scary to think about myself as female. What I wanted to write in that in that first box was “Confused, get back to me.” Today, I would confidently choose female , or woman , or transgender , or even do you really need to know ? But the Census, with its authority to shape myriad policies and services based on gender for the next twenty years of my life, forced me into a binary.

The race question was challenging for a different reason. I was proudly biracial, with Chinese and Danish parents, and I was excited that for the first time in U.S. history, I would be able to select more than one race to faithfully represent my family’s ethic and cultural background. Chinese was an explicit option, so that was relatively easy (though my grandparents had long abandoned their ties to China, happily American citizens, with a grandfather who was a veteran, serving in World War I). But White ? Why was there only one category of White? I was Danish, not White. Once again, the Census, with its authority to use racial data to monitor compliance with equal protection laws and shape funding for schools and medical services, forced me into a category in which I did not fit.

These stories are problems of data, encoding, and metadata. In this chapter, we will discuss how these ideas relate, connecting them to the notions of information in the previous chapter, and complicating their increasingly dominant role in informing the public and public policy.

Data comes in many forms

As we discussed in the previous chapter, datadata: Analog or digital symbols that someone might perceive in the world and ascribe meaning. can be thought of as any form of symbols, analog or discrete , that someone might perceive in the world. Data, therefore, can come in many forms, including those that might enter through our analog senses of touch, sight, hearing, smell, taste, and even other less known senses, including pain, balance, and proprioception (the sense of where our bodies are in space relative to other objects). These human-perceptible, physical forms of data might therefore be in the form of physical force (touch), light (sight), sound waves (hearing), molecules (smell, taste), and the position of matter in relation to our bodies (balance, proprioception). It might be strange to imagine, but when we look out into the world to see the beauty of nature, when we smell and eat a tasty meal, and when we listen to our favorite songs, we are perceiving data in its many analog forms, which becomes information in our minds, and potentially knowledge.

But data can also come in forms that cannot be directly perceived. The most modern example is bitsbit: A binary value, often represented as either 1 or 0 or true or false. , as Claude Shannon described them 11 11 Claude E. Shannon (1948). A mathematical theory of communication. The Bell System Technical Journal.

James Gasser (2000). A Boole anthology: recent and classical studies in the logic of George Boole. Springer Science & Business Media.

M. Riordan (2004). The lost history of the transistor. IEEE spectrum.

Of course, binary, bits, and transistors were not the first forms of discrete data. Deoxyribonucleic acid (DNA) came much earlier. A molecule composed of two polynucleotide chains that wrap around each other to form a helix shape, these chains are composed of sequences of one of four nucleobases, cytosine, guanine, adenine, and thymine. DNA, just like transistors, encodes data, but unlike a computer, which uses bits to make arithmetic calculations, life uses DNA to assemble proteins, which are the complex molecules that enable most of the functions in living organisms and viruses. And just like the transistors in a microprocessor and computer memory are housed inside the center of a computer, DNA is fragile, and therefore carefully protected, housed inside cells and the capsid shell walls of viruses. Of course, because DNA is data, we can translate it to bits 10 10 Michael C. Schatz, Ben Langmead, Steven L. Salzberg (2010). Cloud computing and the DNA data race. Nature Biotechnology.

Because computers are our most dominant and recent form of information technology, it is tempting to think of all data as bits. But this diversity of ways of storing data shows that binary is just the most recent form, and not even our most powerful: bits cannot heal our wounds the way that DNA can, nor can bits convey the wonderful aroma of fresh baked bread. This is because we do not know how to encode those things as bits.

Data requires encoding

The forms of data, while fascinating and diverse, are insufficient for giving data the potential to become information in our minds. Some data is just random noise with no meaning, such as the haphazard scrawls of a toddler trying to mimic written language, or the static of a radio receiving no signal. That is data, in the sense that it conveys some difference, entropy, or surprise, but it has little potential to convey information. To achieve this potential, data can be encodedencoding: Rules that define the structure and syntax of data, enabling unambiguous interpretation and reliable storage and transmission. , giving some structure and syntax to the entropy in data that allows it to be transmitted, parsed, and understood by a recipient. There are many kinds of encodings, some natural (such as DNA), some invented, some analog, some digital, but they all have one thing in common: they have rules that govern the meaning of data and how it must be structured.

To start, let’s consider spoken, written, or signed natural language, like English, Spanish, Chinese, or American Sign Language. All of these languages have syntax, including grammatical rules that suggest how words and sentences should and should not be structured to convey meaning. For example, English often uses the ending -ed to indicate an action occurred in the past ( waited , yawned , learned ). Most American Sign Language conversations follow Subject-Verb-Object order, as in CAT LICKED WALL . When we break these grammatical rules, the data we convey becomes harder to parse, and we risk our message being misunderstood. Sometimes those words and sentences are encoded into sound waves projected through our voices; sometimes they are encoded through written symbols like the alphabet or the tens of thousands of Chinese characters and sometimes they are encoded through gestures, as in sign languages that use the orientation, position, and motion of our hands, arms, faces, and other body parts. Language, therefore, is an encoding of thoughts into words and sentences that follow particular rules 2 2 Kathryn Bock, Willem Levelt (1994). Language production: Grammatical encoding. Academic Press.

While most communication with language throughout human history was embodied (using only our bodies to generate data), we eventually invented some analog encodings to leverage other media. For example, the telegraph was invented as a way of sending electrical signals over wire long distances between adjacent towns, much like a text messages 4 4 Lewis Coe (2003). The telegraph: A history of Morse's invention and its predecessors in the United States. McFarland.

This history of analog encodings directly informed the digital encodings that followed Shannon’s work on information theory. The immediate problem posed by the idea of using bits was how to encode the many kinds of data in human civilization into bits. Consider, for example, numbers. Many of us learn to work in base 10, learning basic arithmetic such as 1+1=2. But mathematicians have long known that there are an infinite number of bases for encoding numbers. Bits, or binary values, simply use base two. There, encoding the integers 1 to 10 in base two is simply:

| Binary | Decimal |

|---|---|

| 1 | 1 |

| 10 | 2 |

| 11 | 3 |

| 100 | 4 |

| 101 | 5 |

| 110 | 6 |

| 111 | 7 |

| 1000 | 8 |

| 1001 | 9 |

| 1010 | 10 |

That was simple enough. But in storing numbers, the early inventors of the computer quickly realized that there would be limits on storage 12 12 Jon Stokes (2007). Inside the Machine: An Illustrated Introduction to Microprocessors and Computer Architecture. No Starch Press.

Storing positive integers was easy enough. But what about negative numbers? Early solutions reserved the first bit in a sequence to indicate the sign, where 1 represented a negative number, and 0 a positive number. That would make the bit sequence 10001010 the number -10 in base 10. It turned out that this representation made it harder and slower for computers to do arithmetic on numbers, and so other encodings - which allow arithmetic to be computed the same way, whether numbers are positive or negative - are now used (predominantly the 2’s complement encoding ). However, reserving a bit to store a number’s sign also means that one can’t count as high: 1 bit for the sign means only 7 bits for the number, which means 2 to the power 7, or 128 possible positive or negative values, including zero.

Real numbers (or “decimal” numbers) posed an entirely different challenge. What happens when one wants to encode a large number, like 10 to the power 78, the latest estimate of the minimum number of atoms in the universe? For this, new encoding had to be invented, that would leverage the scientific notation in mathematics. The latest version of this encoding is IEEE 754 , which defines an encoding called floating-point numbers, which reserves some number of bits for the “significand” (the number before the exponent), and other bits for the exponent value. This encoding scheme is not perfect. For example, it is not possible to accurately represent all possible numbers with a fixed number of bits, nor is it possible to represent irrational numbers such as 1/7 accurately. This means that simple real number arithmetic like 0.1 + 0.2 doesn’t produce the expected 0.3 , but rather 0.30000000000000004 . This level of precision is good enough for applications that only need approximate values, but scientific applicants requiring more precision, such as experiments with particle accelerators which often need hundreds of digits of precision, have required entirely different encodings.

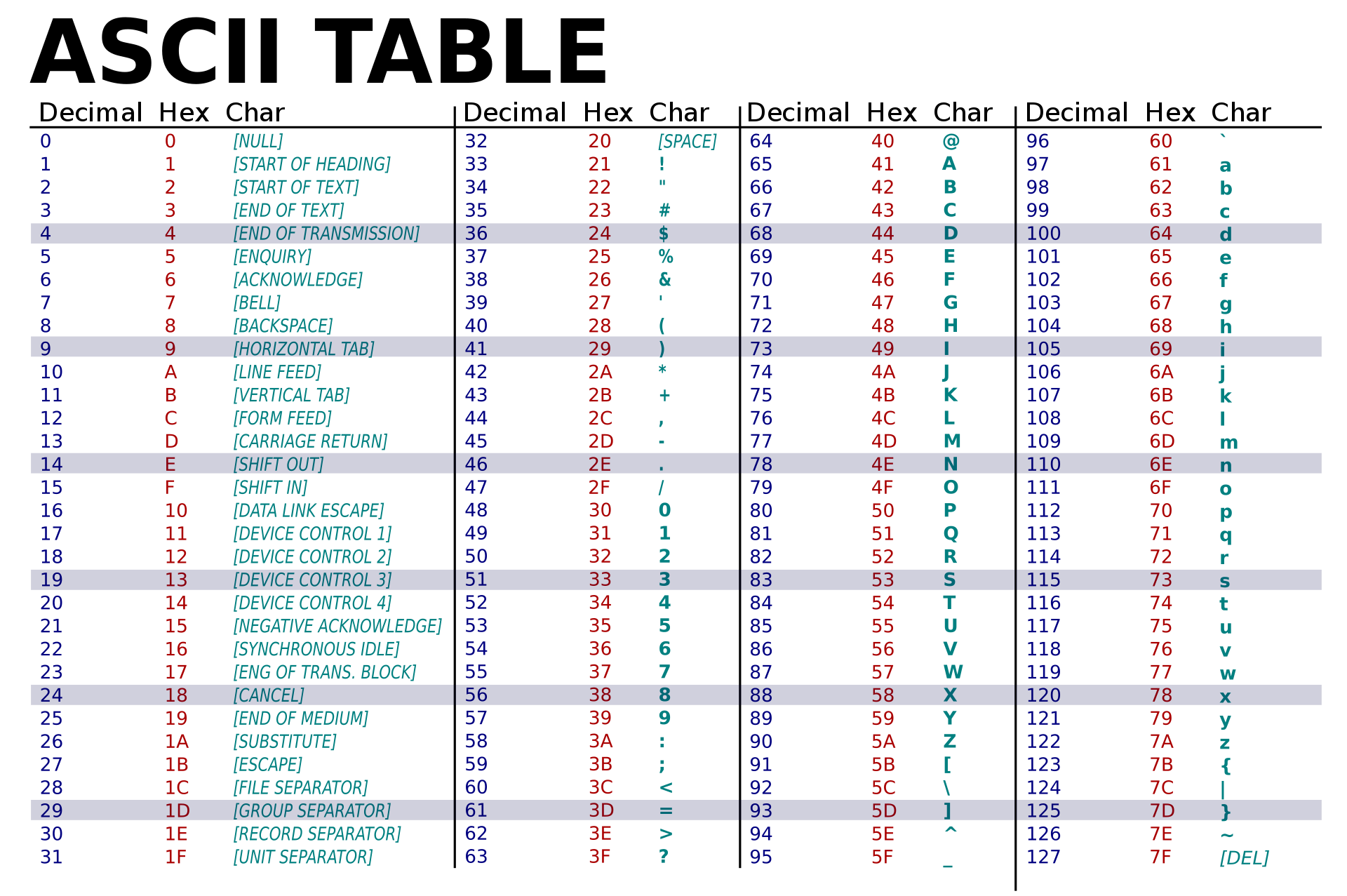

While numbers are important, so are other symbols. In fact, most of the Internet is text, and not numbers. So encoding text was an entirely different challenge. The basic idea that early inventors of computers devised was to encode each character in the alphabet as a specific number. This first encoding, called ASCII (which stood for American Standard Code for Information Interchange), used specific values in an 8-bit sequence to denote specific characters 8 8 C.E. Mackenzie (1980). Coded-Character Sets: History and Development. Addison-Wesley Longman Publishing.

65 stood for A , 66 for B , 67 for C , and so on, with lower case letters starting at 97 for a . Therefore, to encode the word kitty , one would use the sequence of numbers 107 105 116 116 121 , or in unsigned binary, 01101011 01101001 01110100 01110100 01111001 . Five letters, five 8-bit numbers.

Of course, as is clear in the name for this encoding — American Standard — it was highly exclusionary: only western languages could be encoded, excluding all other languages in the world. For that, Unicode was invented, allowing the encoding of all of the world’s languages 7 7 Jukka K. Korpela (2006). Unicode explained. O'Reilly Media, Inc..

Numbers and text of course do not cover all of the kinds of data in the world. Images have their own analog and digital encodings (film photograph negatives, JPEG, PNG, HEIC, WebP), as do sounds (analog records, WAV, MP3, AAC, FLAC, WMA). And of course, software of all kinds defines encodings for all other imaginable kinds of data. Each of these, of course, are designed, and use these basic primitive data encodings as their foundation. For example, when the U.S. Census was designing the 2000 survey, the two questions I struggled with were stored as a data structure , with several fields, each with a data type:

| Data type | Description |

|---|---|

| boolean | true if checked male |

| boolean | true if checked White |

| boolean | true if checked Black |

| boolean | true if checked American Indian/Native Alaskan |

| boolean | true if checked Asian Indian |

| boolean | true if checked Chinese |

| boolean | true if checked Filipino |

| boolean | true if checked Japanese |

| boolean | true if checked Korean |

| boolean | true if checked Vietnamese |

| boolean | true if checked Hawaiian |

| boolean | true if checked Guamanian/Chamorro |

| boolean | true if checked Pacific Islander |

| text | encoding of handwritten text describing another race |

Each response to that survey was encoded using that data structure, encoding binary as one of two values, and encoding race is a yes or no value to one of twelve descriptors, plus an optional text value in which someone could write their own racial label. Data structures, like the one above, are defined by software designers and developers using programming languages; database designers create similar encodings by defining database schema , specifying the data and data types of data to be stored in a database .

Encoding is lossy

The power of encoding is that it allows us to communicate in common languages. Encoding in bits is even more powerful, because it is much easier to record, store, copy, transmit, analyze, and retrieve data with digital computers. But as should be clear from the history of encoding above, encodings are not particularly neutral. Natural languages are constantly evolving to reflect new cultures, values, and beliefs, and so the rules of spelling and grammar are constantly contested and changed. Digital encodings for text designed by Americans in the 1960’s excluded the rest of the world. Data structures like the one created for the 2000 U.S. Census excluded my gender and racial identity. Even the numerical encodings have bias, making it easier to represent integers centered around 0, and giving more precision to rational numbers than irrational numbers. These biases demonstrate that an inescapable fact of all analog and digital encodings of data: they are lossy, failing to perfectly, accurately, and faithfully represent the phenomenon they encode 1 1 Ruha Benjamin (2019). Race after technology: Abolitionist tools for the new jim code. Social Forces.

Nowhere is this more clear than when we compare analog to digital encodings of the same phenomena to the phenomena they attempt to encode as data:

- Analog music , such as that stored on records and tapes, is hard to record, copy, and share, but it captures a richness and fidelity. Digital music , in contrast, is easy to copy, share, and distribute, but is usually of lower quality. Both, however, cannot compare to the embodied experience of live performance, which is hard to capture, but far richer than recordings.

- Analog video , such as that stored on film, has superior depth of field but is hard to edit and expensive. Digital video , in contrast, is easier to copy and edit, but it is prone to compression errors that are far more disruptive than the blemishes of film. Both, however, cannot compare to the embodied experience of seeing and hearing the real world, even though that embodied experience is impossible to replicate.

Obviously, each encoding has tradeoffs; there is not best way to capture data of each kind, just different ways, reflecting different values and priorities.

Metadata captures context

One way to overcome the lossy nature of encoding is by also encoding the context of information. Context, as we described in the previous chapter, represents all of the details surrounding some information, such as who delivered it, how it was captured, when it was captured, and even why it was captured. As we noted, context is critical for capturing the meaning of information, and so encoding context is a critical part of capturing the meaning of data. When we encode the context of data as data, we call that metadatametadata: Data about data, capturing its meaning and context. , as it is data about data 13 13 Marcia Lei Zeng (2008). Metadata. Neal-Schuman Publishers.

To encode metadata, we can use the same basic ideas of encoding. But deciding what metadata to capture, just as with deciding how to encode data, is a value-driven design choice. Consider, for example metadata about images we have captured. The EXIF metadata standard captures context about images such as captions that may narrate and describe the image as text, geolocation data that specifies where on Earth it was taken, the name of the photographer who took the photo, copyright information about rights to reuse the photo, and the date the photo was taken. This metadata can help image data be better interpreted as information, by modeling the context it was taken. (We say “model”, because just as with data, metadata does not perfectly capture context: a caption may overlook important image contents, the name of the photographer may have changed, and the geolocation information may have been imprecise or altogether wrong).

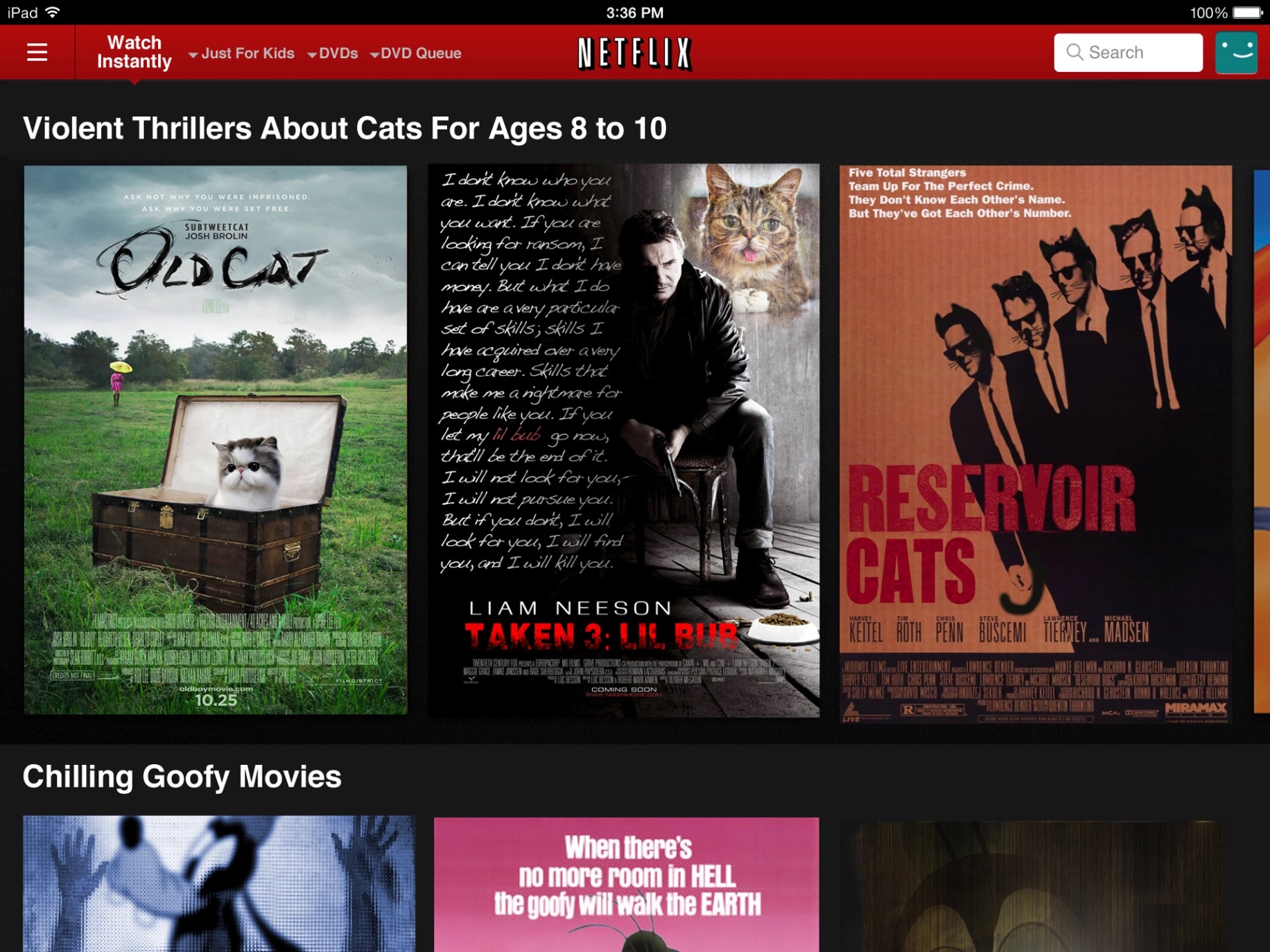

But metadata is useful for more than just interpreting information. It is also useful for organizing it. For example, Netflix does more than encode television and movies as data for streaming. It also stores and even creates metadata about movies and television, such as who directed it, who produced it, who acted in it, what languages it can be heard in, whether it is captioned, and so on. This metadata facilitates searching and browsing, as we shall discuss in our later chapter on information seeking. But Netflix also creates metadata, synthetically generating tens of thousands of “micro-genres” to describe very precise categories of movies, such as Binge-Worthy Suspenseful TV dramas or Critically Acclaimed Irreverent TV Comedies . This metadata helped Netflix make browsing both more granular and informative, creating a sense of curiosity and surprise through its sometimes odd and surprising categorizations. This is an example of what knowledge organization scholars called a controlled vocabularycontrolled vocabulary: A fixed collection of terminology used to restrict word choice to ensure consistency and support browsing and searching. , a carefully designed selection of words and phrases that serve as metadata for use in browsing and searching. Tagging systems like controlled vocabularies are in contrast to hierarchical systems of organization, like taxonomies, which strictly place data or documents within a single category.

While some metadata is carefully designed and curated by the creators of data, sometimes metadata is created by the consumers of data. Most systems call these taggingtagging: A system for enabling users of an information system to create descriptive metatdata for data. systems, and work by having consumers of data apply public tags to items, resulting in an emergent classification system; scholars have also called them folksonomies , collaborative tagging , or social classification , or social tagging systems 6 6 Andreas Hotho, Robert Jäschke, Christoph Schmitz, Gerd Stumme (2006). Information retrieval in folksonomies: Search and ranking. European Semantic Web Conference.

Classification, in general, is fraught with social consequences. 3 3 Geoffrey Bowker, Susan Leigh (2000). Sorting things out: Classification and its consequences. MIT Press.

Thus far, we have established that information is powerful when it is situated in systems of power, that this power can lead to problems, and that information is likely a process of interpreting data, and integrating it into our knowledge. This is apparent in the story that began this chapter, in which the information gathered by U.S. Census has great power to distribute political power and federal resources. What this chapter has shown us is that the data itself has its own powers and perils, manifested in how it and its metadata is encoded. This is illustrated by how the U.S. Census encodes gender and race, excluding my identity and millions of others’ identities. This illustrates that the choices about how to encode data and metadata reflect the values, beliefs, and priorities of the systems of power in which they are situated. Designers of data, therefore, must recognize the systems of power in which they sit, carefully reflecting on how their choices might exclude.

Podcasts

Learn more about data and encoding:

- Emojiconomics, Planet Money, NPR . Discusses the fraught role of business and money in the Unicode emoji proposal process.

- Our Year: Emergency Mode Can’t Last Forever, What Next, Slate . Discusses the processes by which COVID-19 data have been produced in the United States, and the messy and complex metadata that makes analysis and decision making more difficult.

- Inventing Hispanic, The Experiment, The Atlantic . Discusses the history behind the invention of the category “Hispanic” and “Latino”, and how racial categories are used to encode race as a social construct.

- Organizing Chaos, On the Media . Reports on the debates about the Dewey Decimal System and it’s inherent social biases.

References

-

Ruha Benjamin (2019). Race after technology: Abolitionist tools for the new jim code. Social Forces.

-

Kathryn Bock, Willem Levelt (1994). Language production: Grammatical encoding. Academic Press.

-

Geoffrey Bowker, Susan Leigh (2000). Sorting things out: Classification and its consequences. MIT Press.

-

Lewis Coe (2003). The telegraph: A history of Morse's invention and its predecessors in the United States. McFarland.

-

James Gasser (2000). A Boole anthology: recent and classical studies in the logic of George Boole. Springer Science & Business Media.

-

Andreas Hotho, Robert Jäschke, Christoph Schmitz, Gerd Stumme (2006). Information retrieval in folksonomies: Search and ranking. European Semantic Web Conference.

-

Jukka K. Korpela (2006). Unicode explained. O'Reilly Media, Inc..

-

C.E. Mackenzie (1980). Coded-Character Sets: History and Development. Addison-Wesley Longman Publishing.

-

M. Riordan (2004). The lost history of the transistor. IEEE spectrum.

-

Michael C. Schatz, Ben Langmead, Steven L. Salzberg (2010). Cloud computing and the DNA data race. Nature Biotechnology.

-

Claude E. Shannon (1948). A mathematical theory of communication. The Bell System Technical Journal.

-

Jon Stokes (2007). Inside the Machine: An Illustrated Introduction to Microprocessors and Computer Architecture. No Starch Press.

-

Marcia Lei Zeng (2008). Metadata. Neal-Schuman Publishers.

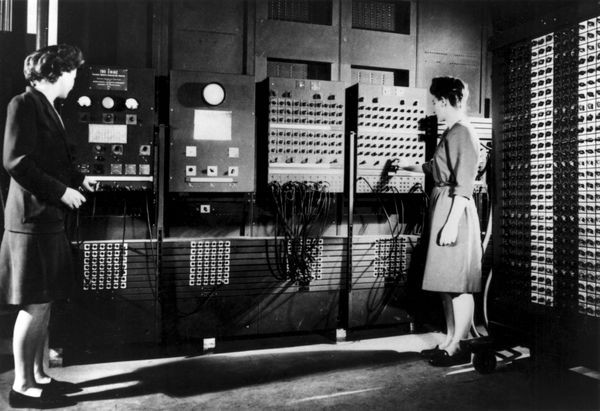

Information technology

My family’s first computer was an Apple IIe computer. We bought it in 1988 when I was 8. My mother had ordered it after $3,000 saving for two years, hearing that it could be useful for word processing, but also education. It arrived one day in a massive cardboard box and had to be assembled. Before my parents got home, I had opened the box and found the assembly instructions. I was so curious about the strange machine, I struggled my way through the instructions for two hours, I had plugged in the right cables, learned about the boot loader floppy disk, inserted into the floppy disc reader, and flipped the power switch. The screen slowly brightened, revealing its piercing green monochrome and a mysterious command line prompt.

After a year of playing with the machine, my life had transformed. My play time had shifted from running outside with my brother and friends with squirt guns to playing cryptic math puzzle games, listening to a homebody mow his lawn in a Sims-like simulation game, and drawing pictures with typography in the word processor, and waiting patiently for them to print in the dot matrix printer. This was a device that seemed to be able to do anything, and yet it could do so little. Meanwhile, the many other interests I had life faded, and my parents’ job shifted from trying to get us to come inside for dinner to trying to get us to go outside to play.

For many people since the 1980’s, life with computers has felt much the same, and it rapidly led us to equate computers with technology, and technology with computers. But as I learned later, technology has meant many things in history, and quite often, it has meant information technology.

What is technology?

The word technology comes from ancient Greece, combining tekhne , which means art or craft , with logia , which means a “subject of interest” to make the word tecknologia . Since ancient Greece, the word technology has generally referred to any application of knowledge, scientific or otherwise, for practical purposes, with its use evolving to refer to whatever recent inventions had captivated humanity 5 5 Stephen J. Kline (1985). What is technology?. Bulletin of Science, Technology & Society.

| 15000 BC | stone tools |

|---|---|

| 2700 BC | abacus |

| 900’s | gunpowder |

| 1100’s | rockets |

| 1400’s | printing press |

| 1730’s | yarn spinners |

| 1820’s | motors |

| 1910’s | flight |

| 1920’s | television |

| 1930’s | flying |

| 1950’s | space travel |

| 1960’s | lasers |

| 1970’s | computers |

| 1980’s | cell phones |

| 1990’s | internet |

| 2000’s | smartphones |

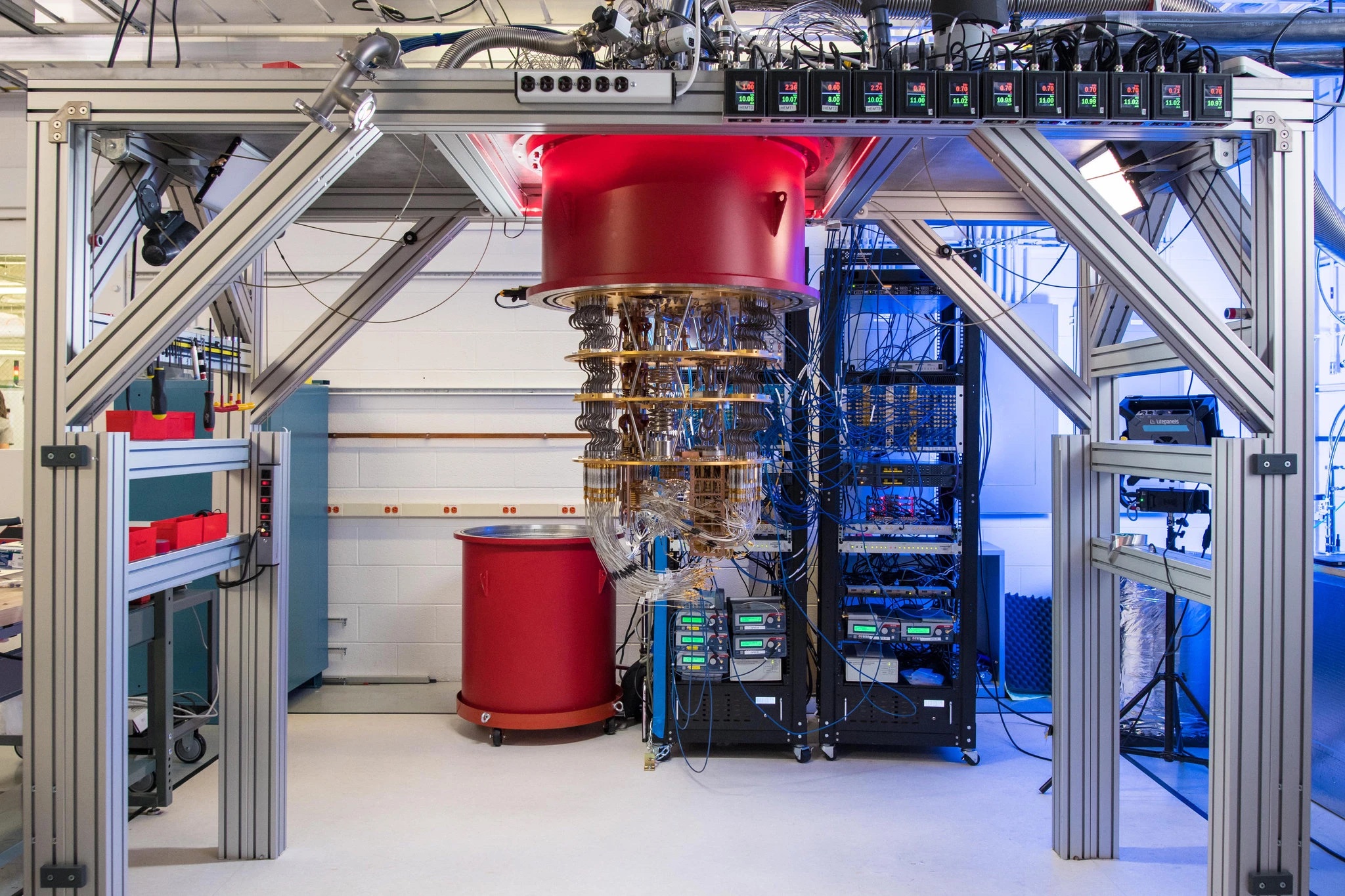

While many technologies are not explicitly for the purpose of transmitting information, you can see from the ones in bold in the table above that most technology innovations in the last five hundred years have been information technologies 4 4 James Gleick (2011). The information: A history, a theory, a flood. Vintage Books.

A second thing that is clear from the history above is that most technologies, and even most information technologies, have been analog, not digital, from the abacus for calculating quantities with wooden beads almost 3,000 years ago to the smartphones that dominate our lives today. Only since the invention of the digital computer in the 1950’s, and their broad adoption in the 1990’s and 2000’s, did the public start using the word “technology” to refer to computing technology.

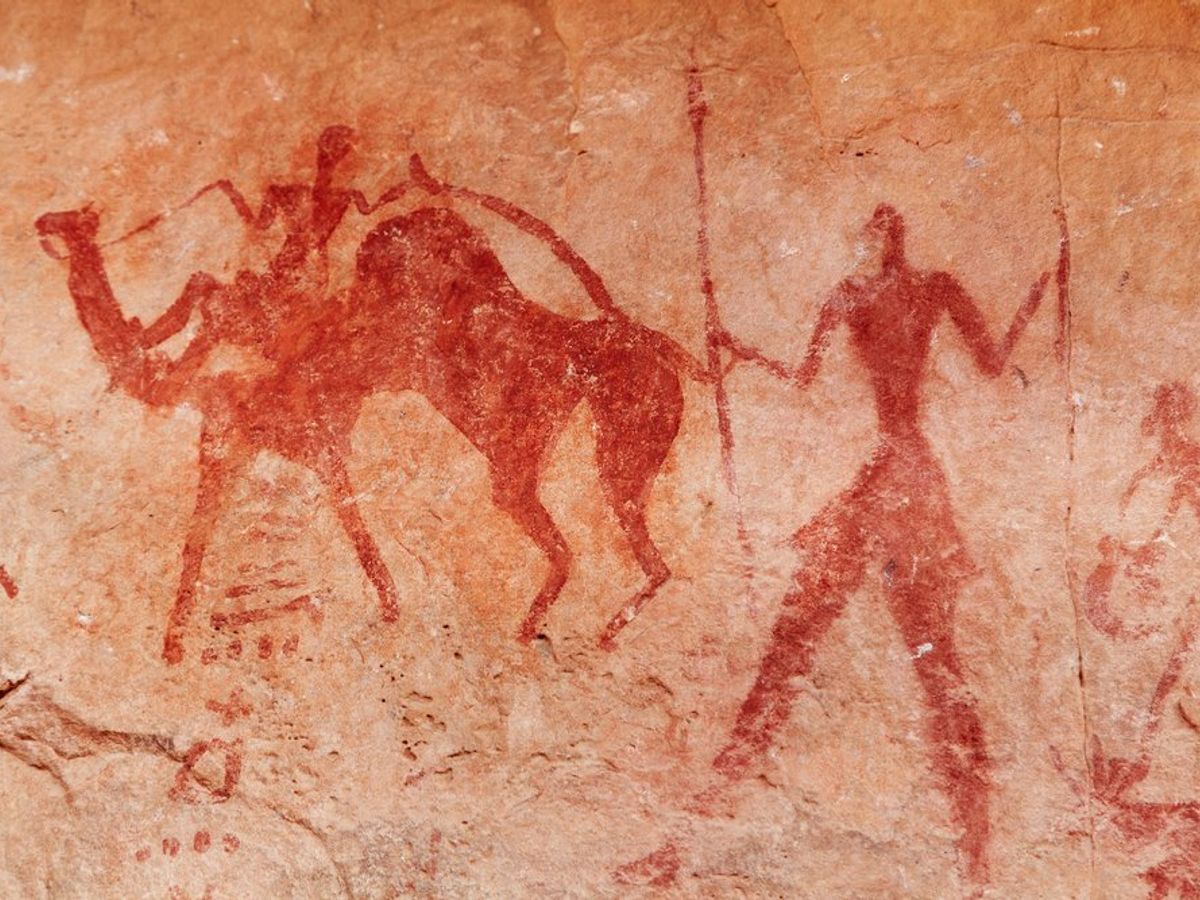

Early information technologies

The history of information technologies, unsurprisingly, follows the evolution of humanity’s desire to communicate. For example, some of the first information technologies were materials used to create art , such as paint, ink, graphite, lead, wax, crayon, and other pigmentation technologies, as well as technologies for sculpting and carving. Art was one of humanity’s first ways of communicating and archiving stories 6 6 Shigeru Miyagawa, Cora Lesure, Vitor A. Nóbrega (2018). Cross-modality information transfer: a hypothesis about the relationship among prehistoric cave paintings, symbolic thinking, and the emergence of language. Frontiers in Psychology.

Spoken language came next 9 9 Jean-Jacques Rousseau, John H. Moran, Johann Gottfried Herder, Alexander Gode (2012). On the Origin of Language. University of Chicago Press.

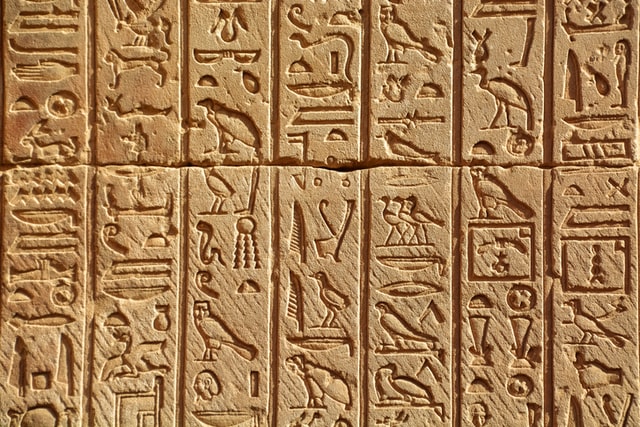

Writing came later. Many scholars dispute the exact origins of writing, but our best evidence suggests that the Egyptians and Mesopotamian’s, possibly independently, created the early systems of abstract pictograms to represent particular nouns, as well as early Chinese civilizations, where symbols have been found on tortoise shells. These early pictographic scripts evolved into alphabets and character sets, and exploited any number of media, including walls of caves, stone tablets, wooden tablets, and eventually paper. Paints, inks, and other technologies emerged, eventually being refined to the point where we take immaculate paper and writing instruments such as pencil and pens for granted. Some found writing dangerous; Socrates, the Greek philosopher, for example, believed that the written word would prevent people from harnessing their minds:

For this invention will produce forgetfulness in the minds of those who learn to use it, because they will not practice their memory. Their trust in writing, produced by external characters which are no part of themselves, will discourage the use of their own memory within them. You have invented an elixir not of memory, but of reminding; and you offer your pupils the appearance of wisdom, not true wisdom, for they will read many things without instruction and will therefore seem to know many things, when they are for the most part ignorant and hard to get along with, since they are not wise, but only appear wise.

Plato (-370). Phaesrus. Cambridge University Press.

Writing, of course, was slow. Even once humanity had created books, there was no way to copy them other than painstakingly transcribing every word onto new paper. This limited books to the wealthiest and most elite people in society, and ensured that news was only accessible via word of mouth, reinforcing systems of power undergirded by access to information.

The printing press , invented by goldsmith Johannes Gutenberg around 1440 in Germany, solved this problem. It brought together a box full of letter blocks on which ink could be applied, another box in which letters were placed, and a machine, which would lay ink upon the lead blocks, and then paper would be rolled to apply the ink. With this mechanism, copying a text went from taking months to minutes, introducing the era of mass communication, permanently transforming society through the advent of reading literacy. Suddenly, the ability to access books and newspapers , and learn to read them, lead to a rising cultural self-awareness through the circulation of ideas that prior had only been accessible to those with access to education and books. To an extent, the printing press democratized knowledge—but only if one had access to literacy, likely through school.

While print transformed the world, in the late 18th century, the telegraph began connecting it. Based on earlier ideas of flag semaphores in China, the electrical telegraphs of the 19th century connected towns by wires, encoding what were essentially text messages via electrical pulses. Because electricity could travel at the speed of light, it was no longer necessary to ride a horse to the next town over, or send a letter via train. The telegraph made it possible to send a message without ever leaving your town.

Around the same time, French inventor Nicéphore Niépce invented the photograph , using chemical processes to expose a film to light over the course of several days, allowing for the replication of images of the world. This early process led to more advanced ones, and eventually black and white film photography in the late 19th century, and color in the 1930’s. It was much longer after the first photographs that the first motion pictures were invented. Photography and movies became, and continues to be, the dominant way that people capture moments in history, their own, and the world’s.

While photography was being refined, in 1876 Alexander Graham Bell was granted a patent for the telephone , a device much like the telegraph, but rather than encoding text through electrical pulses, it encoded voice. The first phones were connected directly to each other, but later phones were connected via switchboards, which were eventually automated by computers, creating a worldwide public telephone network.

During nearly the same year in 1887, Thomas Edison and his crew of innovators invented the phonograph , a device for recording and reproducing sound. For the first time, it was possible to capture live music or conversation, store it on a circular disk, and then spin that disk to generate sound. It became an increasingly popular invention, and lead to the recorded music industry.