The immediate goal of assessing a simulation model is to detect those features of the phenomenon that the proposed model is unable to adequately reproduce; the ultimate goal is to then locate the sources of these deficiencies, be they in the optimization search, the model's proposed mathematical structure, the formulation and selection of the objective functions used to quantify the discrepancy between the model's predictions and the observed phenomenon, or the model's underlying process or conceptual structure (see figure below).

The core problem blends modelling, inference, and optimization, raising conceptual challenges for each field. For modelers, the challenge is to acknowledge models can only be assessed, not validated or verified, and that the assessment is only as good as (is conditional on) the choice of phenomenon features underlying the objectives and their quantification. This leads to an evolutionary spiral of learning about both the model structure and the most informative ways of interrogating it (the criteria or assessment characteristics based on the key phenomenon features). For statisticians, the challenge is to shift from inference conditional on the assumed model structure to inference about the model structure, more akin to diagnostics based on goodness of fit and investigating the model's Bayesian predictive distribution.

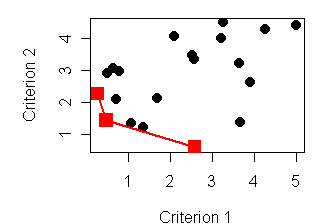

If the observations are not rich enough to support statistical inference, the problem can be approached from the perspective of multi-criteria optimization. For optimizers (actually, for everybody), the challenge then is to recognize that the information basis for assessment comes from the tradeoffs revealed by the Pareto frontier, and that simply maximizing the number of satisfied objectives (or constraints) is insufficient.

It is easiest to grasp the core issues of this problem from a familiar perspective:

Figure: Pareto Optimal Model Assessment Cycle (after Reynolds and Ford, Ecology 1999)

Model assessment is concerned with the Zeroth problem of statistics (Mallows, The American Statistician, 1998): model specification. The goal is to develop a coherent logic of structural inference for settings where the model structure is based on some degree of scientific content (in contrast to being simply a parsimonious empirical summary of observed relationships).

Mechanistic models are widely used in the biological and ecological sciences to define and assess hypothesized processes giving rise to an observed phenomenon. Generally, considerable uncertainty is associated with the selection of both the model components and their mathematical representations. Even when the primary focus is prediction rather than model assessment, the predictive impact of this structural uncertainty often greatly outweighs that of the parameter uncertainty (see Wood & Thomas, Proceedings of the Royal Society of London B, 1999, for a nice demonstration).

Thus the initial inference task when developing such models is structural inference or model assessment rather than parameter inference (Mallows 1998, Reynolds & Ford 1999). From a statistical perspective, the issue is whether the (joint) predictive distribution derived from the proposed model structure is coherent with the observed (joint) distribution.

Note that this refers to the predictive distribution of the chosen characteristics of interest, which will commonly only be implicitly defined by the model structure (Diggle & Gratton 1984). This can generate a conflict if traditional inference methods are used uncritically: standard methods start by assuming this coherency via conditioning on the model, thus identifying the sample's sufficient statistics. Yet the sufficient statistics often are not the assessment characteristics of interest. If this distinction is not recognized, standard model fitting followed by goodness of fit assessments may either fail to detect deficiencies or may improperly identify deficiencies that really stem from contrasting information content in the sufficient statistics versus the assessment characteristics of interest (Reynolds & Golinelli 2004). In a sense, model assessment reverses the sequence of traditional statistical inference methods.
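The distinction between sufficient statistics and assessment characteristics can be illustrated with a small simulation. The sketch below is a toy example under stated assumptions, not the method of the cited papers: the Gaussian model, the `characteristic` function (the sample range), and the data values are all hypothetical. The model is "fitted" through the sample mean (its sufficient statistic when the standard deviation is fixed at 1), and the implicitly defined predictive distribution of the range is then approximated by Monte Carlo.

```python
import random
import statistics

def simulate(theta, n, rng):
    """Hypothetical stochastic model: n Gaussian draws with mean theta, sd 1."""
    return [rng.gauss(theta, 1.0) for _ in range(n)]

def characteristic(sample):
    """Assessment characteristic of interest (an assumption): the sample range."""
    return max(sample) - min(sample)

def predictive_tail_fraction(observed, theta, n_sims=2000, seed=0):
    """Fraction of simulated data sets whose characteristic is at least
    as large as the observed one, with the parameter fixed at theta."""
    rng = random.Random(seed)
    obs_value = characteristic(observed)
    sims = [characteristic(simulate(theta, len(observed), rng))
            for _ in range(n_sims)]
    return sum(s >= obs_value for s in sims) / n_sims

# Made-up observations: the mean matches the fitted model, the spread does not.
observed = [6.0, 8.0, 10.0, 12.0, 14.0]
theta_hat = statistics.mean(observed)  # fit via the sufficient statistic
tail = predictive_tail_fraction(observed, theta_hat)
print(theta_hat, tail)
```

The fitted mean reproduces the data exactly, yet almost no simulated data set has a range as large as the observed one. A check based only on the sufficient statistic would therefore miss a deficiency that the characteristic of interest exposes.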

When only limited observations are available on the characteristics of interest, their joint distribution cannot be adequately estimated, undermining standard statistical approaches for estimating and assessing coherency of the model's posterior predictive distribution. Alternatively, the coherency problem can be seen as a multi-criteria optimization problem: are there any parameterizations that allow the proposed model structure to adequately reproduce all of these characteristics simultaneously? In other words, what is the model's Pareto frontier?

Model assessment investigates the Pareto frontier of the multi-objective minimization problem defined by the trio (Reynolds and Ford, Ecology, 1999):

| Symbol | Meaning |
| --- | --- |
| $M()$ | the simulation model, producing multivariate output |
| $X \subseteq \Re^m$ | the feasible parameter space |
| $F = (F_1(M()), \ldots, F_n(M())): \Re^m \to \Re^n$ | vector of objective functions measuring $n$ distinct features of model performance |

Definition: Pareto Optimality

Writing $x, y \in X$ for individual parameterizations in the feasible parameter space, parameterization $x$ dominates $y$ ($x >_{\text{Pareto}} y$) if and only if

$$\forall i,\ 1 \le i \le n:\ F_i(M(x)) \le F_i(M(y)) \quad \text{and} \quad \exists i,\ 1 \le i \le n,\ \text{such that}\ F_i(M(x)) < F_i(M(y)).$$

$x$ is non-dominant to $y$ ($x \parallel y$) if and only if

$$\exists i, j,\ i \ne j,\ \text{such that}\ F_i(M(x)) < F_i(M(y)) \ \text{and}\ F_j(M(y)) < F_j(M(x)).$$

The Pareto optimal set, $PF(X) \subseteq X$, is the set of all non-dominated solutions with respect to the vector of objective functions $F$, i.e. the set of solutions that are mutually non-dominated and are not dominated by any other $y$ in the search range.

The Pareto frontier, $FF(X) \subseteq \Re^n$, is the associated set of their objective vectors:

$$FF(X) = \{(F_1(M(x)), \ldots, F_n(M(x))) \mid x \in PF(X)\}.$$
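The dominance relation and the Pareto optimal set defined above can be sketched in a few lines of Python. This is a minimal brute-force illustration over a finite list of candidate parameterizations, not the search algorithm used in the cited work. The one-parameter `toy_objectives` function is a hypothetical stand-in for the composition of the objective functions with a simulation model.

```python
def dominates(fx, fy):
    """True if objective vector fx Pareto-dominates fy (minimization):
    no worse on every objective and strictly better on at least one."""
    no_worse = all(a <= b for a, b in zip(fx, fy))
    strictly_better = any(a < b for a, b in zip(fx, fy))
    return no_worse and strictly_better

def pareto_set(candidates, evaluate):
    """Return (parameterization, objective vector) pairs that are not
    dominated by any other candidate. Brute force, O(N^2) comparisons."""
    scored = [(x, tuple(evaluate(x))) for x in candidates]
    return [(x, fx) for x, fx in scored
            if not any(dominates(fy, fx) for _, fy in scored)]

def toy_objectives(x):
    """Hypothetical two-objective problem whose objectives are in tension:
    the first is minimized at x = 1, the second at x = -1."""
    return ((x - 1.0) ** 2, (x + 1.0) ** 2)

grid = [i / 4 for i in range(-12, 13)]        # candidates from -3 to 3
front = pareto_set(grid, toy_objectives)
print([x for x, _ in front])                  # non-dominated parameterizations
print([fx for _, fx in front])                # their objective vectors
```

In this toy case the non-dominated parameterizations are the grid points between -1 and 1, where improving one objective necessarily worsens the other. The shape of that tradeoff, rather than a count of satisfied objectives, is what carries the information for assessment.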

Broad guidelines for selecting objective functions are given in Reynolds and Ford, Ecology, 1999. Note that a key step of the model assessment cycle assesses the adequacy of the assessment criteria choices. This issue is also briefly addressed in Komuro et al., Ecological Modelling, 2006.

The objective functions may be continuous measures of discrepancy between a model prediction and a target value. Often, however, a lack of quantitative data on the phenomenon limits the discrepancy measure to a simple binary function: does the prediction fall within an acceptable range of values (Horn 1981, Reynolds & Ford 1999)? That is, the objective is an implicitly defined constraint. In model assessment this is simply one end of the spectrum of objective function definitions, a spectrum driven by data availability and the nature of the feature and model, e.g., deterministic or stochastic. Fuzzy objective functions are also possible. In general, a model assessment can use both continuous objectives and constraints, though most optimization software assumes all objectives are of the same kind: either all continuous or all constraints.
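The two ends of this spectrum can be sketched as follows. The function names, the output fields (`mean_height`, `crown_ratio`), and the target and range values are hypothetical, chosen only for illustration. Both kinds of objective are written so that smaller is better, which lets them sit side by side in one objective vector for a minimization problem.

```python
def discrepancy(prediction, target):
    """Continuous objective: absolute deviation from a target value."""
    return abs(prediction - target)

def range_violation(prediction, low, high):
    """Binary objective: 0.0 if the prediction lies in the acceptable
    range [low, high], 1.0 otherwise (an implicitly defined constraint)."""
    return 0.0 if low <= prediction <= high else 1.0

def objective_vector(output):
    """Mix one continuous objective and one constraint (hypothetical fields)."""
    return (
        discrepancy(output["mean_height"], target=20.0),
        range_violation(output["crown_ratio"], low=0.3, high=0.6),
    )

inside = objective_vector({"mean_height": 18.5, "crown_ratio": 0.45})
outside = objective_vector({"mean_height": 20.0, "crown_ratio": 0.75})
print(inside, outside)
```

Neither of these two hypothetical outputs dominates the other: the first misses the height target but satisfies the range, while the second hits the target but violates the range.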

Software for Generating Pareto Frontiers

The assessment of WHORL, an individual-based model of stand development and canopy competition, demonstrates the basic steps of the multi-criteria assessment process. The difficult stage of interpreting the assessment results to gain insight into sources of model deficiencies is further illustrated in Komuro et al., Lecture Notes in Computer Science, Vol. 4403, 2007; and Komuro et al., Proceedings of the IEEE Congress on Evolutionary Computation, CEC 2007, Singapore.