Regulating information

Gender is a strange, fickle kind of information. Those unfamiliar with the science equate it with “biological sex,” and yet we have long known that gender and sex are two different things, and that sex is not binary, but richly diverse along numerous genetic, developmental, and hormonal dimensions (Fausto-Sterling, 2000; see also Martin, 2004).

And yet, as much as I want the right to define my gender not by my “biology” and not by how I am seen, but by who I am, the public regularly engages in debate about whether I deserve that right. For example, in June 2020, the U.S. Department of Health and Human Services (HHS) decided to change Section 1557 of its anti-discrimination policy, removing gender from the list of protected aspects of identity, and defining gender as “biological sex.” The implication is that the government, not me, would decide whether I was a man or a woman, and it would decide that based on “biology.” Of course, the government has no intent of subjecting me to blood draws and chromosomal analysis in order for me to have the right to bring anti-discrimination lawsuits against healthcare providers (and it wouldn’t help anyway, since no test definitively establishes binary sex, because binary indicators do not exist). Instead, HHS proposed to judge biological sex by other information: a person’s height, the shape of their forehead, or their lack of a bust. Defining gender in these ways, of course, subjects all people, not just transgender women or women in general, to discrimination on the basis of how their bodies are perceived.

Who, then, gets to decide what information is used to define gender, and how that information is used to determine someone’s rights? This is just one of many questions about information that concern ethics, policy, law, and power, all of which are different ways of reasoning about how we regulate information in society. In the rest of this chapter, we will define some of these ideas about policy and regulation, and then discuss many of the modern ethical questions that arise around information.

What are ethics, policy, law, and regulation?

This book cannot do justice to ideas that have spanned centuries of philosophy and scholarship (Sandel, 2010).

To begin, ethics concerns moral questions and principles about people’s behavior in society. Of the four words we are addressing here, this is the broadest, as ethics are at the foundation of many kinds of decisions. Individuals engage in information ethics when they make choices to share or not share information (e.g., should I report that this student cheated?). Groups make decisions about how to act together (e.g., should we harass this person on Twitter for their beliefs?). Organizations, such as for-profit and not-for-profit businesses, make ethical decisions about how employees’ professional and personal lives interact (e.g., should employees be allowed to use their own personal devices?). And governments make decisions about information and power (e.g., who owns tweets?). Information ethics is concerned with the subset of moral questions that concern information, though it is hard to fully divorce information from ethical considerations, and so that scope is quite broad. For example, consider questions seemingly separate from information, such as capital punishment: part of an argument that justifies its morality is one that relies on certainty of guilt and confidence in predictions about a convicted person’s future behavior. Those are inherently informational concerns, and so capital punishment is an information ethics issue.

Policy is broadly concerned with defining rules that enact a particular ethical position. For example, for the parenthetical questions above, one might define policies that say 1) yes, report all cheating, 2) no, never harass people for their beliefs, unless they are threatening physical harm, 3) yes, allow employees to use their own devices, but require them to follow security procedures, and 4) Twitter owns tweets because they said they did in the terms of service that customers agreed to. Policies are, in essence, just ideas about how to practically implement ethical positions. They may or may not be enforced, they may or may not be morally sound, and you may or may not agree with them. They are just a tool for operationalizing moral positions.

Some policies become law when they are written into legal language and passed by elected officials in governed society. In a society defined by law, laws are essentially policies that are enforced with the full weight of law enforcement. Decisions about what policy ideas become law are inherently political, in that they are shaped by tensions between different ethical positions, which are resolved through systems of political power. For example, one could imagine a more ruthless society passing a law that required mandatory reporting of cheating in educational contexts. What might have been an unenforced policy guideline in a school would then shift to becoming a requirement with consequences.

Regulations are rules that interpret law, usually created by those charged with implementing and enforcing laws. For example, suppose the law requiring mandatory reporting of cheating did not say how to monitor for failures to report. It might be up to a ministry or department of education to define how that monitoring might occur, such as defining a quarterly survey that all students have to fill out, anonymously reporting any observed cheating and the names of the offenders. Regulations flow from laws, laws from policies, and policies from ethics.

Privacy

One of the most active areas of policy and lawmaking has been privacy. Privacy, at least in U.S. constitutional law, has muddled origins. For example, the Fourth Amendment alludes to it, stating:

The right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures, shall not be violated, and no Warrants shall issue, but upon probable cause, supported by Oath or affirmation, and particularly describing the place to be searched, and the persons or things to be seized.

This amendment is often interpreted as a right to privacy, though there is considerable debate about the meaning of the words “unreasonable” and “probable”; later decisions by the U.S. Supreme Court found that the Constitution grants a right to privacy against governmental intrusion, but not necessarily intrusion by other parties.

Many governments, lacking explicit privacy rights, have passed laws granting them more explicitly. For example, in 1974, the U.S. government passed the Family Educational Rights and Privacy Act (FERPA), which gives parents access to their children’s educational records up to the age of 18, but also protects all people’s educational records, regardless of age, from disclosure without consent. This policy, of course, had unintended consequences: for example, it implicitly disallows things like peer grading, and only vaguely defines who can have access to educational records within an educational institution. FERPA strongly shapes the information systems of schools, colleges, and universities, as it requires sophisticated mechanisms for securing educational records and tracking disclosure consents to prevent lawsuits.

More than two decades later in 1996, the U.S. government passed a similar privacy law, the Health Insurance Portability and Accountability Act (HIPAA), which includes a privacy rule that disallows the disclosure of “protected health information” without patient consent. Just as with FERPA, HIPAA reshaped the information systems used in U.S. health care (Baumer et al., 2000).

Just a few years later, the U.S. government passed the Children’s Online Privacy Protection Act (COPPA), enacted in 1998 and in effect since 2000, which requires parent or guardian consent to gather personal information about children under 13 years of age. Many organizations had been gathering such information without consent, did not know how to seek and document consent, and violated the law for years. Eventually, after several regulatory clarifications by the Federal Trade Commission, companies had clear guidelines: they were required to post privacy policies, provide notice to parents of data collection, obtain verifiable parental consent, provide ways for parents to review data collected, secure the data, and delete the data once it is no longer necessary. Because getting “verifiable” consent was so difficult, most websites responded to these rules by asking for a user’s birthdate, and disabling their account if they were under 13 (Reyes et al., 2018).
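The birthdate check that many websites adopted can be sketched in a few lines. This is only an illustration of the age-gate idea described above, not a compliant implementation: the names `COPPA_MIN_AGE`, `age_on`, and `may_create_account` are hypothetical, and a self-reported birthdate is not "verifiable" consent.

```python
from datetime import date

# Assumption for illustration: accounts are refused below this age.
COPPA_MIN_AGE = 13


def age_on(birthdate: date, today: date) -> int:
    """Return a person's age in whole years on the given day."""
    years = today.year - birthdate.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birthdate.month, birthdate.day):
        years -= 1
    return years


def may_create_account(birthdate: date, today: date) -> bool:
    """Hypothetical age gate: allow sign-up only at 13 or older."""
    return age_on(birthdate, today) >= COPPA_MIN_AGE
```

A site using a gate like this never collects parental consent at all; it simply turns away anyone who reports being under 13, which is why the approach is easy to build and easy to circumvent.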

Perhaps the most sweeping privacy law has been the European Union’s General Data Protection Regulation (GDPR), passed in 2016. It requires that:

- Data collection about individuals must be lawful, fair, and transparent;

- The purpose of data collection must be declared, and the data must only be used for that purpose;

- The minimum data necessary should be gathered for the purpose;

- Data about individuals must be kept accurate;

- Data may only be stored while it is needed for the purpose;

- Data must be secured and confidential; and

- Organizations must obtain unambiguous, freely given, specific, informed, documented, and revocable consent for the data collection.

This law sent strong waves through the software industry, requiring it to spend years re-engineering its software to be compliant (Albrecht, 2016).
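To give a sense of what that re-engineering can involve, here is a minimal sketch of how software might record consent so that three of the requirements listed above (a declared purpose, limited storage time, and revocable consent) can be checked before data is used. The `ConsentRecord` class and its fields are assumptions made for illustration; they are not drawn from the GDPR text or from any real system, and passing this check would not by itself make software compliant.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of one person's consent for one purpose."""
    subject_id: str
    purpose: str          # the declared purpose of collection
    granted_on: date      # when consent was documented
    retain_until: date    # last day the data is needed for the purpose
    revoked_on: Optional[date] = None

    def revoke(self, when: date) -> None:
        """Record that the person withdrew consent on the given day."""
        self.revoked_on = when

    def permits(self, purpose: str, when: date) -> bool:
        """Check whether data may be used for `purpose` on day `when`."""
        if purpose != self.purpose:
            return False  # purpose limitation: no reuse for other ends
        if self.revoked_on is not None and when >= self.revoked_on:
            return False  # consent was withdrawn
        # storage limitation: only between grant and end of retention
        return self.granted_on <= when <= self.retain_until
```

For example, a record created with the purpose `"order_shipping"` would return `False` from `permits("marketing", ...)`, and would return `False` for any date on or after a call to `revoke`.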

Privacy law, of course, has still been narrow in scope, neglecting to address many of the most challenging ethical questions about digital data collection. For example, if a company gathers information about you through legal means, and that information is doing you harm, is it responsible for that harm? Many have begun to imagine “right to be forgotten” laws (Korenhof and Koops, 2014; Rosen, 2011).

Property

While information privacy laws have received much attention, information property laws have long been encoded into law. Consider, for example, U.S. copyright law, which was first established in 1790. It deems that any creator or inventor of any writing, work, or discovery gets exclusive rights to their creations, whether literary, musical, dramatic, pictorial, graphic, auditory, or more. This means that, unless you state otherwise, anything that you write down, including a sentence on a piece of paper, is automatically copyrighted, and that no one else can use it without your permission. Of course, if they did, you probably would not sue them, but you could, especially if it was a song lyric, an icon, or a solution to a hard programming problem, all of which might have some value worth protecting. This law effectively makes information, in particular embodiments, property. This law, of course, has had many unintended consequences, especially as computers have made it easier to copy data. For example, is sampling music in a song a copyright violation? Is reusing some source code for a commonly known algorithm a copyright violation? Does editing a copyrighted image create a new image? Most of these matters have yet to be settled in the U.S. or globally.

Trademark law is closely related, allowing organizations to claim words, phrases, and logos as exclusive property. The goal of the law is to prevent confusion about the producers of goods and services, but it includes provisions to prevent trademarks for names that are offensive in nature. This law leads to many fascinating questions about names, and who gets rights to them. For example, Simon Tam, a Chinese-American man, started a band and decided to call it The Slants, to reclaim the racial slur. When he applied for a trademark, however, the trademark office declined his application because the name was deemed offensive, as it was disparaging to people of Asian descent. The case went to the Supreme Court, which eventually decided that the First Amendment promise of free speech overrode the trademark law.

Speech

As we have noted throughout this book, information has great potential for harm. Information can shame, humiliate, intimidate, and scare; it can also lead people to be harassed, attacked, and even killed. When such information is shared, especially on social media platforms, who is responsible for that harm, if anyone?

In the United States, some of these situations have long been covered by libel, slander, and defamation laws, which carve out exceptions to free speech to account for harm. Defamation, in this case, is the general term covering anything that harms someone’s reputation; it is libel when it is written and published, and slander when it is spoken. Generally, defamation cases must demonstrate that someone made a statement, that the statement was published, that the statement caused harm to reputation, that the statement was false, and that the type of statement made was not protected in some other way (e.g., it is legal to slander in courts of law).

Prior to the internet, it was hard for acts of defamation to reach scale. Mass media such as newspapers were the closest, where early newspapers might write libelous stories, and be sued by the subject of the news article. But with the internet, there are many new kinds of defamation possible, as well as many things that go beyond defamation to harassment. One example is cyberbullying (Grigg, 2010).

Defamation laws also have little to say about what responsibility social media platforms have in amplifying these forms of harassment. There are, however, laws that explicitly state that such platforms are not responsible. Section 230 of the Communications Decency Act (CDA), for example, says:

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

This protects social media platforms from any culpability in any of the harm that occurs on their platforms, defamation or otherwise. Because of consumers’ numerous concerns about online harassment, many companies have begun to attempt to self-regulate. For example, the Global Alliance for Responsible Media framework defines several categories of harmful content, and attempts to develop actions, processes, and protocols for protecting brands (yes, brands, not consumers). Similarly, in 2020, Facebook launched its Oversight Board, often described as a “Supreme Court,” to resolve free speech disputes about content posted on its platform.

All of these concerns about defamation, harassment, and harm are fundamentally about how free speech should be, how responsible people should be for what they say and write, and how responsible platforms should be when they amplify that speech.

Identity

We began this chapter by discussing the contested nature of gender identity as information, and how policy, law, and regulation are being used to define on what basis individual rights are protected. That was just one example of a much broader set of questions about information about our identities. For example, when do we have a right to change our names? Do we have a right to change the sex and gender markers on our government identification, such as birth certificates, driver’s licenses, and passports? When can our identity be used to decide which sports teams we can play on, which restrooms we can use, whether we can serve in the military, and who we can marry?

Gender, of course, is not the only contested aspect of our identities, and it intersects with other aspects of identity (Snorton, 2017).

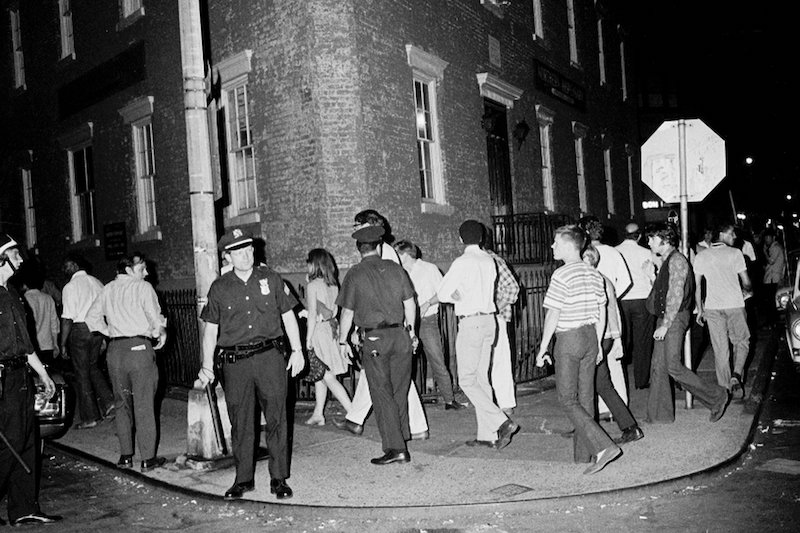

In many countries, the ethics, policy, law, and regulation around gender, race, and citizenship have been less focused on granting rights and more on taking them away. The U.S. Constitution itself only counted 3/5ths of each enslaved Black person in determining representation in the House. Women did not have the right to vote until 1920, long after the end of U.S. slavery. Most transgender people in the world, including most in the U.S., still do not have rights to change their legal name and gender without proof of surgery that many trans people do not want or need. What progress has been made came after years of activism, violence, and eventually law: the Emancipation Proclamation and the 13th Amendment ended slavery during and after the Civil War; the 19th Amendment granted women the right to vote after one hundred years of activism; the Civil Rights Act of 1964 outlawed discrimination on the basis of race, color, religion, sex, and national origin only after years of anti-Black violence; and the Obergefell (2015) and Bostock (2020) cases granted LGBTQ people rights to marriage and rights to sue for employment discrimination. All of these hard-won rights were fundamentally about information and power, ensuring everyone has the same rights, regardless of the information conveyed by their skin, voice, dress, or other aspects of their appearance or behavior.

These four areas of information ethics are of course just some of many information ethics issues in society. The diversity of policy, legal, and regulatory progress in each demonstrates that the importance of moral questions about information does not always correspond to the attention that society gives to encoding policy ideas about morality into law. This all but guarantees that as information technology industries continue to reshape society according to capitalist priorities, we will continue to engage in moral debates about whether those values are the values we want to shape society, and what laws we might need to reprioritize those values.

Podcasts

Interested in learning more about information ethics, policy, and regulation? Consider these podcasts, which investigate current events:

- Who Owns the Future?, On the Media. Discusses the U.S. Justice Department’s anti-trust case against Facebook.

- Unspeakable Trademark, Planet Money. Discusses what rights people have to trademark names and how these rights intersect with culture and identity.

- The High Court of Facebook, The Gist. Discusses Facebook’s long-demanded formation of an independent body for making censorship decisions.

- A Vast Web of Vengeance, The Daily, NY Times. Discusses a specific case of online harassment and defamation, the harm that came from it, and how the law shielded companies from responsibility for taking the defamatory content down.

- The Facebook Whistle-Blower Testifies, The Daily, NY Times. Explores the impact of the testimony on possible regulation.

References

- Jan Philipp Albrecht (2016). How the GDPR will change the world. European Data Protection Law Review.

- David Baumer, Julia Brande Earp, and Fay Cobb Payton (2000). Privacy of medical records: IT implications of HIPAA. ACM SIGCAS Computers and Society.

- Anne Fausto-Sterling (2000). Sexing the Body: Gender Politics and the Construction of Sexuality. Basic Books.

- Dorothy Wunmi Grigg (2010). Cyber-aggression: Definition and concept of cyberbullying. Australian Journal of Guidance and Counselling.

- Paulan Korenhof and Bert-Jaap Koops (2014). Identity construction and the right to be forgotten: the case of gender identity. The Ethics of Memory in a Digital Age.

- Patricia Yancey Martin (2004). Gender as social institution. Social Forces.

- Irwin Reyes, Primal Wijesekera, Joel Reardon, Amit Elazari Bar On, Abbas Razaghpanah, Narseo Vallina-Rodriguez, and Serge Egelman (2018). "Won’t somebody think of the children?" Examining COPPA compliance at scale. Privacy Enhancing Technologies.

- Jeffrey Rosen (2011). The right to be forgotten. Stanford Law Review.

- Michael J. Sandel (2010). Justice: What's the right thing to do? Macmillan.

- C. Riley Snorton (2017). Black on both sides: A racial history of trans identity. University of Minnesota Press.