Sapience

George Mobus

The University of Washington Tacoma,

Institute of Technology

Part 1. An Introduction to Sapience

Part 2. The Relationships Between Sapience, Cleverness, and Affect

Part 3. The Components of Sapience

Part 4. The Neuroscience of Sapience

Part 5. The Evolution of Sapience

Part 4. The Neuroscience of Sapience

Overview

Some Necessary Caution

A first caveat. While in the prior installments I constructed a framework for thinking about the basis of what we call wisdom that is very different from those previously presented in psychology, I managed to keep it constrained by actual psychological work on that subject. I may have flirted with speculation in the areas of systems and strategic perspective, but I don't think I strayed too far from observation of human thinking and behavior. In what follows there is necessarily a great deal more speculation involved, simply due to the nature of the underlying science: the science of the human brain. In recent years a tremendous amount of information about the frontal lobes, and especially the prefrontal cortex, has come to light, and the pace seems to be accelerating. So, while I will attempt to stick close to what is known about brain function with respect to the functional components of sapience, you should recognize that this is getting toward extreme conjecture!

My second caveat: the material presented here is assembled from book sources rather than primary literature (journal articles), and so it is going to be dated. In other words, the field is much farther advanced than represented in these sources. I have endeavored to keep track of some of the important latest work and to use those findings to guide the integration of what is in the books. Nevertheless, the rate of research results these days is staggering, as is the volume of material. On the plus side, new research should always be considered tentative, and so it raises some uncertainty with respect to interpretation. Thus reliance on books by highly regarded authors seems a fair basis for anything that is, itself, speculative. I hope you will bear with me.

What We Will Cover

The intent of this installment is to map the components of sapience, explicated in the prior installment, onto brain functions. This ranges from neural circuits, starting with how neural nets might represent percepts, concepts, and models, to how specific brain regions might organize and process tacit knowledge (organized as systems perspectives) for strategically controlled and morally motivated judgments.

For those less familiar with brain anatomy or neurobiology, I will provide as many Wikipedia references (for easy and quick tutorials) and general reading suggestions as I can, for greater background than can be contained in this work. I'll try to be gentle in my expectations of what the general reader knows about neurobiology, but at some point it will be necessary for those with no background who are interested in understanding these concepts better to dig deeper on their own. An excellent resource for those who want to become more acquainted with cognitive neuroscience is the comprehensive book by Baars and Gage (2000). And if you are looking for a very readable treatment of the subject with wonderful graphics, look at Rita Carter's "Mapping the Mind" (1999).

Neural Basis of Systems Representation and Models

Representing Causal Relations In Neurons

Amazing as it may sound, the capacity to represent the world out there with networks of neurons inside the head begins with the tiniest bit of neural machinery, the synapse, the connecting point in communications from one neuron to another. During a particularly fruitful time in the 1980s a number of artificial neural network (ANN) models were developed. These were computer simulations of what many researchers believed to be a semblance of what goes on in the brain. They were necessarily quite simple and treated synapses as scalar weights. This never seemed quite right to me, and I pursued a different route, which I describe below.

Most of the work on artificial neural networks centered on a concept called “distributed representation” (Rumelhart, et al, 1986; Hanson & Olson, 1990). This term describes a scheme in which pattern encoding is distributed among all synaptic junctions in a fully connected network (see the diagram in the Wikipedia article on ANN above). The main idea was that every synapse in such a network participated, in some small way, in the encoding of every pattern that the network was trained to recognize. This actually worked for relatively small sets of homogeneous patterns (e.g. recognizing individual faces from a large library of faces). But the concept ran into trouble for very large sets of patterns or for heterogeneous patterns. One of the main problems had to do with the amount of time it would take to train the networks. At least one mathematician determined that as a network grew in size to accommodate larger problem sets, the time it took to train it grew exponentially. This means that such networks are severely limited in what they can learn to represent.
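To make the idea of distributed representation concrete, here is a minimal sketch using a Hopfield-style auto-associative network, one classic ANN of that era (not necessarily the kind of network in the works cited above). Patterns are stored with a Hebbian outer-product rule, so every weight ends up holding a small contribution from every stored pattern. The function names and the two 8-unit patterns are hypothetical, and this one-shot storage rule sidesteps the iterative training whose cost is discussed above; it only illustrates the "every synapse participates" idea.

```python
def train_hopfield(patterns):
    """Store +1/-1 patterns with the Hebbian outer-product rule.

    Every off-diagonal weight w[i][j] accumulates a term from every pattern,
    so the encoding is spread across the whole weight matrix.
    """
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, probe, sweeps=5):
    """Asynchronously set each unit to the sign of its weighted input."""
    s = list(probe)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


# Two hypothetical (mutually orthogonal) 8-unit patterns.
a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, 1, -1, -1, 1, 1, -1, -1]
w = train_hopfield([a, b])

noisy_a = [-1] + a[1:]   # first unit flipped
noisy_b = b[:7] + [1]    # last unit flipped
print(recall(w, noisy_a) == a, recall(w, noisy_b) == b)
```

Here `w[0][1]` equals 0.25 because both stored patterns contribute to it; no single weight "belongs" to either pattern, yet each corrupted probe settles back to its stored pattern.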

The early researchers pushing the distributed representation paradigm were convinced that their first successes meant that the brain actually stored information the way their model networks did. There was a long and deep debate over distributed representation versus what is called local representation, the idea that a limited number of neurons encode specific patterns. That debate has been largely settled of late by the recognition that specific clusters of neurons do fire differentially in response to specific patterns presented to the sensory system. For example, we now know (from work done in monkeys) that there are neurons that fire whenever a generic face (even the ‘happy face’) is presented. Another set of neurons fires whenever the face of a generic monkey is presented. Still another set fires when the face of a conspecific is presented, and so on. When the latter is the case, all of the prior clusters are also firing, suggesting that 1) there is a hierarchy of representation from the generic down to the specific, and 2) patterns are indeed represented locally rather than distributed throughout the brain. As I will show below, this local representation is actually just a focal point for specific concepts (encoded patterns). It also turns out, however, that the total feature representation of a specific pattern is distributed, but only among local clusters at lower levels in the hierarchy.

While the ANN work was receiving so much attention in the 1980s and 90s (and it actually continues to dominate some neurological thinking even today), I felt dissatisfied with the lack of biological realism in the models. Synaptic weights did not seem to me to adequately represent the dynamics of what was then known to occur in real synapses (Alkon, 1987). So I set about trying to formulate a computer model that did a better job of emulating biological synapses.

In work that I did in the early 90s I built a computer simulation model of a more biologically realistic synapse, which I dubbed the Adaptrode (Mobus, 1994; and see below). The main feature of the Adaptrode as a mechanism for learning is its ability to capture associative information at multiple time scales through a reinforcement mechanism. What this means is that the Adaptrode could record a short-term memory trace from incoming action potentials and, if that recording were reinforced by a signal coming through a second channel shortly after, it would record an intermediate-term memory trace at a somewhat weaker level. The second trace, while weaker, nevertheless held the memory for a longer period of time. Then, after a longer time had passed, if yet another signal arrived via a third (or even fourth) channel, the memory trace ended up in a long-term form.

In other words, the Adaptrode mechanism, when incorporated into a simulated neuron, allowed that neuron to have short-, intermediate-, and long-term memory traces recording the association between two or more external sources. In the above referenced book chapter (actually an extract from my PhD dissertation) I showed that the Adaptrode could emulate Pavlovian conditioning. I further showed that such conditioning is a necessary part of any representation of causal relations.

A causal relation is of the general form:

$$A \Rightarrow_{C} B \quad \text{if} \quad T_A <_{\mathrm{int}} T_B$$

where events A and B are observed in near proximity, and $<_{\mathrm{int}}$ indicates that the time of event A, $T_A$, comes before the time of event B, $T_B$, by a small interval.

There are more specific forms of such relations. For example, a stochastic form allows events A and B to be probabilistic, but the above ordering must hold more often than not. There can also be restrictions placed on the relation, such as that B must never precede A in time. However, these are just ways to formally capture what we all readily perceive when we say that A causes B.
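The causal relation and its stochastic variant can be checked against logged event times. The sketch below assumes events are recorded as lists of timestamps and treats "a small interval" as a hypothetical `window` parameter. It uses a loose version of the ordering restriction (B shortly before A must be less common than A shortly before B) rather than the strict "never", and the bell and food times are made up for illustration.

```python
def causal_support(times_a, times_b, window):
    """Fraction of B events that follow some A event by at most `window`."""
    if not times_b:
        return 0.0
    hits = sum(
        1 for tb in times_b
        if any(0 < tb - ta <= window for ta in times_a)
    )
    return hits / len(times_b)


def looks_causal(times_a, times_b, window, threshold=0.5):
    """Stochastic reading of 'A causes B': A-shortly-before-B must hold more
    often than not, and more often than the reverse ordering."""
    forward = causal_support(times_a, times_b, window)
    backward = causal_support(times_b, times_a, window)
    return forward > threshold and forward > backward


# Hypothetical event logs (in seconds) for a Pavlovian-style pairing.
bell = [0, 10, 20, 30]
food = [1, 11, 21, 35]
print(causal_support(bell, food, window=2))  # 3 of 4 food events follow a bell
print(looks_causal(bell, food, 2), looks_causal(food, bell, 2))
```

Requiring `forward > backward` is one simple way to encode the asymmetry of cause and effect; a stricter version would demand that `backward` be exactly zero.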

One of the more interesting versions of causal relations is that of circular causality (see the above reference to causality). Most of us are satisfied with a simple A-causes-B kind of explanation for things, like hitting the cue ball with the stick causes it to roll and hit the target ball in billiards. In such situations we are happy with a unidirectional flow of causality. But we might just ask, what caused one to hit the cue ball in the first place? Most would imagine a chain of causal relations going back to something like the person wanting to get the target ball into a corner pocket. Our explanation can go even further back and suggest that the individual wanted to play the game, and so on. But few will ever say something like: the memory of the target ball going into a pocket, when the cue ball strikes it at just the right angle, causes the person to hit the ball! In other words, something about the ball and pocket (an effect) is actually part of the cause of hitting the cue ball.

This, possibly strained, example is an instance of circular causality, wherein an effect loops back somehow to be a component cause of the event that caused it. The notion of circular causality is an absolute no-no in most logics, but it goes on all around us all the time. A causes B, which causes C, which feeds back to affect A, and so on. In the billiard example, if the person misses her shot, that fact feeds back into her memory and her brain tries to build a more correct aiming strategy for the next time she shoots. If she finds herself repeating the same kind of shot, she might do a better job of it.
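The feedback half of the billiard example can be sketched as a simple negative-feedback loop. The shooter's systematic aiming bias, the correction `gain`, and the angles below are all hypothetical; the point is only that the miss (an effect of the aim) loops back to change the next aim (its cause).

```python
def practice_shots(target, bias, gain=0.5, shots=6):
    """Return the miss on each shot of a shooter who corrects her aim.

    The aim causes the outcome; the outcome's error feeds back to change
    the aim, closing a causal loop.
    """
    aim = target                  # first attempt: aim straight at the target
    errors = []
    for _ in range(shots):
        outcome = aim + bias      # hypothetical systematic aiming bias
        error = outcome - target  # the effect: how far the shot missed
        errors.append(error)
        aim -= gain * error       # feedback: the miss adjusts the next aim
    return errors


print(practice_shots(target=30.0, bias=4.0))
```

With a gain of 0.5 the miss halves on every shot (4, 2, 1, 0.5, 0.25, 0.125 degrees), which is the "doing a better job of it" on repeated shots.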

It turns out that an ability to represent causal relations is essential to building models of how things work. Causal relations are also captured in simple algebraic functions such as y = ƒ(x). This is interpreted as: the value of y changes with, and as determined by, changes in the value of x. The function associates two variables, x and y, where the latter is considered the dependent variable. Hence, a change in x 'causes' a change in y. What the Adaptrode allowed me to do was to build neural circuits (networks of neurons) that not only represented such relations but also learned those relations and their strength of association over very long time frames. For example, my Mavric robotic experiments showed that a wandering robot could learn to associate one combination of sound and light with reward (well, robot reward) and another combination with harm (Mobus, 2000), and then always approach the reward combination while avoiding the harm combination. Thus the robot learned to represent cause and effect by associating a sensed phenomenon with a reinforcing signal, either reward or punishment (see the Mavric link for details).

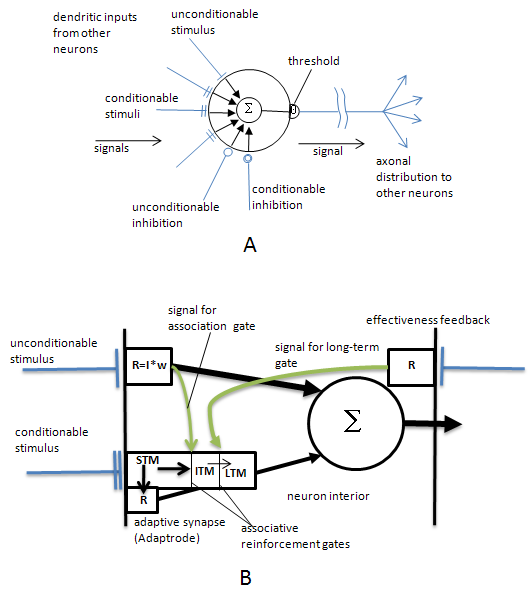

Representing causality is something neurons can do quite well. Figure 1 shows a stylized neuron (Fig. 1A) and a schematic of what happens inside the neuron to cause associative (and causal) adaptation to occur (Fig. 1B).

Figure 1. An adaptable (learning) neuron (A) and how it works (B). See text for details.

The neuron in Fig. 1A receives multiple excitatory and inhibitory inputs from many other neurons in the network. The single-barred terminus, labeled “unconditionable stimulus”, brings a non-adaptable stimulus signal to the neuron. This signal is generally highly effective in contributing to the overall excitation level of the neuron. The circle with a Σ is a spatiotemporal integrator (actually the cell membrane) that adds all of the incoming signals and sends the current level of excitation to the Θ threshold in the axon hillock. If the summation exceeds the threshold, the cell fires output action potentials, whose frequency is proportional to the fluctuating excitation of the cell. Both excitatory and inhibitory inputs can contribute. The double-barred termini represent adaptable (meaning plastic) synapses (inputs) that can become stronger in their influence under conditions of sustained inputs as well as temporally correlated excitation from the unconditionable stimulus. If any of these synapses become stronger, they can begin to cause the neuron to fire even in the absence of the unconditionable stimulus. Thus the neuron can come to represent a learned pattern of inputs, firing whenever that pattern is present in the input array.

Figure 1B details how the learning occurs and hints at the role of time in the adaptation process. Here we see an unconditionable and a conditionable stimulus together. The diagram represents signaling circuits (actually internal flows of chemical concentrations!) inside the neuron. Just inside each synapse is a compartment that responds to the input signals. In the figure the response of a synaptic signal, R, is the synaptic efficacy or weight (w) times the real-time input signal (I, the frequency of action potentials), that is, R = w × I. That response, in turn, signals the integrator. In the case of the unconditionable input, the weight, w, is a high and fixed value, meaning that the synapse is competent to cause the neuron to fire by itself, what Daniel Alkon calls a “flow-through” synapse (Alkon, 1987). Such synapses carry important semantic information. In the Pavlovian dog-salivation conditioning experiment, this signal was the smell of food, while the conditionable signal was the ringing of the bell.
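The summation-and-threshold behavior of Fig. 1A, with each synaptic response computed as weight times input rate, can be sketched as a simple rate neuron. The threshold, the weights, and the normalized input rates are illustrative assumptions, and treating the output rate as simply proportional to excitation once the threshold is crossed is a simplification of real spike generation.

```python
THETA = 1.0        # hypothetical firing threshold at the axon hillock
UCS_WEIGHT = 2.0   # hypothetical strong, fixed "flow-through" weight


def synaptic_response(w, rate):
    """R = w * I: efficacy times input firing rate (normalized to 0..1)."""
    return w * rate


def neuron_output(excitatory, inhibitory, theta=THETA, gain=1.0):
    """Integrate synaptic responses and fire above threshold.

    excitatory, inhibitory: lists of (weight, input_rate) pairs.
    Returns an output rate proportional to the total excitation once it
    exceeds theta, and 0.0 otherwise.
    """
    excitation = sum(synaptic_response(w, r) for w, r in excitatory)
    excitation -= sum(synaptic_response(w, r) for w, r in inhibitory)
    return gain * excitation if excitation > theta else 0.0


food_alone = neuron_output([(UCS_WEIGHT, 1.0)], [])          # fires on its own
bell_before = neuron_output([(0.05, 1.0)], [])               # near-zero weight: silent
bell_after = neuron_output([(1.5, 1.0)], [])                 # strengthened: fires
bell_inhibited = neuron_output([(1.5, 1.0)], [(1.0, 1.0)])   # inhibition wins
```

The only thing that changes between `bell_before` and `bell_after` is the conditionable weight, which is exactly the quantity the learning mechanism adjusts.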

The conditionable synapse, immediately beneath the unconditionable one, is inherently weak to begin with; its weight value is near zero, and so it cannot fire the neuron. Note that the compartment behind this synapse has three sub-compartments representing three stages in the evolution (dynamics) of a memory encoding. Initially w is low. A steady (strong) input signal will tend to elevate the w value as a short-term memory (STM), but as soon as the signal falls off, w will decay again toward its initial low level. However, it takes a bit of time for this decay to occur. During that time, should the unconditionable stimulus cause the cell to fire, it also opens a ‘gate’ that allows whatever the value of w is at that instant to change a similar weighting value in the intermediate-term memory area (ITM). This means that a short-term memory trace is saved for a longer time (the decay in the ITM area is much, much slower than in the STM). What has happened is that an association between the conditionable and unconditionable signals has been established. Moreover, the way these synapses work, the conditionable signal has to occur a short time interval before the unconditionable signal; otherwise the gate stays locked shut. What this means is that the conditionable signal represents something that is “causally” associated with the unconditionable signal (or whatever generated it). The conditionable signal becomes, in a very real sense, a predictor of the unconditionable signal.

Over an even longer time scale, if the firing of the neuron sets into motion some downstream activity, that activity will provide excitatory (or possibly inhibitory) feedback to the cell, either at another synapse or through neuromodulators. That feedback can then open a second gate that allows the STM to raise the w value in the long-term memory area (LTM). Thus the memory trace becomes associated not just with an original semantic signal, but also with longer-term rewards (or possible punishments). Because of the time lags involved, these associations carry a strongly causal inferential character. The conditionable synapses are locked out from encoding associations if either the unconditionable signal or the feedback signal comes before the conditionable signal in real time.
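The three-stage gating described above can be sketched as a toy synapse with short-, intermediate-, and long-term weights. This is a hypothetical simplification written to follow the verbal description here, not the published Adaptrode equations: the class name, the rise and decay rates, and the rule that each level decays toward the level below it are all assumptions, and the gates are simple boolean flags. It does reproduce the ordering property: presenting the conditionable input before the unconditionable one leaves an intermediate-term trace, while the reverse order leaves none.

```python
class ToyMultiTimeScaleSynapse:
    """Hypothetical three-level synaptic weight: [STM, ITM, LTM]."""

    def __init__(self, rise=(0.5, 0.5, 0.5), decay=(0.2, 0.01, 0.0)):
        self.w = [0.0, 0.0, 0.0]
        self.rise = rise
        self.decay = decay

    def step(self, x=0.0, ucs=False, feedback=False):
        stm, itm, ltm = self.w
        a0, a1, a2 = self.rise
        d0, d1, d2 = self.decay
        # Conditionable input pushes the short-term weight toward 1.
        stm += a0 * x * (1.0 - stm)
        # Gate 1: the unconditionable stimulus lets the current STM value
        # raise the intermediate-term weight (it never lowers it).
        if ucs and stm > itm:
            itm += a1 * (stm - itm)
        # Gate 2: later feedback lets the STM value raise the long-term weight.
        if feedback and stm > ltm:
            ltm += a2 * (stm - ltm)
        # STM relaxes toward ITM and ITM toward LTM, so slower traces act as
        # floors; LTM decays toward zero at rate d2 (0 by default).
        stm -= d0 * (stm - itm)
        itm -= d1 * (itm - ltm)
        ltm -= d2 * ltm
        self.w = [stm, itm, ltm]
        return stm  # effective efficacy w used in R = w * I


def run(schedule, steps=20):
    """schedule maps a time step to keyword arguments for step()."""
    syn = ToyMultiTimeScaleSynapse()
    for t in range(steps):
        syn.step(**schedule.get(t, {}))
    return syn.w


cs = {"x": 1.0}
forward = run({0: cs, 1: cs, 2: cs, 3: {"ucs": True}})       # bell, then food
backward = run({0: {"ucs": True}, 1: cs, 2: cs, 3: cs})      # food, then bell
rewarded = run({0: cs, 1: cs, 2: cs, 3: {"ucs": True}, 8: {"feedback": True}})
print("ITM forward/backward:", forward[1], backward[1])
print("LTM without/with feedback:", forward[2], rewarded[2])
```

In the backward case the gate opens while the short-term weight is still at its floor, so nothing is transferred, which mirrors the lockout described in the text.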

Over many recurrences of these temporal associations of signals, the conditionable synapse will develop a much stronger weighting (efficacy) that will allow it to contribute significantly to the cell firing on its own. Typically, in large pyramidal neurons with thousands of inputs, it will be a pattern of many synapses (coming from multiple sources) that builds up enough strength that collectively they can cause the neuron to fire in the absence of semantic inputs. But the principle of temporal encoding shown above is operative in these cases (see Mobus, 1992, for more details).

Armed with the notion that small neuronal circuits can capture and represent causal relations as well as general associations (pattern recognition), it is just a small step to develop a theory of how dynamic systems models are constructed using neural circuits. System dynamics modeling gives us a clue. Such models are, in fact, networks of components ('stocks') connected by dynamic directional links ('flows') that implement feedback loops and, yes, causal relationships. Networks of living neurons, or rather small neural circuits called cortical columns, are able to learn to represent perceptual patterns (like faces) and to associate patterns to form meta-patterns, or concepts. The latter are not just static representations, however. The causal dynamic described above allows these concepts to interact with one another as models of how their real-world counterparts interact. The invocation of one concept (or percept, for that matter) can invoke related concepts. We experience this as things coming to mind, or being reminded. When we think of that beautiful sunset, we also think about our lover.
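For readers unfamiliar with system dynamics, a single stock with one feedback-driven flow shows the basic ingredients. The sketch assumes a constant inflow and an outflow proportional to the stock (a balancing loop), integrated with simple Euler steps; the function name and parameter values are hypothetical.

```python
def simulate_stock(inflow, k, level=0.0, dt=1.0, steps=50):
    """Euler-integrate one stock with a constant inflow and an outflow
    proportional to the stock (a balancing feedback loop)."""
    history = [level]
    for _ in range(steps):
        outflow = k * level                # the flow depends on the stock
        level += (inflow - outflow) * dt   # the stock accumulates net flow
        history.append(level)
    return history


history = simulate_stock(inflow=2.0, k=0.5)
print(history[:4], round(history[-1], 6))
```

The stock settles at inflow / k (here 4.0) because the outflow it drives grows until it cancels the inflow; keeping k × dt below 1 avoids overshoot in this crude integration.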

Representing Concepts in Neural Networks

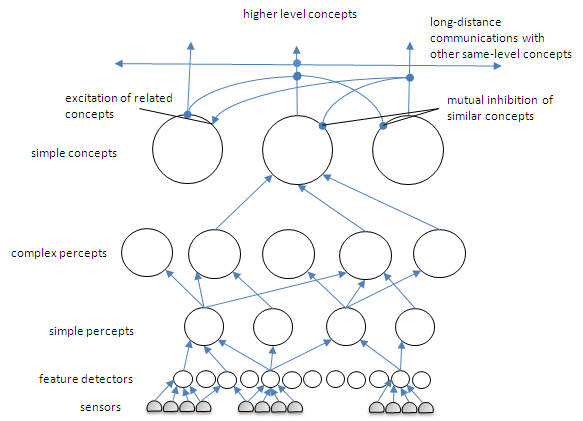

The representation of concepts is accomplished in a hierarchical fashion (see Fig. 2 below). That is, small bits of representation, called features, generate a percept (either sensory driven or mentally activated) when they form a consistent spatiotemporal pattern. The links between the feature detectors and the percept-representing neuron cluster are learned in precisely the manner given above. A small cluster of neurons might be activated when a given set of features is active, and the cluster learns (as a unified group) that the particular set of features 'means' that percept. Pavlovian conditioning actually provides an example of the attachment of meaning to arbitrary causal associations. Pavlov's dogs learned to associate a bell with the availability of food in their near future (seconds later). The bell had no intrinsic meaning to the dogs, but it came to have meaning when paired in this manner with food, which does have meaning. The bell caused the dogs to salivate as if food were present. We can readily model this association of arbitrary events or patterns with meaningful stimuli or previously learned meaningful concepts. Indeed, I suspect that this is at the heart of what Damasio called 'somatic marking' (Damasio, 1994) and what I have referred to as semantic tagging.

Figure 2. Hierarchical representation of the world in features, percepts, and concepts.

In the above figure, sensory inputs come in from below to activate feature recognizers (as an aside, ANNs employing fully distributed representation can actually be used as local feature detectors in simulations of brain circuits!). The detectors for those features of the world that are present in the sensory field (e.g. in the visual field) are activated and, in turn, activate the perceptual field above. Percept clusters (each represented by a single circle, but not to be taken as a single neuron) are activated when the set of features mapped to that cluster is active. The connecting lines in the figure are actually complex channels that provide two-way activation, i.e. from above or from below (see Fig. 3 below). There are numerous adjunct neurons in these channels that prevent runaway activation, but these are not shown here. Also note that some features are shared between several or even many percepts. The lines shown are the channels that exist after learning has formed the mappings. Only a few maps are shown in the figure.

A quick note on memories and their location in the brain. In the above diagram and the last paragraph I indicate that the connections between clusters are recurrent. That is, the lower clusters can be activated from higher clusters and vice versa. During sensory stimulation the flow of information is from the bottom, more 'primitive' clusters to more integrated ones (features to percepts to concepts). However, during thinking or imagery recall the flow can go in the other direction, from higher-level concepts to lower-level features. We now know from imaging studies (e.g. fMRI) that memories are not formed from simple clusters at one point in the cortex. Rather, recall of memories excites the same sensory areas of the brain involved in perceptual tasks. Perceptual memories are learned from repeated or reinforced bottom-up activations, and perceptual recognition proceeds from bottom-up mappings. Once learned, however, when a higher-level concept is activated during cognition, it can send downward a wave of (presumably milder) excitation that retraces the bottom-up mapping in reverse. So memories are found to be diffuse and to reuse portions of the sensory and integrative cortices.
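The bottom-up and top-down flows can be sketched with two small lookup tables standing in for learned mappings. Everything here is hypothetical: the feature, percept, and concept names, the all-or-none matching threshold for bottom-up recognition, and the attenuation factor used for the (presumably milder) downward excitation during recall.

```python
# Hypothetical learned mappings; note "low_pitch" is shared by two percepts.
PERCEPT_FEATURES = {
    "bark": {"low_pitch", "burst"},
    "growl": {"low_pitch", "rumble"},
    "fur": {"soft_texture", "brown"},
    "whistle": {"high_pitch", "tonal"},
}
CONCEPT_PERCEPTS = {
    "dog": {"bark", "fur"},
    "kettle": {"whistle"},
}


def bottom_up(active_features, threshold=1.0):
    """Perception: a cluster fires when enough of its inputs are active."""
    percepts = {p for p, fs in PERCEPT_FEATURES.items()
                if len(fs & active_features) / len(fs) >= threshold}
    concepts = {c for c, ps in CONCEPT_PERCEPTS.items()
                if len(ps & percepts) / len(ps) >= threshold}
    return percepts, concepts


def top_down(concept, attenuation=0.5):
    """Recall: a concept re-excites its percepts and, more weakly still,
    their features, reusing the clusters that perception uses."""
    activation = {concept: 1.0}
    for p in CONCEPT_PERCEPTS[concept]:
        activation[p] = attenuation
        for f in PERCEPT_FEATURES[p]:
            activation[f] = max(activation.get(f, 0.0), attenuation ** 2)
    return activation


seen = {"low_pitch", "burst", "soft_texture", "brown"}
print(bottom_up(seen))    # percepts bark and fur; concept dog
print(top_down("dog"))
```

Recalling "dog" re-excites the same feature entries that perception would use, which is the reuse of sensory clusters described above; the shared "low_pitch" feature alone is not enough to fire "growl".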

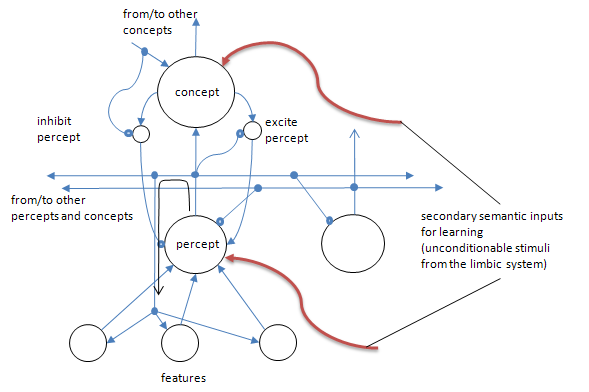

Figure 3. Some greater detail than provided in Fig. 2 shows that the excitation of higher level clusters (e.g. concepts) can feed back to lower level clusters that comprise the components of patterns that activate the cluster in bottom-up (perceptual) processing. However, the downward activation can be driven from yet higher level clusters. For example, a meta-concept, or other associated concepts (not shown) can activate a concept that then sends recurrent signals down to the percepts that are part of the bottom-up activators of that concept. In turn, percepts can activate the feature set that would activate them from the bottom-up. Thus perceptual experiences activate concepts from the bottom up, while higher or associated concepts activate lower level percepts/features from above. This mechanism enables single cell clusters to encode complex objects and relations by reusing the lower level clusters. This recurrent wiring helps to explain why areas in the perceptual cortex are activated even when someone is just thinking about an object rather than perceiving it. The two smaller clusters represent associated control neurons that either drive downward activation or inhibit positive feedback from upward activation. The open circular termini are inhibitory synapses. The solid dots on lines (axons) are used to denote connection of multiple lines. Also note the red inputs from the limbic system. These are affective-based unconditionable stimuli to the cortical neurons that, in essence, tell the cells when there is something important that needs to be encoded as per the description of synaptic learning above.

In a recursive fashion, sets of percepts that have been activated from their various feature maps activate the concepts to which they are mapped. Concepts are complex versions of percepts that take other inputs into account, for example, other concepts. The channel arrows above the concept level represent communications between various concepts. These can be excitatory, as when concepts have been learned in association. Or they can be inhibitory, as when concepts are mutually exclusive or clash.

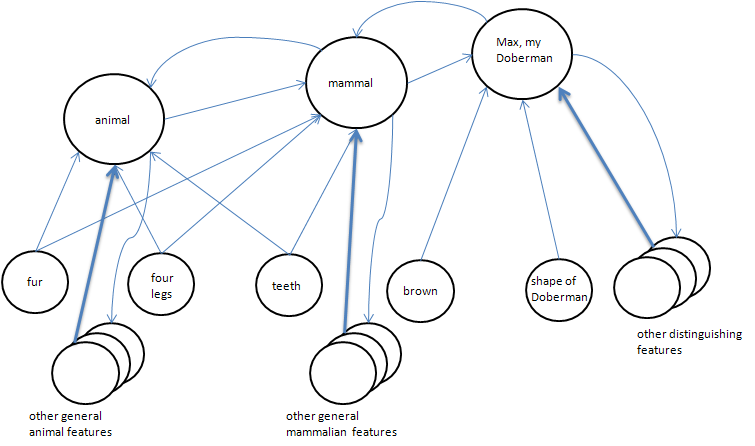

Concepts that intercommunicate can form meta-concepts. For example, a 'dog' is a meta-concept. It groups all animals having certain perceptual characteristics (aggregates of features) in common into a category of things. A specific dog, your pet Fido, has some unique instances of those characteristics which you recognize. Yet the uniqueness of your pet does not preclude your understanding that it is, after all, a dog in the more generic sense. This ability to categorize and generalize while maintaining specificity of instances is probably a general feature of mammalian mentation (and possibly of some of our avian cousins as well).

Figure 4. Features and concepts form super-clusters or meta-concepts. Feedback signals help reinforce the relations between such clusters over time using the learning mechanism covered above. Thinking about my dog Max (a hypothetical, since I don't currently own a dog) activates all of the associated concepts, e.g. mammal, animal, etc., as well as numerous features that contribute to Maxness. If I were to physically see (perceive) Max, these features would be activated, driving activation up the chain to my concept-of-Max cluster. These kinds of associations allow us to answer such questions as: Is Max a mammal?
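The question "Is Max a mammal?" can be answered by spreading activation along learned associations, sketched here as a breadth-first walk over a hypothetical is-a graph. The graph, the per-link decay of 0.5, and the cutoff floor are assumptions for illustration; real cluster activations would also involve the feature links and inhibitory connections described above.

```python
from collections import deque

# Hypothetical "is-a" associations learned between concept clusters.
IS_A = {
    "Max": ["dog"],
    "dog": ["mammal"],
    "cat": ["mammal"],
    "mammal": ["animal"],
    "animal": [],
}


def spread(start, decay=0.5, floor=0.05):
    """Breadth-first spreading activation: each link passes on a fraction
    (decay) of its source's activation; passes weaker than floor stop."""
    activation = {start: 1.0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        passed = activation[node] * decay
        if passed < floor:
            continue
        for nxt in IS_A.get(node, []):
            if passed > activation.get(nxt, 0.0):
                activation[nxt] = passed
                queue.append(nxt)
    return activation


def is_a(thing, category):
    """Answer 'Is thing a category?' by checking whether activating the
    thing's concept cluster reaches the category's cluster."""
    return spread(thing).get(category, 0.0) > 0.0


print(spread("Max"))
print(is_a("Max", "mammal"), is_a("Max", "cat"))
```

Activation weakens with each link, so more distant generalizations (animal) are reached more faintly than close ones (dog), while unrelated concepts (cat) are never reached from Max.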

All of these structures are composed of myriad neural clusters with many neurons participating in forming dynamic cluster activations when a concept is activated in the mind (even if subconsciously). Neurons and new sub-clusters can join and leave these structures over time as learning takes place. New associations can be made at all levels and old associations can fade if not reinforced. Moreover, old associations can be inhibited in the case where new learned associations provide contradictory or dampening weight to the various activations. The structure of neural representation is in constant flux as new associations are learned. Some are so often reinforced with new experiences that they become essentially permanent in long-term encoding (changes in synaptic morphology suggest that some connections develop long-term stability).

As life goes on we form larger-scale conceptual networks (networks of networks of networks...) to represent more abstract concepts like cities and corporations. We don't really have a good idea of the capacity of the brain to form these fractal structures, that is, what their largest scale might be. But we do know that more intelligent brains can form more complex structures at more abstract levels than average ones can. This is part of general intelligence. Creativity plays into this by providing ways in which brains can form novel, if temporary, meta-concepts at abstract levels and explore the applicability of these structures.

Constructing Concepts and Percepts in Specific Brain Regions

Learning associative linkages and forming ever more elaborate networks of sub-networks appears to go on throughout the entire cerebral cortex. However, the functions of different regions put this capacity to different uses. The basic concept formation process that I have just described appears to take place primarily in the forward (anterior) portion of the parietal lobes and the temporal lobes — the integrative processing parts of the cerebral cortex. These regions are responsible for integrating sensory features to form percepts and at least the first layers of concepts. It isn't clear where more abstract concepts are formed, but a likely candidate is regions within the frontal lobes.

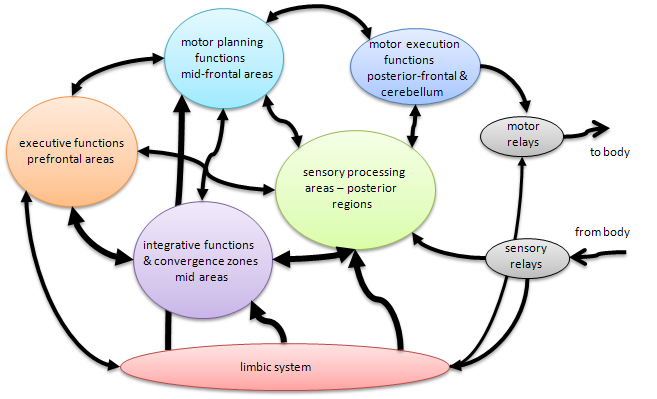

Figure 5. Brain functions and a rough map of information flows. See explanation in text.

Superimposed on this formation of conceptual structure is the sensory-thinking-motor processing that constitutes the major activity of the cortex. Sensory information comes in through more posterior structures. Primary visual processing, for example, is handled in the occipital lobes. In general, the more posterior portions of the lobes (except for the frontal lobes) process sensory input patterns, building percepts. The more central regions (except in the occipital and frontal lobes) integrate multi-sensory percepts, forming or activating early concepts. These, in turn, are the stuff of scene recognition and understanding: determining what is immediately in one's environment from sight, sound, touch, smell, etc. All of this is forwarded to the frontal lobes, where decisions are made about what to do given the situation in the environment along with the current state of the self and any drives and motives already present. These decisions then loop back to the posterior frontal areas, sometimes called the premotor cortex, where they activate motor intentions that eventually culminate in some kind of action, even if only the generation of new thoughts without overt activity.

This description is extremely rough and probably overly simplistic in leaving out a tremendous amount of detail. But such is the problem of conveying a general idea in this limited format! Below I will try to be a little more specific about brain regions involved in specific aspects of sapience. Here I just wanted to provide a grand tour of the cortex as it pertains to encoding knowledge and using that knowledge in more-or-less routine behavior. These parts of the cortex are responsible for learning about the world (concepts and models) and using that knowledge to act in the world. Below I look at a few specific features of the brain that are involved in affecting decisions that help us act well in the world we learn about.

The Seat of Sapience: The Prefrontal Cortex

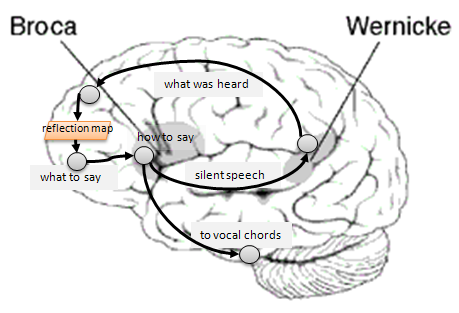

The posterior regions of the frontal lobes are designated as the premotor cortex. This is where intentional behaviors and movement planning are coordinated. When you reach for a glass of wine, this region organizes the sequence of movements and sets in motion the motor control programs (possibly 'run' in the cerebellum) that perform the behavior. The anterior portion of the frontal lobes is called the prefrontal cortex and has been designated as organizing the 'executive' functions (Goldberg, 2001; Goldberg & Bougakov, 2007). These are the functions associated with higher cognition and consciousness. This is the region of the brain where plans are formulated, memory is managed, and mentalese is translated into an interior narrative in the language of speech. When you 'listen' to your interior monologue (thinking to yourself), your prefrontal cortex is driving the formulated thoughts from working memory into the speech area of the frontal lobe, Broca's area, where the preformation of voiced sounds is initiated and syntactical structures (concepts) are formed. The posterior portion of Broca's area lies in or near the temporal lobe, where integration of the sensory inputs occurs. Thus a posterior portion of that lobe, in conjunction with the parietal lobe, feeds the auditory area of the brain, Wernicke's area, where the reverberations of what would otherwise be sounds (voice) are 'heard' in the head. The brain forms this phonological loop because those reverberations are then interpreted and end up back in the frontal lobe. The prefrontal cortex, particularly the reflective map that was introduced in Part 3, is having an ongoing conversation with itself in the same language that the individual uses to communicate with others. But this conversation is merely the tip of the proverbial cognitive iceberg.

The underlying sub- and pre-conscious thinking activity, which is not summarized in spoken language, comprises a much greater volume of conceptual organization. It involves the generation of temporary concept hierarchies and sequences (some novel) in working memory, followed by the filtering and reorganizing of those temporary thoughts under the guidance of the mental models of the world already established (as described above). Presumably much more of this pre-conscious shuffling and construction takes place than ever surfaces to the level of internal voice generation.

Figure 6. The internal conversation loop (phonological loop) involves Wernicke's area, where heard language is processed, receiving echoes from the speech production area of the frontal lobe, Broca's area. The same channels from Broca's area, along with control signals from the cerebellum, go to the vocal cords, tongue, diaphragm, and other voicing muscles, but are presumably blocked when you are thinking to yourself. When you are talking to yourself aloud, Wernicke's area probably receives both actual sound inputs and internal (non-voiced) inputs. [Brain figure thanks to Wikipedia.]

It is likely that the prefrontal cortex, which is greatly expanded in humans relative to other apes, provides the main organizing circuitry for building and adjusting our models of how the world works. Once called the 'silent' lobes, because their functions were not obvious, the frontal lobes are now known to be involved in taking in the situation in the world from our sensory integration cortices, using our mental models to interpret the situation as well as to integrate our inner drives and goals, and formulating behavioral responses based on anticipated results or a change in the situation. It is the prefrontal region which is primarily responsible for controlling this activity. The output of the behavioral plan is then pushed back into the premotor regions for action to be initiated. This, at least, accounts for voluntary behavior. As discussed below, involuntary or reflexive behaviors have different origins and triggers.

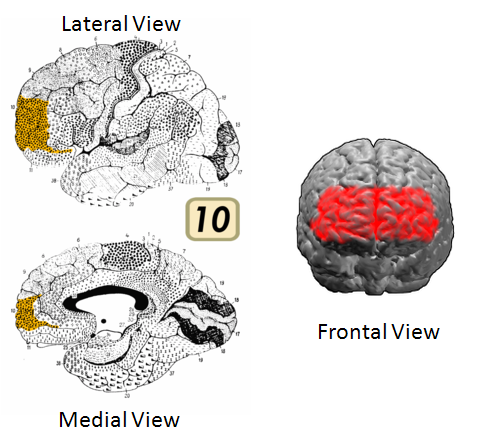

The cortex has been mapped into smaller regions based on cytoarchitectonic considerations (the organization of cellular structures and the distribution of cell types) and, to some degree, on functional ones (see Brodmann area maps). Many executive functions have been identified through psychological testing and have subsequently been correlated with the activity of specific areas in the prefrontal cortex. One area that has remained tantalizingly elusive is Brodmann area 10 (BA10), the frontal pole of the brain (see Fig. 7). It is likely that this area is the most recent to expand in extent, and it may have been the most recently modified, cytoarchitectonically, in the evolution of humans. From the initial results emerging from cognitive neuroscience regarding activity in this area, I strongly suspect that it represents the highest level of control over all other areas of the brain.

Figure 7. Brodmann Area 10 is the foremost (frontopolar) region of the prefrontal cortex. It is strongly implicated in high-order executive tasks such as judgment and moral reasoning. It appears to be the final convergence zone for all other prefrontal areas as well as many other cortical and limbic brain areas. [Source: Wikipedia]

The prefrontal cortex is reentrantly wired to every other part of the brain, including the brain stem nuclei where the most primitive (operational-level) controls are located. The prefrontal areas collect information from all areas of the brain and send recurrent projections back to those areas. Thus, to conclude that the prefrontal cortex is the seat of strategic and higher-order tactical/logistical control is not unwarranted. And BA10, the polar region, is itself connected to every other part of the prefrontal cortex. Hence the strong suspicion that this patch of tissue is responsible for the highest level of strategic control of the organism is not without merit.

I should point out that I am not saying that BA10 is solely responsible for sapience, in the sense that all of the processing I have been talking about (conscious and subconscious) goes on just in BA10. Rather, I think of BA10 as having executive coordination control over all of the other parts of the prefrontal cortex, and these, in turn, are involved in controlling all of the processing activities that collectively produce an integrated sapience function. This vision of the functional aspects of prefrontal cortex architecture is in keeping with the hierarchical representation scheme presented above. In a real sense, concepts encoded into BA10 are the ultimate meta-concepts that tie everything together. The more competency this region has, the more comprehensive these meta-concepts will be. All of the other brain regions encode and process their own kinds of knowledge and would continue to do so if BA10 were eliminated (as has happened in certain brain lesion accidents). Indeed, there is reason to believe that eliminating BA10, or reducing its effectiveness, does not diminish intelligence as we normally think of it.

My thesis, specifically, is that the expansion and differentiation of BA10 in Homo sapiens greatly increased the level of strategic perspective which, in turn, increased the existing moral sentiments and systems perspective in support of high-order judgments and intuition. I will reopen that discussion in Part 5 on the evolution of sapience.

Ongoing substantial research on the cognitive functions of the prefrontal cortex is producing elegant results regarding the nature of consciousness and elucidating the 'I' in phrases like: “I think, therefore I am.” My hope is that neuroscientists will see value in a framework for thinking about human psychology in terms of sapience as the epitome of strategic (executive) control and its role in integrating moral sentiment with systems-oriented perceptual/conceptual model construction. Below I argue for how this might be accomplished.

Affect and Moral Sentiments

The limbic system is the more central region of the brain, often called the primitive mammalian brain. It preceded the neocortex evolutionarily and overlies the most primitive parts of the brain stem. In short the limbic system is responsible for a wide array of functions that involve distribution of sensory inputs to the various sensory cortices (thalamus), early warning of environmental contingencies that have emotional content (amygdala), laying down long-term memory traces in the cortex (hippocampus), and numerous other logistic and tactical control functions. In particular, the limbic system is responsible for reporting affective state to the prefrontal cortex. In other words, we feel our emotional state by virtue of the limbic monitors reporting that state to our consciousness. Note that our emotional state is usually determined by our environmental situation prior to our becoming aware of it. First we lose our temper and then we realize we are angry and need to assess what caused us to be so. The same is basically true for all the other primary emotions.

The basis of positive moral sentiment seems to be the twin affective drives of altruism and empathy. It is not clear, at present, what basic layout in the limbic system gives rise to these drives. They seem to be motivated by more primitive drives, indeed the most primitive drives: seeking resources and mates, and avoiding dangers (Mobus, 1999). These two most primitive drives (found in all motile creatures, including bacteria!) are augmented in more complex animals with additional drives such as rage, panic, and sex drive. In yet more advanced organisms, caregiving and play (in mammals and some birds) round out the set of drives (see McGovern, K. in Baars and Gage, 2007, Chapter 13, pp. 369-388).

Seeking behaviors are driven by associations of physical sensations with rewards. For example, the taste of a food generates a reward loop, with dopamine delivered to associator neurons to 'bless' the association, that is to validate the substance as a food so that it will be sought after in the future (see my robot brain explanation of how I got MAVRIC to emulate this behavior.)
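The 'blessing' of an association by dopamine is often described in general terms as a three-factor learning rule: a synapse changes only when presynaptic activity, postsynaptic activity, and a reward signal coincide. The Python sketch below illustrates that idea under loudly simplifying assumptions (a single synapse, all-or-none activity, an arbitrary learning rate and weight cap). It is not the mechanism used in MAVRIC, and the names `update_weight` and `train` are hypothetical.

```python
# Hypothetical three-factor rule: weight change ~ pre * post * reward.
LEARNING_RATE = 0.2  # assumed value, for illustration only
MAX_WEIGHT = 1.0     # assumed upper bound on synaptic strength


def update_weight(weight: float, pre: float, post: float, dopamine: float) -> float:
    """Strengthen a synapse in proportion to pre * post * dopamine.

    With dopamine == 0.0, co-activity alone leaves the weight unchanged:
    the reward signal is what 'blesses' the association.
    """
    return min(weight + LEARNING_RATE * pre * post * dopamine, MAX_WEIGHT)


def train(trials: int, rewarded: bool) -> float:
    """Pair a taste cue with an approach response; reward only real food."""
    weight = 0.0
    for _ in range(trials):
        pre, post = 1.0, 1.0                 # cue and response neurons both fire
        dopamine = 1.0 if rewarded else 0.0  # reward arrives only for food
        weight = update_weight(weight, pre, post, dopamine)
    return weight
```

Comparing `train(3, rewarded=True)` with `train(3, rewarded=False)` shows the point of the third factor: identical co-activity produces a growing association only when it is followed by reward.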

These basic drives underlie the biasing of perceptual and conceptual systems in the neocortex. We saw this in the Relationships (Part 2) installment. We will see that the neocortex provides an important set of new facilities to match these basic drives to social behavior. But there seems to remain a puzzling middle piece to the story of how basic drives translate into social behaviors, especially in animals with simpler brains. The origin and mechanisms of eusociality in insects, for example, cannot rely on mirror neurons (to be discussed below) such as those found in neocortical tissue. Pre-mammalian taxa contain many examples of social behavior, such as schools of fish, so sociality must have a deep mechanism in the primitive brain stem.

By the time we get to mammals, we find many social species that do not depend on, say, instinctive behaviors per se, or on division of labor to the extent seen in the eusocial insects. What we do see are altruistic and mimicking behaviors that emerge from the primitive cortex and are later expanded in the neocortex. So we are not yet in a position to identify the brain structures directly involved in going from primitive drives to social behavior, although a link has been suggested between social behavior and disgust, the automatic reaction to bodily excreta and rotten food. It may be that we will soon have more insight into how primitive drives are linked to our more evolved emotional centers in the limbic system.

There has been much progress in connecting more advanced affective modules in the limbic system to the basis of moral reasoning in the neocortex. These 'more advanced' structures include the hippocampi and the amygdalae (from above) as well as the cingulate cortex (see below), especially with its strong connectivity with the frontal lobes (Damasio, 1994; LeDoux, 1996). A great deal is now known about the iterative processing that takes place between the limbic centers and the frontal lobes and most especially the prefrontal cortex.

There is reason to believe that the moral instinct in humans is a capacity analogous to that for language acquisition. That is, just as all normal humans have the capacity and inclination to learn language at a young age, acquiring the vocabulary and grammar of their native tongue without explicit instruction, so too all normal humans have a built-in moral instinct that becomes particularized to a given culture. By this theory, all humans have a built-in sense that there are right and wrong social behaviors, a capacity to experience empathy with other minds, and an instinctive drive to cooperate with familiar conspecifics in social networks. It is fairly certain that the motivation and mechanisms for language acquisition are built into our brains; if there is an analogous situation with respect to the acquisition of morals (i.e., a moral sentiment that moves us to acquire specific moral codes), then morality is essentially built in.

By built in, of course, I mean that genetic propensities exist which guide the early development of the brain to hard-wire these instinctive tendencies into the limbic areas and ensure communication with the appropriate cortical regions. The cortical regions are where the learning of complex concepts allows one to learn the specific rules of a given society: what constitutes good and bad behavior within the context of that society. The impetus to belong to the group tends to bias our behavioral decisions toward what that group counts as good and away from what it counts as bad. Of course, it is sometimes tempting to do something that would otherwise be counted as bad (cheating) because, for biological creatures seeking self-gratification, it can be advantageous if the behavior can be pulled off without getting caught. Our brains have evolved an elaborate set of filters for self-inhibition (regulation) as well as for detecting cheating in other members of the group.

The basis of our moral (and ethical) reasoning, processed in the neocortex, is grounded in evolved instinctive behaviors that allow us to form strong social bonds and functional structures. The essence of morality is how we tend to treat others within our group and those outside our group. The adaptive capacity of the human neocortex is substantial, as evidenced by our ability to belong to different 'groups' and to expand our sense of groupness beyond mere tribal levels (150 to 200 people according to some estimates) to include large institutions (e.g. our work or religious affiliations), states and nations, and perceived racial affinities (Berreby, 2005). Our brains have allowed us to behave socially with strangers with whom we perceive a common membership in some conceptual group (Seabright, 2004). Nevertheless, there are limits. It is just as easy to perceive an exclusionary set of attributes that make the 'other(s)' seem inferior, even non-human, allowing more aggressive tendencies to emerge. Such perceptions, if driven by emotional forces from the limbic system, readily lead to all kinds of horrors (as judged from the outside by uninvolved observers). This is very likely an evolutionary holdover from our Pleistocene ancestors, their tribal organizations (groups), and the effects of group selection that favored in-group altruism and out-group suspicion and hostility (Sober & Wilson, 1998).

Currently, one of the most interesting developments in understanding the brain mechanisms underlying social behavior and moral reasoning is the possible basis for empathy in specific neuronal structures called 'mirror neurons', recently discovered in primate cortex. There is, in fact, a mirror system that has been shown to be activated both when an individual is performing some specific act and when that same individual is observing the same behavior in a different individual. Work is underway to understand the role of these neuronal systems in empathetic thinking (cf. theory of mind). It will be interesting to see whether more definitive mechanisms of this sort can be discovered, which would further help us understand how moral sentiments, originating in the hard-wired limbic centers, affect judgment and reasoning, and, just as importantly, how the latter can modulate, alter, or even activate those centers.

So far as sapience is concerned, it is my hypothesis that the prefrontal cortex, especially the frontopolar region, is responsible for bringing a large body of tacit knowledge about good and evil, right and wrong, costs and benefits, and many other dichotomies to bear on the decisions that must be made to maintain the social order when problems emerge. Because groups are composed of many highly variable individuals, each with a strong sense of autonomy, a generally unique array of desires and motivations, and a distinct personality type, problems (conflicts) are certain to arise. The degree to which a strongly sapient individual can bring wisdom to the judgment process, motivated by strong, positive moral values, may often mean the difference between success and failure of the group. I will explore this from an evolutionary perspective in the final installment.

Primitive to Higher-order Judgment

Every time you make a decision (conscious or unconscious!), no matter how trivial or 'local' it may be, some portion of your brain is applying judgment while another part is applying affect to bias that decision. Most of us go through daily life making mostly trivial decisions. What to eat, what news story to read, what to wear, etc. are the stuff of daily life. Most of the time these decisions are made subconsciously, without our thinking much about them. Even when the number of choices is larger (do I want Mexican, Chinese, Thai, ...?) and we spend a little time actively engaged in analysis (what do I feel like eating?), we don't go at it with any kind of rigor. We just decide based on what we feel.

Low-level or primitive judgment refers to the evolutionarily early application of learned preferences to guide decision processing. Some brain studies suggest that the anterior cingulate cortex (ACC) plays a role in conflict resolution and in mediating between the affect centers and the neocortex. It is conceivable that this region of the primitive mammalian brain was actually responsible for early mammalian judgment processing. Today it is still involved in applying biases to lower-level decision making.

The ACC lies just beneath the frontal lobe neocortex and, specifically, near the prefrontal cortex. The latter is richly connected with the former, suggesting an integration of functions. The ACC has a rich concentration of specialized neurons called spindle cells, which are implicated in high-speed communication between the limbic system and the prefrontal cortex. In addition, the ACC is in communication with the parietal cortex as well as the frontal eye fields of the frontal cortex (implicated in eye movement control). It appears that the ACC is situated such that all of the information needed to evaluate a person's situation and make moment-to-moment judgments is available. Simple judgments may include directing the eyes (and possibly the focus of the auditory system) in deciding what to look at next.

I suspect that the prefrontal cortex extends the judgment processing role of the ACC, expanding the scope and complexity with which learned tacit knowledge is applied to decision processing. In other words, the prefrontal cortex has become responsible for higher-order judgments. The lowest sort of such judgments involve simple matters but require conscious analysis and consideration. Decisions like 'what paint color would look best in this room?' require more thought as well as awareness of emotional responses and thus, I suspect, are processed by the prefrontal cortex with 'help' from the circuitry in the ACC that already applies judgment to decision processing.

Higher-order judgment requires reflective examination of the 'problem', the factors involved, the beliefs and feelings of others (wicked problems are invariably social in nature), one's own feelings and desires, and, most importantly, how choices may play out in the future. Thus the prefrontal cortex is the orchestrator for bringing all of the intelligence, creativity, and affective resources to bear on our models of how the world works and on the possible outcomes of different choices. Only some of this reflection need be done in conscious awareness. More likely, a larger portion of judgment processing takes place in the subconscious mind and only some time later comes into conscious awareness, more or less fully formed. When judgment is completed subconsciously, the decision is taken without conscious reflection. When it surfaces into awareness, the ACC may be responsible for promoting comparative processing in the prefrontal areas to resolve differences.

In this model, the neocortical regions of the prefrontal cortex are essentially accreted onto the more primitive paleocortex, adding substantially more processing and representational power to handle far more complex problems.

I cannot leave this discussion without pointing out that while high-order judgments improve the efficacy of decisions in general, they are still subject to many biases. In Part 3, under the heading “Efficacious Models”, I discussed several systemic biases that obtain in most people's judgments because the brain relies heavily on heuristic processes (see next section) to come to quick (and sometimes dirty) conclusions. The quickness can be a benefit in most ordinary circumstances, where speed is more valuable than precision. This certainly applies to many kinds of survival decisions, where it is often alright to err on the side of caution and react quickly. These heuristics, however, still dominate much of human judgment. Ordinary (that is, average) sapience does not have the ability to force every decision to be made contemplatively (nor should it). But it also still seems weak when it comes to judging which judgments should be contemplated before they affect decisions. This is likely the domain of the 2½-order consciousness that I introduced in Part 3 (Fig. 3). This ‘ultimate’ reflective map has the job of judging judgments and, if sufficiently developed, determining when heuristic-based biases are potentially damaging to good decisions. It would then direct judgment processing to kick into second-system (rational processing) mode to filter out the effects of biases to a greater degree. Not all biases are likely to be filtered out, and those that remain may persist at reduced strength, but the judgments should be greatly improved. As an example, consider the effect that mood has on judgments. It is well known that mood (happy or sad) can bias judgments (Schwarz, 2002).
But it is also known that when people are shown that their current mood stems from something unrelated to the subject of the judgment, many of them can discount that mood and arrive at better judgments than they would have otherwise. In other words, it is possible to trigger more rational judgment processing in the particular case of mood biases. Hence we know the mental processing power is available. But this also shows that people do not always self-monitor their judgment processing and recognize that their mood: 1) is not attributable to the current problem; and 2) will influence judgments unless contemplation is initiated. This is evidence of the relative weakness of sapience that still prevails in most people.

Mood-based biasing is just one of many forms of bias that result from heuristic processing, which attempts to speed the decision process along. It has been shown that mood-based biases can be mitigated to some extent by conscious attribution. But this may not be the case for all such biases. Indeed, it has been shown that some forms of bias cannot be mitigated simply by calling attention to the possibility of biases entering the judgment process. What we are left with, for the present, is an understanding that heuristic processes leave the sapient (higher-order) judgment process vulnerable to mistakes. There is some evidence that this need not always be so, but such vulnerability is generally the rule rather than the exception (except in exceptional cases, which we recognize as wisdom!). This inherent weakness in sapience will become a topic of discussion in Part 5, where I discuss the evolution of sapience and its future.

Systems Intelligence

In the section above on Neural Basis of Systems Representation and Models I laid out a scheme for how networks of neurons might reasonably wire up (through learning) to form representations of things and processes, including a mechanism for representing causal relations. This was left as a basis for building concepts (and actually percepts as well). The relationship to 'models' was not further developed. In this section I want to further explore how dynamic models can be built and 'run' in brain tissue since such models are the basis for understanding how sapience models systems in the world outside the head, and, as it turns out, inside the head as well.

Heuristic Programming in the Brain

In computing we build models of real-world systems by writing programs. A program is, at base, just a set of steps, like a recipe, that collectively do three basic things: change a memory variable's value using arithmetic operations, perform input/output operations, and branch. Branching instructions cause a program to take one of two different tracks of steps, based on the condition of a value resulting from an arithmetic operation (e.g., whether a value is positive or negative). Typically, computer programs are algorithmic. That means the steps are a) unambiguously defined; b) finite in number; and c) produce a clear answer, usually within a reasonable time frame. Algorithms are very definite and specific. They can be implemented in computers because the nature of logic elements is matched to the need for definiteness and specificity. Algorithms, and their implementation in computer programs, very clearly solve mathematically and logically well-defined problems.

There is another kind of programming that can be simulated in a computer but better describes what can go on in living neural tissue: heuristic programming. Heuristics are generally described as 'rules of thumb' in that they are not guaranteed to produce a definite result. It turns out that far more real-world problems cannot be specified precisely enough for an algorithm to be found that would solve them. In such cases it may still be possible to design a program involving heuristics that finds a possible, even probable, (sometimes approximate) solution to the underspecified problem. Motor (muscle) control turns out to be of this nature. Muscles are not like solid gears and machine motors. They are deformable under stress, yet resilient and pliant. They do not respond exactly to electrical stimulation, although a bundle of muscle cells will perform reasonably well as an averaged response.
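To make the contrast with an algorithm concrete, here is a minimal Python sketch of a heuristic controller for an imprecise 'muscle'. The rule of thumb (command half of the remaining error each step), the noise range of the muscle's gain, and the tolerance are all illustrative assumptions, not physiology; the point is only that the procedure settles on an approximate answer rather than computing an exact one.

```python
import random


def reach(target: float, tolerance: float = 0.05, max_steps: int = 50,
          seed: int = 0) -> tuple[float, int]:
    """Move a noisy 'muscle' toward target using a rule of thumb.

    Returns (final position, steps taken). The result is approximate,
    and the procedure gives up after max_steps rather than guaranteeing
    an exact answer.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    position = 0.0
    for step in range(max_steps):
        error = target - position
        if abs(error) <= tolerance:
            return position, step      # close enough: stop here
        command = 0.5 * error          # heuristic: correct half the error
        gain = rng.uniform(0.6, 1.4)   # muscle responds imprecisely
        position += gain * command
    return position, max_steps
```

With these particular assumptions, each step shrinks the remaining error by a factor between 0.3 and 0.7, so `reach(1.0)` ends within tolerance of the target after a handful of steps, but the exact final position depends on the random gains.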

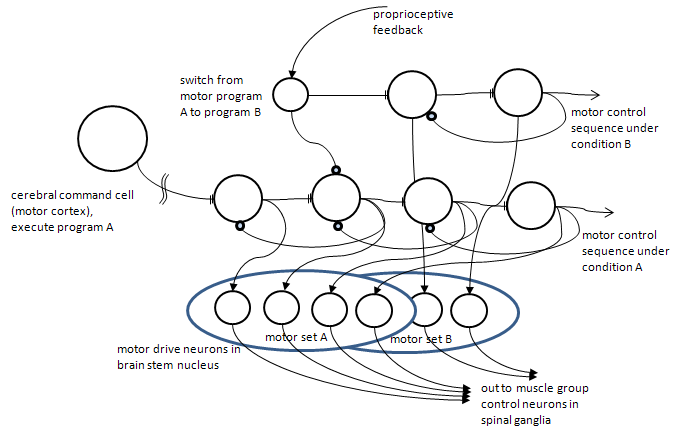

As brains evolved, and animal behaviors required more and more fine motor control in spite of the uncertainties in the response of muscle fibers, the neural circuitry of the cerebellum developed several interesting capabilities. Behaviors involve sequences of activation of different muscle groups, often balancing the responses of opponent groups (e.g., biceps vs. triceps), in order to generate coordinated movements, say of appendages. Thus motor control is essentially a program of different motor responses evolved to carry out a motion primitive (like lifting a leg). More complex programs, like walking, are built out of meta-sequences of these primitives. The higher-level control, that is, decisions about the goals of making motions, what behavior to elicit, etc., is accomplished in the motor regions of the cerebral cortex (frontal lobes), but execution control and feedback control are handled by linear circuits of neurons in the cerebellum. In other words, cerebellar circuits instantiate motor programs. More complex programs (like riding a bicycle) are actually learned; like the motor cortex, the cerebellar cortex is capable of adaptation with repetition.

Heuristic programs can be built in neural tissue in a relatively straightforward manner. Figure 8 shows a highly simplified, but biologically plausible, model of how neurons can sequence a previously learned set of steps as well as branch to an alternate step plan should circumstances dictate (through body feedback).

Figure 8. A hypothetical, but plausible, circuit of neurons for implementing a heuristic (motor) program. Note that the program is initiated by a command cell in the cerebral cortex (frontal lobe). The program is simply a sequentially activated set of neurons. In this simple version, each neuron activates the next one in line, but is subsequently inhibited by the neuron it just activated (negative feedback). In the event some (proprioceptive) body sensor detects a change in conditions that requires a new sequence, a branch to a set of cells representing that different sequence can be initiated by inhibiting the old sequence, here at step 2, and starting an alternate sequence. All of these program step neurons activate actual motor drive neurons in the brain stem. Note that these sequences are learned (the double flat termini are in keeping with Fig. 1). These kinds of circuits may be found in the cerebellum, which received scant attention in the cognitive neuroscience literature until recently.
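The logic of Figure 8 can be sketched in a few lines of Python. This is a hypothetical and highly abstracted simulation, not a neuron-level model: each step neuron is reduced to an entry in a list of motor commands, the "activate the next neuron, then be inhibited by it" chain is represented by advancing an index so that only one step is active at a time, and the proprioceptive signal is a single boolean. The function and argument names are illustrative.

```python
def run_program(main: list[str], alternate: list[str], branch_at: int,
                sensor_fires: bool) -> list[str]:
    """Simulate a chain of 'step neurons' that can branch (cf. Fig. 8).

    Each list element is the motor command driven by one step neuron.
    branch_at is the (0-based) step at which a proprioceptive signal,
    if present, inhibits the main chain and starts the alternate chain.
    Returns the motor commands issued, in order.
    """
    issued = []
    on_main, i = True, 0  # the command cell activates the first step neuron
    while True:
        sequence = main if on_main else alternate
        if i >= len(sequence):
            break                      # last step neuron has fired
        issued.append(sequence[i])     # active step neuron drives a motor neuron
        if on_main and i == branch_at and sensor_fires:
            on_main, i = False, 0      # inhibit main chain, start alternate
        else:
            i += 1  # activate the next neuron, which then inhibits this one
    return issued
```

For example, `run_program(["lift", "swing", "plant", "push"], ["step_back", "brace"], branch_at=1, sensor_fires=True)` issues "lift" and "swing" from the main sequence and then switches to the alternate sequence, mirroring the branch at step 2 in the figure; with `sensor_fires=False` the main sequence runs to completion.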

Mental Simulation Models in Neural Circuits

I have gone to some length to discuss this aspect of motor programs because it turns out that the brain can use the same basic architecture to build mental models for simulating real-world systems. These are not motor programs per se; they do not involve directing muscles to activate. Rather, they can be used to control the sequencing of concept activation back in the cerebral cortex. Recent research has shown that the cerebellum is implicated not just in motor control and coordination, but also in some cognitive processes, including the generation of speech. It isn't hard to see that motor sequencing is needed for actual phonation, but it is now thought that cerebellar circuits are involved in more complex structure formation, such as words and possibly even phrases or sentences. Other projections from the same kind of circuits are now known to innervate regions of the cortex other than Broca's area and the other motor regions, so speech production cannot be their only purpose. It is quite possible that branching program segments in the cerebellum are used to control the timing and sequence of activation of various concepts that represent real-world objects and actions.

Once one can specify a method for producing branchable sequences, everything is in place to produce more complex programs that simulate real-world dynamics. In other words, the brain has the inherent capacity, between concept representation in the cerebral cortices and timed, conditional program structures in the cerebellum, to construct and run models of the world! And running a model in fast forward is the essence of thinking about the future, of planning, and of feeding the judgment process with options.
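As a toy illustration of "running a model in fast forward", the following Python sketch uses a trivial internal model of an animal heading toward a refuge, simulates each candidate behavior a fixed number of steps ahead without acting, and hands the predicted outcomes to a simple judgment rule. Every name and number here (the two behaviors, their speeds and energy costs, the scoring rule) is a hypothetical assumption for illustration; nothing is meant as a claim about how cerebellar or cortical circuits actually encode such a model.

```python
# Hypothetical behaviors: (distance covered per step, energy cost per step).
ACTIONS = {"walk": (1, 1), "run": (3, 4)}


def step(state: tuple[int, int], action: str) -> tuple[int, int]:
    """One tick of the internal model: state is (distance to refuge, energy)."""
    distance, energy = state
    speed, cost = ACTIONS[action]
    return max(distance - speed, 0), energy - cost


def fast_forward(state: tuple[int, int], action: str, horizon: int) -> tuple[int, int]:
    """Run the model ahead for up to `horizon` ticks without acting."""
    for _ in range(horizon):
        distance, energy = state
        if distance == 0 or energy <= 0:
            break
        state = step(state, action)
    return state


def choose(state: tuple[int, int], horizon: int) -> str:
    """Judge the simulated outcomes: reach the refuge first, then save energy."""
    def score(outcome: tuple[int, int]) -> tuple[bool, int]:
        distance, energy = outcome
        reached = distance == 0 and energy >= 0
        return reached, (energy if reached else -distance)

    outcomes = {a: fast_forward(state, a, horizon) for a in ACTIONS}
    return max(outcomes, key=lambda a: score(outcomes[a]))
```

Starting from `(6, 10)`, a short horizon of 3 steps favors running (only running reaches the refuge in time), while a horizon of 10 favors walking (both reach it, but walking leaves more energy). The same model yields different choices depending on how far ahead it is run.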

Constructing Models — Guidance from the Prefrontal Cortex

What remains is to say how models of real-world systems are constructed in the first place. What guides the general intelligence system in deciding what to learn, how to organize it, and so on? These questions have yet to be answered. If I were asked to guess, and to generate hypotheses that might be tested, I would start with the assumption that the guidance is carried out by the prefrontal cortex, but using templates of systemness from deep in the limbic areas. The perception of systemness is evolutionarily ancient, I suspect. At the heart of native knowledge of how the world works must lie basic senses of the boundaries of coherent things, of dynamics, and of cause and effect assignment. This is an area of interest I wanted to pursue with my robotic models someday.

Clearly, lower animals (at least birds and mammals) show an ability to recognize 'things' in their environments. They also show a capacity to recognize processes and dynamic relationships. These abilities suggest that there is something very basic about systems representation and model building. What follows is what we know about the brain that provides clues as to how it builds dynamic models of systems that are composed of subsystems and are themselves components of meta-systems.

I haven't said anything as yet about the fact that the two hemispheres of the brain are lateralized, or functionally dual. This issue is terribly overplayed in popular psychology (left-brain/right-brain people!), but there are some obvious differences in the functions performed on either side by mature brains. One of the more interesting findings is that the left hemisphere (or at least the frontal lobes and parts of both the parietal and temporal lobes) is the site of enduring patterns of processing. Most often noted is that Wernicke's area and Broca's area ordinarily work their speech processing magic in the left hemisphere. Other evidence suggests that other routine processing modules are instantiated in the left hemisphere cortex. This leads to questions about the popularly viewed 'heart' side of the brain, the right hemisphere. Goldberg has developed a very interesting model suggesting that the right cortex is largely involved in processing novelty, or newly developed circuits: new models (Goldberg, 2001; ______, 2006). It could be that the left hemisphere, in particular the prefrontal and premotor areas of the frontal lobe, has the machinery in place to guide the construction of a model to be built in the right hemisphere, where it can be 'tried out'. The model could also arise by copying circuit relations from an existing model (from the left hemisphere) into the right hemisphere and then guiding changes[1]. This would be essentially what we mean by analogic thinking. Once a model is constructed and 'tested', perhaps validated by experience, it might be copied back into the left hemisphere for future use in routine thinking or as the basis for a new analogy.

This scheme requires a tremendous degree of plasticity in the wiring between neurons and cortical columns in the right hemisphere. If this is the case, one test of the hypothesis would be to look for dynamic and possibly amorphous (that is, dense but weakly activating) connectivity patterns in the right hemisphere. Indeed, a great deal of work on working memory involving novel task learning implicates the frontal lobe of the right hemisphere. Baars has developed a theory in which working memory is accessible to all relevant regions of the brain as a 'Global Workspace', though that idea concerns consciousness and, strictly speaking, would not apply to the subconscious processes of model building. Nevertheless, a general vision of the right hemisphere acting as a giant whiteboard, where images can be temporarily written and adjusted, is appealing. A left-hemisphere frontal lobe acting as the controller (initiating the writing, guiding the adjustments, initiating testing, and finally encoding a permanent image of a dynamic model for later automatic use) provides a compelling model of how the brain can think new thoughts (see footnote 1 for more elaboration of how this might work).
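To make the proposed division of labor a little more concrete, here is a toy Python sketch of the controller/scratchpad scheme described above and in footnote 1. It is emphatically not a neural model: concepts are assumed to be plain sets of attributes, the names `Hemispheres`, `copy_to_right`, `append_attribute` and `commit_if_better` are hypothetical, and the `score` function standing in for "validated by experience" is an assumption made only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Set


@dataclass
class Hemispheres:
    """Toy model: 'left' is a durable store, 'right' an erasable scratchpad."""
    left: Dict[str, Set[str]] = field(default_factory=dict)  # long-term models
    right: Optional[Set[str]] = None        # trial model currently being built
    right_source: Optional[str] = None      # which stored model it was copied from

    def copy_to_right(self, name: str) -> None:
        # Analogy step: start the new model as a copy of an established one.
        self.right = set(self.left[name])
        self.right_source = name

    def append_attribute(self, attr: str) -> None:
        # Controller-guided modification touches only the scratchpad copy,
        # so the long-term version in 'left' is left unchanged.
        if self.right is None:
            raise RuntimeError("no model on the scratchpad")
        self.right.add(attr)

    def commit_if_better(self, score: Callable[[Set[str]], float]) -> bool:
        # Copy back only if the trial model outscores the stored version,
        # then return the scratchpad to a blank state either way.
        if self.right is None or self.right_source is None:
            raise RuntimeError("no model on the scratchpad")
        name = self.right_source
        better = score(self.right) > score(self.left[name])
        if better:
            self.left[name] = set(self.right)
        self.right, self.right_source = None, None
        return better


if __name__ == "__main__":
    brain = Hemispheres(left={"fire": {"hot", "bright"}})
    brain.copy_to_right("fire")
    brain.append_attribute("needs_fuel")
    # Hypothetical scoring: a model with more attributes counts as "better".
    print(brain.commit_if_better(len))
    print(sorted(brain.left["fire"]))
```

Clearing the scratchpad inside `commit_if_better` mirrors the second requirement in the footnote: the trial circuits must not become permanent, so that they are free for the next model.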

Thinking, whether it results in an inner voice (that is, in consciously registered thoughts) or goes on subconsciously, has an appealing connection with premotor processing. Unlike actual motor output (movement), the command signals here direct a program for sequencing concepts and their modifications. What started out in mammals as a system for constructing sophisticated muscular choreographies spawned a system for choreographing conceptual dances, models of the world, that could then be tested on the stage of mentation.

Executive Functions and Strategic Judgment

In my series on sapient governance, I elaborated on the application of hierarchical cybernetic theory to the establishment of social governing mechanisms that might replace our current hodgepodge of mechanisms. I likened this to the control architecture of the brain, which is divided into three basic layers (the triune brain): operational, coordination (tactical and logistical), and strategic management. Now we can see that the brain is indeed a hierarchical cybernetic system, with the highest level devoted to strategic management. In the prior installment in this series, Part 3, The Components of Sapience Explained, I identified the strategic perspective as a central component in sapience psychology. It now seems plausible that we will find the seat of strategic thinking in the prefrontal cortex and, I strongly suspect, largely in Brodmann area 10 (BA10).

Strategic thinking requires that we build models of how the external environment works. This includes making decisions and judgments about what should be learned and how to go about learning it. The models are used to simulate how the world will evolve into the future under different starting conditions, particularly with respect to actions that we might take in the present. The models themselves are organized concept clusters, probably residing in the anterior parietal lobe of the right hemisphere. Their dynamics (i.e., running the models) are likely orchestrated by the premotor regions of the frontal lobes, in conjunction with choreography directions mediated by the cerebellum. The outputs from these models are analyzed by regions of the prefrontal cortex (other Brodmann areas conjoined with area 10) and supplied to BA10 for disposition and, ultimately, for making strategic (i.e., long-term) decisions. Such decisions might easily include life-changing ones, such as what I want to do when I grow up (career) or whom I want to marry. In other words, the brain has evolved a capacity to plan for the future.
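The look-ahead role attributed to these models (run the world forward under each possible present action, then pick the action with the best long-term outcome) can be illustrated with a generic model-based planning loop. This Python sketch makes no claim about how BA10 actually computes anything: the `toy_world` model, its "consume" and "invest" actions, the fixed default action assumed after the first step, and the undiscounted sum of payoffs are all hypothetical choices made for illustration.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]
# A world model maps (state, action) to (next_state, payoff).
WorldModel = Callable[[State, str], Tuple[State, float]]


def simulate(model: WorldModel, state: State, first_action: str,
             default_action: str, horizon: int) -> float:
    """Run the model forward `horizon` steps and return the summed payoff.

    The candidate action is taken now; afterwards a fixed default action
    is assumed (a deliberately crude stand-in for "business as usual").
    """
    total = 0.0
    s = dict(state)
    for t in range(horizon):
        action = first_action if t == 0 else default_action
        s, payoff = model(s, action)
        total += payoff
    return total


def choose_strategically(model: WorldModel, state: State, actions: List[str],
                         default_action: str, horizon: int) -> str:
    """Pick the present action whose simulated future scores best."""
    return max(actions,
               key=lambda a: simulate(model, state, a, default_action, horizon))


def toy_world(s: State, action: str) -> Tuple[State, float]:
    """Hypothetical world: consuming pays now, investing grows the stock."""
    stock = s["stock"]
    if action == "consume":   # take half the stock as immediate payoff
        payoff = stock / 2
        stock -= payoff
    else:                     # "invest": no payoff now, stock grows by 50%
        payoff = 0.0
        stock *= 1.5
    return {"stock": stock}, payoff


if __name__ == "__main__":
    start = {"stock": 10.0}
    options = ["consume", "invest"]
    print(choose_strategically(toy_world, start, options, "consume", horizon=1))  # consume
    print(choose_strategically(toy_world, start, options, "consume", horizon=3))  # invest
```

With a one-step horizon the immediate payoff of "consume" wins; simulating three steps ahead reverses the choice in favor of "invest". That reversal is the essence of the difference between short-term and strategic judgment described in the text.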

Strategic thinking involves asking questions such as the following:

- How is the world changing in the future?

- What goals should I set? Are they reasonable? What evidence do I have to suggest these are attainable and worthy?

- What are my assets (strengths) that I can bring to bear to meet these goals?

- What weaknesses or shortcomings do I have that would prevent me from meeting these goals?

- What assets should I attempt to obtain to help me meet these goals (sub-goals)?

- How will others react to my actions in attempting to reach these goals?

- Can I enlist others' aid or support? Will I harm anyone else?

Wisdom is often associated with those who think about the future, the long-term future. A wise person often thinks about what will be good for the future of the tribe. They think about what would be the best actions to take today to ensure a good outcome for the majority. They think strategically, not just for their own benefit but for their group's. Early hominids likely had the capacities listed above; they could think strategically for themselves. Modern humans have evolved a capacity to think strategically for many others: replace 'I' with 'we' in the list above. Or, at least, some humans seem to have this ability. True sapience surely includes this capacity to care and think for the benefit of family, friends, tribe, and even strangers. There may be a good reason, however, why this level of sapience is not widespread in the human population. I will dig further into the evolution of sapience in the next installment, but to anticipate that exploration somewhat, note that not everyone in a tribe needs to be a strategic thinker for the group. Indeed, it is best if there are few wise folk in a tribe, so that they have a better chance of reaching agreement. In other words, it seems reasonable that true sapience is not to be found in the majority of humans.

Summary

As we learn more and more about the functions of the frontal lobes, in particular the prefrontal cortices, we find that much of our unique human capacity to deal with our complex social milieu, our ability to think about the future, and to plan our actions in the present with the future in mind is associated with this remarkable region. Specifically, when we look for those qualities of mind which truly differentiate humans from other primates and all other animals, we are led to focus on the role of the frontopolar region of the brain. The patch designated as Brodmann area 10 is particularly intriguing with respect to those capacities we recognize as the basis of wisdom.

I hope that neuropsychologists will, in the not-too-distant future, concentrate on teasing out the capacities for wise decision making (judgment). I strongly suspect they will find that activity in the BA10 patch is highly correlated with making wise choices.

Footnotes

[1] Copying a neural circuit or network from one region of the brain to another relies on the same synaptic learning mechanism discussed at the outset. Neural networks representing established concepts in the left hemisphere could be activated (possibly during dream sleep) while corresponding regions in the right hemisphere that have not been committed to long-term memory encoding are simultaneously activated by long-distance axons across the corpus callosum. Subsequently, following the causal encoding rules, the just-activated right hemisphere region receives the secondary semantic signals (unconditionable stimuli) that cause the activations from the left side to become encoded in short- or intermediate-term memory traces. In this way the left side concept can be temporarily written into the right side. The prefrontal cortex can then modify the new right side model by selectively activating left side attributes and, shortly afterward, activating the semantic signals to the right side so that it appends those attributes to the newly constructed model. Thus the right hemisphere acts as a programmable memory where creative modifications can be applied without modifying the long-term memories in the left hemisphere. Two things would then be required: 1) if a new model proves more efficacious than the version currently stored in the left side, it must be copied back to the left side to replace the older version; 2) the right hemisphere must prevent long-term memory traces from forming, so that the neural circuits can be returned to scratchpad status, waiting for the next new model to be built. All of this is, of course, highly speculative. But it should be a testable hypothesis.

Bibliography and References

- Alkon, Daniel L. (1987). Memory Traces in the Brain, Cambridge University Press, Cambridge.

- Andreasen, Nancy C. (2005). The Creative Brain, Penguin, New York.

- Baars, Bernard J. & Gage, Nicole M. (eds.) (2007). Cognition, Brain, and Consciousness: Introduction to Cognitive Neuroscience, Elsevier, Amsterdam.

- Berreby, David (2005). Us and Them: Understanding Your Tribal Mind, Little, Brown, and Company, New York.

- Cacioppo, J. T., Visser, P. S., & Pickett, C. L. (2006). Social Neuroscience: People Thinking About Thinking People, The MIT Press, Cambridge MA.

- Carter, Rita (1999). Mapping the Mind, University of California Press, Berkeley CA.

- Damasio, Antonio R. (1994). Descartes' Error: Emotion, Reason, and the Human Brain, G. P. Putnam's Sons, New York.

- Fuster, Joaquín (1999). Memory in the Cerebral Cortex, The MIT Press, Cambridge MA.

- Gazzaniga, Michael S. (2005). The Ethical Brain, Dana Press, New York.

- Gilovich, Thomas, Griffin, Dale, & Kahneman, Daniel (eds.) (2002). Heuristics and Biases: The Psychology of Intuitive Judgment, Cambridge University Press, New York.

- Goleman, Daniel (2006). Social Intelligence, Bantam Books, New York.

- Goldberg, Elkhonon (2001). The Executive Brain: Frontal Lobes and the Civilized Mind, Oxford University Press, New York.

- Goldberg, Elkhonon (2006). The Wisdom Paradox, Gotham Books, New York.

- Goldberg, Elkhonon & Bougakov, Dmitri (2007). "Goals, executive control, and action", in Baars & Gage (eds.) Cognition, Brain, and Consciousness: Introduction to Cognitive Neuroscience, Chapter 12, Elsevier, Amsterdam.

- Hanson, S. J., & Olson, C. R. (eds.) (1990). Connectionist Modeling and Brain Function: The Developing Interface, The MIT Press, Cambridge, MA.

- Johnson-Laird, Philip (2006). How We Reason, Oxford University Press, Oxford.

- LeDoux, Joseph (1996). The Emotional Brain: The Mysterious Underpinnings of Emotional Life, Simon & Schuster, New York.

- Mobus, George (1992). "Toward a Theory of Learning and Representing Causal Inferences in Neural Networks", in D. S. Levine and M. Aparicio (Eds.), Neural Networks for Knowledge Representation and Inference, Lawrence Erlbaum Associates.

- Mobus, G. E. (1999). "Foraging Search: Prototypical Intelligence", Third International Conference on Computing Anticipatory Systems, HEC Liege, Belgium, August 9-14, 1999. Daniel Dubois, Editor, Center for Hyperincursion and Anticipation in Ordered Systems, Institute of Mathematics, University of Liege.

- Mobus, G. E. (2000). "Adapting robot behavior to a nonstationary environment: a deeper biologically inspired model of neural processing". Presented at Sensor Fusion and Decentralized Control in Robotic Systems III, SPIE - International Society for Optical Engineering, Nov. 7, 2000, Boston, MA.

- Rumelhart, D. E., McClelland, J. L., & the PDP Research Group (eds.) (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volumes 1 & 2, The MIT Press, Cambridge, MA.

- Schwarz, Norbert (2002). "Feelings as Information: Moods Influence Judgments and Processing Strategies", in Gilovich, et al. (2002), pages 534-547.

- Seabright, Paul (2004). The Company of Strangers: A Natural History of Economic Life, Princeton University Press, Princeton, New Jersey.

- Sober, Elliott & Wilson, David Sloan (1998). Unto Others: The Evolution and Psychology of Unselfish Behavior, Harvard University Press, Cambridge MA.