Text Entry

Just as pointing is a nearly ubiquitous form of non-verbal communication, text is a ubiquitous form of verbal communication. Every single character we communicate to friends, family, coworkers, and computers—every tweet, every Facebook post, every email—leverages some form of text-entry user interface. These interfaces have one simple challenge: support a person in translating the verbal ideas in their head into a sequence of characters that are stored, processed, and transmitted by a computer.

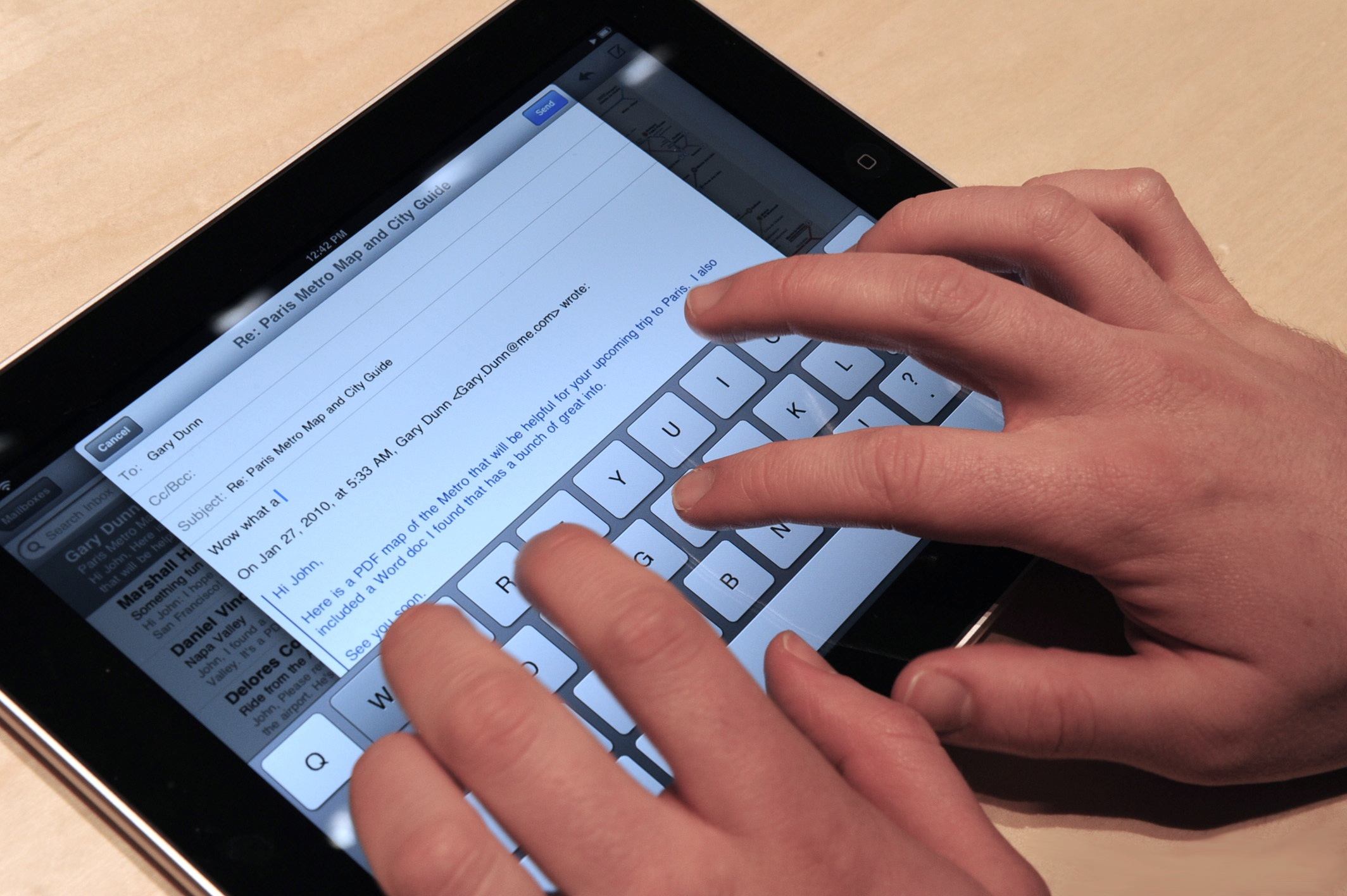

If you’re reading this, you’re already familiar with common text-entry interfaces. You’ve almost certainly used a physical keyboard, arranged with physical keys labeled with letters and punctuation. You’ve probably also used a virtual on-screen keyboard, like those in smartphones and tablets. You might even occasionally use a digital assistant’s speech recognition as a form of text entry. And because text-entry is so frequent a task with computers, you probably also have very strong opinions about what kinds of text-entry you prefer: some hate talking to Siri, some love it. Some still stand by their physical Blackberry keyboards, others type lightning fast on their Android phone’s virtual on-screen keyboard. And some like big bulky clicky keys, while others are fine with the low travel of most thin modern laptop keyboards.

What underlies these strong opinions? A large set of hidden complexities. Text entry needs to support letters, numbers, and symbols across nearly all of human civilization. How can text entry support the input of the 109,384 distinct symbols from all human languages encoded in Unicode 6.0? How can text be entered quickly and error-free, and how can interfaces help users recover from entry errors? How can people learn text entry interfaces, just as they learn handwriting, keyboarding, speech, and other forms of verbal communication? How can people enter text in a way that doesn’t cause pain, fatigue, or frustration? Many people with injuries or disabilities (e.g., someone who is fully paralyzed) may find it excruciatingly difficult to enter text. The ever-smaller devices in our pockets and on our wrists only make this harder, reducing the usable surfaces for comfortable text entry.

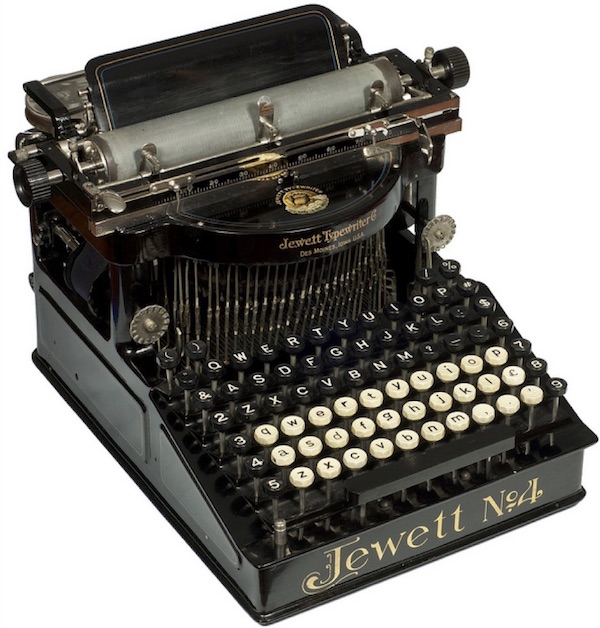

The history of text entry interfaces predates computers (Silfverberg 2007). For example, typewriters like the Jewett No. 4 shown above had to solve the same problem as modern computer keyboards, but rather than storing the sequence of characters in computer memory, they stored it on a piece of paper with ink. Typewriters like the Jewett No. 4 and their QWERTY keyboard layout emerged during the industrial revolution, when the demand for text increased.

Of course, the difference between mechanical text entry and computer text entry is that computers can do so much more to ensure fast, accurate, and comfortable experiences. Researchers have spent several decades exploiting computing to do exactly this. This research generally falls into three categories: techniques that leverage discrete input, like the pressing of a physical or virtual key (explicitly and unambiguously selecting characters, words, and phrases for entry); techniques that leverage continuous input, like gestures or speech (providing some ambiguous source of text and having an interface translate it into text); and statistical techniques that attempt to predict the text someone is typing to automate text entry (providing input that suggests the desired text and having the interface predict what input might be intended).

Discrete input

Discrete text input involves entering a single character or word at a time. We call these techniques discrete because of the lack of ambiguity in input: either a button is pressed and a character or word is generated, or it is not. The most common and familiar forms of discrete text entry are keyboards. Keyboards come in numerous shapes and sizes, both physical and virtual, and these properties shape the speed, accuracy, learnability, and comfort of each.

Keyboards can be as simple as one-dimensional layouts of characters, navigated with a left, right, and select key. These are common on small devices where physical space is scarce. Multiple versions of the iPod, for example, used one-dimensional text entry keyboards because of their one-dimensional click wheel.
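To make the cost of a one-dimensional layout concrete, here is a minimal sketch that counts key presses for a word. It assumes a hypothetical layout (the lowercase alphabet in a single row, with no wrap-around), a cursor that starts on the first letter, and a cursor that stays where it was after each selection. None of these details come from a specific device.

```python
import string

# Assumed layout: the 26 lowercase letters in one row (illustrative only).
LAYOUT = string.ascii_lowercase


def key_presses(word: str, layout: str = LAYOUT, start: int = 0) -> int:
    """Count left/right/select presses needed to enter `word`.

    Assumes the cursor starts at index `start`, does not wrap around,
    and remains on the last selected character.
    """
    cursor = start
    presses = 0
    for ch in word:
        target = layout.index(ch)
        # Move left or right to the target, then press select once.
        presses += abs(target - cursor) + 1
        cursor = target
    return presses


if __name__ == "__main__":
    for word in ["cab", "zoo"]:
        print(word, key_presses(word))
```

Under these assumptions, entering "cab" takes 8 presses for 3 characters, which illustrates why such layouts trade speed for physical space.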

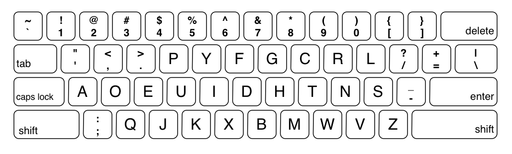

Two-dimensional keyboards like the familiar QWERTY layout are more common. And layout matters. The original QWERTY layout, for example, was designed to minimize mechanical failure, not to maximize speed or accuracy. The Dvorak layout was designed for speed, placing the most common letters in the home row and maximizing alternation between hands (Dvorak and Dealey 1936):

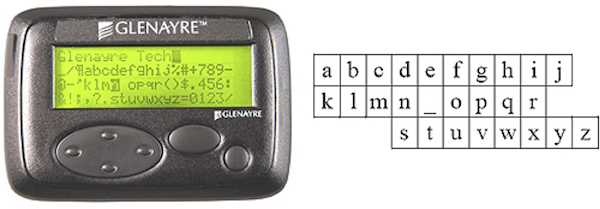

Not all two-dimensional keyboards have a 1-to-1 mapping from key to character. Some keyboards are virtual, with physical keys for moving an on-screen cursor:

Cell phones in the early 21st century used a multitap method, in which each key on the 12-key numeric keypad typically found on pre-smartphone cellphones mapped to three or four letters. To select the letter you wanted, you would press a key multiple times until the desired letter was displayed. A letter was entered when a different key was struck. If the next letter was on the same key as the previous letter, the user had to wait for a short timeout or hold down the key to commit the desired character. Some researchers sped up multitap techniques like this by using other sensors, such as tilt sensors, making it faster to indicate a character (Wigdor and Balakrishnan 2003).
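The multitap scheme described above can be sketched as a small decoder. This is an illustrative sketch, not any phone's actual firmware: it assumes the conventional letter groups on keys 2 through 9, uses a space in the input to stand in for the timeout between two letters on the same key, and cycles back to the first letter if a key is pressed more times than it has letters.

```python
from itertools import groupby

# Conventional letter groups on a 12-key phone keypad (keys 2-9).
MULTITAP_KEYS = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}


def decode_multitap(presses: str) -> str:
    """Decode a string of key presses into letters.

    A space models the timeout that commits a letter when the next
    letter lives on the same key (an assumption of this sketch).
    """
    letters = []
    for chunk in presses.split():
        # Each run of identical key presses selects one letter.
        for key, run in groupby(chunk):
            count = len(list(run))
            group = MULTITAP_KEYS[key]
            letters.append(group[(count - 1) % len(group)])
    return "".join(letters)


if __name__ == "__main__":
    print(decode_multitap("4433555 555666"))
```

Here `"4433555 555666"` decodes to `hello`: 13 key presses plus one timeout for five letters, which is the overhead that techniques like tilt sensing tried to reduce.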


Some keyboards are eyes-free. For example, courtroom stenographers, who transcribe human speech in shorthand, have long used chorded keyboards called stenotypes.

With 2-4 years of training, some stenographers can reach 225 words per minute. Researchers have adapted these techniques to other encodings, such as braille, to support non-visual text entry for people who are blind or low-vision (Azenkot et al. 2012).

On-screen virtual keyboards like those found in modern smartphones introduce some degree of ambiguity into the notion of a discrete set of keys, because touch input can be ambiguous. Some researchers have leveraged additional sensor data to disambiguate which key is being typed, such as which finger is typically used to type a key (Choi et al. 2015).


The primary benefit of the discrete input techniques above is that they can achieve relatively fast speeds and low error rates because input is reliable: when someone presses a key, they probably meant to. But this is not always true, especially for people with motor impairments that reduce stability of motion. Moreover, there are many people who cannot operate a keyboard comfortably or at all, many contexts in which there simply isn’t physical or virtual space for keys, and many people who do not want to learn an entirely new encoding for entering text.

Continuous input

Continuous input is an alternative to discrete input: the user provides a stream of data as input, which the computer then translates into characters or words. This helps avoid some of the limitations above, but often at the expense of speed or accuracy. For example, the Palm Pilot, popular in the late 1990s and seen in the video below, used a unistroke gesture alphabet for text entry. It did not require a physical keyboard, nor did it require space on screen for a virtual keyboard. Instead, users learned a set of gestures for typing letters, numbers, and punctuation.

As the video shows, this wasn’t particularly fast or error-free, but it was relatively learnable and kept the Palm Pilot small.

Researchers have envisioned other, improved unistroke alphabets. Most notably, the EdgeWrite system was designed to stabilize the motion of people with motor impairments by defining gestures that traced around the edges and diagonals of a square (Wobbrock et al. 2003).


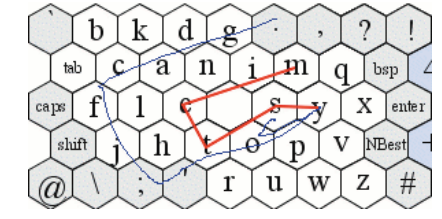

Whereas the unistroke techniques focus on entering one character at a time, others have explored strokes that compose entire words. Most notably, the SHARK technique allowed users to trace across multiple letters in a virtual keyboard layout, spelling entire words in one large stroke (Kristensson and Zhai 2004).
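One way to picture how a word-level stroke might be recognized is simple template matching: compare the traced path against the ideal path through each candidate word's key centers and pick the closest. The sketch below is a deliberately simplified illustration, not the SHARK2 algorithm itself. The key geometry (unit-width keys, each row shifted half a key), the tiny vocabulary, and all function names are assumptions made for this example.

```python
import math

# Assumed geometry: unit-width keys, each row shifted half a key right.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_CENTERS = {
    letter: (col + 0.5 * row, float(row))
    for row, letters in enumerate(ROWS)
    for col, letter in enumerate(letters)
}


def resample(points, n=32):
    """Return n points spaced evenly along the polyline `points`."""
    if len(points) == 1:
        return [points[0]] * n
    # Cumulative arc length at each vertex.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    if total == 0:
        return [points[0]] * n
    out, seg = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment containing the target distance.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def template(word, n=32):
    """Ideal stroke for a word: straight lines between its key centers."""
    return resample([KEY_CENTERS[ch] for ch in word], n)


def distance(path_a, path_b):
    """Mean point-to-point distance between two equal-length paths."""
    return sum(math.dist(p, q) for p, q in zip(path_a, path_b)) / len(path_a)


def recognize(stroke, vocabulary, n=32):
    """Return the vocabulary word whose template is closest to the stroke."""
    sampled = resample(stroke, n)
    return min(vocabulary, key=lambda w: distance(sampled, template(w, n)))


if __name__ == "__main__":
    # A slightly noisy trace passing near the keys t, h, e.
    stroke = [(4.1, 0.1), (5.4, 0.9), (2.2, -0.1)]
    print(recognize(stroke, ["the", "toe", "tie", "cat"]))
```

A practical recognizer would also need to handle words whose strokes look nearly identical, which is one reason such systems are usually combined with the word-frequency predictions discussed later in this chapter.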

Researchers have built upon this basic idea, allowing users to use two hands instead of one on tablets (Bi et al. 2012).


As interfaces move increasingly away from desktops, laptops, and even devices, researchers have investigated forms of text entry that involve no direct interaction with a device at all. This includes techniques for tracking the position and movement of fingers in space for text entry (Yi et al. 2015).

Other techniques leverage spatial memory of a keyboard layout for accurate text input on devices with no screens (Chen et al. 2014).


Handwriting and speech recognition have also long been goals in research and industry. While both continue to improve, and speech recognition in particular is becoming ubiquitous, both continue to be plagued by recognition errors. People are finding many settings in which these errors are tolerable (or even fun!), but neither has yet reached the accuracy needed to be a universal, preferred method for text entry.

Predictive input

The third major approach to text entry has been predictive input, in which a system simply guesses what a user wants to type based on some initial information. This technique has been used in both discrete and continuous input, and is relatively ubiquitous. For example, before smartphones and their virtual keyboards, most cellphones offered a scheme called T9, which would use a dictionary and word frequencies to predict the most likely word you were trying to type.
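The dictionary-and-frequency idea behind a T9-style scheme can be sketched in a few lines: each word maps to the sequence of keys that spell it, and words sharing a key sequence are ranked by how often they occur. This is a minimal illustration rather than the actual T9 implementation, and the word counts below are made-up numbers chosen only for the example.

```python
from collections import defaultdict

# Conventional letter groups on a 12-key phone keypad (keys 2-9).
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
KEY_OF = {letter: key for key, letters in KEYPAD.items() for letter in letters}


def to_keys(word: str) -> str:
    """Return the key sequence that spells `word`, one press per letter."""
    return "".join(KEY_OF[ch] for ch in word.lower())


def build_index(word_counts: dict) -> dict:
    """Map each key sequence to its words, most frequent first."""
    index = defaultdict(list)
    for word, count in word_counts.items():
        index[to_keys(word)].append((count, word))
    return {
        keys: [w for _, w in sorted(cands, key=lambda c: -c[0])]
        for keys, cands in index.items()
    }


def predict(index: dict, keys: str) -> list:
    """Return candidate words for a key sequence, best guess first."""
    return index.get(keys, [])


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    counts = {"good": 500, "home": 450, "gone": 300, "hood": 50, "hoof": 10}
    index = build_index(counts)
    print(predict(index, "4663"))
```

All five example words share the key sequence `4663`, so the sketch offers "good" first and the others as alternatives. The user presses one key per letter and only intervenes when the top guess is wrong.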

These techniques leverage Zipf’s law, an empirical observation that a word’s frequency in human language is roughly inversely proportional to its frequency rank, so the most frequent words are far more frequent than the rest. The most frequent word in English (“the”) accounts for about 7% of all words in a document, and the second most frequent word (“of”) accounts for about 3.5%. Most words rarely occur, forming a long tail of low frequencies.
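As a worked illustration of the figures above, the sketch below evaluates an idealized form of Zipf's law in which a word's share of text is the top word's share divided by its rank. The 7% anchor comes from the text; the exponent of 1 and the function names are simplifying assumptions, and real corpora only approximate this curve.

```python
def zipf_share(rank: int, top_share: float = 0.07, exponent: float = 1.0) -> float:
    """Idealized share of text for the word at a given frequency rank.

    Assumes share(rank) = top_share / rank**exponent, with the
    top-ranked word at 7% as stated in the text.
    """
    return top_share / rank ** exponent


def cumulative_share(top_n: int) -> float:
    """Combined share of the top_n most frequent words under this model."""
    return sum(zipf_share(rank) for rank in range(1, top_n + 1))


if __name__ == "__main__":
    for rank in (1, 2, 10, 100, 1000):
        print(f"rank {rank:>4}: {zipf_share(rank):.4%}")
    print(f"top 10 words together: {cumulative_share(10):.1%}")
```

Under this model the second-ranked word gets 3.5%, matching the figure for “of”, while a word at rank 1000 gets only 0.007%. That steep drop is what lets a predictor guess well by favoring a small set of frequent words.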

This law is valuable because it allows techniques like T9 to make predictions about word likelihood. Researchers have exploited it, for example, to increase the relevance of autocomplete predictions (MacKenzie et al. 2001).


The past, present, and future of text entry

Our brief tour through the history of text entry reveals a few important trends:

- There are many ways to enter text into computers and they all have speed-accuracy tradeoffs.

- The vast majority of techniques focus on speed and accuracy, and not on the other experiential factors in text entry, such as comfort or accessibility.

- There are many text entry methods that are inefficient and yet ubiquitous (e.g., QWERTY); adoption therefore isn’t purely a function of speed and accuracy, but of many other factors in society and history.

As the world continues to age, and computing moves into every context of our lives, text entry will have to adapt to these shifting contexts and abilities. For example, we will have to design new ways of efficiently entering text in augmented and virtual realities, which may require more sophisticated ways of correcting errors from speech recognition. Therefore, while text entry may seem like a well-explored area of user interfaces, every new interface we invent demands new forms of text input.

References

- Jessalyn Alvina, Joseph Malloch, Wendy E. Mackay (2016). Expressive Keyboards: Enriching Gesture-Typing on Mobile Devices. ACM Symposium on User Interface Software and Technology (UIST).

- Kenneth C. Arnold, Krzysztof Z. Gajos, Adam T. Kalai (2016). On Suggesting Phrases vs. Predicting Words for Mobile Text Composition. ACM Symposium on User Interface Software and Technology (UIST).

- Shiri Azenkot, Jacob O. Wobbrock, Sanjana Prasain, Richard E. Ladner (2012). Input finger detection for nonvisual touch screen text entry in Perkinput. Graphics Interface (GI).

- Shiri Azenkot, Cynthia L. Bennett, Richard E. Ladner (2013). DigiTaps: eyes-free number entry on touchscreens with minimal audio feedback. ACM Symposium on User Interface Software and Technology (UIST).

- Xiaojun Bi, Ciprian Chelba, Tom Ouyang, Kurt Partridge, Shumin Zhai (2012). Bimanual gesture keyboard. ACM Symposium on User Interface Software and Technology (UIST).

- Xiang 'Anthony' Chen, Tovi Grossman, George Fitzmaurice (2014). Swipeboard: a text entry technique for ultra-small interfaces that supports novice to expert transitions. ACM Symposium on User Interface Software and Technology (UIST).

- Daewoong Choi, Hyeonjoong Cho, Joono Cheong (2015). Improving Virtual Keyboards When All Finger Positions Are Known. ACM Symposium on User Interface Software and Technology (UIST).

- Christian Holz and Patrick Baudisch (2011). Understanding touch. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Per-Ola Kristensson and Shumin Zhai (2004). SHARK2: a large vocabulary shorthand writing system for pen-based computers. ACM Symposium on User Interface Software and Technology (UIST).

- Yi-Chi Liao, Yi-Ling Chen, Jo-Yu Lo, Rong-Hao Liang, Liwei Chan, Bing-Yu Chen (2016). EdgeVib: Effective alphanumeric character output using a wrist-worn tactile display. ACM Symposium on User Interface Software and Technology (UIST).

- I. Scott MacKenzie, Hedy Kober, Derek Smith, Terry Jones, Eugene Skepner (2001). LetterWise: prefix-based disambiguation for mobile text input. ACM Symposium on User Interface Software and Technology (UIST).

- I. Scott MacKenzie, R. William Soukoreff, Joanna Helga (2011). 1 thumb, 4 buttons, 20 words per minute: design and evaluation of H4-writer. ACM Symposium on User Interface Software and Technology (UIST).

- Jochen Rick (2010). Performance optimizations of virtual keyboards for stroke-based text entry on a touch-based tabletop. ACM Symposium on User Interface Software and Technology (UIST).

- Tomoki Shibata, Daniel Afergan, Danielle Kong, Beste F. Yuksel, I. Scott MacKenzie, Robert J.K. Jacob (2016). DriftBoard: A Panning-Based Text Entry Technique for Ultra-Small Touchscreens. ACM Symposium on User Interface Software and Technology (UIST).

- Daniel Wigdor and Ravin Balakrishnan (2003). TiltText: using tilt for text input to mobile phones. ACM Symposium on User Interface Software and Technology (UIST).

- Jacob O. Wobbrock, Brad A. Myers, John A. Kembel (2003). EdgeWrite: a stylus-based text entry method designed for high accuracy and stability of motion. ACM Symposium on User Interface Software and Technology (UIST).

- Xin Yi, Chun Yu, Mingrui Zhang, Sida Gao, Ke Sun, Yuanchun Shi (2015). ATK: Enabling ten-finger freehand typing in air based on 3d hand tracking data. ACM Symposium on User Interface Software and Technology (UIST).