Hand-Based Input

Thus far, we have discussed two forms of input to computers: pointing and text entry. Both are largely sufficient for operating most computers. But, as we discussed in our chapter on history, interfaces have always been about augmenting human ability and cognition, and so researchers have pushed far beyond pointing and text to explore many new forms of input. In this chapter, we focus on the use of hands to interact with computers, including touchscreens, pens, gestures, and hand tracking.

One of the central motivations for exploring hand-based input came from new visions of interactive computing. For instance, in 1991, Mark Weiser, who at the time was head of the very same Xerox PARC that led to the first GUI, wrote in Scientific American about a vision of ubiquitous computing (Weiser, 1991):

Hundreds of computers in a room could seem intimidating at first, just as hundreds of volts coursing through wires in the walls did at one time. But like the wires in the walls, these hundreds of computers will come to be invisible to common awareness. People will simply use them unconsciously to accomplish everyday tasks... There are no systems that do well with the diversity of inputs to be found in an embodied virtuality.

Mark Weiser (1991). The Computer for the 21st Century. Scientific American 265, 3 (September 1991), 94-104.

Within this vision, input must move beyond the screen, supporting a wide range of embodied forms of computing. We’ll begin by focusing on input techniques that rely on hands, just as pointing and text entry largely have: physically touching a surface, using a pen-shaped object to touch a surface, and moving the hand or wrist to convey a gesture. Throughout, we will discuss how each of these forms of interaction imposes unique gulfs of execution and evaluation.

Touch

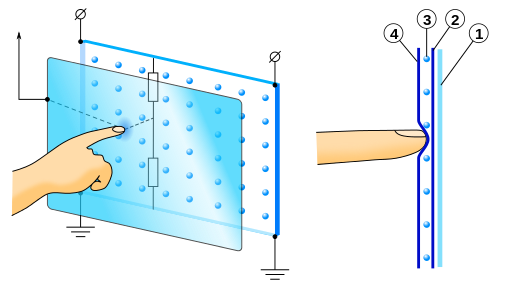

Perhaps the most ubiquitous and familiar form of hand-based input is using our fingers to operate touchscreens. The first touchscreens originated in the mid-1960s. They worked similarly to modern touchscreens, just with less fidelity. The earliest screens were capacitive, consisting of an insulator panel with a resistive coating. When a conductive surface such as a finger made contact, it closed a circuit, flipping a binary input from off to on. These screens didn’t read position, pressure, or other features of a touch, just that the surface was being touched. Resistive touchscreens came next; rather than using capacitance to close a circuit, they relied on pressure to measure voltage flow between X wires and Y wires, allowing a position to be read. In the 1980s, HCI researcher Bill Buxton invented the first multi-touch screen while at the University of Toronto, placing a camera behind a frosted glass panel and using machine vision to detect the dark spots created by finger occlusion. (Fun fact: Bill was my “academic grandfather”, meaning that he was my advisor’s advisor.) This led to several other advancements in sensing technologies that did not require a camera, and in the 1990s, touchscreens launched on consumer devices, including handheld devices like the Apple Newton and the Palm Pilot. The 2000s brought even more innovation in sensing technology, eventually making multi-touchscreens small enough to embed in the smartphones we use today. (See ArsTechnica’s feature on the history of multi-touch for more history.)
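To make the resistive idea concrete, here is a minimal sketch of how a position might be derived from the two voltage readings. It assumes a hypothetical controller that reports each axis as a 10-bit analog-to-digital reading proportional to where the layers touch; the names `ADC_MAX`, `PRESS_THRESHOLD`, and `adc_to_position` are illustrative and do not come from any particular device, and real controllers typically add calibration and filtering.

```python
from typing import Optional, Tuple

ADC_MAX = 1023        # assumption: 10-bit converter, readings in 0..1023
PRESS_THRESHOLD = 20  # assumption: readings this low mean the layers are not touching


def adc_to_position(adc_x: int, adc_y: int,
                    width_px: int, height_px: int) -> Optional[Tuple[int, int]]:
    """Map raw X and Y voltage readings to a pixel coordinate.

    Returns None when either reading is too low to indicate contact.
    """
    if adc_x < PRESS_THRESHOLD or adc_y < PRESS_THRESHOLD:
        return None
    # Each reading is treated as a fraction of the way along its axis.
    x = round(adc_x / ADC_MAX * (width_px - 1))
    y = round(adc_y / ADC_MAX * (height_px - 1))
    return (x, y)
```

Note that this sketch yields only one position at a time, which is consistent with why multi-touch required different sensing approaches.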

As you are probably already aware, touchscreens impose a wide range of gulfs of execution and evaluation on users. On first use, for example, it is difficult to know if a surface is touchable. One will often see children who are used to everything being a touchscreen attempt to touch non-touchscreens, confused that the screen isn’t providing any feedback. Then, of course, touchscreens often operate via complex multi-fingered gestures. These gestures have to be taught to users, and learned, before someone can successfully operate a touch interface. This learning requires careful feedback to address gulfs of evaluation, especially if a gesture isn’t accurately performed. Most operating systems rely on the fact that people will learn how to operate touchscreens from other people, such as through a tutorial at a store.

While touchscreens might seem ubiquitous and well understood, HCI research has been pushing their limits even further. Some of this work has invented new types of touch sensors. For example, researchers have worked on materials that allow touch surfaces to be cut into arbitrary shapes and sizes other than rectangles (Olberding et al., 2013). Related sensors include printed piezoelectric foil that senses pressure (Rendl et al., 2012), a thin stretchable interface that measures tangential force (Sugiura et al., 2012), multi-touch interaction on a spherical display (Benko et al., 2008), and wearable multitouch interaction (OmniTouch; Harrison et al., 2011).

Other researchers have explored ways of sensing more precisely how a touchscreen is touched. Some have added speakers to detect how something was grasped or touched (Ono et al., 2013). Related work includes TapSense, which enhances finger interaction on touch surfaces (Harrison et al., 2011); Scratch Input, which creates large, inexpensive, unpowered finger input surfaces (Harrison and Hudson, 2008); PocketTouch, which senses capacitive touch through fabric (Saponas et al., 2011); and a depth-camera approach that recovers touch posture and differentiates users (Murugappan et al., 2012).

Commercial touchscreens still focus on single-user interaction, only allowing one person at a time to touch a screen. Research, however, has explored many ways to differentiate between multiple people using a single touchscreen. One approach is to have users sit on a surface that determines their identity, differentiating touch input (Dietz and Leigh, 2001). Other approaches draw on wearables as context for bimanual touch (Webb et al., 2016), the electrical properties of the human body (Harrison et al., 2012), and fingerprints sensed by the touchscreen itself (Holz and Baudisch, 2013).

While these inventions have richly explored many possible new forms of interaction, there is so far very little appetite for touchscreen innovation in industry. Apple’s force-sensitive touchscreen interaction (called “3D Touch”) is one example of an innovation that made it to market, but there are some indicators that Apple will abandon it after just a few short years of users not being able to discover it (a classic gulf of execution).

Pens

In addition to fingers, many researchers have explored the unique benefits of pen-based interactions to support handwriting, sketching, diagramming, or other touch-based interactions. These leverage the skill of grasping a pen or pencil that many are familiar with from manual writing. Pens are similar to a mouse in that both involve pointing, but pens are critically different in that they involve direct physical contact with targets of interest. This directness requires different sensing technologies, provides more degrees of freedom for movement and input, and relies more fully on the hand’s complex musculature.

Some of these pen-based interactions are simply replacements for fingers. For example, the Palm Pilot, popular in the 1990s, required the use of a stylus for its resistive touchscreen, but the pens themselves were plastic. They merely served to prevent fatigue from applying pressure to the screen with a finger and to increase the precision of touch during handwriting or interface interactions.

However, pens impose their own unique gulfs of execution and evaluation. For example, many pens are not active until a device is set to a mode to receive pen input. The Apple Pencil, for example, only works in particular modes and interfaces, and so it is up to a person to experiment with an interface to discover whether it is pencil compatible. Pens themselves can also have buttons and switches that control modes in software, which require people to learn what the modes control and what effect they have on input and interaction. Pens also sometimes fail to play well with the need to enter text, as typing is faster than tapping one character at a time with a pen. One consequence of these gulfs of execution and efficiency issues is that pens are often used for specific applications such as drawing or sketching, where someone can focus on learning the pen’s capabilities and is unlikely to be entering much text.

Researchers have explored new types of pen interactions that attempt to break beyond these niche applications. For example, some techniques have a user provide touch input with their non-dominant hand and pen input with their dominant hand (Hamilton et al., 2012; Hinckley et al., 2010). Other work has explored rolling the pen as a form of input (Bi et al., 2008) and virtualizing the pen head to free digital drawing from pen occlusion and visual parallax (Lee et al., 2012).

Other pen-based innovations are purely software based. For example, some interactions improve handwriting recognition by allowing users to correct recognition errors while writing (Shilman et al., 2006). Related pen research includes fluid interaction with high-resolution wall-size displays (Guimbretière et al., 2001), edge-respecting brushes (Olsen and Harris, 2008), creating diagrams by drawing (Zeleznik et al., 2008), and accurate 6DoF tracking of a passive stylus (Wu et al., 2017).

Gestures

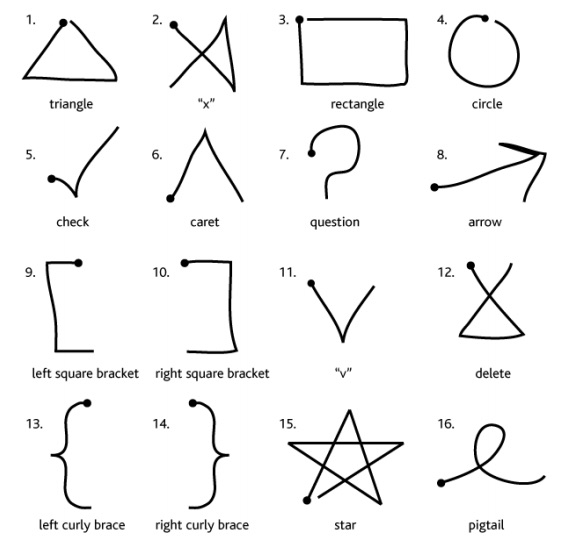

Whereas touch and pens involve traditional pointing, gesture-based interactions involve recognizing patterns in hand movement. Some recognizers still classify a gesture from a time series of points in a 2-dimensional plane, as with multi-touch gestures like pinching and dragging on a touchscreen, or symbol recognition in handwriting or text entry. This type of gesture recognition can be done with a relatively simple recognition algorithm (Wobbrock et al., 2007).
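As a rough illustration of how simple such a recognizer can be, the sketch below matches a stroke against stored templates in the spirit of the $1 recognizer. It is a simplified variant rather than the published algorithm: it resamples each stroke to a fixed number of points, centers and uniformly scales it, and picks the template with the smallest average point-to-point distance, but it omits the rotation-invariance step. The function names and the two example templates are illustrative assumptions.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def resample(points: List[Point], n: int = 32) -> List[Point]:
    """Return n points spaced evenly along the stroke's path."""
    total = sum(math.dist(points[i - 1], points[i]) for i in range(1, len(points)))
    interval = total / (n - 1)
    pts = list(points)
    out = [pts[0]]
    accumulated = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and accumulated + d >= interval:
            t = (interval - accumulated) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            accumulated = 0.0
        else:
            accumulated += d
        i += 1
    while len(out) < n:  # rounding can leave the final point out
        out.append(pts[-1])
    return out[:n]


def normalize(points: List[Point]) -> List[Point]:
    """Move the centroid to the origin and scale the larger side to 1."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    size = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return [((x - cx) / size, (y - cy) / size) for x, y in points]


def recognize(stroke: List[Point],
              templates: Dict[str, List[Point]]) -> Tuple[str, float]:
    """Return the name of the closest template and its average distance."""
    candidate = normalize(resample(stroke))
    best_name, best_dist = "", math.inf
    for name, template in templates.items():
        reference = normalize(resample(template))
        dist = sum(math.dist(a, b) for a, b in zip(candidate, reference)) / len(candidate)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name, best_dist


# Hypothetical templates: a horizontal line and an upward caret.
TEMPLATES = {"line": [(0, 0), (10, 0)], "caret": [(0, 0), (5, 10), (10, 0)]}
```

Calling `recognize(stroke, TEMPLATES)` returns the best-matching name together with its distance, which an interface could compare against a threshold to reject strokes that match nothing well.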

Other gestures rely on 3-dimensional input about the position of fingers and hands in space. Some recognition algorithms seek to recognize discrete hand positions, such as when the user brings their thumb and forefinger together (a pinch gesture) (Wilson, 2006). Other work has explored gesture interfaces that are simple for developers to build (Krupka et al., 2017) and a range of sensing approaches: electrical impedance tomography (Zhang and Harrison, 2015; Zhang et al., 2016), radio-frequency sensing (Wang et al., 2016), GSM signals around mobile devices (Zhao et al., 2014), compact low-power 3D sensing for head-mounted displays (Colaço et al., 2013), in-air gestures around unmodified mobile devices (Song et al., 2014), wrist gestures for smartwatch input (Gong et al., 2016), and bio-acoustic sensing with smartwatch accelerometers (Laput et al., 2016). Researchers have also studied haptic learning of semaphoric finger gestures (Gupta et al., 2016).
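To illustrate what recognizing a discrete hand position can involve, here is a minimal sketch of pinch detection. It assumes some tracker already supplies 3D thumb and index fingertip positions in millimeters, which is not how the vision-based work cited above detects pinches; the class name `PinchDetector` and both thresholds are hypothetical. Using two thresholds (hysteresis) keeps a noisy distance signal from rapidly toggling the gesture on and off.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class PinchDetector:
    """Turns a stream of fingertip positions into pinch start/end events."""

    def __init__(self, close_mm: float = 15.0, open_mm: float = 30.0) -> None:
        # Assumed thresholds: a pinch starts below close_mm and only
        # ends once the fingertips separate beyond open_mm.
        self.close_mm = close_mm
        self.open_mm = open_mm
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> Optional[str]:
        """Process one frame; return an event name or None if nothing changed."""
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < self.close_mm:
            self.pinching = True
            return "pinch_start"
        if self.pinching and gap > self.open_mm:
            self.pinching = False
            return "pinch_end"
        return None
```

A gap that hovers between the two thresholds produces no events, so the interface sees one discrete pinch rather than a flurry of them.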

While all of these inventions are exciting in their potential, gestures have significant gulfs of execution and evaluation. How does someone learn the gestures? How do we create tutorials that give feedback on correct gesture “posture”? When someone performs a gesture incorrectly, how can someone undo it if it had an unintended effect? What if the undo gesture is performed incorrectly? These questions ultimately arise from the unreliability of gesture classification.

Hand Tracking

Gesture-based systems look at patterns in hand motion to recognize a set of discrete poses or gestures. This is often appropriate when the user wants to trigger some action, but it does not offer the fidelity to support continuous hand-based actions, such as physical manipulation tasks in 3D space that require continuous tracking of hand and finger positions over time. Hand tracking systems are better suited for these tasks because they treat the hand as a continuous input device, rather than a gesture as a discrete event, estimating in real-time the hand’s position and orientation.

Most hand tracking systems use cameras and computer vision techniques to track the hand in space. These systems often rely on an approximate model of the hand skeleton, including bones and joints, and solve for the joint angles and hand pose that best fit the observed data. Researchers have used gloves with unique color patterns, shown above, to make the hand easier to identify and to simplify the process of pose estimation (Wang and Popović, 2009).
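The idea of solving for the joint angles that best fit observed data can be sketched with a toy model. The example below assumes a single finger reduced to two bones moving in a plane, with made-up bone lengths and a 0 to 90 degree range for each joint, and it fits the pose by brute-force search over whole degrees. Real trackers fit many more joints against image or depth data with far more efficient optimizers, so this is only an illustration of the model-fitting principle.

```python
import math
from typing import Tuple

# Assumed bone lengths in millimeters for a two-bone planar finger.
BONE_1_MM = 40.0
BONE_2_MM = 30.0


def fingertip(angle_1: float, angle_2: float) -> Tuple[float, float]:
    """Forward model: fingertip position for the given joint angles (radians)."""
    x = BONE_1_MM * math.cos(angle_1) + BONE_2_MM * math.cos(angle_1 + angle_2)
    y = BONE_1_MM * math.sin(angle_1) + BONE_2_MM * math.sin(angle_1 + angle_2)
    return (x, y)


def fit_pose(observed: Tuple[float, float]) -> Tuple[int, int, float]:
    """Search joint angles (whole degrees, 0..90) for the pose whose
    predicted fingertip is closest to the observed fingertip.

    Returns (joint 1 degrees, joint 2 degrees, squared error).
    """
    best = (0, 0, math.inf)
    for deg_1 in range(0, 91):
        for deg_2 in range(0, 91):
            tip = fingertip(math.radians(deg_1), math.radians(deg_2))
            error = (tip[0] - observed[0]) ** 2 + (tip[1] - observed[1]) ** 2
            if error < best[2]:
                best = (deg_1, deg_2, error)
    return best
```

The remaining squared error gives a rough sense of how well the model explains the observation, which is one way a system might notice that tracking is failing.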

Since then, researchers have developed and refined techniques using depth cameras like the Kinect for tracking the hand without the use of markers or gloves (Mueller et al., 2017; Oberweger et al., 2015; Taylor et al., 2016; Wang et al., 2011). Related work has explored subtle arms-down mid-air interaction (Liu et al., 2015) and rendering volumetric haptic shapes in mid-air using ultrasound (Long et al., 2014).

For head-mounted virtual and augmented reality systems, a common way to track the hands is through the use of positionally tracked controllers. Systems such as the Oculus Rift or HTC Vive use cameras and infrared LEDs to track both the position and orientation of the controllers.

Like gesture interactions, the potential for classification error in hand tracking interactions can impose significant gulfs of execution and evaluation. However, because the applications of hand tracking often involve manipulation of 3D objects rather than invoking commands, the severity of these gulfs may be lower in practice. This is because object manipulation is essentially the same as direct manipulation: it’s easy to see what effect the hand tracking is having and correct it if the tracking is failing.

While there has been incredible innovation in hand-based input, there are still many open challenges. These techniques can be hard for new users to learn, requiring careful attention to tutorials and training. And, because of the potential for recognition error, interfaces need some way of helping people correct errors, undo commands, and try again. Moreover, because all of these input techniques use hands, few are accessible to people with severe motor impairments in their hands, people lacking hands altogether, or, if the interfaces use visual feedback to bridge gulfs of evaluation, people lacking sight. In the next chapter, we will discuss techniques that rely on other parts of a human body for input, and therefore can be more accessible to people with motor impairments.

References

- Hrvoje Benko, Andrew D. Wilson, Ravin Balakrishnan (2008). Sphere: multi-touch interactions on a spherical display. ACM Symposium on User Interface Software and Technology (UIST).
- Xiaojun Bi, Tomer Moscovich, Gonzalo Ramos, Ravin Balakrishnan, Ken Hinckley (2008). An exploration of pen rolling for pen-based interaction. ACM Symposium on User Interface Software and Technology (UIST).
- Andrea Colaço, Ahmed Kirmani, Hye Soo Yang, Nan-Wei Gong, Chris Schmandt, Vivek K. Goyal (2013). Mime: compact, low power 3D gesture sensing for interaction with head mounted displays. ACM Symposium on User Interface Software and Technology (UIST).
- Paul Dietz and Darren Leigh (2001). DiamondTouch: a multi-user touch technology. ACM Symposium on User Interface Software and Technology (UIST).
- Jun Gong, Xing-Dong Yang, Pourang Irani (2016). WristWhirl: One-handed Continuous Smartwatch Input using Wrist Gestures. ACM Symposium on User Interface Software and Technology (UIST).
- François Guimbretière, Maureen Stone, Terry Winograd (2001). Fluid interaction with high-resolution wall-size displays. ACM Symposium on User Interface Software and Technology (UIST).
- Aakar Gupta, Antony Irudayaraj, Vimal Chandran, Goutham Palaniappan, Khai N. Truong, Ravin Balakrishnan (2016). Haptic learning of semaphoric finger gestures. ACM Symposium on User Interface Software and Technology (UIST).
- William Hamilton, Andruid Kerne, Tom Robbins (2012). High-performance pen + touch modality interactions: a real-time strategy game eSports context. ACM Symposium on User Interface Software and Technology (UIST).
- Chris Harrison and Scott E. Hudson (2008). Scratch input: creating large, inexpensive, unpowered and mobile finger input surfaces. ACM Symposium on User Interface Software and Technology (UIST).
- Chris Harrison, Hrvoje Benko, Andrew D. Wilson (2011). OmniTouch: wearable multitouch interaction everywhere. ACM Symposium on User Interface Software and Technology (UIST).
- Chris Harrison, Julia Schwarz, Scott E. Hudson (2011). TapSense: enhancing finger interaction on touch surfaces. ACM Symposium on User Interface Software and Technology (UIST).
- Chris Harrison, Munehiko Sato, Ivan Poupyrev (2012). Capacitive fingerprinting: exploring user differentiation by sensing electrical properties of the human body. ACM Symposium on User Interface Software and Technology (UIST).
- Ken Hinckley, Koji Yatani, Michel Pahud, Nicole Coddington, Jenny Rodenhouse, Andy Wilson, Hrvoje Benko, Bill Buxton (2010). Pen + touch = new tools. ACM Symposium on User Interface Software and Technology (UIST).
- Christian Holz and Patrick Baudisch (2013). Fiberio: a touchscreen that senses fingerprints. ACM Symposium on User Interface Software and Technology (UIST).
- Eyal Krupka, Kfir Karmon, Noam Bloom, Daniel Freedman, Ilya Gurvich, Aviv Hurvitz, Ido Leichter, Yoni Smolin, Yuval Tzairi, Alon Vinnikov, Aharon Bar-Hillel (2017). Toward Realistic Hands Gesture Interface: Keeping it Simple for Developers and Machines. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).
- Gierad Laput, Robert Xiao, Chris Harrison (2016). ViBand: High-Fidelity Bio-Acoustic Sensing Using Commodity Smartwatch Accelerometers. ACM Symposium on User Interface Software and Technology (UIST).
- David Lee, KyoungHee Son, Joon Hyub Lee, Seok-Hyung Bae (2012). PhantomPen: virtualization of pen head for digital drawing free from pen occlusion & visual parallax. ACM Symposium on User Interface Software and Technology (UIST).
- Mingyu Liu, Mathieu Nancel, Daniel Vogel (2015). Gunslinger: Subtle arms-down mid-air interaction. ACM Symposium on User Interface Software and Technology (UIST).
- Benjamin Long, Sue Ann Seah, Tom Carter, Sriram Subramanian (2014). Rendering volumetric haptic shapes in mid-air using ultrasound. ACM Transactions on Graphics.
- Mueller, F., Mehta, D., Sotnychenko, O., Sridhar, S., Casas, D., & Theobalt, C. (2017). Real-time hand tracking under occlusion from an egocentric rgb-d sensor. International Conference on Computer Vision.
- Sundar Murugappan, Vinayak, Niklas Elmqvist, Karthik Ramani (2012). Extended multitouch: recovering touch posture and differentiating users using a depth camera. ACM Symposium on User Interface Software and Technology (UIST).
- Oberweger, M., Wohlhart, P., & Lepetit, V. (2015). Hands deep in deep learning for hand pose estimation. arXiv:1502.06807.
- Simon Olberding, Nan-Wei Gong, John Tiab, Joseph A. Paradiso, Jürgen Steimle (2013). A cuttable multi-touch sensor. ACM Symposium on User Interface Software and Technology (UIST).
- Dan R. Olsen, Jr. and Mitchell K. Harris (2008). Edge-respecting brushes. ACM Symposium on User Interface Software and Technology (UIST).
- Makoto Ono, Buntarou Shizuki, Jiro Tanaka (2013). Touch & activate: adding interactivity to existing objects using active acoustic sensing. ACM Symposium on User Interface Software and Technology (UIST).
- Christian Rendl, Patrick Greindl, Michael Haller, Martin Zirkl, Barbara Stadlober, Paul Hartmann (2012). PyzoFlex: printed piezoelectric pressure sensing foil. ACM Symposium on User Interface Software and Technology (UIST).
- T. Scott Saponas, Chris Harrison, Hrvoje Benko (2011). PocketTouch: through-fabric capacitive touch input. ACM Symposium on User Interface Software and Technology (UIST).
- Michael Shilman, Desney S. Tan, Patrice Simard (2006). CueTIP: a mixed-initiative interface for correcting handwriting errors. ACM Symposium on User Interface Software and Technology (UIST).
- Jie Song, Gábor Sörös, Fabrizio Pece, Sean Ryan Fanello, Shahram Izadi, Cem Keskin, Otmar Hilliges (2014). In-air gestures around unmodified mobile devices. ACM Symposium on User Interface Software and Technology (UIST).
- Yuta Sugiura, Masahiko Inami, Takeo Igarashi (2012). A thin stretchable interface for tangential force measurement. ACM Symposium on User Interface Software and Technology (UIST).
- Jonathan Taylor, Lucas Bordeaux, Thomas Cashman, Bob Corish, Cem Keskin, Toby Sharp, Eduardo Soto, David Sweeney, Julien Valentin, Benjamin Luff, Arran Topalian, Erroll Wood, Sameh Khamis, Pushmeet Kohli, Shahram Izadi, Richard Banks, Andrew Fitzgibbon, Jamie Shotton (2016). Efficient and precise interactive hand tracking through joint, continuous optimization of pose and correspondences. ACM Transactions on Graphics.
- Robert Y. Wang and Jovan Popović (2009). Real-time hand-tracking with a color glove. ACM Transactions on Graphics.
- Robert Wang, Sylvain Paris, Jovan Popović (2011). 6D hands: markerless hand-tracking for computer aided design. ACM Symposium on User Interface Software and Technology (UIST).
- Saiwen Wang, Jie Song, Jaime Lien, Ivan Poupyrev, Otmar Hilliges (2016). Interacting with Soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. ACM Symposium on User Interface Software and Technology (UIST).
- Andrew M. Webb, Michel Pahud, Ken Hinckley, Bill Buxton (2016). Wearables as Context for Guiard-abiding Bimanual Touch. ACM Symposium on User Interface Software and Technology (UIST).
- Mark Weiser (1991). The Computer for the 21st Century. Scientific American 265, 3 (September 1991), 94-104.
- Andrew D. Wilson (2006). Robust computer vision-based detection of pinching for one and two-handed gesture input. ACM Symposium on User Interface Software and Technology (UIST).
- Jacob O. Wobbrock, Andrew D. Wilson, Yang Li (2007). Gestures without libraries, toolkits or training: a $1 recognizer for user interface prototypes. ACM Symposium on User Interface Software and Technology (UIST).
- Wu, P. C., Wang, R., Kin, K., Twigg, C., Han, S., Yang, M. H., & Chien, S. Y. (2017). DodecaPen: Accurate 6DoF tracking of a passive stylus. ACM Symposium on User Interface Software and Technology (UIST).
- Robert C. Zeleznik, Andrew Bragdon, Chu-Chi Liu, Andrew Forsberg (2008). Lineogrammer: creating diagrams by drawing. ACM Symposium on User Interface Software and Technology (UIST).
- Yang Zhang and Chris Harrison (2015). Tomo: Wearable, low-cost electrical impedance tomography for hand gesture recognition. ACM Symposium on User Interface Software and Technology (UIST).
- Yang Zhang, Robert Xiao, Chris Harrison (2016). Advancing hand gesture recognition with high resolution electrical impedance tomography. ACM Symposium on User Interface Software and Technology (UIST).
- Chen Zhao, Ke-Yu Chen, Md Tanvir Islam Aumi, Shwetak Patel, Matthew S. Reynolds (2014). SideSwipe: detecting in-air gestures around mobile devices using actual GSM signal. ACM Symposium on User Interface Software and Technology (UIST).