Genetic

algorithms (GAs) are procedures or methods for solving a wide class of

problems using many of the principles of Darwinian evolution.

Programs that use genetic algorithms usually start off with a

relatively random groups of solvers.

After the solvers run, the program evaluates the quality of the

solution. The solvers that yield the poorest results "die" (i.e.,

are removed from the solver pool by the program), and the remaining

ones get to reproduce. Typically the program changes a feature of

the surviving solvers during the reproduction, then adds the

"offspring" to the pool to compete against the previous generation's

survivors..

GAs are capable of finding solutions to many problems which can't be

solved by more traditional methods. Among these problems is the

Traveling Salesman problem. Given a salesman that has to travel

to a number of cities with known coordinates, what is the shortest path

or time possible to visit all cities?

Essentially, Genetic Algorithms (GA) are a method of "breeding"

computer programs and solutions to optimization or search problems by

means of simulated evolution. Processes loosely based on natural

selection, crossover, and mutation are repeatedly applied to a

population of binary strings which represent potential solutions. Over

time, the number of above-average individuals increases, and better fit

individuals are created, until a good solution to the problem at

hand is found.

Genetic algorithms are not too hard to program or understand, since

they are biological based. Thinking in terms of real-life evolution may

help you understand. Here is the general algorithm for a GA:

- Create

a Random Initial State: An initial population is created from a

random selection of solutions (which are analagous to chromosomes).

This is unlike the situation for Symbolic AI systems, where the initial

state in a problem is already given instead.

- Evaluate

Fitness: A value for fitness is assigned to each solution

(chromosome) depending on how close it actually is to solving the

problem (thus arriving to theanswer of the desired problem).

(These "solutions" are not to be confused with "answers" to

the problem, think of them as possible characteristics that the

system would employ in order to reach the answer.)

- Reproduce

(and Children Mutate): Those chromosomes with a higher fitness

value are more likely to reproduce offspring (which can mutate

after reproduction). The offspring is a product of the father and

mother, whose composition consists of a combination of genes from

them (this process is known as "crossing over".

- Next

Generation: If the new generation contains a solution that produces an

output that isclose enough or equal to the desired answer then

the problem has been solved. If this is not the case, then the

new generation will go through the same process as their parents did.

This will continue until a solution is reached.

Here is an introduction by the inventor of genetic algorithms, John Holland. Look at this after reading the previous intro.

Here is an introduction by the inventor of genetic algorithms, John Holland. Look at this after reading the previous intro.

Here is an introduction by the inventor of genetic algorithms, John Holland.

Genetic

algorithms are a class of search techniques inspired from the

biological process of evolution by means of natural selection. They can

be used to construct numerical optimization techniques that perform

robustly on problem characterized by ill-behaved search spaces.

Superficially, GAs may look like some peculiar variation on the Monte

Carlo method. There are two crucial differences: First, the probability

of a given solution being selected to participate in a breeding

event is made proportional to that solution's fitness (step 2);

better trial solutions breed more often, the computational equivalent

of natural selection. Second, the production of new trial solutions

from existing ones occurs through breeding. This involves encoding the

parameters defining each solution as a string-like structure

("chromosome"), and performing genetically inspired operations of

crossover and mutation to the pair of chromosomes encoding the two

parents, the end result of these operations being two new

chromosomes defining the two offspring. Applying the reverse

process of decoding those strings into solution parameters

completes the breeding process and yields two new offspring

solutions that incorporate information from both parents.

|

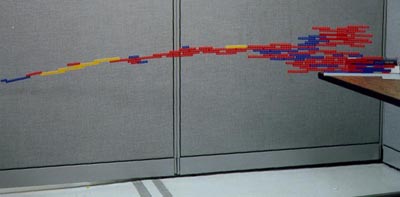

Cross a genetic algorithm with your favorite toy from childhood (Lego!)

and you get intelligent, biologically reminiscent structures. Dr. Pablo

Funes and his team at the Dynamical & Evolutionary Machine

Organization devised a very cool simulator that can be told to create

Lego structures of various kinds, such as bridges, using evolutionary

algorithms. The creative aspect provides interesting food for thought:

the system is given a goal and the solution design is entirely

dependant on the machine.

Cross a genetic algorithm with your favorite toy from childhood (Lego!)

and you get intelligent, biologically reminiscent structures. Dr. Pablo

Funes and his team at the Dynamical & Evolutionary Machine

Organization devised a very cool simulator that can be told to create

Lego structures of various kinds, such as bridges, using evolutionary

algorithms. The creative aspect provides interesting food for thought:

the system is given a goal and the solution design is entirely

dependant on the machine.