Accessibility

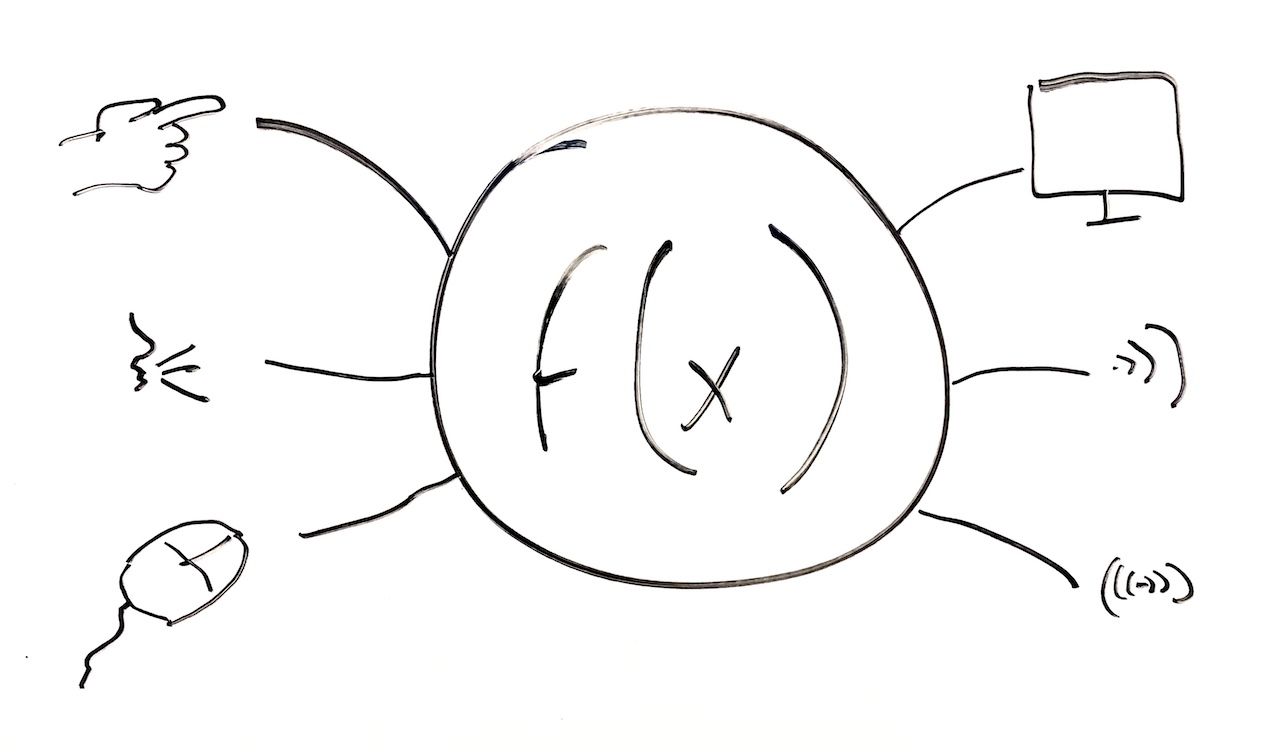

Thus far, most of our discussion has focused on what user interfaces are. I described them theoretically as a mapping from the sensory, cognitive, and social human world to the collections of functions exposed by a computer program. While that’s true, most of the mappings we’ve discussed have been input via our fingers and output to our visual perceptual systems. Most user interfaces have largely ignored other sources of human action and perception. We can speak. We can control over 600 different muscles. We can convey hundreds of types of non-verbal information through our gaze, posture, and orientation. We can see, hear, taste, smell, sense pressure, sense temperature, sense balance, sense our position in space, and feel pain, among dozens of other senses. This vast range of human abilities is largely unused in interface design.

This bias is understandable. Our fingers are incredibly agile, precise, and high bandwidth sources of action. Our visual perception is similarly rich, and one of the dominant ways that we engage with the physical world. Optimizing interfaces for these modalities is smart because it optimizes our ability to use interfaces.

However, this bias is also unreasonable because not everyone can see, use their fingers precisely, or read text. Designing interfaces that can only be used if one has these abilities means that vast numbers of people simply can’t use interfaces (Ladner 2012).

This might describe you or people you know. And in all likelihood, you will be disabled in one or more of these ways someday, whether as you age or temporarily, due to injuries, surgeries, or other situational impairments. And that means you’ll struggle or be unable to use the graphical user interfaces you’ve worked so hard to learn. And if you know no one who struggles with interfaces, it may be because they are stigmatized by their difficulties, not sharing their struggles and avoiding access technologies because they signal disability (Shinohara and Wobbrock 2011).

Of course, abilities vary, and this variation has different impacts on people’s ability to use interfaces. One of the most common forms of disability is blindness and low vision. But even within these categories, there is diversity. Some people are completely blind; some have some sight but need magnification. Some people have color blindness, which can be minimally impactful, unless an interface relies heavily on colors that a person cannot distinguish. I am near-sighted, but still need glasses to interact with user interfaces close to my face. When I do not have my glasses, I have to rely on magnification to see the visual aspects of a user interface. And while the largest group of people with disabilities are those with vision issues, the long tail of other disabilities around speech, hearing, and motor ability, when combined, is just as large.

Of course, most interfaces assume that none of this variation exists. Ironically, this is partly because the user interface toolkits we described in the architecture chapter embed this assumption deep in their architecture. Toolkits make it so easy to design graphical user interfaces that these are the only kind of interfaces designers make. This results in most interfaces being difficult or sometimes impossible for vast populations to use, which really makes no business sense (Horton and Sloan 2015).

Whereas universal interfaces (Story 1998) work for everyone as is, access technologies are alternative user interfaces that attempt to make an existing user interface more universal: they are used in tandem with other interfaces to improve their poor accessibility. Access technologies include things like:

- Screen readers convert text on a graphical user interface to synthesized speech so that people who are blind or unable to read can interact with the interface.

- Captions annotate the speech and action in video as text, allowing people who are deaf or hard of hearing to consume the audio content of video.

- Braille, as shown in the image at the top of this chapter, is a tactile encoding of words for people who are visually impaired.

Consider, for example, this demonstration of screen readers and a braille display:

Fundamentally, universal user interface designs are ones that can be operated via any input and output modality. If user interfaces are really just ways of accessing functions defined in a computer program, there’s really nothing about a user interface that requires it to be visual or operated with fingers. Take, for example, an ATM. Why is it structured as a large screen with several buttons? A speech interface could expose identical banking functionality through speech and hearing. Or, imagine an interface in which a camera just looks at someone’s wallet and their face and figures out what they need: more cash, to deposit a check, to check their balance. The input and output modalities an interface uses to expose functionality are really arbitrary: using fingers and eyes is just easier for most people in most situations.
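To make the ATM example concrete, here is a minimal sketch of one set of banking functions exposed through two different modalities. Everything in it is hypothetical: the `Account` class, the button names, and the toy keyword matching that stands in for real speech recognition are assumptions for illustration, not a description of how any real ATM works.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical banking functionality, independent of any modality."""
    balance: int = 0

    def deposit(self, amount: int) -> int:
        self.balance += amount
        return self.balance

    def withdraw(self, amount: int) -> int:
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount
        return self.balance


def handle_button(account: Account, button: str, amount: int = 0) -> str:
    """Front end 1: button presses in, text for a screen out."""
    if button == "BALANCE":
        return f"Balance: ${account.balance}"
    if button == "WITHDRAW":
        return f"Balance: ${account.withdraw(amount)}"
    if button == "DEPOSIT":
        return f"Balance: ${account.deposit(amount)}"
    raise ValueError(f"unknown button: {button}")


def handle_utterance(account: Account, utterance: str) -> str:
    """Front end 2: recognized speech (as text) in, a sentence to synthesize out.

    The keyword matching below is a stand-in for a real speech interface.
    """
    words = utterance.lower().split()
    amounts = [int(word) for word in words if word.isdigit()]
    if "balance" in words:
        return f"Your balance is {account.balance} dollars."
    if "withdraw" in words and amounts:
        account.withdraw(amounts[0])
        return f"Here are {amounts[0]} dollars."
    if "deposit" in words and amounts:
        account.deposit(amounts[0])
        return f"Deposited {amounts[0]} dollars."
    return "Sorry, I did not understand."
```

Both front ends call the same `Account` methods; only the mapping between human action and those functions differs, which is the sense in which the choice of modality is arbitrary.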

Advances in Accessibility

The challenge for user interface designers then is to not only design the functionality a user interface exposes, but also design a myriad of ways of accessing that functionality through any modality. Unfortunately, conceiving of ways to use all of our senses and abilities is not easy. It took us more than 20 years to invent graphical user interfaces optimized for sight and hands. It’s taken another 20 years to optimize touch-based interactions. It’s not surprising that it’s taking us just as long or longer to invent seamless interfaces for speech, gesture, and other uses of our muscles, and efficient ways of perceiving user interface output through hearing, feeling, and other senses.

These inventions, however, are numerous and span the full spectrum of modalities. For instance, access technologies like screen readers have been around since shortly after Section 508 of the Rehabilitation Act of 1973, and have converted digital text into synthesized speech. This has made it possible for people who are blind or have low vision to interact with graphical user interfaces. But now, interfaces go well beyond desktop GUIs. For example, just before the ubiquity of touch screens, the SlideRule system showed how to make touch screens accessible to blind users by reading UI labels through multi-touch interaction (Kane et al. 2008).

For decades, screen readers have only worked on computers, but recent innovations like VizLens (above) have combined machine vision and crowdsourcing to support arbitrary interfaces in the world, such as microwaves, refrigerators, ATMs, and other appliances (Guo et al. 2016).

With the rapid rise in popularity of the web, web accessibility has also been a popular topic of research. Problems abound, but one of the most notable is the inaccessibility of images. Images on the web often come without alt tags that describe the image for people unable to see. User interface controls often lack labels for screen readers to read. Some work shows that some of the information needs in these descriptions are universal, such as descriptions of the people and objects in an image, but other needs are highly specific to a context, such as subjective descriptions of people on dating websites (Stangl et al. 2020). Related work addresses emoji accessibility for visually impaired people (Tigwell et al. 2020), label association techniques for web accessibility (Islam et al. 2010), supporting blind people in recognizing personal objects (Ahmetovic et al. 2020), and opportunistic accessibility improvements that make the web easier to see (Bigham 2014).
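The missing-description problem is easy to detect mechanically, even though writing a good description is not. The following is a minimal sketch, using only Python's standard `html.parser` module, of a checker that lists images with no `alt` attribute. The function name `images_missing_alt` and the decision to treat an empty `alt=""` as an intentional marker for decorative images are assumptions of this sketch.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # An empty alt="" is kept: authors use it to mark decorative images
        # that a screen reader should skip. Only a missing attribute is flagged.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list:
    """Return the sources of images a screen reader could not describe."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

A check like this only finds images with no description at all; it says nothing about whether an existing description meets the context-specific needs described above.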

For people who are deaf or hard of hearing, videos, dialog, or other audio output interfaces are a major accessibility barrier to using computers or engaging in computer-mediated communication. Researchers have invented systems like Legion, which harness crowd workers to provide real-time captioning of arbitrary audio streams with only a few seconds of latency (Lasecki et al. 2012). Related work has explored real-time video communication on mobile phones for deaf users (Cherniavsky et al. 2009).

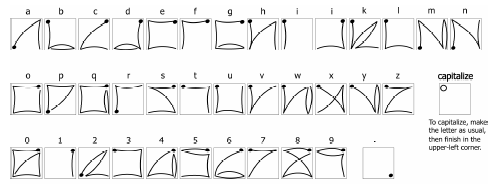

For people who have motor impairments, such as motor tremors, fine control over mice, keyboards, or multi-touch interfaces can be quite challenging, especially for tasks like text entry, which require very precise movements. Researchers have explored several ways to make interfaces more accessible for people without fine motor control. EdgeWrite, for example, is a gesture set (shown above) that only requires tracing the edges and diagonals of a square (Wobbrock et al. 2003). Related work includes automatically generating user interfaces adapted to users' motor and vision capabilities (Gajos et al. 2007), improving touch accuracy for people with motor impairments with template matching (Mott et al. 2016), ability-based design (Wobbrock et al. 2011), and pixel-based reverse engineering of interface structure (Dixon and Fogarty 2010).
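One plausible way to recognize gestures traced along the edges and diagonals of a square is to record only the order in which the corners are visited, so that wobble between corners does not matter. The sketch below takes that approach. It is an illustration under stated assumptions, not the published EdgeWrite recognizer: the `GESTURES` table is a made-up alphabet, and the corner radius of 0.3 is an arbitrary choice.

```python
import math

# Corners of a unit square, with the origin at the top left.
CORNERS = {"TL": (0.0, 0.0), "TR": (1.0, 0.0), "BL": (0.0, 1.0), "BR": (1.0, 1.0)}

# Assumed size of the region around each corner that counts as a "hit".
CORNER_RADIUS = 0.3

# HYPOTHETICAL gesture table for illustration; not the real EdgeWrite alphabet.
GESTURES = {
    ("TL", "BL", "BR"): "L",
    ("TL", "TR", "BL", "BR"): "Z",
    ("TR", "TL", "BL", "BR"): "C",
}


def corner_at(point):
    """Return the name of the corner region containing the point, or None."""
    for name, corner in CORNERS.items():
        if math.dist(point, corner) <= CORNER_RADIUS:
            return name
    return None


def corner_sequence(stroke):
    """Reduce a stroke (a list of (x, y) points) to the corners it visits in order."""
    sequence = []
    for point in stroke:
        corner = corner_at(point)
        # Points between corners are ignored, so tremor along an edge or
        # diagonal does not change the result. Repeated hits collapse.
        if corner is not None and (not sequence or sequence[-1] != corner):
            sequence.append(corner)
    return tuple(sequence)


def recognize(stroke):
    """Look up the character for a stroke, or None if it is not in the table."""
    return GESTURES.get(corner_sequence(stroke))
```

Because only corner hits count, a shaky stroke and a clean one that visit the same corners in the same order are recognized identically.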

Sight, hearing, and motor abilities have been the major focus of innovation, but an increasing body of work also considers neurodiversity. For example, autistic people, people with Down syndrome, and people with dyslexia may process information in different and unique ways, requiring interface designs that support a diversity of interaction paradigms. As we have discussed, interfaces can be a major source of such information complexity. This has led to interface innovations that facilitate a range of aids, including images and videos for conveying information, iconographic, recognition-based speech generation for communication, carefully designed digital surveys to gather information in health contexts, and memory aids to facilitate recall. Some work has found that while these interfaces can facilitate communication, they are often not independent solutions, and require exceptional customization to be useful (Gibson et al. 2020).

Whereas all of the innovations above aimed to make particular types of information accessible to people with particular abilities, some techniques target accessibility problems at the level of software architecture. For example, accessibility frameworks and features like Apple’s VoiceOver in iOS are system-wide: when a developer uses Apple’s standard user interface toolkits to build a UI, the UI is automatically compatible with VoiceOver, and therefore automatically screen-readable. Because it’s often difficult to convince developers of operating systems and user interfaces to make their software accessible, researchers have also explored ways of modifying interfaces automatically. For example, Prefab (above) is an approach that recognizes the user interface controls based on how they are rendered on-screen, which allows it to build a model of the UI’s layout (Dixon and Fogarty 2010). Related work includes Genie, which retargets input on the web through command reverse engineering (Swearngin et al. 2017).
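The architectural idea, that a toolkit can make every interface built with it screen-readable, can be sketched with a toy widget tree. This is a hypothetical toolkit invented for illustration, not Apple's API: the `Widget` class, its `role` and `label` fields, and the `read_aloud` function are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Widget:
    """A node in a hypothetical toolkit's view hierarchy.

    Because every widget carries a role and a label, any UI built from
    these widgets exposes what a screen reader needs without extra work.
    """
    role: str
    label: str = ""
    children: List["Widget"] = field(default_factory=list)


def describe(widget: Widget) -> str:
    """The phrase a screen reader would speak for a single widget."""
    if widget.label:
        return f"{widget.label}, {widget.role}"
    return f"unlabeled {widget.role}"


def read_aloud(root: Widget) -> List[str]:
    """Walk the tree depth-first in display order and collect spoken phrases."""
    spoken = []
    stack = [root]
    while stack:
        widget = stack.pop()
        if widget.role != "container":  # containers only group other widgets
            spoken.append(describe(widget))
        # Reverse so the first child is popped, and therefore read, first.
        stack.extend(reversed(widget.children))
    return spoken
```

The `unlabeled` case shows where this approach stops helping: the toolkit can expose structure automatically, but a developer still has to supply meaningful labels.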

While all of the ideas above can make interfaces more universal, they can also have other unintended benefits for people without disabilities. For example, it turns out screen readers are great for people with ADHD, who may have an easier time attending to speech than text. Making web content more readable for people with low vision also makes it easier for people with situational impairments, such as dilated pupils after an eye doctor appointment. Captions in videos aren’t just good for people who are deaf and hard of hearing; they’re also good for watching video in quiet spaces. Investing in these accessibility innovations, then, isn’t just about impacting the 15% of people with disabilities, but also the rest of humanity.

References

- Dragan Ahmetovic, Daisuke Sato, Uran Oh, Tatsuya Ishihara, Kris Kitani, and Chieko Asakawa (2020). ReCog: Supporting Blind People in Recognizing Personal Objects. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Jeffrey P. Bigham (2014). Making the web easier to see with opportunistic accessibility improvement. ACM Symposium on User Interface Software and Technology (UIST).

- Neva Cherniavsky, Jaehong Chon, Jacob O. Wobbrock, Richard E. Ladner, Eve A. Riskin (2009). Activity analysis enabling real-time video communication on mobile phones for deaf users. ACM Symposium on User Interface Software and Technology (UIST).

- Morgan Dixon and James Fogarty (2010). Prefab: implementing advanced behaviors using pixel-based reverse engineering of interface structure. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Krzysztof Z. Gajos, Jacob O. Wobbrock, Daniel S. Weld (2007). Automatically generating user interfaces adapted to users' motor and vision capabilities. ACM Symposium on User Interface Software and Technology (UIST).

- Ryan Colin Gibson, Mark D. Dunlop, Matt-Mouley Bouamrane, and Revathy Nayar (2020). Designing Clinical AAC Tablet Applications with Adults who have Mild Intellectual Disabilities. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Anhong Guo, Xiang 'Anthony' Chen, Haoran Qi, Samuel White, Suman Ghosh, Chieko Asakawa, Jeffrey P. Bigham (2016). VizLens: A robust and interactive screen reader for interfaces in the real world. ACM Symposium on User Interface Software and Technology (UIST).

- Sarah Horton and David Sloan (2015). Accessibility for business and pleasure. ACM interactions.

- Muhammad Asiful Islam, Yevgen Borodin, I. V. Ramakrishnan (2010). Mixture model based label association techniques for web accessibility. ACM Symposium on User Interface Software and Technology (UIST).

- Shaun K. Kane, Jeffrey P. Bigham, Jacob O. Wobbrock (2008). Slide rule: making mobile touch screens accessible to blind people using multi-touch interaction techniques. ACM SIGACCESS Conference on Computers and Accessibility.

- Richard E. Ladner (2012). Communication technologies for people with sensory disabilities. Proceedings of the IEEE.

- Walter Lasecki, Christopher Miller, Adam Sadilek, Andrew Abumoussa, Donato Borrello, Raja Kushalnagar, Jeffrey Bigham (2012). Real-time captioning by groups of non-experts. ACM Symposium on User Interface Software and Technology (UIST).

- Martez E. Mott, Radu-Daniel Vatavu, Shaun K. Kane, Jacob O. Wobbrock (2016). Smart touch: Improving touch accuracy for people with motor impairments with template matching. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Kristen Shinohara and Jacob O. Wobbrock (2011). In the shadow of misperception: assistive technology use and social interactions. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Abigale Stangl, Meredith Ringel Morris, and Danna Gurari (2020). Person, Shoes, Tree. Is the Person Naked? What People with Vision Impairments Want in Image Descriptions. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Molly Follette Story (1998). Maximizing Usability: The Principles of Universal Design. Assistive Technology, 10:1, 4-12.

- Amanda Swearngin, Amy J. Ko, James Fogarty (2017). Genie: Input Retargeting on the Web through Command Reverse Engineering. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Garreth W. Tigwell, Benjamin M. Gorman, and Rachel Menzies (2020). Emoji Accessibility for Visually Impaired People. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Jacob O. Wobbrock, Brad A. Myers, John A. Kembel (2003). EdgeWrite: a stylus-based text entry method designed for high accuracy and stability of motion. ACM Symposium on User Interface Software and Technology (UIST).

- Jacob O. Wobbrock, Shaun K. Kane, Krzysztof Z. Gajos, Susumu Harada, Jon Froehlich (2011). Ability-Based Design: Concept, Principles and Examples. ACM Transactions on Accessible Computing.