Universality and average-case algorithm runtimes

Let $H > 0$ be a positive-definite $N \times N$ matrix, and let $x_0$ be an $N$-dimensional random vector. The power method for computing the largest eigenvalue of $H$ is the iteration, for $j = 1,2,\ldots$,

$$y_j = x_{j-1}/\|x_{j-1}\|_2,$$

$$x_j = Hy_j,$$

$$\mu_j = x_j^* y_j.$$

Then $\mu_j \to \lambda_{\max}$, the largest eigenvalue of $H$, as $j \to \infty$. With P. Deift, we considered the case where $H = XX^*/M$ is a sample covariance matrix. Here $X \in \mathbb C^{N \times M}$ has independent entries with mean zero and variance one that satisfy some technical tail assumptions; the entries may be real or complex. Define the (random) iteration count

$$ T(H,x_0,\epsilon) = \min\{ j: |\mu_j - \mu_{j-1} | < \epsilon^2 \}. $$

That is, the algorithm terminates once two successive iterates $\mu_{j-1}$ and $\mu_j$ differ by less than $\epsilon^2$. We proved the following universal, average-case theorem for $T$:
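The iteration and its stopping rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used for the results here: the function names `power_method_halting_time` and `sample_covariance`, the `max_iter` safeguard, and the choice of complex Gaussian entries for $X$ and $x_0$ are all hypothetical. Since $\mu_0$ is not defined, the sketch begins comparing at $j = 2$.

```python
import numpy as np


def sample_covariance(N, M, rng):
    """Return H = X X^* / M, with X an N x M matrix of iid complex Gaussian
    entries of mean zero and variance one (an assumed entry distribution)."""
    X = (rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))) / np.sqrt(2)
    return X @ X.conj().T / M


def power_method_halting_time(H, x0, eps, max_iter=1_000_000):
    """Run the power method on Hermitian H from x0 and return (T, mu_T),
    where T is the first j >= 2 with |mu_j - mu_{j-1}| < eps**2."""
    x = np.asarray(x0, dtype=complex)
    mu_prev = None
    for j in range(1, max_iter + 1):
        y = x / np.linalg.norm(x)      # y_j = x_{j-1} / ||x_{j-1}||_2
        x = H @ y                      # x_j = H y_j
        mu = np.vdot(x, y).real        # mu_j = x_j^* y_j, real for Hermitian H
        if mu_prev is not None and abs(mu - mu_prev) < eps**2:
            return j, mu
        mu_prev = mu
    raise RuntimeError("stopping criterion not met within max_iter iterations")


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, M = 100, 200                    # corresponds to d = N / M = 1/2
    H = sample_covariance(N, M, rng)
    x0 = rng.standard_normal(N) + 1j * rng.standard_normal(N)
    T, mu = power_method_halting_time(H, x0, eps=1e-4)
    print("halting time:", T)
    print("error in mu:", abs(mu - np.linalg.eigvalsh(H)[-1]))
```

Repeating the experiment over many independent draws of $H$ and $x_0$ gives an empirical distribution of $T$, which is the quantity the theorem describes.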

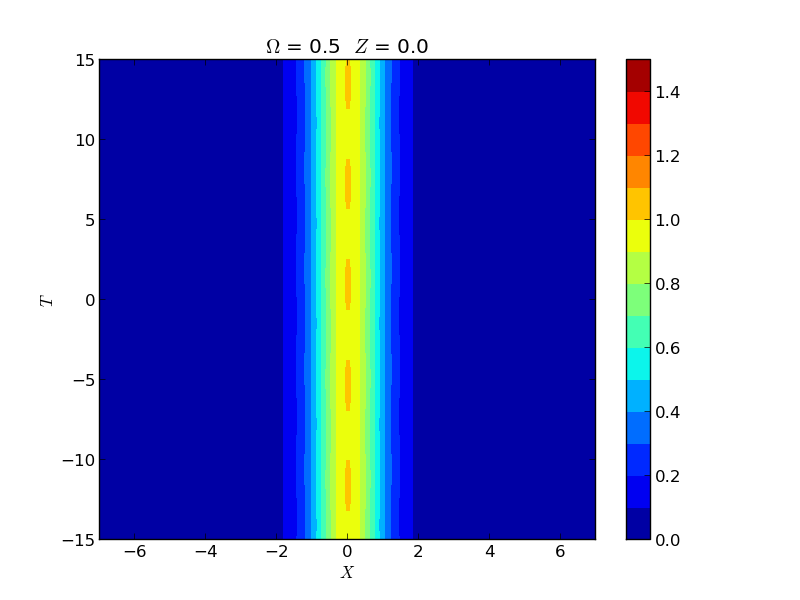

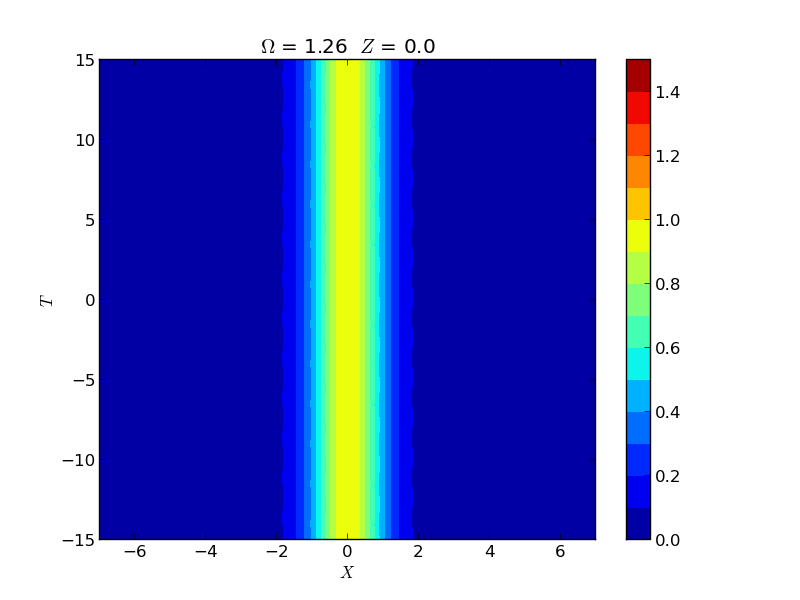

Theorem: Let $\epsilon \ll N^{-5/3 - \sigma}$ for some $\sigma > 0$, and let $M \sim N/d$ with $0 < d < 1$. Then there exist a distribution function $F_\beta^{\mathrm{gap}}(t)$ and a constant $c$ depending only on $d$ such that $$ \lim_{N \to \infty} \mathbb P \left( \frac{ T(H,x_0,\epsilon)}{c N^{2/3} (\log \epsilon^{-1} - \frac{2}{3} \log N)} \leq t \right) = F_\beta^{\mathrm{gap}}(t), \quad t \geq 0.$$

Furthermore, the distribution function $F_\beta^{\mathrm{gap}}(t)$ can be identified as the limiting distribution, after rescaling, of the inverse of the top gap in the real ($\beta =1$) and complex ($\beta = 2$) cases. This theorem gives both universality for and the average-case behavior of the power method. Similar results hold for the inverse power method, the QR algorithm and the Toda algorithm.
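A rough heuristic, offered only as a sketch and not as the proof, suggests why the inverse of the top gap and the factor $N^{2/3}$ appear. Write $\lambda_1 > \lambda_2$ for the two largest eigenvalues of $H$ (notation introduced here for illustration). For Hermitian $H$, the error $\lambda_1 - \mu_j$ decays like $(\lambda_2/\lambda_1)^{2j}$, up to a prefactor depending on $x_0$, so the stopping criterion is first met when roughly

$$\left(\frac{\lambda_2}{\lambda_1}\right)^{2T} \approx \epsilon^2, \qquad \text{that is,} \qquad T \approx \frac{\log \epsilon^{-1}}{\log(\lambda_1/\lambda_2)} \approx \frac{\lambda_1}{\lambda_1 - \lambda_2} \log \epsilon^{-1}.$$

At the upper edge of the spectrum of a sample covariance matrix, $\lambda_1$ is of order one while the gap $\lambda_1 - \lambda_2$ is of order $N^{-2/3}$, which gives

$$T \approx N^{2/3} \log \epsilon^{-1} \times (\text{rescaled inverse gap}).$$

This sketch ignores the prefactor and so does not account for the $-\frac{2}{3}\log N$ correction in the theorem.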