Research

My research lies at the intersection of complex networks, modeling, high-performance simulation, data/metadata/paradata management, and analysis and visualization. I have applied these interests to problems in computational neuroscience, cybersecurity, artificial intelligence, and nonlinear dynamics.

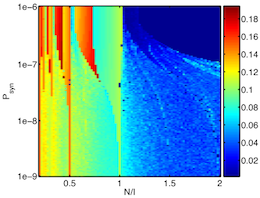

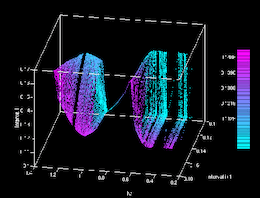

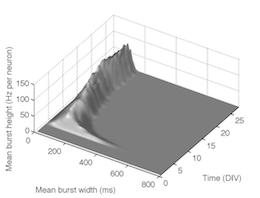

My near-term research focuses on two projects: modeling of emergency services communications systems (ESCS; 911 in North America) and modeling of the growth and development of cultures of biological neural networks.

Teaching

As a founding faculty member here at UWB, and a near-founding-faculty member at HKUST, I have been involved in the development and teaching of a broad cross-section of the computer science core, as well as a range of upper-level undergraduate and graduate electives. Subjects I have taught include introductory, medium-level, and advanced programming, programming tools, object-oriented design, data structures and algorithms, discrete mathematics, calculus, technical writing for software professionals, computer graphics, computer vision, visualization, multimedia, computer architecture, artificial intelligence, neural networks, complex systems, signal processing, and expert systems.

Research.

So what is this mind of ours: what are

these atoms with consciousness? Last week's potatoes!

They now can remember what was going on in my mind

a year ago — a mind which has long ago been

replaced.

— Richard P. Feynman

My research group develops and maintains a high-performance simulation framework, called Graphitti, to enable researchers to develop large-scale and long-duration simulations of graph-based systems. This simulator has been applied to biological nervous systems and next-generation 911 communications.

We also build and maintain Workbench, a system that allows investigators to track simulation code, experiment configurations, and data produced over many iterations of coding and simulation. Once fully developed, Workbench will allow them to be confident that their results are valid and to easily identify when changing assumptions alter the results.

We eat our own dog food: we use this framework in our own research in computational neuroscience and critical infrastructure security.

Teaching.

Artificial Neural Networks

A "nuts and bolts" coverage of the mathematical and algorithmic fundamentals of ANNs. Topics include basic neurobiology, linear algebra, optimization, supervised and unsupervised learning, backpropagation in multi-layer perceptrons, competitive and recurrent networks, radial basis functions, and learning in deep neural networks. See the Fall 2018 CSS 485 syllabus and schedule.

Signal Computing

How computers can process information from the physical world as digital signals. Topics include physical properties of source information, devices for information capture, digitization, compression, digital media representation, mathematics and algorithms relating to digital signal processing, and network communication. See the Fall 2016 CSS 457 syllabus and schedule.

Programming Issues With Object-Oriented Languages

This class works as a combination of laboratory and tutorial to help students investigate and understand some of the deeper and more obscure aspects of software development in C++. It covers language and development/execution environment differences, including data types, control structures, arrays, and I/O; addressing and memory management issues, including pointers, references, functions, and their passing conventions; and object-oriented design specifics related to structured data and classes.

Computational Neuroscience

Everything we are and do, from the simplest physical act to the most complex and subtle thought, is contained within the three pounds of grey matter we carry within our heads. After millennia of pondering and decades of scientific research, we've only just begun to unlock the nature of how nervous systems accomplish their myriad tasks. This course provides an overview of what we've learned about nervous system function and an understanding of how much we still don't know. Students evaluate the biophysical properties of nervous systems from the point of view of computation, and apply computational tools to simulate and analyze nervous system operation.

Expert Systems

This course introduces students to rule-based programming in the context of declarative modeling of expert human knowledge. It has an additional focus on building expert systems applications as part of larger systems, including web-based and enterprise systems. Besides rule-based programming, expert systems operation, and knowledge engineering, topics will include aspects of Java that are useful for developing these systems, such as JavaBeans, serialization, applets, servlets, J2EE, JavaServer Pages, Tomcat, web services, and XML.

About Me.

I grew up in Philadelphia, reading science fiction and dreaming of someday having a robot army to call my own. I've always been interested in the intersection of engineering and biology. As an undergrad at Washington University in St. Louis, I double-majored in Computer Science and Electrical Engineering. Between undergrad and graduate studies, I worked for Philips and Texas Instruments. In grad school, I had the opportunity to take neuroscience classes in the UCLA Medical School. I was fascinated with the idea of building simulations of living systems and how that could allow researchers to answer questions that could be posed in rigorously mathematical terms: questions that couldn't easily be answered by experiments on living organisms, but could be verified that way.

I have a lot of fun following my nose when it comes to research and working with students in both classroom and research settings. Around 2008, about the time I was promoted to full Professor, I was honored to be entrusted with leading UW Bothell's Computing and Software Systems Program and looking out for the well-being of its faculty, staff, and students. More recently, I was charged with supporting development of graduate programs in our School of STEM, and I am currently expanding cybersecurity in UWB STEM and throughout UW as the director of our National Center of Academic Excellence. Throughout, I've cultivated a keen sense of humor and social justice, formed during a childhood listening to George Carlin albums and watching Billy Jack movies.
