mmf.archive

| Name | Description |
| --- | --- |
| Archive([flat, tostring, check_on_insert, ...]) | Archival tool. |
| DataSet(module_name[, mode, path, ...]) | Creates a module module_name in the directory path representing a set of data. |
| ArchiveError | Archiving error. |
| DuplicateError(name) | Object already exists. |
| restore(archive[, env]) | Return dictionary obtained by evaluating the string archive. |
| repr_(obj[, robust]) | Return representation of obj. |
| get_imports(obj[, env]) | Return imports = [(module, iname, uiname)] where iname is the constructor of obj. |

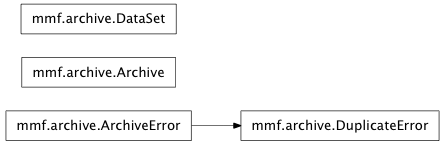

Inheritance diagram for mmf.archive:

This is an idea for a model of objects that save their state. Here is a typical usage:

>>> a = Archive()
>>> a.insert(x=3)
'x'
>>> a.insert(y=4)
'y'
>>> s = str(a)

You could now write the string to a file:

with open('file.py', 'w') as f:
    f.write(s)

and later read it back:

with open('file.py') as f:
    s = f.read()

Now we can restore the archive:

>>> d = {}
>>> exec(s, d)
>>> d['x']
3
>>> d['y']
4

Objects can aid this by implementing an archive_1 method, for example:

def archive_1(self):
    '''Return (rep, args, imports) where obj can be reconstructed
    from the string rep evaluated in the context of args with the
    specified imports = list of (module, iname, uiname) where one
    has either "import module as uiname", "from module import
    iname" or "from module import iname as uiname".
    '''
    args = dict(a=self.a, b=self.b)  # ... one entry per constructor argument
    module = self.__class__.__module__
    name = self.__class__.__name__
    imports = [(module, name, name)]
    # Pass each argument by keyword as "k=k"; args supplies the values.
    keyvals = ["=".join((k, k)) for k in args]
    rep = "%s(%s)" % (name, ", ".join(keyvals))
    return (rep, args, imports)
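The triple returned by archive_1() contains everything needed to rebuild the object. The sketch below shows one way to do that; rebuild() is a hypothetical helper (not part of mmf.archive), importlib stands in for whatever import mechanism the archive really uses, and fractions.Fraction serves as a stand-in class whose triple follows the "k=k" convention above.

```python
import importlib


def rebuild(rep, args, imports):
    """Evaluate rep with args and the listed imports in scope.

    Hypothetical helper: turns an (rep, args, imports) triple of the kind
    returned by archive_1() back into an object.
    """
    env = dict(args)
    for module, iname, uiname in imports:
        # Equivalent to "from module import iname as uiname".
        env[uiname] = getattr(importlib.import_module(module), iname)
    return eval(rep, env)


# A triple shaped like archive_1() output, for a standard-library class.
triple = ("Fraction(numerator=numerator, denominator=denominator)",
          dict(numerator=3, denominator=4),
          [("fractions", "Fraction", "Fraction")])
obj = rebuild(*triple)
```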

The idea is to save state in a file that looks like the following:

import numpy as _numpy
a = _numpy.array([1, 2, 3])
del _numpy

Note

When executing the string, always pass a dictionary to serve as the execution context:

>>> a = Archive()
>>> a.insert(x=3)
'x'
>>> s = str(a)
>>> d = {}
>>> exec(s, d)
>>> d['x']
3

If you instead execute the code directly in your current namespace, it will attempt to delete ‘__builtins__’ (so as not to clutter the dictionary) and may render the interpreter unusable!

As a last resort, we consider the repr of the object. If it starts with <, as is customary for instances of many classes, then we try pickling the object; otherwise we use the repr (which, for example, allows builtin types to be archived simply).
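The last-resort rule can be sketched as follows. fallback_rep() is a hypothetical helper, not the module's code, and it assumes that the restoring context provides the pickle module under that name.

```python
import pickle


def fallback_rep(obj):
    """Return source text that rebuilds obj when eval'd with `pickle` in scope.

    Hypothetical sketch: use repr() unless it looks like '<...>', in which
    case embed a pickle of the object instead.
    """
    rep = repr(obj)
    if rep.startswith('<'):
        # A '<...>' repr cannot be evaluated, so pickle the object instead.
        return "pickle.loads(%r)" % (pickle.dumps(obj),)
    return rep
```

For builtin values such as [1, 2.5, 'a'] the repr is used unchanged, while something like the builtin function len (repr <built-in function len>) is embedded as a pickle.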

Limitations

Archives must not contain explicit circular dependencies. These must be managed by constructors:

>>> l1 = []
>>> l2 = [l1]
>>> l1.append(l2)
>>> l1  # repr cannot represent the cycle...
[[[...]]]
>>> a = Archive()
>>> a.insert(l=l1)
'l'
>>> str(a)
Traceback (most recent call last):
...
CycleError: Archive contains cyclic dependencies.
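Detecting such cycles amounts to a depth-first search that tracks the containers on the current path. The sketch below is hypothetical: it handles only lists, tuples, and dicts and defines its own CycleError. It also shows why shared references, which archives support, are not mistaken for cycles.

```python
class CycleError(Exception):
    """Hypothetical stand-in for the archive's CycleError."""


def check_acyclic(obj, _active=None):
    """Raise CycleError if obj (lists, tuples, dicts) refers back to itself.

    _active holds the ids of containers on the current DFS path. A shared
    (non-cyclic) reference is fine because its first visit has already
    left the path by the time it is reached again.
    """
    if _active is None:
        _active = set()
    if isinstance(obj, dict):
        children = list(obj.values())
    elif isinstance(obj, (list, tuple)):
        children = list(obj)
    else:
        return
    if id(obj) in _active:
        raise CycleError("Archive contains cyclic dependencies.")
    _active.add(id(obj))
    for child in children:
        check_acyclic(child, _active)
    _active.discard(id(obj))
```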

Large Archives

For small amounts of data, the string representation of an Archive is usually sufficient. For large amounts of binary data, however, this is extremely inefficient. In this case, a separate archive format is used in which the archive is turned into a module that contains a binary data file.
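A rough way to see the inefficiency is to compare the size of a list of floats written as Python source (what a plain string archive contains) with the same values packed as raw 8-byte doubles. This is a hypothetical measurement using only the standard library, not the module's actual binary format.

```python
import array
import random

# 1000 pseudo-random doubles, seeded for reproducibility.
random.seed(0)
values = [random.random() for _ in range(1000)]

# Characters needed to store the values as Python source text.
text_size = len(repr(values))
# Bytes needed to store the same values in binary form (8 per double).
binary_size = len(array.array('d', values).tobytes())
```

Each value needs roughly 19 or 20 characters of text against 8 bytes of binary data, and parsing the text back on restore costs additional time.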

Todo

- Consider using imports rather than execfile etc. for loading DataSets. This allows the components to be byte-compiled for performance. (This only really helps if the components have lots of code; most of my loading performance issues are due instead to the execution of constructors, so this will not help.) This is also important for the Python 3.0 conversion.

- Make sure that numpy arrays from tostring() are NOT subject to replacement somehow. I am not exactly sure how to reproduce the problem, but it is quite common for these strings to contain things like ‘_x’.

- Graph reduction occurs for nodes that have more than one parent. This does not consider the possibility that a single node may refer to the same object several times. This has to be examined so that non-reducible nodes are not reduced (see the test case which fails).

- _replace_rep() is naive (it just does text replacements). The alternative _replace_rep_robust() is slow.

- It would be nice to be able to use import A.B and then just use the names A.B.x, A.B.y etc. However, the name A could clash with other symbols, and it cannot be renamed (i.e. import A_1.B would not work). To avoid name clashes, we always use either the import A.B as B form or the from A.B import x form, both of which can be renamed.

- Maybe allow reps to be suites for objects that require construction and initialization. (We could also allow a special method such as restore() to be called to restore the object.)

- Performance: There have been some performance issues. In c9e9fff8662f, a major improvement was made (this is not in archive!?!). In daa21ec81421, another bottleneck was removed. In 23999d0c395e, some of the code to make unique indices was found to run in O(n^2) time because of expensive “in” lookups; this was fixed by adding a _maxint cache. The remaining performance issues appear to be in _replace_rep().
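The _maxint fix mentioned in the last item can be illustrated with a hypothetical name generator. Rather than probing candidate names with “in” until a free one turns up (O(n) per name, O(n^2) overall), it remembers the largest integer issued for each prefix, so each new name costs O(1). The class and method names are assumptions, and the sketch ignores clashes with names chosen by the user.

```python
class UniqueNamer(object):
    """Hypothetical sketch: generate unique names such as '_l_5' in O(1)."""

    def __init__(self):
        # Largest integer handed out so far, keyed by prefix.
        self._maxint = {}

    def new_name(self, prefix):
        n = self._maxint.get(prefix, -1) + 1
        self._maxint[prefix] = n
        return "%s_%i" % (prefix, n)


namer = UniqueNamer()
first = namer.new_name('_l')
```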

Developer’s Note

After issue 12 arose, I decided to change the structure of archives to minimize the need to replace text. New archives will evaluate objects in a local scope. Here is an example, first in the old format:

from mmf.objects import Container as _Container
_y = [1, 2, 3, 4]
l1 = [_y, [1, _y]]
l2 = [_y, l1]
c = _Container(_y=_y, x=1, l=l2)
del _Container
del _y
try: del __builtins__
except NameError: pass

Now in an explicit local scoping format using dictionaries:

_g = {}
_g['_y'] = [1, 2, 3, 4]
_d = dict(y=_g['_y'])
l1 = _g['_l1'] = eval('[y, [1, y]]', _d)
_d = dict(y=_g['_y'],
          l1=l1)
l2 = _g['_l2'] = eval('[y, l1]', _d)
_d = dict(x=1,
          _y=_g['_y'],
          l2=l2,
          Container=__import__('mmf.objects', fromlist=['Container']).Container)
c = _g['_c'] = eval('Container(x=x, _y=_y, l=l2)', _d)
del _g, _d
try: del __builtins__
except NameError: pass

Now a version using local scopes to eschew eval(). One can use either classes or functions: preliminary profiling shows functions to be slightly faster (and there is no need for global statements), so I am using functions for now. Local variables are assigned using keyword arguments. The idea is to establish a one-to-one correspondence between functions and objects so that the representation can be evaluated without requiring the textual replacements that have been the source of errors.

The old format is clearer, but the textual replacements it requires make it somewhat unreliable. Here is the function-based version:

_y = [1, 2, 3, 4]  # No arguments here
def _d(y):
    return [y, [1, y]]
l1 = _d(y=_y)
def _d(y):
    return [y, l1]
l2 = _d(y=_y)
def _d(x):
    from mmf.objects import Container as Container
    return Container(x=x, _y=_y, l=l2)
c = _d(x=1)
del _d
del _y
try: del __builtins__
except NameError: pass
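To check that the function-based format preserves shared references and leaves only the public names behind, the text can be executed in a dictionary. In this hypothetical, self-contained version, dict stands in for mmf.objects.Container so that it runs without mmf installed.

```python
# Function-scoped archive text; dict(...) replaces Container(...) (assumption).
ARCHIVE = '''
_y = [1, 2, 3, 4]
def _d(y):
    return [y, [1, y]]
l1 = _d(y=_y)
def _d(y):
    return [y, l1]
l2 = _d(y=_y)
def _d(x):
    return dict(x=x, _y=_y, l=l2)
c = _d(x=1)
del _d
del _y
try: del __builtins__
except NameError: pass
'''

# Always execute archives in a dictionary, never in the current namespace.
env = {}
exec(ARCHIVE, env)
c = env['c']
```

Because each helper function receives its local names as keyword arguments, no text in the archive has to be rewritten. The single list _y is still the same object wherever it appears.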

- class mmf.archive.Archive(flat=True, tostring=True, check_on_insert=False, array_threshold=inf, datafile=None, pytables=True, allowed_names=None, gname_prefix='_g', scoped=True, robust_replace=True)

Bases: object

Archival tool.

Maintains a list of symbols to import in order to reconstruct the states of objects and contains methods to convert objects to strings for archival.

A set of options is provided that allow large pieces of data to be stored externally. These pieces of data (numpy arrays) will need to be stored at the time of archival and restored prior to executing the archival string. (See array_threshold and data).

Notes

A required invariant is that all uname be unique.

Examples

First we make a simple archive as a string (no external storage) and then restore it.

>>> arch = Archive(scoped=False)  # Old (unscoped) format
>>> arch.insert(x=4)
'x'

We can include functions and classes: these are stored by their names and imports.

>>> import numpy as np
>>> arch.insert(f=np.sin, g=restore)
['g', 'f']

Here we include a list and a dictionary containing that list. The resulting archive will contain only one copy of each list, even though the lists are referenced more than once.

>>> l0 = ['a', 'b']
>>> l = [1, 2, 3, l0]
>>> d = dict(l0=l0, l=l, s='hi')
>>> arch.insert(d=d, l=l)
['d', 'l']

Presently the archive is just a graph of objects and string representations of the objects that have been directly inserted. For instance, l0 above has not been directly included, so if it were to change at this point, this would affect the archive.

To make the archive persistent so there is no dependence on external objects, we call make_persistent(). This would also save any external data as we shall see later.

>>> _tmp = arch.make_persistent()

This is not strictly needed as it will be called implicitly by the following call to __str__() which returns the string representation. (Note also that this will thus be called whenever the archive is printed.)

>>> s = str(arch)
>>> print(s)
from mmf.archive... import restore as _restore
from numpy import sin as _sin
from __builtin__ import dict as _dict
_l_5 = ['a', 'b']
l = [1, 2, 3, _l_5]
d = _dict([('s', 'hi'), ('l', l), ('l0', _l_5)])
g = _restore
f = _sin
x = 4
del _restore
del _sin
del _dict
del _l_5
try: del __builtins__
except NameError: pass

Now we can restore this by executing the string. This should be done in a dictionary environment.

>>> res = {}
>>> exec(s, res)
>>> res['l']
[1, 2, 3, ['a', 'b']]
>>> res['d']['l0']
['a', 'b']

Note that the shared list is restored as a single object:

>>> res['l'][3] is res['d']['l0']
True
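Keeping a single copy of l0 requires noticing that it has more than one parent. A hypothetical sketch of that reference counting over lists, tuples, and dicts (the module's own graph reduction handles more types and also assigns the names):

```python
def shared_nodes(roots):
    """Return the ids of containers reachable from roots via >1 reference.

    Hypothetical sketch: such nodes (like l0 above) must get their own
    name so that they are written once and referenced everywhere else.
    """
    counts = {}
    seen = set()
    stack = list(roots)
    while stack:
        obj = stack.pop()
        if isinstance(obj, dict):
            children = list(obj.values())
        elif isinstance(obj, (list, tuple)):
            children = list(obj)
        else:
            continue
        if id(obj) in seen:
            continue  # count each parent's edges only once
        seen.add(id(obj))
        for child in children:
            if isinstance(child, (list, tuple, dict)):
                counts[id(child)] = counts.get(id(child), 0) + 1
                stack.append(child)
    return {i for i, n in counts.items() if n > 1}


l0 = ['a', 'b']
l = [1, 2, 3, l0]
d = dict(l0=l0, l=l, s='hi')
shared = shared_nodes([d, l])
```

Here l0 has two parents (l and d) and is reported, while l has only one parent (d) and is already named because it was inserted directly.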


- class mmf.archive.DataSet(module_name, mode='r', path='.', synchronize=True, verbose=False, _reload=False, array_threshold=100, backup_data=False, name_prefix='x_', timeout=60)

Bases: object

Creates a module module_name in the directory path representing a set of data.

The data set consists of a set of names not starting with an underscore ‘_’. Accessing any of these names (using __getattr__() or equivalent) will trigger a dynamic load of the data associated with that name. This data will not be cached, so if the returned object is deleted, the memory should be freed, allowing for the use of data sets larger than available memory. Assigning to these names (using __setattr__() or equivalent) will immediately store the corresponding data to disk.

In addition to the data proper, some information can be associated with each object that will be loaded each time the archive is opened. This information is accessed using __getitem__() and __setitem__() and will be stored in the __init__.py file of the module.
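The load-on-access, store-on-assignment behaviour can be sketched with __getattr__() and __setattr__(). LazyStore is hypothetical: it pickles each public name to its own file, whereas DataSet itself writes a module with its own data files.

```python
import os
import pickle
import tempfile


class LazyStore(object):
    """Hypothetical sketch of DataSet-style lazy attributes.

    Assigning a public name pickles the value to <path>/<name>.pkl at once;
    reading it unpickles from disk every time (no caching).
    """

    def __init__(self, path):
        object.__setattr__(self, '_path', path)

    def _file(self, name):
        return os.path.join(self._path, name + '.pkl')

    def __setattr__(self, name, value):
        if name.startswith('_'):
            object.__setattr__(self, name, value)
            return
        with open(self._file(name), 'wb') as f:
            pickle.dump(value, f)  # store immediately

    def __getattr__(self, name):
        # Only called when normal lookup fails, i.e. for stored data names.
        if name.startswith('_'):
            raise AttributeError(name)
        try:
            with open(self._file(name), 'rb') as f:
                return pickle.load(f)  # fresh load on every access
        except IOError:
            raise AttributeError(name)


store = LazyStore(tempfile.mkdtemp())
store.nxs = [10, 20]
```

Since nothing is cached, each access returns a new object, and dropping it releases its memory.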

Note

A potential problem with writable archives is one of concurrency: two open instances of the archive might have conflicting updates. We have two mechanisms for dealing with this as specified by the synchronize flag.

Warning

The mechanism for saving depends on __setattr__() and __setitem__() being called. This means that you must not rely on mutating members: mutation will not trigger a save. For example, the following will not behave as expected:

>>> import tempfile, shutil, os  # Make a unique temporary module
>>> t = tempfile.mkdtemp(dir='.')
>>> os.rmdir(t)
>>> modname = t[2:]
>>> ds = DataSet(modname, 'w')
>>> ds.d = dict(a=1, b=2)  # Will write to disk
>>> ds1 = DataSet(modname, 'r')
>>> ds1.d  # See?
{'a': 1, 'b': 2}

This is dangerous... Do not do this.

>>> ds.d['a'] = 6  # No write!
>>> ds1.d['a']  # This was not updated
1

Instead, do something like this: store the mutable object in a local variable, manipulate it, then reassign it:

>>> d = ds.d
>>> d['a'] = 6
>>> ds.d = d  # This assignment will write
>>> ds1.d['a']
6
>>> shutil.rmtree(t)
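The reason is ordinary Python semantics: ds.d['a'] = 6 first fetches ds.d and then calls __setitem__() on the returned dict, so the data set's __setattr__() never runs. WriteThrough below is a hypothetical stand-in that logs every save, which makes this visible.

```python
class WriteThrough(object):
    """Hypothetical stand-in for DataSet that logs each attribute 'save'."""

    def __init__(self):
        object.__setattr__(self, '_saved', [])

    def __setattr__(self, name, value):
        # Only attribute assignment reaches this hook, so only it "saves".
        self._saved.append(name)
        object.__setattr__(self, name, value)


ds = WriteThrough()
ds.d = dict(a=1, b=2)  # __setattr__ runs: 'd' is saved
ds.d['a'] = 6          # mutates the dict in place; no save is logged
d = ds.d
d['b'] = 7
ds.d = d               # reassignment runs __setattr__ again
```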

Examples

First we make the directory that will hold the data. Here we use the tempfile module to make a unique name.

>>> import tempfile, shutil, os  # Make a unique temporary module
>>> t = tempfile.mkdtemp(dir='.')
>>> os.rmdir(t)
>>> modname = t[2:]

Now, make the data set.

>>> ds = DataSet(modname, 'w')

Here is the data we are going to store.

>>> import numpy as np
>>> nxs = [10, 20]
>>> mus = [1.2, 2.5]
>>> dat = dict([((nx, mu), np.ones(nx)*mu)
...             for mu in mus
...             for nx in nxs])

Now we add the data. It is written upon insertion:

>>> ds.nxs = nxs
>>> ds.mus = mus

If you want to include information about each point, then you can do that with the dictionary interface:

>>> ds['nxs'] = 'Particle numbers'
>>> ds['mus'] = 'Chemical potentials'

This information will be loaded every time, but the data will only be loaded when requested.

Here is a typical usage, storing data with some associated metadata in one shot using _insert(). This is a public member, but we still use an underscore so that there is no chance of a name conflict with a data member called ‘insert’ should a user want one...

>>> for (nx, mu) in dat:
...     ds._insert(dat[(nx, mu)], info=(nx, mu))
['x_0']
['x_1']
['x_2']
['x_3']

>>> print(ds)
DataSet './...' containing ['mus', 'nxs', 'x_2', 'x_3', 'x_0', 'x_1']

Here is the complete set of info. This information is stored in the _info_dict dictionary as a set of records. Don’t modify this directly, though, as this will not properly write the data...

>>> [(k, ds[k]) for k in sorted(ds)]
[('mus', 'Chemical potentials'), ('nxs', 'Particle numbers'), ('x_0', (10, 2.5)), ('x_1', (20, 1.2)), ('x_2', (10, 1.2)), ('x_3', (20, 2.5))]
>>> [(k, getattr(ds, k)) for k in sorted(ds)]
[('mus', [1.2, 2.5]), ('nxs', [10, 20]), ('x_0', array([ 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5])), ('x_1', array([ 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2])), ('x_2', array([ 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2])), ('x_3', array([ 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5]))]

Todo

Fix module interface...

To load the archive, you can import it as a module:

>> mod1 = __import__(modname)

The info is again available in _info_dict and the actual data can be loaded using the load() method. This allows the data set to include large amounts of data, loading only what is needed:

>> mod1._info_dict['x_0'].info
(20, 2.5)
>> mod1._info_dict['x_0'].load()
array([ 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5, 2.5])

If you want to modify the data set, then create a new data set object pointing to the same place:

>>> ds1 = DataSet(modname, 'w')
>>> print(ds1)
DataSet './...' containing ['mus', 'nxs', 'x_2', 'x_3', 'x_0', 'x_1']

This may be modified, but see the warnings above.

>>> import numpy as np
>>> ds1.x_0 = np.ones(5)

This should work, but fails within doctests. Don’t know why...:

>> reload(mod1)  # doctest: +SKIP
<module '...' from '.../mmf/archive/.../__init__.py'>

Here we open a read-only copy:

>>> ds2 = DataSet(modname)
>>> ds2.x_0
array([ 1., 1., 1., 1., 1.])
>>> ds2.x_0 = 6
Traceback (most recent call last):
...
ValueError: DataSet opened in read-only mode.
>>> shutil.rmtree(t)

Constructor. Note that all of the parameters are stored as attributes whose names are prefixed with an underscore.

Parameters:

synchronize : bool, optional

If this is True (default), then before any read or write, the data set will refresh all variables from their current state on disk. The resulting data set (with the new changes) will then be saved on disk. During the write, the archive will be locked so that only one DataSet can write at a time.

If it is False, then locking is performed once a writable DataSet is opened, so only one writable instance can be created at a time.

module_name : str

This is the name of the module under path where the data set will be stored.

mode : ‘r’, ‘w’

Read-only or read/write.

path : str

Directory in which to make the data set module.

name_prefix : str

This prefix, followed by an integer, is used to form unique names when _insert() is called without specifying a name.

verbose : bool

If True, then report actions.

array_threshold : int

Threshold size above which arrays are stored as HDF5 files.

backup_data : bool

If True, then backup copies of overwritten data will be saved.

timeout : int, optional

Time (in seconds) to wait for a writing lock to be released before raising an IOException. (Default is 60s.)

Warning

Although you can change entries by using the store method of the records, this will not write the “__init__.py” file until close() or write() is called. As a safeguard, these are called when the object is deleted, but you should call them explicitly when new data is added.

Warning

The locking mechanism is meant to prevent two archives from clobbering each other upon writing. It is not designed to prevent reading invalid information from a partially written archive (the reading mechanism does not use the locks).
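A writing lock with a timeout, as described for synchronize and timeout, can be built on atomic file creation. This is a hypothetical sketch: the lock file name, the polling interval, and the helper names are assumptions, and DataSet's real mechanism may differ.

```python
import errno
import os
import time


def acquire_lock(lock_path, timeout=60, poll=0.1):
    """Atomically create lock_path, waiting up to timeout seconds.

    Hypothetical sketch; raises IOError if the lock is still held at the
    deadline.
    """
    deadline = time.time() + timeout
    while True:
        try:
            # O_CREAT | O_EXCL fails if the file already exists.
            fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
            os.close(fd)
            return
        except OSError as e:
            if e.errno != errno.EEXIST:
                raise
            if time.time() >= deadline:
                raise IOError("Timed out waiting for lock %r" % lock_path)
            time.sleep(poll)


def release_lock(lock_path):
    """Release a lock taken with acquire_lock()."""
    os.remove(lock_path)
```

Because readers never call acquire_lock(), this kind of lock only serialises writers, which matches the second warning above.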

- __init__(module_name, mode='r', path='.', synchronize=True, verbose=False, _reload=False, array_threshold=100, backup_data=False, name_prefix='x_', timeout=60)


- class mmf.archive.ArchiveError

Bases: exceptions.Exception

Archiving error.

- __init__()

x.__init__(...) initializes x; see help(type(x)) for signature

- class mmf.archive.DuplicateError(name)

Bases: mmf.archive.ArchiveError

Object already exists.

- __init__(name)

- mmf.archive.restore(archive, env={})

Return dictionary obtained by evaluating the string archive.

archive is typically obtained by converting an Archive instance into a string using str() or repr():

Examples

>>> a = Archive()
>>> a.insert(a=1, b=2)
['a', 'b']
>>> arch = str(a)
>>> d = restore(arch)
>>> print("%(a)i, %(b)i" % d)
1, 2
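In essence, restoring means executing the string in a fresh dictionary. restore_sketch() below is a hypothetical equivalent, not the module's source. It copies env rather than executing into it, because a shared default such as env={} would otherwise accumulate names across calls.

```python
def restore_sketch(archive, env=None):
    """Hypothetical equivalent of restore(): exec archive in a new dict.

    The dict is seeded with a copy of env (if given) and returned.
    """
    d = dict(env or {})
    exec(archive, d)
    return d


d = restore_sketch("x = 4\ny = [x, x]\n"
                   "try: del __builtins__\nexcept NameError: pass\n")
```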

- mmf.archive.repr_(obj, robust=True)

Return representation of obj.

Stand-in repr function for objects that support the archive_1 method.

Examples

>>> class A(object):
...     def __init__(self, x):
...         self.x = x
...     def archive_1(self):
...         return ('A(x=x)', dict(x=self.x), [])
...     def __repr__(self):
...         return repr_(self)
>>> A(x=[1])
A(x=[1])
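To turn 'A(x=x)' and dict(x=[1]) into 'A(x=[1])', each argument name used as a value must be replaced by the repr of that value, while keyword names on the left of = are left alone. simple_repr() is a hypothetical sketch of such a text replacement. Like the _replace_rep() mentioned in the Todo list, it is naive: a name that also occurs inside a string literal in rep would be replaced as well.

```python
import re


def simple_repr(rep, args):
    """Substitute repr(args[name]) for each name of args used as a value.

    Hypothetical sketch: identifiers followed by a single '=' are keyword
    names and are skipped; other identifiers found in args are replaced.
    """
    def sub(match):
        name = match.group(0)
        return repr(args[name]) if name in args else name
    return re.sub(r'\b[A-Za-z_]\w*\b(?!\s*=(?!=))', sub, rep)
```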

- mmf.archive.get_imports(obj, env=None)

Return imports = [(module, iname, uiname)] where iname is the constructor of obj to be used and called as:

from module import iname as uiname
obj = uiname(...)

This may be useful when writing archive_1() methods.

Examples

>>> import numpy as np
>>> a = np.array([1, 2, 3])
>>> get_imports(a)
[('numpy', 'ndarray', 'ndarray')]
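For plain instances, the constructor is usually just the object's class, so a first approximation needs only type(obj), the same information the archive_1 example reads from self.__class__. This hypothetical sketch ignores the renaming and special cases that the real function may handle.

```python
def get_imports_sketch(obj):
    """Hypothetical: return [(module, iname, uiname)] for obj's class."""
    cls = type(obj)
    return [(cls.__module__, cls.__name__, cls.__name__)]
```

For the numpy array above this also gives [('numpy', 'ndarray', 'ndarray')], since numpy.ndarray reports 'numpy' as its module.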