In association with other adaptrodes and in the context of a neuron-like processing element, we have previously shown that multi-time scale associative learning is achieved [10],[11]. Furthermore, the adaptrode mechanism can easily enforce certain technical constraints on the temporal order of processed signals such that natural causal relations are encoded in the learned associations [10].

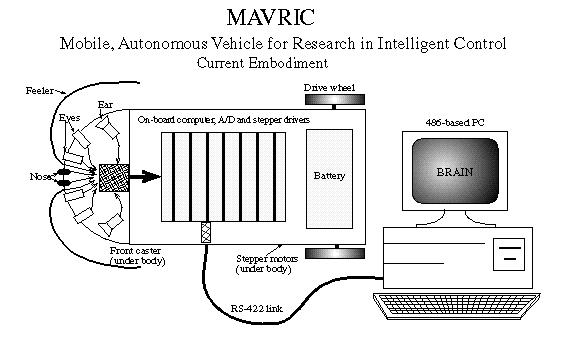

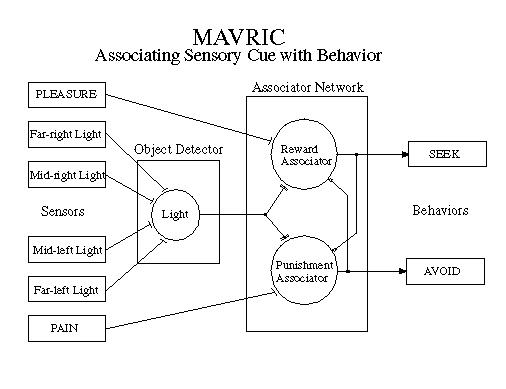

The research environment that we have chosen is autonomous robotics. MAVRIC (Mobile Autonomous Vehicle for Research in Intelligent Control) is an embodied [4] Braitenberg vehicle [3] that is situated [4] in a nonstationary, dynamic environment such as an ordinary room with movable and moving objects. MAVRIC is outfitted with a variety of sensors that allow it to sense the state of the environment (see Figure 1). These sensors are simple light, touch, sound and (simulated) smell [10]. In the figure, the cephalic (head) end (left side of figure) is arrayed with a variety of sensors, all of which produce proportional signals. An on-board computer polls the A/D board, controls the stepper motors and provides communications with the main computing platform, a 486-PC. Though a battery can be used to power MAVRIC (as shown), for long runs a power cable is tethered along with the RS-422 cable. A program called "BRAIN" runs on the PC to simulate the neurons of MAVRIC's brain.

MAVRIC's brain is based entirely on adaptrode neurons. No computation is employed other than the simulation of the neurons [10]. MAVRIC can now roam about and learn to associate various environmental cues, such as the presence of a light coupled with the sounding of a specific tone, with the occurrence of mission-relevant events, such as the appearance of a sought object, where those cues have causal relations to the events. That is, the cue event(s) are linked to the mission-relevant event by some causal mechanism. The cue is a cue precisely because of this relationship. MAVRIC's job is to learn those associations so as to maximize its chances of finding and exploiting the events [10]. The challenge presented to the adaptrode learning mechanism is to encode associations which are, themselves, time varying or nonstationary without destroying previously learned associations (so-called "destructive interference") [14].

In this paper we report on the basic architecture of this artificial brain and discuss some of our early findings and insights, along with some comments on future directions we hope to take. A major result of the work with MAVRIC has been the discovery of a new search method that employs quasi-chaotic oscillation to generate novel search paths while constraining the search envelope. The resulting search improves the robot's chances of finding a mission-critical object when the general direction is known but the exact location is not. The search is reminiscent of foraging search by animals.

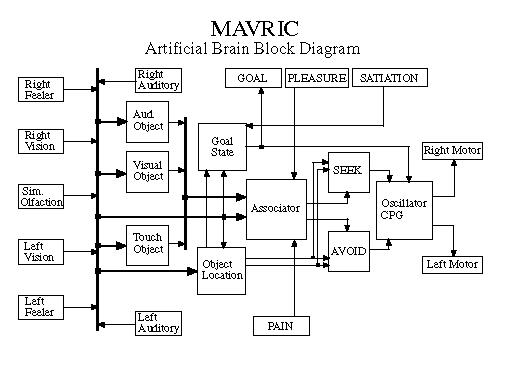

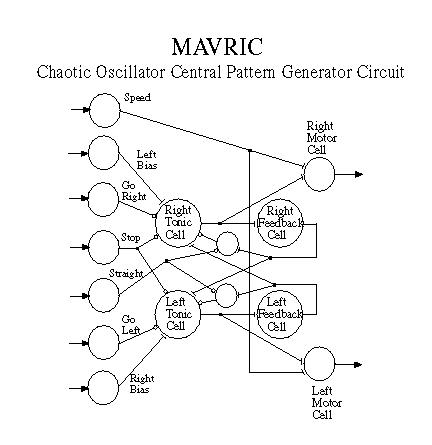

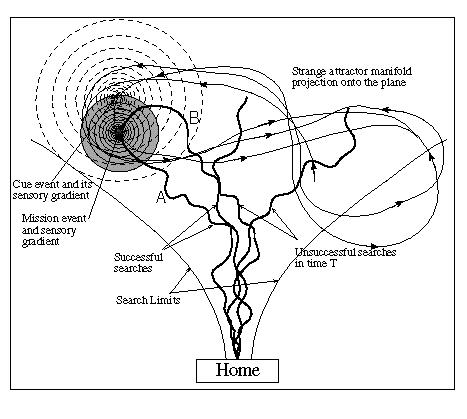

We have developed an interesting oscillator network (Figure 3) which generates a pair of signals, one of which is fed to the right motor and the other to the left motor. The combined effect of these signals produces a stochastic sinusoidal weave in the forward motion of the robot, a sort of drunken walk. Attractor reconstruction analysis of the net value of the signals (left output - right output) shows a strange attractor basin, indicating that the signal is at least quasi-chaotic.
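Attractor reconstruction from a single scalar series is commonly done by time-delay embedding. The sketch below illustrates that general technique only; the text does not say which reconstruction procedure, delay or embedding dimension was used, so `delay_embed`, `dim`, `tau` and the sample series are all illustrative assumptions.

```python
def delay_embed(series, dim=3, tau=5):
    """Return delay vectors (x[t], x[t+tau], ..., x[t+(dim-1)*tau]).

    dim and tau are hypothetical parameters, not values from the paper.
    """
    if dim < 1 or tau < 1:
        raise ValueError("dim and tau must be positive integers")
    span = (dim - 1) * tau  # samples consumed by one delay vector
    return [tuple(series[t + k * tau] for k in range(dim))
            for t in range(len(series) - span)]


# Placeholder for the net motor signal (left output - right output),
# one value per time step. Real data would come from the oscillator.
net = [float(i % 7) - 3.0 for i in range(20)]
points = delay_embed(net, dim=3, tau=2)
```

Plotting `points` in three dimensions would give the kind of phase portrait in which an attractor basin can be inspected.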

When not being stimulated by any meaningful sensory inputs, MAVRIC will weave in a constrained, yet novel, sinusoidal path as it searches its environment. The way in which MAVRIC appears to be following an ordered but stochastic search, reminiscent of a bloodhound weaving back and forth trying to pick up a scent, has led us to investigate the theory of foraging search as an alternative to stochastic methods. Foraging search in animals is a means by which the animal can find dynamically and sparsely distributed resources in a huge space [15],[13],[6]. One component of such a search is that, lacking any clues as to the whereabouts of a patch of resource, the animal conducts a stochastic, but not random-walk, path selection procedure[note 1] which ensures that it will explore a wider corridor than it would have by moving in a strictly straight line. This component is important in a dynamic environment where resources are either moving or coming into and out of existence (e.g., ripening of fruits in season) in a sporadic and episodic fashion [9]. Another component of foraging search, learning cues, will be discussed below.

The oscillator circuit was employed because it behaves similarly to central pattern generator (CPG) circuits used by many animals for motor control. The major advantage of these circuits is that input signals to different points in the circuit can modulate or shape the output to achieve changes in direction and/or speed. A "Go Straight" signal damps the oscillation amplitude so that MAVRIC moves straight ahead. A "Go Right" signal damps the right output signal so that the left motor runs faster than the right motor, thus turning the robot to the right. This feature is exploited to make the robot home in on cues that it detects in the course of searching. Once a recognized signal is detected, MAVRIC ceases its quasi-chaotic weave and moves in a relatively straight line toward the signal source using one of two methods to be discussed below.
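The modulation described above can be illustrated with a much simpler stand-in. This sketch replaces the adaptrode oscillator network with a plain antiphase sine pair, so it is not quasi-chaotic and is not the authors' circuit; the function name, gains and default values are assumptions chosen only to show how damping inputs shape the two motor outputs.

```python
import math


def motor_outputs(t, go_straight=0.0, go_right=0.0, go_left=0.0,
                  base=1.0, amplitude=0.5, period=4.0):
    """Return (left, right) motor drive at time t, in arbitrary units.

    Control inputs lie in [0, 1] and act as damping gains:
      go_straight damps the oscillation amplitude,
      go_right damps the right output, go_left damps the left output.
    All parameters are hypothetical.
    """
    swing = (amplitude * (1.0 - go_straight)
             * math.sin(2.0 * math.pi * t / period))
    left = (base + swing) * (1.0 - go_left)
    right = (base - swing) * (1.0 - go_right)
    return left, right


weaving = motor_outputs(1.0)                                 # outputs differ
straight = motor_outputs(1.0, go_straight=1.0)               # outputs equal
turning = motor_outputs(1.0, go_straight=1.0, go_right=0.5)  # left faster
```

With no control input the two outputs alternate in strength, which produces the weave. Full "Go Straight" damping makes them equal, and damping the right output leaves the left motor faster so the vehicle turns right.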

Heat, it turned out, was not a good choice due to problems with sensitivity and convection currents. Subsequently, the heat sensors were replaced by a microphone and narrow bandpass filter (see description of auditory system below). A gradient is simulated by controlling the volume of a tone emitted by a speaker located in the environment. If MAVRIC gets closer to the speaker (the object being sought), the volume is increased by an amount that can be detected by the on-board amplifier and a difference computed by the sniffer circuit. Similarly, if MAVRIC moves away from the speaker, the volume is decreased. Unfortunately, this alternative, while working well, has proved somewhat problematic in its own right. Due to the poor sensitivity of the on-board amplifying circuits (a reflection of budgetary constraints), the volume needed to represent MAVRIC reaching the object was distracting to the human observers. We had selected a pitch well within human hearing. Perhaps a pitch above 20 kHz would have been more appropriate.

Four light sensors, arrayed at divergent angles across the "cephalic" end, constitute the vision system in classic Braitenberg fashion. Light is used as one of the conditionable stimulus sources [10]. MAVRIC can sense the direction toward a brighter-than-background light source through a spatial difference computation. As a conditionable stimulus, light neither attracts nor repels MAVRIC unless it has learned to associate light with a seeking or avoidance reaction (see next section).
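One way to picture a spatial difference computation over four sensors is a normalized left-versus-right comparison. The sketch below is an assumption about the general idea, not the neural circuit used on MAVRIC; the sensor ordering, the `background` parameter and the function name are hypothetical.

```python
def light_bearing(readings, background=0.0):
    """Return a steering value in [-1, 1], or None if nothing stands out.

    readings: four sensor values ordered left to right (assumed layout).
    Negative means the source lies to the left, positive to the right.
    """
    if len(readings) != 4:
        raise ValueError("expected four sensor readings")
    # Keep only the part of each reading that exceeds the background level.
    excess = [max(r - background, 0.0) for r in readings]
    total = sum(excess)
    if total == 0.0:
        return None  # no brighter-than-background source detected
    left = excess[0] + excess[1]
    right = excess[2] + excess[3]
    return (right - left) / total
```

Dividing by the total makes the result depend on where the light is rather than on how bright it is.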

Two semi-directional microphones and a set of narrow bandpass filters provide MAVRIC with some primitive auditory sense. At present, only two tones are used. As with light, these tones are conditionable with respect to meaningful stimuli. Tone 'A' might be used to signal the presence of a mission-critical object while tone 'B' could signal a threat. MAVRIC's ability to sense direction with these tones is limited, so they are used as secondary association cues. When a tone is detected, MAVRIC still needs to search for the object using light and smell.

The last sense that MAVRIC uses is touch. It is outfitted with two compliant "feelers" that originate near the center line of the cephalus and wrap outward and around to the sides. These feelers generate a proportional signal when MAVRIC touches something. The signal rises quickly and is strong if MAVRIC runs into an object head-on (at an admittedly snail's pace!), and rises slowly and is weak if it brushes past an object. This sense allows MAVRIC to decide to back up or turn tangentially depending on the circumstances.

All of the sensory inputs are proportional. Signals are not conditioned or linearized. They are digitized by an 8-bit A/D converter and sampled at the rate of 10 times per second.
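To make the resolution of this sampling concrete, here is a minimal sketch of 8-bit quantization at 10 samples per second. It assumes a unipolar converter with a hypothetical `full_scale` input range; the text gives only the bit depth and the sampling rate.

```python
SAMPLE_RATE_HZ = 10                    # from the text
SAMPLE_PERIOD_S = 1.0 / SAMPLE_RATE_HZ


def quantize_8bit(value, full_scale=1.0):
    """Map a raw signal in [0, full_scale] to an integer code in 0..255.

    full_scale is an assumed parameter; inputs outside the range are clipped.
    """
    clipped = min(max(value, 0.0), full_scale)
    return round(clipped / full_scale * 255)
```

Under these assumptions one code step is about 0.4% of full scale, and a new value arrives from each sensor every 100 ms.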

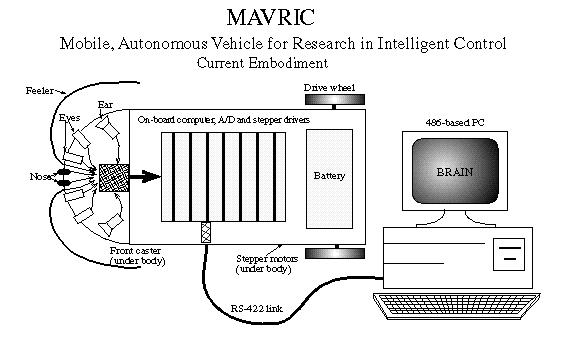

The associator network decides whether a stimulus is benign, desirable or harmful based on past experience. It then issues the appropriate signals to either the SEEK or the AVOID controller if the decision is desirable or harmful, respectively. If the stimulus is benign (not associated), then neither SEEK nor AVOID is stimulated and the robot simply wanders in its "drunken walk" weave searching for something of interest.
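The three-way decision and its routing can be summarized procedurally. In MAVRIC the decision emerges from adaptrode neurons; the thresholded `classify` function, its tie-breaking rule in favor of pain, and all names below are hypothetical stand-ins used only to show how decisions map to controllers.

```python
from enum import Enum


class Valence(Enum):
    BENIGN = "benign"
    DESIRABLE = "desirable"
    HARMFUL = "harmful"


def classify(pleasure_assoc, pain_assoc, threshold=0.5):
    """Hypothetical stand-in for the associator's decision.

    Inputs are learned association strengths in [0, 1]; giving pain
    priority on ties is an assumption, not a claim about the network.
    """
    if pain_assoc >= threshold and pain_assoc >= pleasure_assoc:
        return Valence.HARMFUL
    if pleasure_assoc >= threshold:
        return Valence.DESIRABLE
    return Valence.BENIGN


def dispatch(valence):
    """Return (seek, avoid) activation flags for a decision."""
    if valence is Valence.DESIRABLE:
        return True, False
    if valence is Valence.HARMFUL:
        return False, True
    return False, False  # benign: the default weave search continues
```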

The pain and pleasure inputs (as well as the satiation input of Figure 2) are currently implemented in software under the control of the experimenter who must decide whether some particular experimental event is to be harmful or pleasurable. MAVRIC does not now have pain or satiation sensors as such.

The gradient-following "sniffer" circuit has been used to get MAVRIC to detect and follow a gradient. We have used a specified tone to represent a "genetically" hard-wired scent of a reward (e.g., food). The range of this scent is kept limited to a relatively small area around the mission-critical object. MAVRIC is set loose at one end of the research lab and the object is placed in an arbitrary location in the lab. MAVRIC starts its quasi-chaotic forage as described above. If MAVRIC is "lucky", its path will wind through the area of the scent gradient, in which case MAVRIC changes behavior and follows the gradient toward the source. This form of foraging is successful only if the mission-critical resource is sufficiently dense in distribution or MAVRIC is given a sufficiently long period in which to search.
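Gradient following of this kind rests on comparing successive intensity samples. The following is a minimal sketch of that temporal difference, assuming integer A/D counts and a hypothetical `deadband` to ignore noise; it is not the sniffer circuit itself, which is built from neurons.

```python
def sniffer_step(previous, current, deadband=1):
    """Classify the change between two successive intensity samples.

    previous, current: A/D counts of the scent (tone) signal.
    deadband: assumed noise margin, in counts.
    """
    delta = current - previous
    if delta > deadband:
        return "approaching"  # signal rising: keep the current heading
    if delta < -deadband:
        return "receding"     # signal falling: a course change is needed
    return "no_change"


def trend(samples, deadband=1):
    """Apply sniffer_step to each consecutive pair of samples."""
    return [sniffer_step(a, b, deadband)
            for a, b in zip(samples, samples[1:])]
```

For example, `trend([100, 110, 110, 90])` reports a rising signal, then no change, then a falling signal.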

A better solution is to allow MAVRIC to learn cue events that are causally associated with the occurrence of the mission-critical resource, but that have an inherently larger radius of detection, like light or sound. As per the description of the associator circuit above, MAVRIC can, in fact, learn to associate the occurrence of a light source with that of a resource. Since the light can be detected from a much larger distance, MAVRIC can, upon detection of the light, home in on the light source, which brings it within the range of the scent gradient. It then follows the latter to the object it was seeking. The experimental layout for this behavior is depicted in Figure 5. MAVRIC starts its search from a "HOME" position in the lab. The point labelled "Mission event" marks the presence of food. The rings radiating outward from that point represent the "odor" gradient. Several typical search paths are shown along with the estimated envelope ("Search Limits") obtained from a number of search iterations. Note the "drunken weave" walk taken by the robot in its search. This is the pattern that is generated by the chaotic oscillator network. The weaving pattern is not a random walk, yet it does produce a novel path for each successive search. MAVRIC's success at finding food is dependent on the radius of the detectable gradient, relative to the surface area swept out by the search limits.

In the figure, the irregularly shaped figure-8 trajectory with arrowheads actually represents a projection of a classical deterministic chaotic attractor onto the plane (we used two dimensions of the Lorenz attractor). Using uniformly distributed time intervals, the occurrence and duration of mission events are governed by this trajectory. Thus we create an environment which is nonstationary and stochastic but not random. Also shown is a second event/gradient object that is chosen to be causally linked with the occurrence of the mission event. This event (e.g., the light source) and its gradient are together called the "Cue event", and it will be generated according to another uniformly distributed probability relative to the occurrence of the mission event. The "trick" of intelligence here is that the gradient of the cue event can be sensed at a much greater distance than can the mission gradient. For example, a point source of bright light will be sensed over a larger percentage of MAVRIC's range. It then turns out that if the cue event has the correct causal relation with the mission event, MAVRIC will learn this association through experiential encounters and begin to follow the light gradient as if it were the odor gradient. It will, however, preferentially follow the odor gradient once it is detected. In this way MAVRIC learns to use environmental cues to improve its foraging performance.
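A schedule of this kind can be sketched by integrating the Lorenz system and reading off a point at uniformly distributed intervals. The text does not say which two dimensions were projected, how the system was integrated, or what interval range was used, so the x-y projection, forward Euler stepping, standard Lorenz parameters, gap bounds and function names below are all assumptions.

```python
import random


def lorenz_xy(steps, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0,
              state=(1.0, 1.0, 1.0)):
    """Integrate the Lorenz system with forward Euler.

    Returns a list of `steps` (x, y) points; using x and y as the
    planar projection is an assumed choice.
    """
    x, y, z = state
    points = []
    for _ in range(steps):
        dx = sigma * (y - x)
        dy = x * (rho - z) - y
        dz = x * y - beta * z
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        points.append((x, y))
    return points


def event_schedule(n_events, min_gap=50, max_gap=200, seed=0):
    """Pick event positions along the trajectory at uniform random gaps.

    Returns a list of (step_index, (x, y)); gap bounds are hypothetical.
    """
    rng = random.Random(seed)
    trajectory = lorenz_xy(n_events * max_gap + 1)
    step = 0
    events = []
    for _ in range(n_events):
        step += rng.randint(min_gap, max_gap)  # uniformly distributed interval
        events.append((step, trajectory[step]))
    return events


events = event_schedule(5)
```

Mapping the attractor coordinates onto lab floor positions, and generating the linked cue event, are left out of this sketch.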

A moronic snail brain is seemingly not in the mainstream of AI research. As far as anyone can ascertain, snails do not reason or recognize faces or remember stories. Is it possible that studying such a "primitive" system can lead to insights that may help us one day to build machines that do have cognitive skills? From the biological side we are encouraged. Alkon [1] reports that some of the same cellular mechanisms involved in memory traces in the brains of real snails can be found in the neurons of mammalian brains. In the AI/Robotics camp we are not alone in our philosophical motivations [4].

But what have we garnered from MAVRIC that leads us to believe this track is worth pursuing? There are several important indications that the "bottom-up" development of intelligent, autonomous agents is realizable.

Many AI and connectionist approaches to pattern recognition are based on learning patterns or pattern classifications with little or no context. That is, the learned patterns have no meaning for the system; they are just facts. In MAVRIC, only the crudest sense of a pattern is learned: the association between tones and lights, for example. But those associations are always learned in the context of what is good or bad for the robot. Thus, every pattern has meaning and hence provides motivation for behavior. Basic reactive behaviors [4],[7] respond to stimuli that are prewired to represent harmful events or supportive events. This is analogous to an animal's genetically-governed wiring for pain or pleasure. What the robot learns is the causal relationship between events which themselves are innocuous (nonsemantic) and those that carry meaning in the sense of the prewired responses. The temporal ordering of causal relationships thus allows the robot to respond to learned stimuli as if they were the hardwired semantic stimuli. We believe this is more to the point of symbol grounding [5]. Symbols (patterns) come to be recognized for their ultimate relation to meaningful stimuli rather than just to sensory grounding. Coupled with secondary conditioning [10], we have the potential basis for abstraction of conditionable sensory stimuli. It is this potential that gives us confidence that this approach will lead to higher levels of intelligence.

The second insight could be phrased as: More behaviors = more sensors + more intelligence. Many of the reactive behaviors (e.g., SEEK and AVOID) are mutually exclusive, while others are priority based, somewhat along the lines of the subsumptive architectures of [4]. Just as there are nonsemantic sensory modalities at the input end, we have found it beneficial to add nonreactive behaviors to the output. As we have added these behaviors (e.g., quasi-chaotic search) along with nonsemantic sensors, the associator network and support networks such as the "Object Location" network of Figure 2 have likewise grown. In fact, it appears that as the number of sensor modalities/actions increases linearly, we may expect to see a quadratic increase in the number of neurons needed to associatively process the data and produce a corresponding new behavior. There really isn't anything surprising here from the perspective of biology. As we go up the phylogenetic scale we see a substantial increase in the size of the cerebral lobes compared to the brain stem as animals get "smarter". What has been somewhat surprising is the way in which we can add behaviors so easily without destroying the basic reactive functions of the robot. This suggests that evolutionary processes such as module-doubling, accretion and specialization may be applicable in the development of useful (read selected) robots, as they seem to be in nature.

There are severe limitations to the amount of processing that can be done with our present platform. However there are still a few areas we wish to explore that are within the realm of the current system. One of these is the role of nonassociative learning such as habituation and sensitization in the shaping of behavior. We suspect, based on the biological counterparts, that these adaptive responses will play an important part in increasing the intelligence of MAVRIC.

In order to incorporate more and more complex sensors, such as CCD arrays for image-like vision, we will clearly need to increase the processing horsepower of our brain hardware. Our goal is to move to a parallel processing environment such as the Transputer which seems ideally suited to running our simulated brain. Recently a very large commercial research lab of one of the world's leading computer companies donated a quadputer board and software to our lab to help us get to the next stage.

More recently one of us (Mobus) has been exploring a software-based agent called a cyberbot that is meant to forage for resources in an extended, distributed computing environment such as the Internet. The cyberbot is the software equivalent of a robot. It is fully embodied in terms of detecting objects in its environment (e.g., file names) and executing behaviors (e.g., jumping to the next node in a network). It is fully situated in a real, vast, dynamic and nonstationary environment: the phenomenal growth rate of the Internet is now legendary. The cyberbot vehicle, therefore, is not a mere simulation just because it is in software. Rather, we suspect it will prove every bit as interesting as a physical robot for exploring concepts in foraging behavior and the animatic route to higher intelligence.