Muhammad Aurangzeb Ahmad

University of Washington Bothell. Harborview Medical Center.

I am a Resident Fellow at the Harborview Medical Center (UW Medicine). I am also an Affiliate Assistant Professor in the Department of Computer Science at the University of Washington Bothell and an Affiliate Faculty member at Responsible AI Systems and Experiences, University of Washington. I received my PhD in Computer Science from the University of Minnesota and my Bachelor's in Computer Science from the Rochester Institute of Technology.

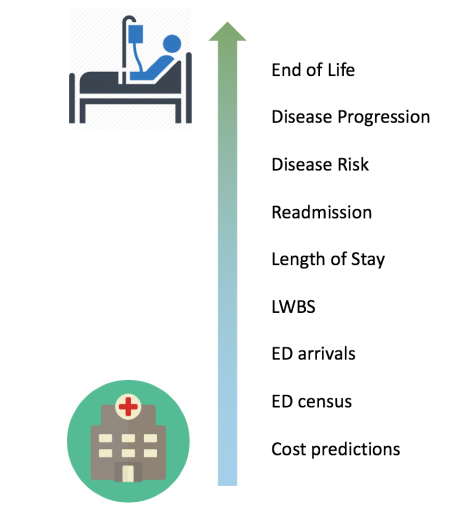

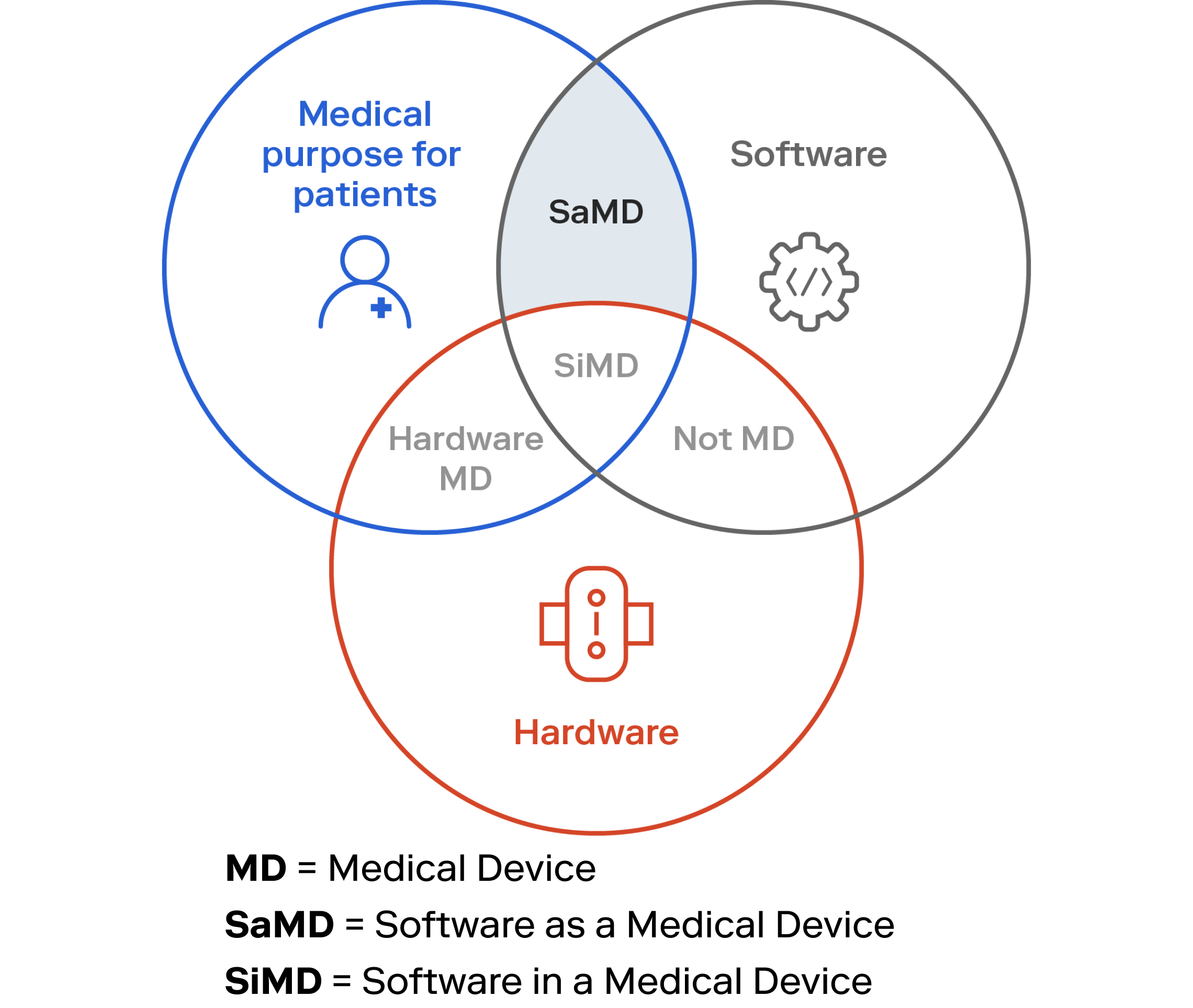

Research: AI in healthcare, responsible AI, digital twins, personality emulation.

news

| Date | News |
|---|---|
| Feb 06, 2025 | I was on the panel "The Ethical Challenges of AI: Humanistic and Religious Responses to the Demands of Artificial Intelligence" at the United Nations, along with John Tasioulas (University of Oxford), Father Paolo Benanti (AI advisor to Pope Francis), Nathalie Smuha (KU Leuven), and Benedetta Audia (UN Advisor). |
| Jan 17, 2025 | The New York Times did a follow-up study on AI and religion in which they mention my work on creating the Rabbit Bot. |
| Jan 14, 2025 | Giving a talk at Wesley Homes on "When Your Grandpa Is a Bot: AI, Death, and Digital Doppelgangers" on January 14, 2025, and then at Coordinators of Patient Services in Bellevue, WA, on January 15, 2025. |
| Jan 02, 2025 | The New York Times has an article on the use of AI in sermons in which they profile Rabbi Josh Fixler and the AI-generated sermons for Rabbit Bot, a system that I created. |
| Nov 15, 2024 | This week I have two papers out: (1) "Building personality adaptive conversational agents for mental health therapy" at the International Workshop on Parallel and AI-based Bioinformatics and Biomedicine (ParBio) at ACM-BCB, and (2) "E2T2: Emote Embedding for Twitch Toxicity Detection" at CSCW. |