A Theory of Interfaces

First history and now theory? What a way to start a practical book about user interfaces. But as social psychologist Kurt Lewin said, “There’s nothing as practical as a good theory” (Lewin, 1943).

Let’s start with why theories are practical. Theories, in essence, are explanations for what something is and how it works. These explanatory models of phenomena in the world help us not only comprehend the phenomena, but also predict what will happen in the future with some confidence. Perhaps more importantly, they give us a conceptual vocabulary to exchange ideas about the phenomena. A good theory about user interfaces would be practical because it would help explain what user interfaces are, and what governs whether they are usable, learnable, efficient, error-prone, etc.

HCI researchers have written a lot about theory, including theories from other disciplines, and new theories specific to HCI (Jacko, 2012; Rogers, 2012).

What are interfaces?

Let’s begin with what user interfaces are. A user interface is a special kind of software designed to map human activities in the physical world (clicks, presses, taps, speech, etc.) to functions defined in a computer program. More broadly, user interfaces are software and/or hardware that bridge the world of human action and the world of computer action. The first world is the natural world of matter, motion, sensing, action, reaction, and cognition, in which people (and other living things) build models of the things around them in order to make predictions about the effects of their actions. It is a world of stimulus, response, and adaptation. For example, when an infant is learning to walk, they’re constantly engaged in perceiving the world, taking a step, and experiencing things like gravity and pain, all of which refine a model of locomotion that prevents pain and helps achieve other objectives. Our human ability to model the world and then reshape the world around us using those models is what makes us so adaptive to environmental change. It’s the learning we do about the world that allows us to survive and thrive in it. Computers, and the interfaces we use to operate them, are among the things that humans adapt to.

The other world is the world of computing. This is a world ultimately defined by a small set of arithmetic operations such as adding, multiplying, and dividing, and an even smaller set of operations that control which operations happen next. These instructions, combined with data storage, input devices to acquire data, and output devices to share the results of computation, define a world in which there is only forward motion. The computer always does what the next instruction says to do, whether that’s reading input, writing output, computing something, or making a decision. Sitting atop these instructions are functions, which take input and produce output using some algorithms. A function is an idea from mathematics of using algorithms to map some input (e.g., numbers, text, or other data) to some output. Functions can be as simple as basic arithmetic (e.g., multiply, which takes two numbers and computes their product) or as complex as machine vision (e.g., object recognition, which takes an image and a machine-learned classifier trained on millions of images and produces a set of text descriptions of objects in the image). Essentially all computer behavior leverages this idea of a function, and the result is that all computer programs (and all software) are essentially collections of functions that humans can invoke to compute things and have effects on data. All of this functional behavior is fundamentally deterministic; it is data from the world (content, clocks, sensors, network traffic, etc.), and increasingly, data that models the world and the people in it, that gives it its unpredictable, sometimes magical or intelligent qualities.
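To make the idea of deterministic functions concrete, here is a minimal Python sketch. The function names (`multiply`, `greeting_for`) and the greeting thresholds are assumptions chosen purely for illustration. Both functions always return the same output for the same input; any apparent unpredictability comes from the data fed in, such as the current time read from the clock.

```python
from datetime import datetime


def multiply(a: float, b: float) -> float:
    """Basic arithmetic as a function: two numbers in, their product out."""
    return a * b


def greeting_for(hour: int) -> str:
    """Deterministic: the same hour always yields the same greeting.

    The hour boundaries are arbitrary, illustrative choices.
    """
    if 5 <= hour < 12:
        return "Good morning"
    if 12 <= hour < 18:
        return "Good afternoon"
    return "Good evening"


if __name__ == "__main__":
    print(multiply(6, 7))
    # The function itself never changes; only the data from the world
    # (here, the clock) makes the result differ from one run to the next.
    print(greeting_for(datetime.now().hour))
```

Calling `greeting_for(9)` gives the same answer every time; calling it with the clock's current hour is what makes the program appear to respond to the world.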

Now, both of the above are basic theories of people and computers. In light of this, what are user interfaces? User interfaces are mappings from the sensory, cognitive, and social human world to computational functions in computer programs. For example, a save button in a user interface is nothing more than an elaborate way of mapping a person’s physical mouse click or tap on a touch screen to a command to execute a function that will take some data in memory and permanently store it somewhere. Similarly, a browser window is an elaborate way of taking the carefully rendered pixel-by-pixel layout of a web page and displaying it on a computer screen so that sighted people can perceive it with their human perceptual systems. These mappings, from physical to digital and from digital to physical, are how we talk to computers.
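The save button example can be sketched as a literal mapping from human actions to functions. This is a minimal illustration and not how any particular toolkit works: the names `memory`, `storage`, `bindings`, and `handle_input` are all hypothetical, and "permanent storage" is simulated with a dictionary.

```python
from typing import Callable, Dict, Tuple

# Hypothetical stand-ins for data in memory and for permanent storage.
memory: Dict[str, str] = {"document": "Hello, world"}
storage: Dict[str, str] = {}


def save() -> str:
    """The function behind the save button: copy data in memory to storage."""
    storage["document"] = memory["document"]
    return "saved"


# The "interface": a mapping from (human action, on-screen target) to a function.
bindings: Dict[Tuple[str, str], Callable[[], str]] = {
    ("click", "save_button"): save,
    ("tap", "save_button"): save,
}


def handle_input(action: str, target: str) -> str:
    """Translate a physical action into a function call, if one is mapped."""
    handler = bindings.get((action, target))
    if handler is None:
        return "ignored"  # no function is mapped to this action
    return handler()


if __name__ == "__main__":
    print(handle_input("click", "save_button"))
    print(storage)
```

A click and a tap both reach the same `save` function, while an unmapped action does nothing, which is one way to see that the interface is only the mapping and the function is where the work happens.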

Learning interfaces

If we didn’t have user interfaces to execute functions in computer programs, it would still be possible to use computers. We’d just have to program them using programming languages (which, as we discuss later in Programming Interfaces, can be hard to learn). What user interfaces offer are more learnable representations of these functions, their inputs, their algorithms, and their outputs, so that a person can build mental models of these representations that allow them to sense, take action, and achieve their goals, as they do with anything in the natural world. However, there is nothing natural about user interfaces: buttons, scrolling, windows, pages, and other interface metaphors are all artificial, invented ideas, designed to help people use computers without having to program them. While this makes using computers easier, interfaces must still be learned.

Don Norman, in his book The Design of Everyday Things (Norman, 2013), described this learning in terms of gulfs of execution: the gaps between a person’s goal and the actions a user interface requires to achieve it (see also Grossman et al., 2009, on software learnability).

What is it that people need to learn to take action on an interface? Norman (and later Gaver, 1991; Hartson, 2003; Norman, 2013) argued that what people need to learn is an interface’s affordances.

How can a person know what affordances an interface has? That’s where the concept of a signifier becomes important. A signifier is any sensory or cognitive indicator of the presence of an affordance in an interface. Consider, for example, how you know that a computer mouse can be clicked. Its physical shape might evoke the industrial design of a button. It might have little tangible surfaces that entreat you to push your finger on them. A mouse could even have visual sensory signifiers, like a slowly changing colored surface that attempts to say, “I’m interactive, try touching me.” These are mostly sensory indicators of an affordance. Personal digital assistants like Alexa, in contrast, lack most of these signifiers. What about an Amazon Echo says, “You can say Alexa and speak a command”? In this case, Amazon relies on tutorials, stickers, and even television commercials to signify this affordance.

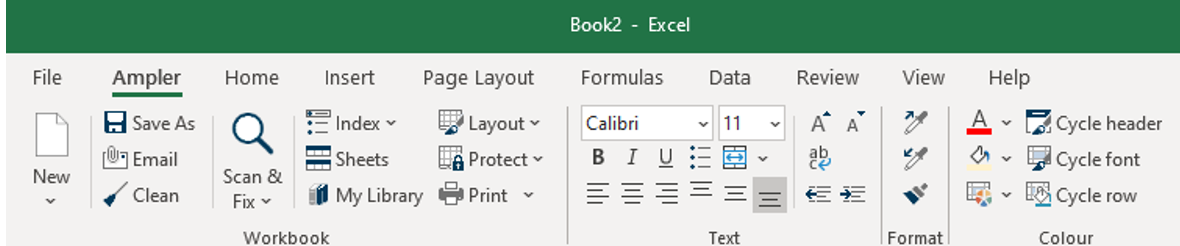

While both of these examples involve hardware, the same concepts of affordance and signifier apply to software too. Buttons in a graphical user interface have an affordance: if you click within their rectangular boundary, the computer will execute a command. If you have sight, you know a button is clickable because long ago you learned that buttons have particular visual properties such as a rectangular shape and a label. If you are blind, you might know a button is clickable because your screen reader announces that something is a “button” and reads its label. All of this requires knowing that interfaces have affordances such as buttons, and that those affordances are signified in particular ways, such as a visual motif or a spoken role. Therefore, a central challenge of designing a user interface is deciding what affordances an interface will have and how to signify that they exist.

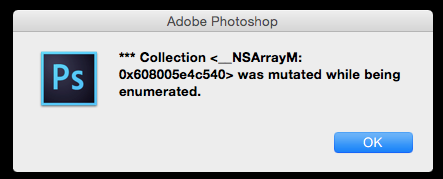
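The distinction between a button's affordance and its signifiers can be sketched in a few lines of Python. This is an illustrative model only, not a real GUI or accessibility API: the `Button` class, its method names, and the text formats it produces are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Button:
    """A hypothetical button: one affordance, signified in two ways."""
    label: str
    command: Callable[[], str]  # the function the button invokes
    role: str = "button"        # what a screen reader would announce

    def visual_signifier(self) -> str:
        """Signifier for sighted users: a bounded shape with a label."""
        return f"[ {self.label} ]"

    def spoken_signifier(self) -> str:
        """Signifier for screen reader users: the label plus the role."""
        return f"{self.label}, {self.role}"

    def click(self) -> str:
        """The affordance itself: activating the button runs its command."""
        return self.command()


def add_to_cart() -> str:
    """Stand-in for the function behind the button."""
    return "item added"


button = Button(label="Add to cart", command=add_to_cart)

if __name__ == "__main__":
    print(button.visual_signifier())
    print(button.spoken_signifier())
    print(button.click())
```

The affordance (`click`) is the same regardless of how it is signified; only the signifiers differ by the perceptual channel a person uses.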

But execution is only half of the story. Norman also discussed gulfs of evaluation, which are the gaps between the output of a user interface and a user’s goal. A gulf of evaluation is everything a person must learn in order to understand the effect of their action on an interface, including understanding error messages or a lack of response from an interface. Once a person has performed some action on a user interface via some functional affordance, the computer will take that input and do something with it, producing some feedback. It’s up to the user to then map that feedback onto their goal. If that mapping is simple and direct, then the gulf is small. For example, consider an interface for printing a document. If after pressing a print button, the feedback was “Your document was sent to the printer for printing,” that would clearly convey progress toward the user’s goal, minimizing the gulf between the output and the goal. If after pressing the print button, the feedback was “Job 114 spooled,” the gulf is larger, forcing the user to know what a “job” is, what “spooling” is, and what any of that has to do with printing their document.
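The printing example can be sketched as two ways of describing the same result. The `PrintResult` type and both function names are hypothetical; the point is only that the underlying computation is identical while the feedback differs in how much the user must already know.

```python
from dataclasses import dataclass


@dataclass
class PrintResult:
    """Hypothetical outcome of pressing a print button."""
    job_id: int


def system_centric_feedback(result: PrintResult) -> str:
    """Describes the computer's internal state: a larger gulf of evaluation."""
    return f"Job {result.job_id} spooled"


def goal_centric_feedback(result: PrintResult) -> str:
    """Describes progress toward the user's goal: a smaller gulf."""
    return "Your document was sent to the printer for printing"


if __name__ == "__main__":
    result = PrintResult(job_id=114)
    print(system_centric_feedback(result))
    print(goal_centric_feedback(result))
```

Both messages report the same event. The second ignores the job number entirely because that detail, while accurate, is not something the user's goal is expressed in.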

In designing user interfaces, there are many ways to bridge gulfs of execution and evaluation. One is to just teach people all of these affordances and help them understand all of the user interface’s feedback. A person might take a class to learn the range of tasks that can be accomplished with a user interface, steps to accomplish those tasks, concepts required to understand those steps, and deeper models of the interface that can help them potentially devise their own procedures for accomplishing goals. Alternatively, a person can read tutorials, tooltips, help, and other content, each taking the place of a human teacher, approximating the same kinds of instruction a person might give. There are entire disciplines of technical writing and information experience that focus on providing seamless, informative, and encouraging introductions to how to use a user interface (Lior, 2013).

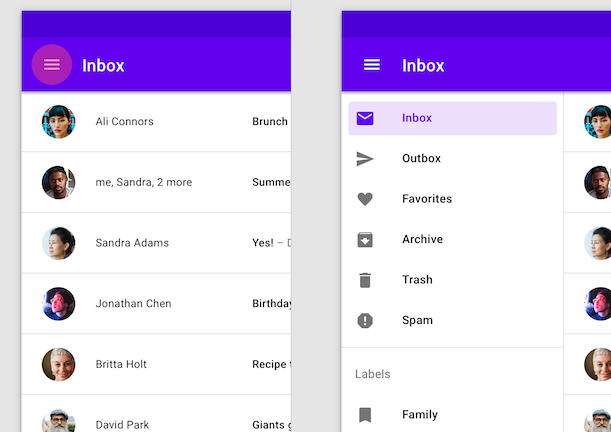

To many user interface designers, the need to explicitly teach a user interface is a sign of design failure. There is a belief that designs should be “self-explanatory” or “intuitive.” What these phrases actually mean is that the interface is doing the teaching rather than a person or some documentation. To bridge gulfs of execution, a user interface designer might conceive of physical, cognitive, and sensory affordances that are quickly learnable. One way to make them quickly learnable is to leverage conventions, which are widely used and widely learned user interface design patterns (e.g., a web form, a login screen, a hamburger menu on a mobile website) that people have already learned by learning other user interfaces. Want to make it easy to learn how to add an item to a cart on an e-commerce website? Use a button labeled “Add to cart,” a design convention that most people will have already learned from using other e-commerce sites. Alternatively, interfaces might even try to anticipate what people want to do, personalizing what’s available, and in doing so, minimizing how much a person has to learn. From a design perspective, there’s nothing inherently wrong with learning; it’s just a cost that a designer may or may not want to impose on a new user. (Perhaps learning a new interface affords new power not possible with old conventions, and so the cost is justified.)

To bridge gulfs of evaluation, a user interface needs to provide feedback that explains what effect the person’s action had on the computer. Feedback is output from a computer program intended to explain what action a computer took in response to a user’s input (e.g., confirmation messages, error messages, or visible updates). Some feedback is explicit instruction that essentially teaches the person what functional affordances exist, what their effects are, what their limitations are, how to invoke them in other ways, what new functional affordances are now available and where to find them, what to do if the effect of the action wasn’t desired, and so on. Clicking the “Add to cart” button, for example, might result in some instructive feedback like this:

I added this item to your cart. You can look at your cart over there. If you didn’t mean to add that to your cart, you can take it out like this. If you’re ready to buy everything in your cart, you can go here. Don’t know what a cart is? Read this documentation.

Some feedback is implicit, suggesting the effect of an action, but not explaining it explicitly. For example, after pressing “Add to cart,” there might be an abstract icon of an item being added to a cart, with some kind of animation to capture the person’s attention. Whether implicit or explicit, all of this feedback is still contextual instruction on the specific effect of the user’s input (and optionally more general instruction about other affordances in the user interface that will help a user accomplish their goal).

The result of all of this learning is a mental model (Carroll & Anderson, 1987): a person’s beliefs about an interface’s affordances and how to operate them.

Note that in this entire discussion, we’ve said little about tasks or goals. The broader HCI literature theorizes about those extensively (Jacko, 2012; Rogers, 2012).

Using theory

You have some new concepts about user interfaces, and an underlying theoretical sense about what user interfaces are and why designing them is hard. Let’s recap:

- User interfaces bridge human goals and cognition to functions defined in computer programs.

- To use interfaces successfully, people must learn an interface’s affordances and how they can be used to achieve their goals.

- This learning must come from somewhere, either instruction by people, explanations in documentation, or teaching by the user interface itself.

- Most user interfaces have large gulfs of execution and evaluation, requiring substantial learning.

The grand challenge of user interface design is therefore trying to conceive of interaction designs that have small gulfs of execution and evaluation, while also offering expressiveness, efficiency, power, and other attributes that augment human ability.

These ideas are broadly relevant to all kinds of interfaces, including all those already invented, and new ones invented every day in research. And so these concepts are central to design. Starting from this theoretical view of user interfaces allows you to ask the right questions. For example, rather than trying to vaguely identify the most “intuitive” experience, you can systematically ask: “Exactly what are our software’s functional affordances and what signifiers will we design to teach them?” Or, rather than relying on stereotypes, such as “older adults will struggle to learn to use computers,” you can be more precise, saying, “This particular population of adults has not learned the design conventions of iOS, and so they will need to learn those before successfully utilizing this application’s interface.”

Approaching user interface design and evaluation from this perspective will help you identify major gaps in your user interface design analytically and systematically, rather than relying only on observations of someone struggling to use an interface, or worse yet, stereotypes or ill-defined concepts such as “intuitive” or “user-friendly.” Of course, in practice, not everyone will know these theories, and other constraints will often prevent you from doing what you know might be right. That tension between theory and practice is inevitable, and something we’ll return to throughout this book.

References

- Carroll, J. M., & Anderson, N. S. (1987). Mental models in human-computer interaction: Research issues about what the user of software knows. National Academies.

- Gaver, W. W. (1991). Technology affordances. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI '91). New Orleans, Louisiana (April 27-May 2, 1991). New York: ACM Press, pp. 79-84.

- Grossman, T., Fitzmaurice, G., & Attar, R. (2009). A survey of software learnability: metrics, methodologies and guidelines. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- Hartson, R. (2003). Cognitive, physical, sensory, and functional affordances in interaction design. Behaviour & Information Technology.

- Jacko, J. A. (2012). Human computer interaction handbook: Fundamentals, evolving technologies, emerging applications. CRC Press.

- Lewin, K. (1943). Theory and practice in the real world. The Oxford Handbook of Organization Theory.

- Lior, L. N. (2013). Writing for Interaction: Crafting the Information Experience for Web and Software Apps. Morgan Kaufmann.

- Norman, D. (2013). The design of everyday things: Revised and expanded edition. Basic Books.

- Rogers, Y. (2012). HCI theory: classical, modern, contemporary. Synthesis Lectures on Human-Centered Informatics, 5(2), 1-129.