Amy J. Ko, Ph.D.

Are you a K-12 teacher interested in research next summer? Fill out our interest form.

My research imagines and enables equitable, joyous, liberatory learning about computing and information, in schools and beyond.

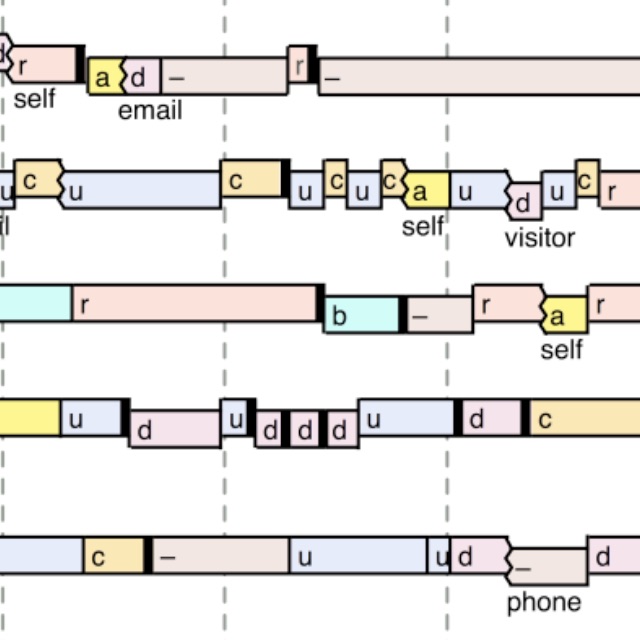

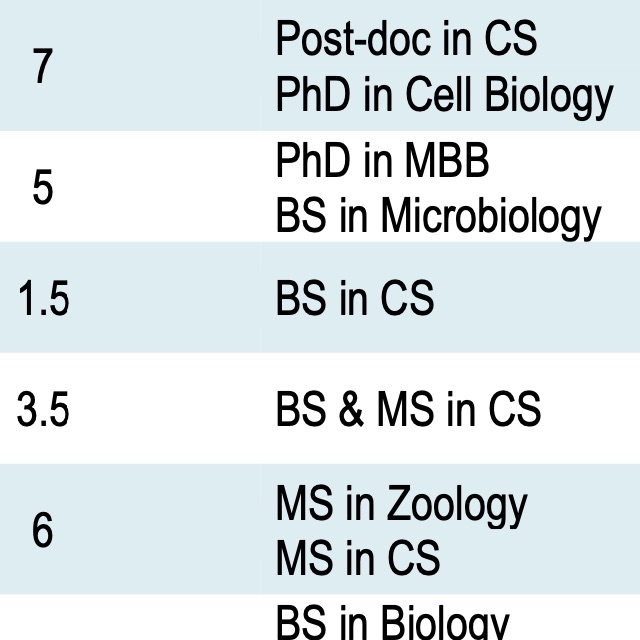

I work with outstanding postdocs, doctoral students, undergraduates, teachers and communities on this vision. My current projects within this goal are largely shaped by the faculty, students, and teachers in the Center for Learning, Computing, and Imagination, our partner teachers, school leaders, and families, and to a lesser extent, my active grants.

We publish primarily in Computing Education and Human-Computer Interaction, and I work to broaden scholarly discourse as Editor-in-Chief of ACM TOCE and by facilitating Reciprocal Reviews. More importantly, we share our discoveries broadly by blogging, presenting, teaching, writing, and connecting with community, including the CS for All Washington advocacy community and the PNW CS Teach consortium of teacher educators.

Want to do research with me? Read about my lab, and join us in creating a more equitable future of computing that includes everyone.

Discoveries

My lab and I have discovered many things since I started doing research in 1999. Here are some of the highlights from our work. How I describe these is always evolving as we learn more.

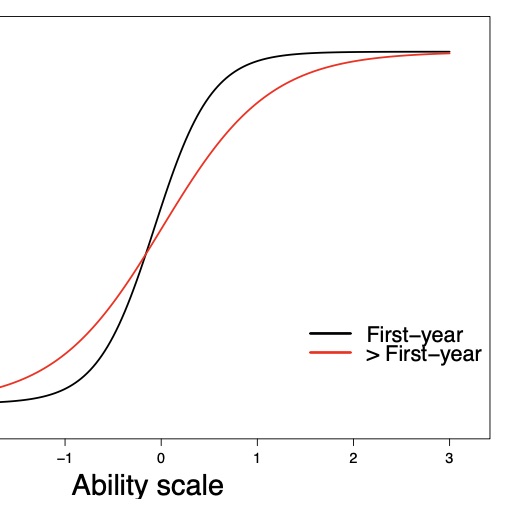

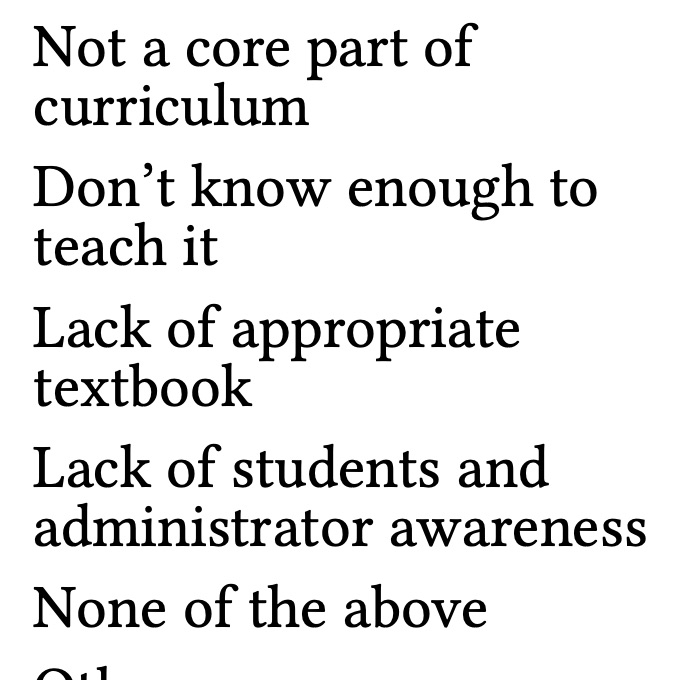

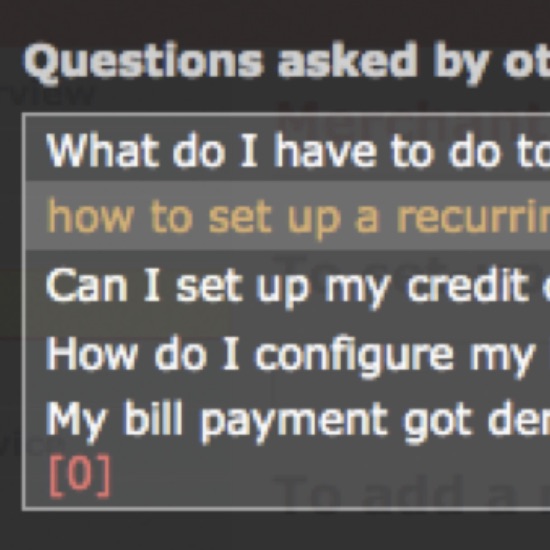

Techniques from psychometrics can help, but they are far from usable by everyday CS teachers.

Most learners don't want to be that deliberate about their process, favoring less effective trial-and-error strategies. But framing it as authentic practice can help.

Tools can help, but using data and domains that people already understand helps even more.

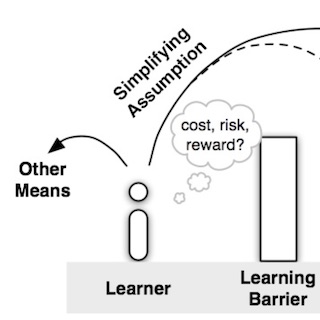

Programming is hard for many reasons, but my work showed that it is also hard because tools, APIs, and IDEs make information about program behavior particularly difficult to find.

This is because writing skills are dependent on reading skills. Unfortunately, learning to read code correctly can be boring.

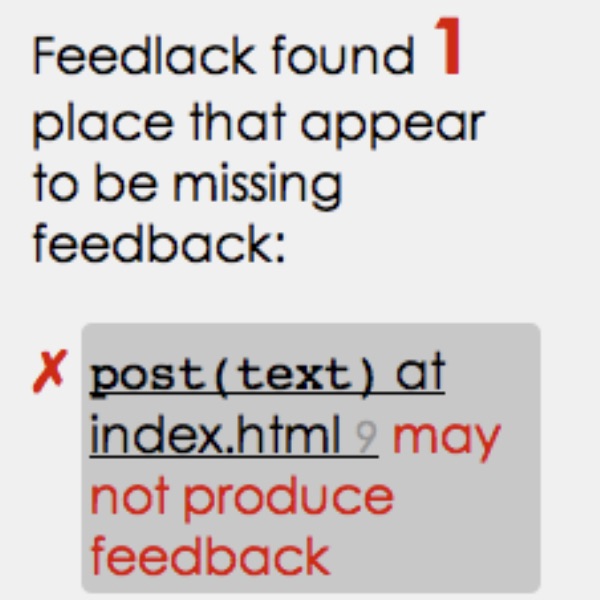

Compiler feedback is usually impersonal and mean; we found that making it nicer has a powerful impact on learners, compelling them to pay attention to valuable direct instruction.

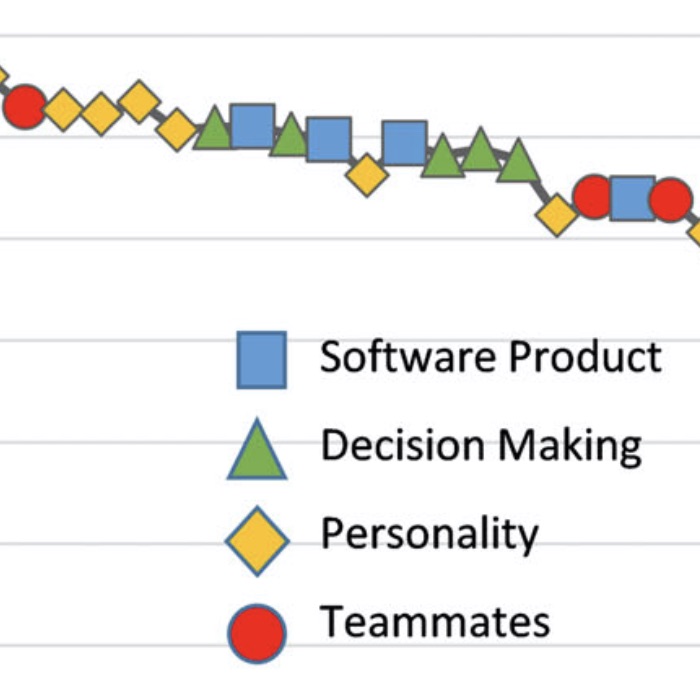

Through a series of studies, I uncovered the many ways that developers depend on information from people and systems to make engineering decisions, and how some of the most crucial information is hard or impossible to find.

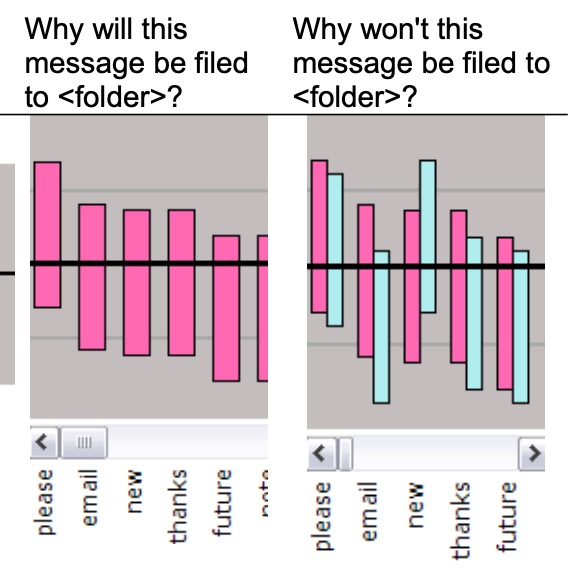

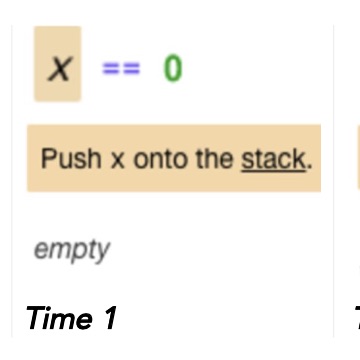

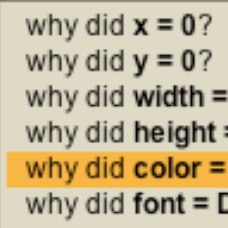

I invented tools and algorithms for deriving 'why' and 'why not' questions from programs and automatically answering those questions, helping people efficiently and interactively debug the root causes of program failures.

Many defects in dynamically typed programs can be found by operationalizing simple observations about how people write code: programmers often forget to close the loop in ways that a static type checker would easily point out.


The seemingly technical context of bug reports is where large communities of users and small teams of developers engage in power struggles about what software should and shouldn't do.

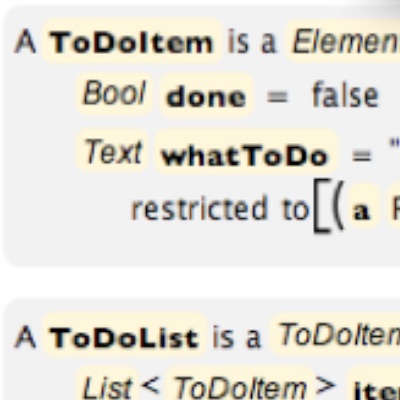

The structured editors of the 1980s were hard to build and use; I invented ways of making both easier by viewing programs as user interfaces, not documents.

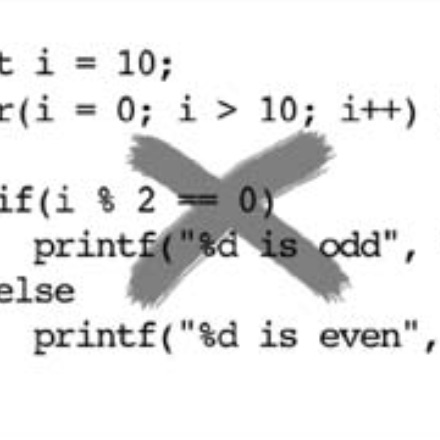

Much of my work during my dissertation examined where software failures come from; cognitive slips interact with the large state space that people create when programming to generate defects that are hard to localize.

Last updated 4/5/2025. To the extent possible under law, Amy J. Ko has waived all copyright and related or neighboring rights to the design and implementation of Amy's faculty site. This work is published from the United States. See this site's GitHub repository to view source and provide feedback.
